A method for extracting low-level visual information from complex scenes

This technology concerns methods for extracting visual information and is applied in the field of scene visual cognition. It addresses the loss of local detail during extraction, the resulting inability to obtain the underlying (low-level) visual feature values, and the reduced reliability of cognitive analysis that follows. Its aim is to ensure the accuracy and validity of the extracted information.


Embodiment Construction

[0028] The present invention is described further below with reference to the accompanying drawings and examples. The following examples are intended to aid understanding of the present invention and do not limit it.

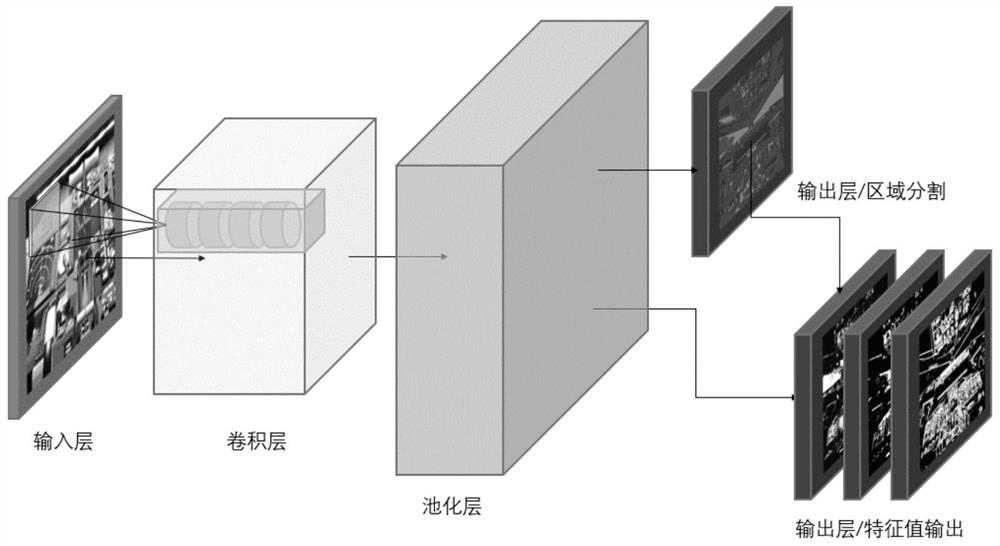

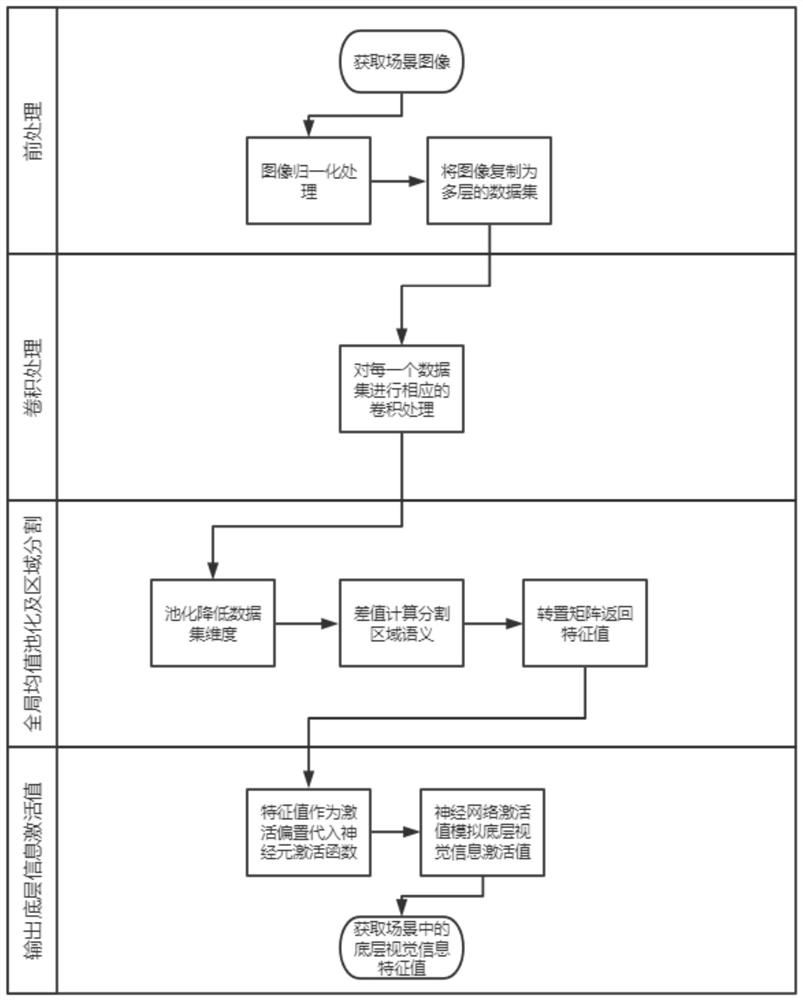

[0029] This embodiment takes an aircraft cockpit scene as an example, as shown in Figure 1. Because complex scenes such as an aircraft cockpit have high global complexity, local details are lost under the influence of information noise when feature values are extracted from the underlying visual information. The present invention introduces an improved convolutional neural network structure, as shown in Figure 2, in which four kinds of convolution filters form a multi-depth analysis set that performs semantic segmentation on scene images. Introduce the feature convolution filter to filter and extract the regional semantics of complex scenes, and then use the...
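Paragraph [0029] does not specify which four filter kinds are used or how their outputs are combined. The Python sketch below only illustrates the general idea of a bank of four convolution filters whose responses are stacked into a per-pixel feature set and then mapped to class labels. It is an assumption-laden illustration, not the patented network: the filter choices (a 3x3 mean, two Sobel kernels and a Laplacian), the helper names `conv2d_same`, `multi_filter_features` and `segment`, and the untrained linear weights are all hypothetical. In the described invention the filters belong to an improved convolutional neural network whose details are not given here.

```python
import numpy as np

# Hypothetical stand-ins for the "four kinds of convolution filters";
# the source does not name them, and a real CNN would learn its kernels.
FILTER_BANK = {
    "smooth": np.full((3, 3), 1.0 / 9.0),  # local mean (regional intensity)
    "sobel_x": np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float),
    "sobel_y": np.array([[-1, -2, -1], [0, 0, 0], [1, 2, 1]], dtype=float),
    "laplacian": np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float),
}


def conv2d_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D cross-correlation (as in CNN layers) with edge padding.

    Assumes an odd-sized kernel so the output has the same shape as the input.
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image.astype(float), ((ph, ph), (pw, pw)), mode="edge")
    out = np.empty(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def multi_filter_features(image: np.ndarray) -> np.ndarray:
    """Apply every filter in the bank and stack the responses: H x W x 4."""
    maps = [conv2d_same(image, k) for k in FILTER_BANK.values()]
    return np.stack(maps, axis=-1)


def segment(features: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Per-pixel linear scoring (a 1x1 projection) followed by argmax.

    features: H x W x F, weights: F x C, bias: C  ->  label map H x W.
    """
    scores = features @ weights + bias  # H x W x C class scores
    return np.argmax(scores, axis=-1)


# Hypothetical, untrained weights: class 1 wins where the local mean exceeds 0.25.
WEIGHTS = np.array([[-1.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]])
BIAS = np.array([0.0, -0.5])

if __name__ == "__main__":
    # Toy 4x6 image: dark left half, bright right half.
    img = np.zeros((4, 6))
    img[:, 3:] = 1.0
    feats = multi_filter_features(img)
    print(feats.shape)
    print(segment(feats, WEIGHTS, BIAS))
```

With hand-set weights like these the labels merely threshold local brightness; a real segmentation network would learn both the filter kernels and the projection to classes from labelled scene images.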
