Image quality determination method and device, electronic equipment and medium

An image quality and image technology, applied in the field of image processing, can solve problems affecting the conversion rate of advertisements, etc.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

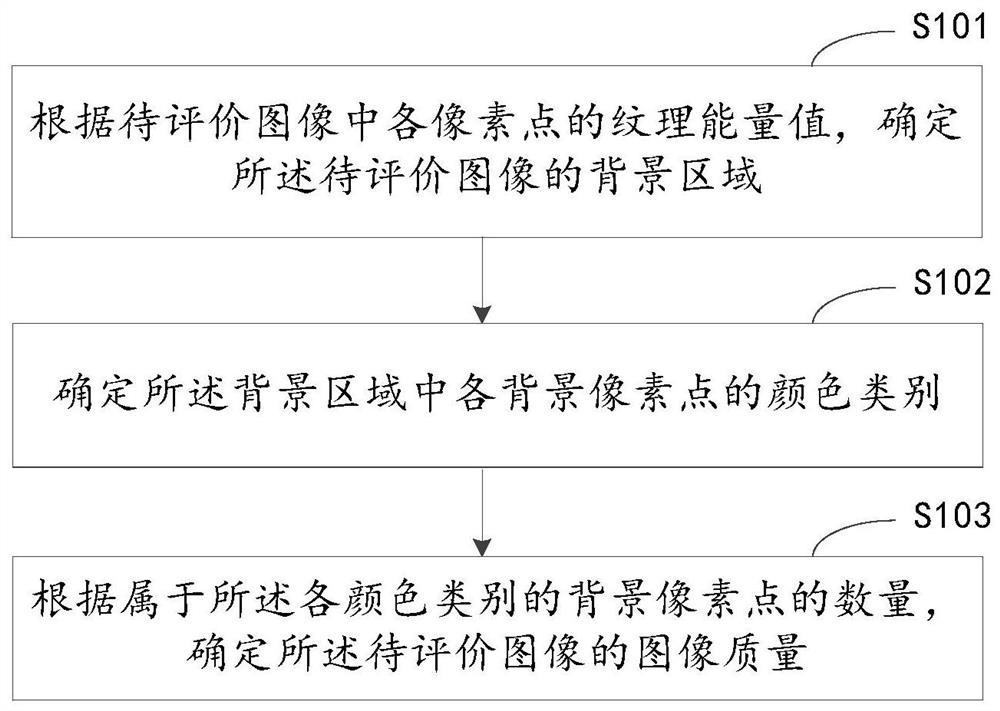

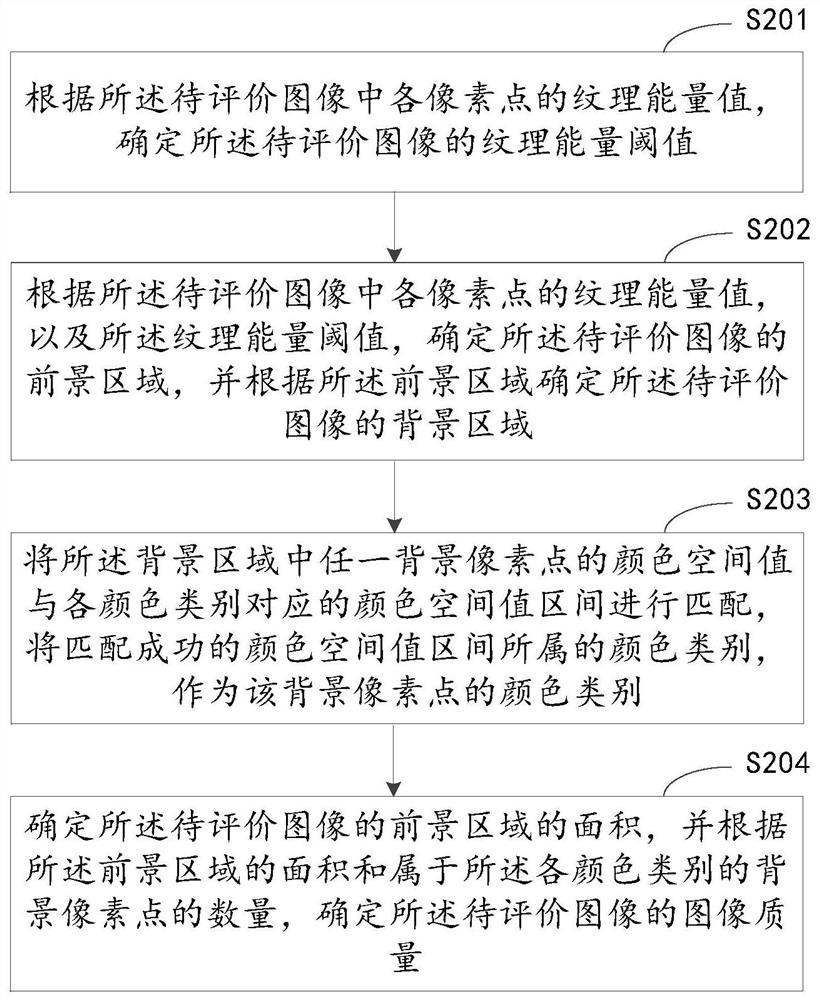

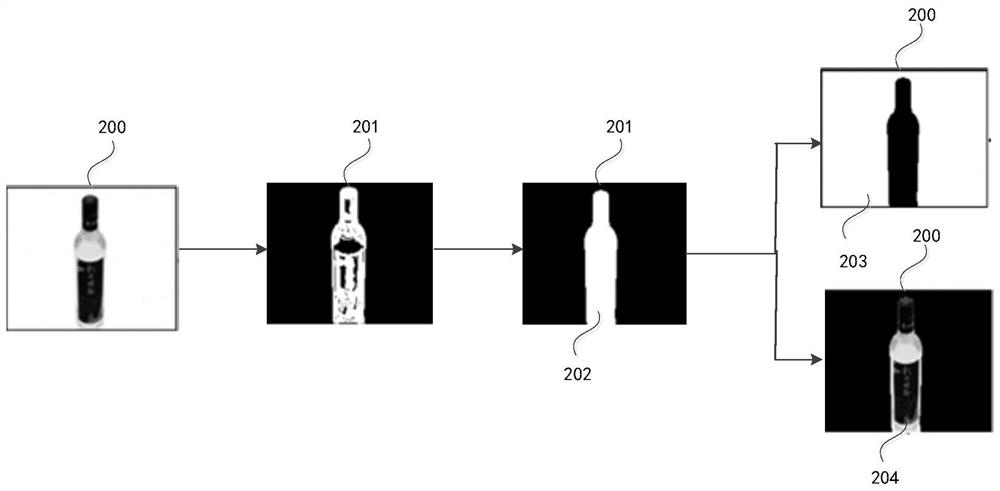

[0026] Exemplary embodiments of the present disclosure are described below in conjunction with the accompanying drawings, which include various details of the embodiments of the present disclosure to facilitate understanding, and they should be regarded as exemplary only. Accordingly, those of ordinary skill in the art will recognize that various changes and modifications of the embodiments described herein can be made without departing from the scope and spirit of the disclosure. Also, descriptions of well-known functions and constructions are omitted in the following description for clarity and conciseness.

[0027] During the research and development process, the applicant found that the prior art detects the problem of monotonous background color of an image, first obtains the background area of the image to be detected through target detection or segmentation technology, and then determines whether the color is monotonous based on the acquired background area.

[0028] ...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com