Multi-edge base station joint cache replacement method based on agent deep reinforcement learning

A technology of cache replacement and reinforcement learning, applied in the field of communication

Embodiment

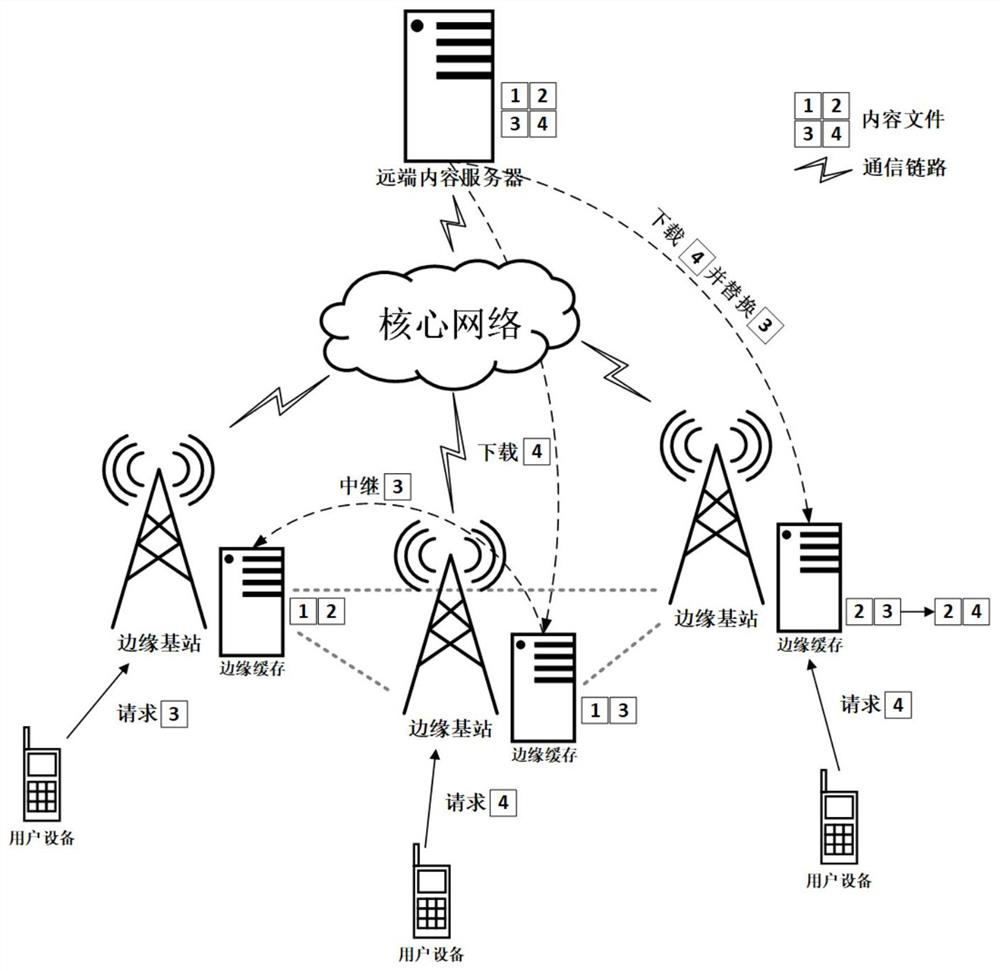

[0047] In this example, as shown in Figure 1, when a user equipment sends a request to an edge base station in the edge caching system, the request is handled as follows. If the requested content is cached in the base station that accepts the request, that base station delivers the content directly to the user equipment. If the base station that accepts the request has not cached the requested content, it forwards the request to the other base stations in its neighborhood; if one of those base stations has cached the content, it transmits the content to the base station that accepts the request, which then delivers it to the user equipment. If neither the base station that accepts the request nor any base station in its neighborhood has cached the content, the base station that accepts the request sends a request to the remote content server (which contains all the content) and downloads the requested content from t...
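The three-tier lookup described in paragraph [0047] can be illustrated with a minimal Python sketch. It is not taken from the patent: the `BaseStation` class, the `serve_request` function, and the tier labels `"local"`, `"neighbor"`, and `"remote"` are hypothetical names chosen for illustration. The sketch models only where a request is served from, not the cache replacement policy or any transmission cost.

```python
from dataclasses import dataclass, field


@dataclass
class BaseStation:
    """Hypothetical edge base station holding a set of cached content IDs."""
    name: str
    cache: set = field(default_factory=set)
    neighbors: list = field(default_factory=list)


def serve_request(station, content_id):
    """Return (tier, serving_station_name) for a request accepted by `station`.

    The tier labels are illustrative assumptions, not terms from the patent.
    """
    # Case 1: the accepting base station has the content cached.
    if content_id in station.cache:
        return ("local", station.name)

    # Case 2: some base station in the neighborhood has it cached; it passes
    # the content to the accepting station, which delivers it to the user.
    for neighbor in station.neighbors:
        if content_id in neighbor.cache:
            return ("neighbor", neighbor.name)

    # Case 3: no nearby cache holds the content, so the accepting station
    # fetches it from the remote content server, which holds all content.
    return ("remote", None)


# Example topology: station A with one neighbor B.
bs_a = BaseStation("A", cache={"video-1"})
bs_b = BaseStation("B", cache={"video-2"})
bs_a.neighbors = [bs_b]
```

With this topology, a request at station A for `"video-1"` is a local hit, `"video-2"` is served through neighbor B, and any other content falls through to the remote server.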
