Method for training object classification model and identification method using object classification model

This training method and object classification technique, applied to the training of object classification models, addresses two problems: inter-frame information is not put to good use, and the influence of object features is not taken into account.

AI Technical Summary

Problems solved by technology

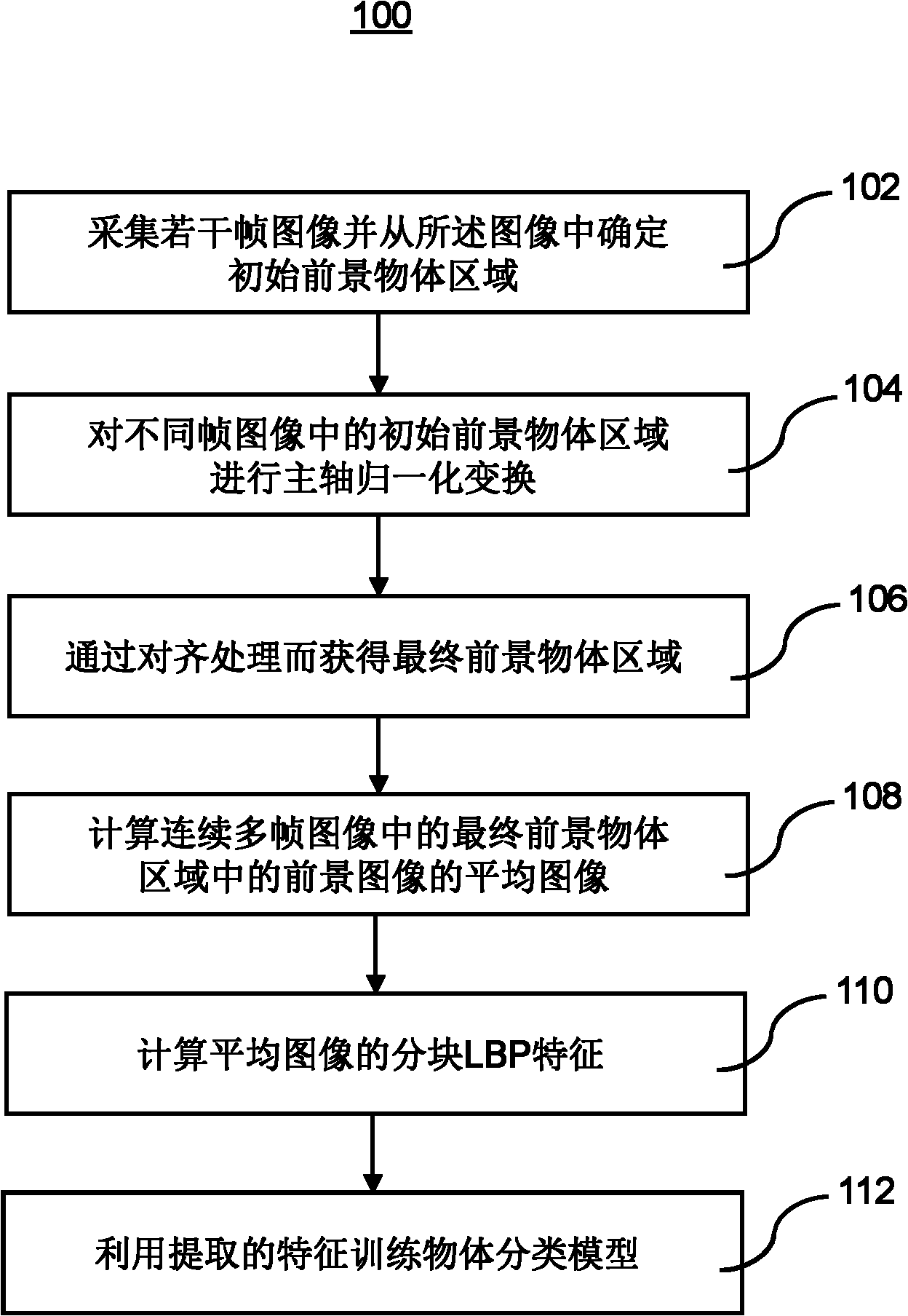

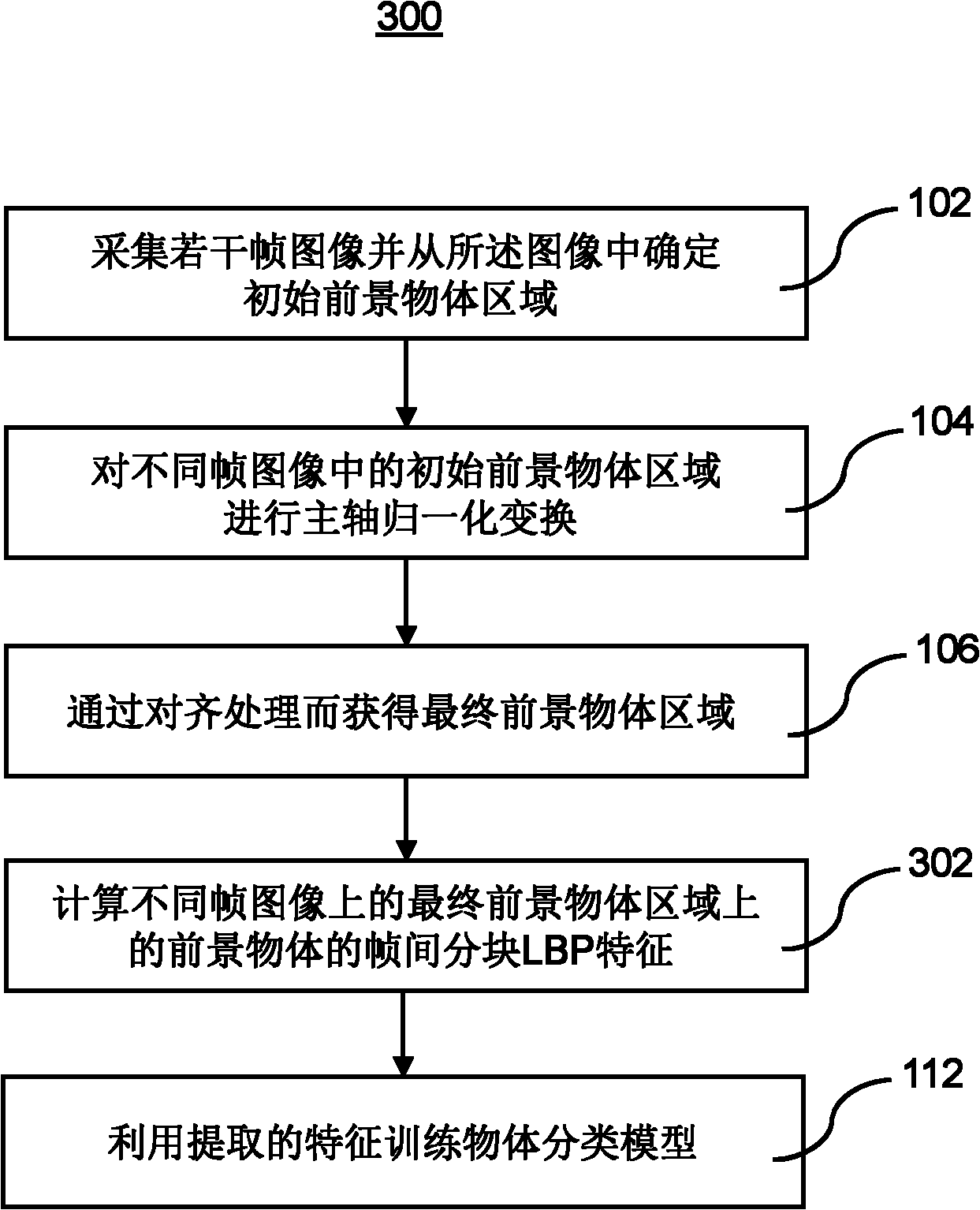

Method used

Image

Examples

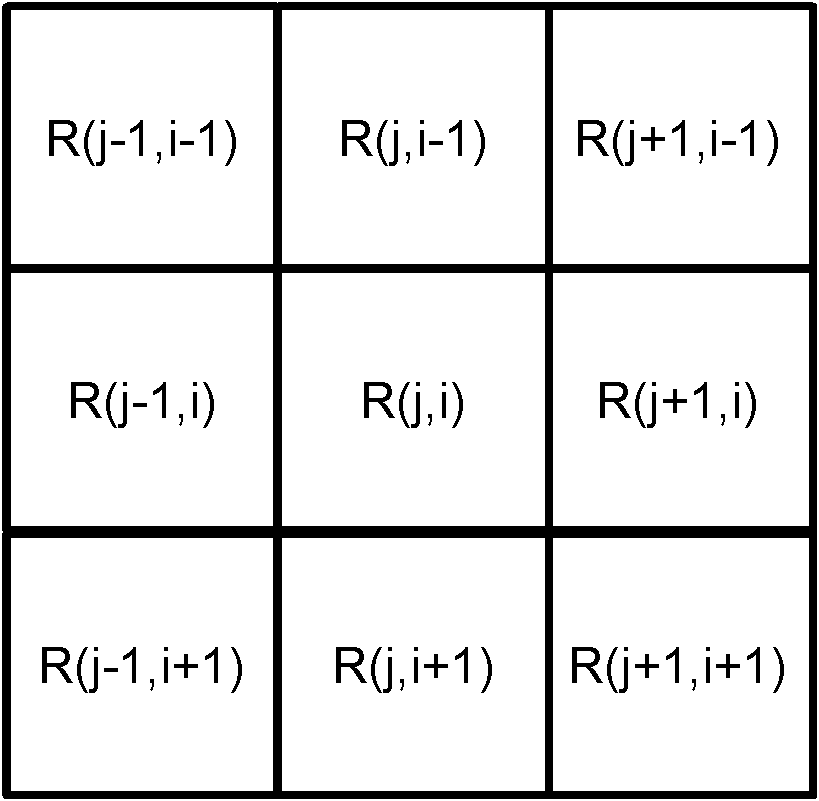

Embodiment Construction

[0043] The detailed description of the present invention is presented mainly in terms of programs, steps, logic blocks, processes, or other symbolic descriptions that directly or indirectly simulate the operation of the technical solution of the present invention. In the following description, numerous specific details are set forth in order to provide a thorough understanding of the present invention. However, the invention may be practiced without these specific details. Those skilled in the art use such descriptions and representations to effectively convey the substance of their work to others skilled in the art. In other instances, well-known methods, procedures, components, and circuits are not described in detail, because they are readily understood and describing them would obscure the present invention.

[0044] Reference herein to "one embodiment" or "an embodiment" refers to a particular feature, structure or characteristic that can be included in at least one implementa...
