A Multi-camera Editing Method Based on Cluster Rendering

A multi-camera editing technology, applied in the field of multi-camera editing based on cluster rendering, that solves problems such as video freezing, which reduces editing efficiency, and achieves the effect of avoiding stuttering.

Examples

Embodiment 1

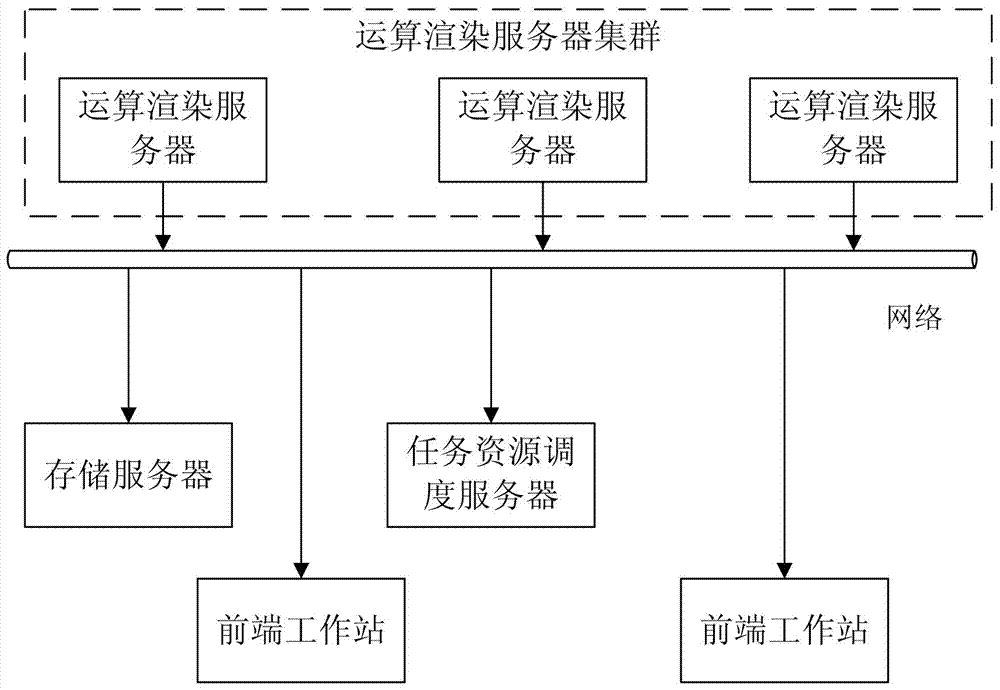

[0030] This embodiment is a multi-camera editing method based on cluster rendering. The schematic diagram of the system used in the method is shown in Figure 1. Figure 1 shows only three servers in the computing and rendering server cluster; in practice there can be four, five, six, or even more, and there can be two, three, four, or more front-end workstations.
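The text implies that each camera channel is handled by one server in the cluster (Embodiment 3 refers to the server currently responsible for decoding and processing a given channel) but does not say how channels are distributed. The following is a minimal Python sketch assuming a simple round-robin policy; `RenderServer`, `assign_channels`, and the server names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RenderServer:
    """One node of the computing and rendering cluster (hypothetical model)."""
    name: str
    channels: list[int] = field(default_factory=list)


def assign_channels(num_channels: int, servers: list[RenderServer]) -> dict[int, str]:
    """Give each camera channel to exactly one server, round-robin.

    Round-robin is an assumed policy; the source does not specify one.
    Returns a mapping from channel index to server name.
    """
    if not servers:
        raise ValueError("at least one render server is required")
    assignment: dict[int, str] = {}
    for ch in range(num_channels):
        server = servers[ch % len(servers)]
        server.channels.append(ch)
        assignment[ch] = server.name
    return assignment


if __name__ == "__main__":
    cluster = [RenderServer(f"render-{i}") for i in range(3)]
    print(assign_channels(8, cluster))
```

With three servers and eight camera channels, `render-0` handles channels 0, 3 and 6. Adding servers to the cluster, as the paragraph allows, only changes the modulus.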

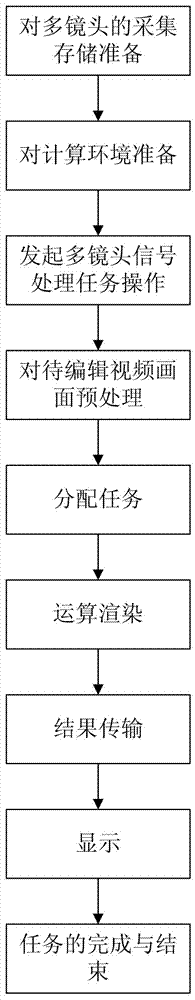

[0031] The steps of the method in this embodiment are as follows; the flow diagram is shown in Figure 2:

[0032] Multi-camera capture and storage step: capture and save the video signals of multiple cameras simultaneously. Every frame of each captured video is stamped with a time code according to a unified time standard. The resulting original video files are stored together on the storage server.
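Stamping frames against one shared clock is what later lets frames from different cameras be matched. A minimal sketch, assuming integer frame rates and non-drop-frame SMPTE-style `HH:MM:SS:FF` time codes (the source does not name a time-code format); the function names and the `start_frame` input are hypothetical.

```python
def frame_to_timecode(frame_index: int, fps: int) -> str:
    """Convert a frame count on the shared clock to non-drop-frame HH:MM:SS:FF."""
    if fps <= 0 or frame_index < 0:
        raise ValueError("fps must be positive and frame_index non-negative")
    total_seconds, frames = divmod(frame_index, fps)
    minutes_total, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(minutes_total, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def stamp_frames(start_frame: int, num_frames: int, fps: int) -> list[str]:
    """Time-code every frame of one camera.

    start_frame is the frame count on the unified clock at which this
    camera's first frame was captured (an assumed input).
    """
    return [frame_to_timecode(start_frame + i, fps) for i in range(num_frames)]


def common_timecodes(*streams: list[str]) -> list[str]:
    """Time codes present in every camera stream, in the first stream's order."""
    if not streams:
        return []
    shared = set(streams[0]).intersection(*streams[1:])
    return [tc for tc in streams[0] if tc in shared]
```

A camera that started one frame later than another shares time codes with it from `00:00:00:01` onward. Drop-frame time code (used with 29.97 fps material) needs an extra correction not shown here.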

[0033] Collection and storage of multi-camera signals: collect and save the video signals of multiple cameras at the same time, and mark each frame of video according to a unified time standard ...

Embodiment 2

[0064] This embodiment improves on Embodiment 1 by refining its result transmission step. In the result transmission step of this embodiment, the computing and rendering server transmits each frame of video to the front-end workstation as soon as that frame is finished. For each time code, the front-end workstation parses the computation results and the rendered picture information of the multiple channels belonging to that time code. Each channel of video can thus be decoded and corrected promptly and sent for display once processing is complete; picture information that has been decoded, corrected, and processed ahead of time is cached in the front-end workstation's memory and sent to the display device at the appropriate time.
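The buffering behaviour described in this embodiment (frames arrive one at a time from different servers, are grouped by time code, and are held in memory until display) can be sketched as follows. This is an illustration under stated assumptions, not the patented implementation: `FrameSyncBuffer`, its methods, integer frame counts as time codes, and the rule of evicting older time codes are all hypothetical.

```python
from typing import Dict, Optional, Set


class FrameSyncBuffer:
    """Front-end cache that groups per-channel frames by time code (hypothetical).

    Time codes are modeled as integer frame counts on the shared clock.
    Frames are assumed to be already decoded and corrected when they arrive.
    """

    def __init__(self, channels: Set[int]):
        self.channels = set(channels)
        # time code -> {channel index -> processed frame bytes}
        self.pending: Dict[int, Dict[int, bytes]] = {}

    def on_frame(self, timecode: int, channel: int, frame: bytes) -> None:
        """Store one processed frame as soon as a render server delivers it."""
        self.pending.setdefault(timecode, {})[channel] = frame

    def pop_ready(self, display_timecode: int) -> Optional[Dict[int, bytes]]:
        """Return all channels for display_timecode once every channel has arrived.

        Returns None if the set is still incomplete. On success, the returned
        entry and any older cached time codes are evicted from the cache.
        """
        group = self.pending.get(display_timecode)
        if group is None or set(group) != self.channels:
            return None
        for tc in [t for t in self.pending if t <= display_timecode]:
            del self.pending[tc]
        return group
```

A display loop would call `pop_ready` once per output frame period. Frames for later time codes that arrive early simply wait in `pending`, which matches the idea of processing ahead of time and displaying at the appropriate moment.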

Embodiment 3

[0066] This embodiment improves on Embodiment 1 by refining its result transmission step. In the result transmission step of this embodiment, the front-end workstation judges whether the current network speed and bandwidth meet the requirements for transmitting each channel of uncompressed video. If they do, it requests uncompressed video data from the computing and rendering server currently responsible for decoding and processing that channel. If they do not, it requests compressed video data from that server and then decodes the compressed video data it receives for that channel.
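The bandwidth check reduces to comparing the raw bit rate of the requested channels with the measured link capacity. A minimal sketch, assuming the check is made for all requested channels together and that a safety margin (`headroom`) is reserved; the source states neither, and `ChannelFormat`, `choose_transfer_mode` and the 0.8 default are hypothetical. Measuring the available bandwidth itself is outside the sketch.

```python
from dataclasses import dataclass


@dataclass
class ChannelFormat:
    width: int
    height: int
    bits_per_pixel: int  # e.g. 16 for 8-bit 4:2:2 YUV, 24 for 8-bit RGB
    fps: float


def uncompressed_bitrate_bps(fmt: ChannelFormat) -> float:
    """Raw video bit rate of one channel, ignoring blanking and packet overhead."""
    return fmt.width * fmt.height * fmt.bits_per_pixel * fmt.fps


def choose_transfer_mode(channels: list[ChannelFormat], available_bps: float,
                         headroom: float = 0.8) -> str:
    """Return "uncompressed" if all channels fit within the usable bandwidth.

    headroom is the assumed fraction of the link that may be used for video.
    """
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    required = sum(uncompressed_bitrate_bps(c) for c in channels)
    return "uncompressed" if required <= available_bps * headroom else "compressed"
```

For example, one 1920×1080, 25 fps channel in 8-bit 4:2:2 (16 bits per pixel) needs 1920 × 1080 × 16 × 25 = 829,440,000 bit/s, about 829 Mbit/s, so it already exceeds a 1 Gbit/s link once a 20 % margin is reserved, while four such channels (about 3.3 Gbit/s) fit on a 10 Gbit/s link.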

© 2025 PatSnap. All rights reserved.