Method and apparatus for synthesizing animations in videos in real time

A video and animation technology applicable to animation production, image data processing, instruments, and similar fields. It addresses problems such as the inability to effectively satisfy visual communication needs, short duration, and the unpredictability of the camera's shooting angle and position, with the effect of meeting the visual communication needs of the video.

Examples

Embodiment 1

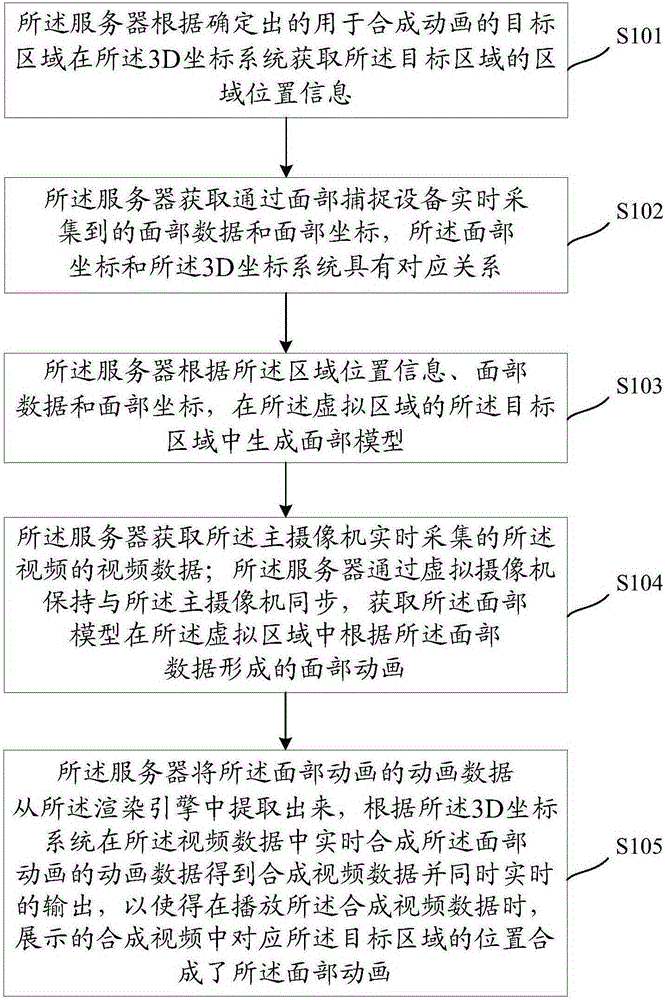

[0057] Figure 1 is a flowchart of a method for synthesizing animation in video in real time provided by an embodiment of the present invention. The method is applied to video collected in real time, and the relevant parameters must be obtained before the method is carried out.

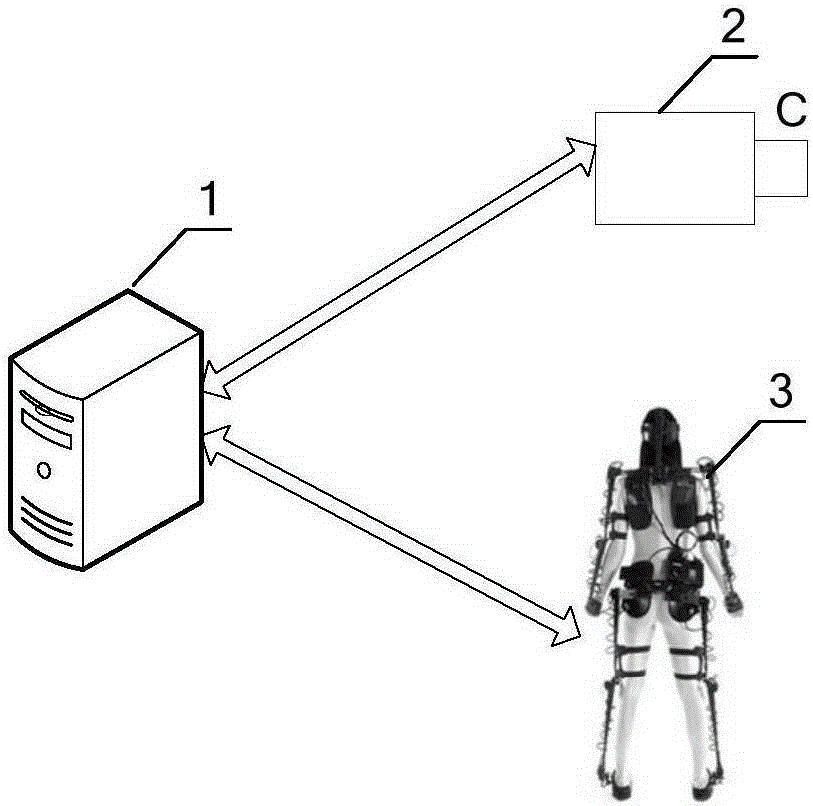

[0058] The fixed area in which the video is collected contains at least one camera, and the video is collected by the main camera among the at least one camera. The server establishes a 3D coordinate system in the fixed area and collects, in real time, the position information of the at least one camera in the 3D coordinate system and the video acquisition parameters of the main camera. Using a rendering engine, the server establishes a virtual area according to the fixed area and the 3D coordinate system; the position information of the fixed area in the 3D coordinate system has a proportional relationship with the position information of the virtual area in the 3D coord...
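The proportional relationship between the fixed area and the virtual area can be pictured with a minimal sketch. The description only says the two sets of position information are proportional; the single uniform factor `scale`, the `Point3D` type, and the function names below are assumptions made for illustration and do not come from the patent text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3D:
    """A position in the shared 3D coordinate system (hypothetical type)."""
    x: float
    y: float
    z: float


def to_virtual(p: Point3D, scale: float) -> Point3D:
    """Map a fixed-area position to the virtual area.

    Assumes one uniform scale factor for all three axes; the source
    only states that the relationship is proportional.
    """
    return Point3D(p.x * scale, p.y * scale, p.z * scale)


def to_fixed(p: Point3D, scale: float) -> Point3D:
    """Inverse mapping: virtual-area position back to the fixed area."""
    if scale == 0:
        raise ValueError("scale must be non-zero")
    return Point3D(p.x / scale, p.y / scale, p.z / scale)


# Example: a camera at (2, 4, 1) in the fixed area, virtual area at half scale.
camera_fixed = Point3D(2.0, 4.0, 1.0)
camera_virtual = to_virtual(camera_fixed, scale=0.5)
```

Under this assumption, a camera position reported in the fixed area can be placed in the rendering engine's virtual area with `to_virtual`, and `to_fixed` recovers the original position.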

Embodiment 2

[0090] In this embodiment of the present invention, in addition to synthesizing facial animation in the video in real time, skeletal animation can also be synthesized. The body movements of the skeletal animation enhance the visual communication effect of the synthesized animation.

[0091] On the basis of the embodiment corresponding to Figure 1, Figure 3 is a flowchart of a method for synthesizing animation in video in real time provided by an embodiment of the present invention.

[0092] S301: The server acquires the area position information, in the 3D coordinate system, of the target area determined for synthesizing animation.

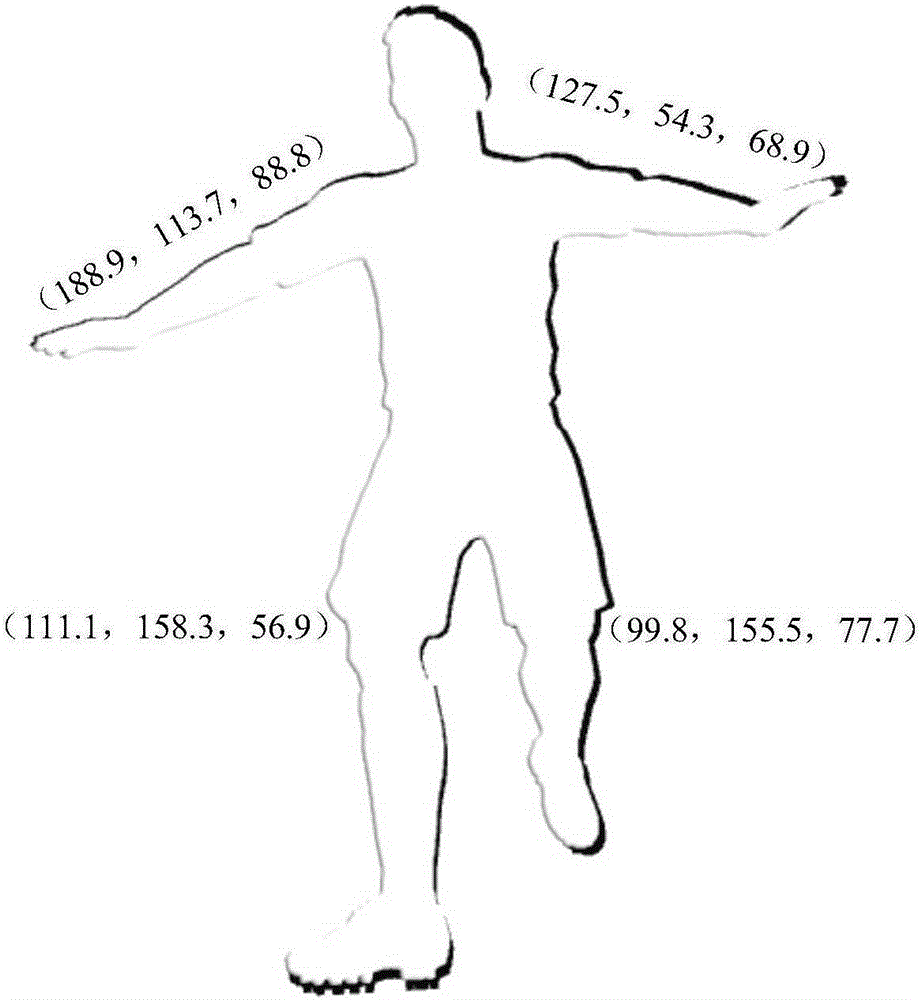

[0093] S302: The server acquires motion data and motion coordinates collected in real time by a motion capture device; the motion coordinates correspond to the 3D coordinate system. The server also acquires facial data and facial coordinates collected in...

Embodiment 3

[0110] In practical applications, the motion capture device may collect the motion data of the third object at a preset collection frequency. For example, at a preset collection frequency of 50 times per second, the motion capture device collects motion data 50 times within one second. This value is only illustrative, and the present invention is not limited thereto. In addition, the server may preset a refresh rate, referred to as the preset refresh rate for ease of description. The server generates each frame of video image of the target video at the preset refresh rate. For example, if the refresh rate of the server is 40 times per second, the server generates 40 frames of video images within one second. Again, this value is only illustrative, and the present invention is not limited thereto.

[0111] The refresh rate of the server may differ from the collection frequency...
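To make the mismatch concrete, the following sketch uses the illustrative figures from the description (50 collections per second, 40 frames per second). The pairing rule, in which each frame uses the most recent sample taken at or before the frame's timestamp, is a hypothetical policy chosen for illustration. The text available here does not say how the patent handles the mismatch, and the function names are likewise assumptions.

```python
def latest_sample_for_frame(frame_index: int, sample_rate: int, refresh_rate: int) -> int:
    """Index of the most recent sample taken at or before a frame's timestamp.

    Frame j is generated at time j / refresh_rate and sample k is collected
    at time k / sample_rate, so the latest usable sample is
    floor(j * sample_rate / refresh_rate). Integer arithmetic avoids
    floating-point rounding.
    """
    return (frame_index * sample_rate) // refresh_rate


def pair_one_second(sample_rate: int, refresh_rate: int) -> list[int]:
    """Sample index chosen for each frame generated within one second."""
    return [
        latest_sample_for_frame(j, sample_rate, refresh_rate)
        for j in range(refresh_rate)
    ]


# Illustrative rates from the description: 50 samples/s, 40 frames/s.
pairs = pair_one_second(sample_rate=50, refresh_rate=40)

# Samples that no frame picks up under this hypothetical pairing rule.
unused = sorted(set(range(50)) - set(pairs))

print(pairs[:5])    # sample indices used by the first five frames
print(len(unused))  # number of samples left unused in one second
```

With samples every 20 ms and frames every 25 ms, the two timelines coincide only every 100 ms, so under this rule one sample in every five goes unused within each second.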
