Gesture determination device and method, gesture-operated device, program, and recording medium

A gesture determination device and related gesture technology, classified under character and pattern recognition, input/output processing for data processing, image data processing, and similar fields, addresses the problem that it is difficult to distinguish the intentional actions of the operator


Examples

Embodiment 1

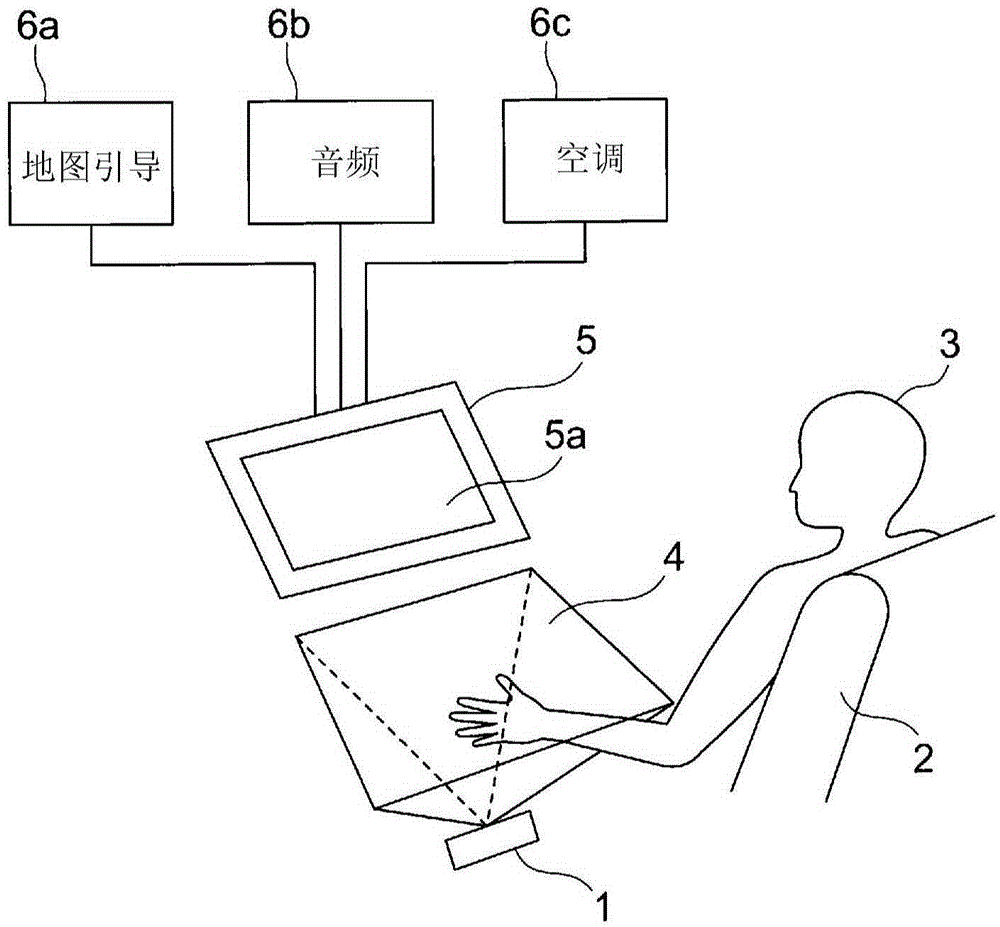

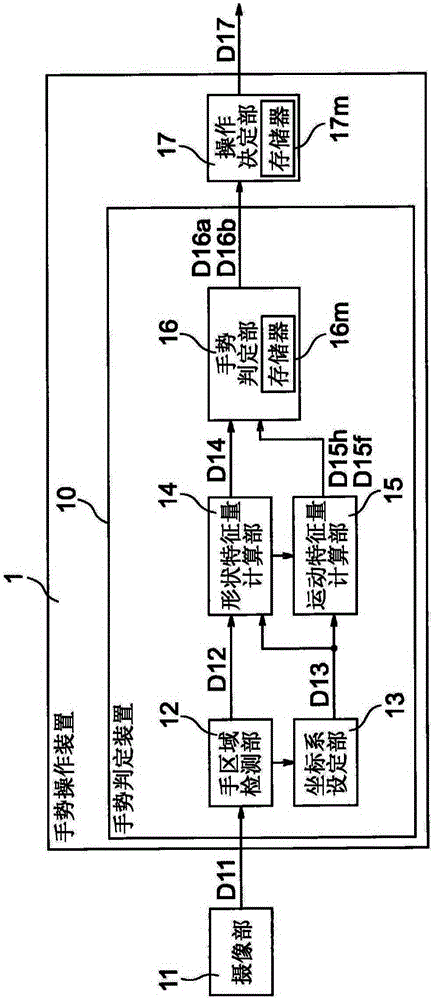

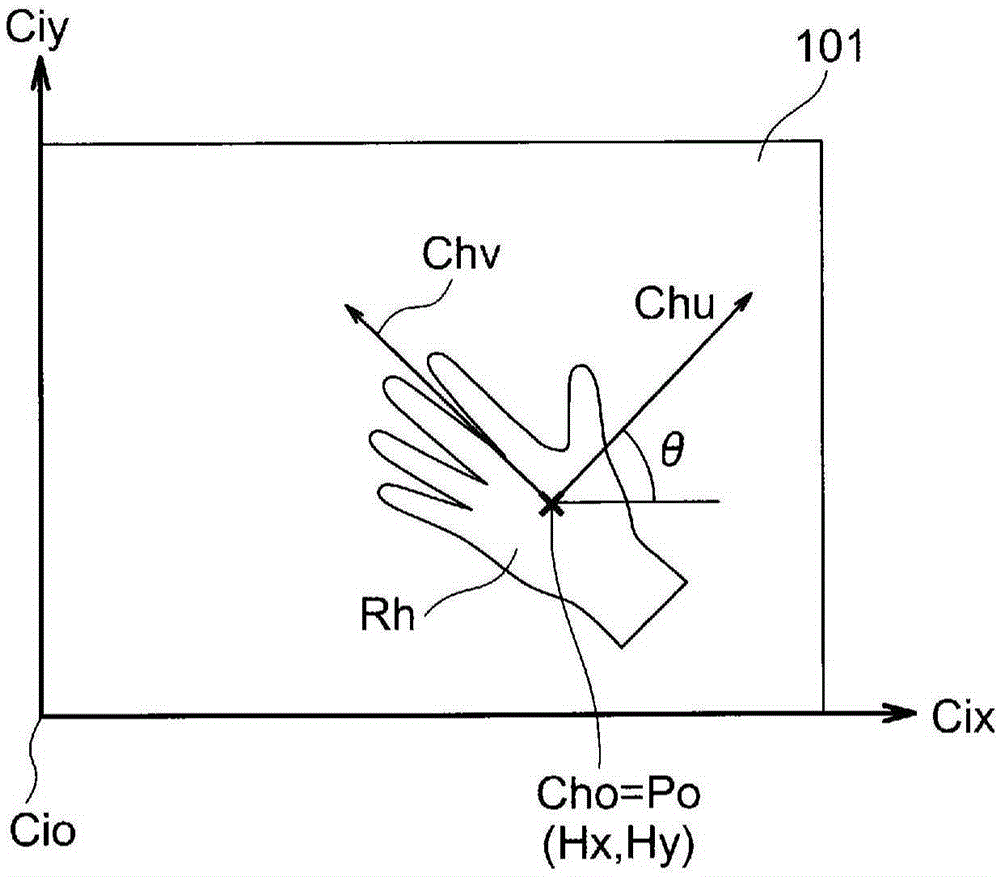

[0038] Figure 1 is a diagram showing an example of use of the gesture operation device according to Embodiment 1 of the present invention. As shown in the figure, the gesture operation device 1 recognizes a gesture that the operator 3 performs in a predetermined operation area 4. This area lies within reach of the hand of the operator 3, who sits on a seat 2 such as the driver's seat, the passenger's seat, or a rear seat of the vehicle. Based on the recognized gesture, the device gives operation instructions, via the operation control unit 5, to a plurality of in-vehicle devices 6a, 6b, and 6c as operated devices.

[0039] Next, assume a case where the operated devices are a map guidance device (navigation) 6a, an audio device 6b, and an air conditioner (air conditioning device) 6c. Operation instructions for the map guidance device 6a, audio device 6b, and air conditioner 6c are given through operation guidance displayed on the display unit 5a of the operation control unit 5, and ope...
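The routing described above, in which recognized gestures reach the in-vehicle devices 6a, 6b, and 6c through the operation control unit 5, can be pictured with a minimal sketch. Only the reference numerals and device names come from the text. The class, the gesture names (`next_device`, `swipe_up`), and the idea of cycling through devices are hypothetical and chosen purely for illustration; the excerpt does not say how a target device is selected.

```python
from typing import List, Tuple

# Reference numerals 6a, 6b, 6c and the device names come from the text.
# Everything else in this sketch is a hypothetical illustration.
DEVICES = {
    "6a": "map guidance device",
    "6b": "audio device",
    "6c": "air conditioner",
}


class OperationControlUnit:
    """Toy stand-in for operation control unit 5 (assumed behavior)."""

    def __init__(self) -> None:
        self.selected = "6a"  # device currently targeted by gestures
        self.log: List[Tuple[str, str]] = []  # (device id, command) pairs sent

    def handle(self, gesture: str) -> None:
        """Route one recognized gesture (gesture names are hypothetical)."""
        if gesture == "next_device":
            # Cycle to the next operated device in declaration order.
            ids = list(DEVICES)
            self.selected = ids[(ids.index(self.selected) + 1) % len(ids)]
        else:
            # Any other gesture is forwarded to the selected device.
            self.log.append((self.selected, gesture))


unit = OperationControlUnit()
for g in ["swipe_up", "next_device", "swipe_up"]:
    unit.handle(g)
```

After this run, `unit.log` holds one command for device 6a followed by one for device 6b, which shows a single gesture vocabulary being shared across several operated devices.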

Embodiment 2

[0204] Figure 14 is a block diagram showing the configuration of the gesture operation device according to Embodiment 2 of the present invention. The gesture operation device shown in Figure 14 is largely the same as the gesture operation device shown in Figure 2, and the same reference numerals as in Figure 2 denote the same or corresponding parts. The difference is that a mode control unit 18 and a memory 19 are added, and a coordinate system setting unit 13a is provided instead of the coordinate system setting unit 13 shown in Figure 2.

[0205] First, an overview of the device will be described.

[0206] The mode control unit 18 is supplied with mode selection information MSI from the outside, and outputs mode control information D18 to the coordinate system setting unit 13a.

[0207] The coordinate system setting unit 13a is supplied with the hand area information D12 from the hand area detection unit 12, and is supplied with the mode control information D18 from the ...

Embodiment 3

[0248] Figure 16 is a block diagram showing the configuration of the gesture operation device according to Embodiment 3 of the present invention. The gesture operation device shown in Figure 16 is largely the same as the gesture operation device shown in Figure 2, and the same reference numerals as in Figure 2 denote the same or corresponding parts.

[0249] The gesture operation device shown in Figure 16 differs from the one shown in Figure 2 in that an operator estimation unit 20 is added, and an operation determination unit 17a is provided instead of the operation determination unit 17.

[0250] The operator estimation unit 20 estimates the operator based on one or both of the origin coordinates and the relative angle of the hand coordinate system output by the coordinate system setting unit 13, and outputs operator information D20 to the operation determination unit 17a. The estimation of the operator here may be, for example,...
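As a rough illustration of how an estimate could use "one or both" of the two inputs, the sketch below lets each available input cast a vote. The excerpt breaks off before giving its own example, so the decision rule here is an assumption and not the patent's method. The names `HandCoordinateSystem` and `estimate_operator`, the 640-pixel image width, the angle threshold, and the left/right seat labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class HandCoordinateSystem:
    """Hypothetical container for the output of coordinate system setting unit 13."""
    origin: Optional[Tuple[float, float]] = None  # (x, y) in image pixels
    relative_angle_deg: Optional[float] = None    # angle relative to the image axes


def estimate_operator(hand: HandCoordinateSystem,
                      image_width: float = 640.0,
                      angle_threshold_deg: float = 0.0) -> str:
    """Return 'left_seat', 'right_seat', or 'unknown'.

    Assumed rule (not from the source): each available input votes for the
    side it suggests, and a tie or missing data yields 'unknown'.
    """
    votes = 0
    if hand.origin is not None:
        # Hand entering on the left half of the image votes for the left seat.
        votes += -1 if hand.origin[0] < image_width / 2 else 1
    if hand.relative_angle_deg is not None:
        # Angle below the threshold votes for the left seat.
        votes += -1 if hand.relative_angle_deg < angle_threshold_deg else 1
    if votes < 0:
        return "left_seat"
    if votes > 0:
        return "right_seat"
    return "unknown"


d20 = estimate_operator(HandCoordinateSystem(origin=(100.0, 200.0)))
```

Here `d20` plays the role of the operator information D20. Keeping both inputs optional mirrors the "one or both" wording, and returning `unknown` on a tie avoids forcing a guess when the two cues disagree.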
