Speech search device and speech search method

A speech recognition technology applied in speech analysis, speech recognition, natural language data processing, and related fields, with the effect of improving retrieval accuracy.

Examples

Embodiment 1

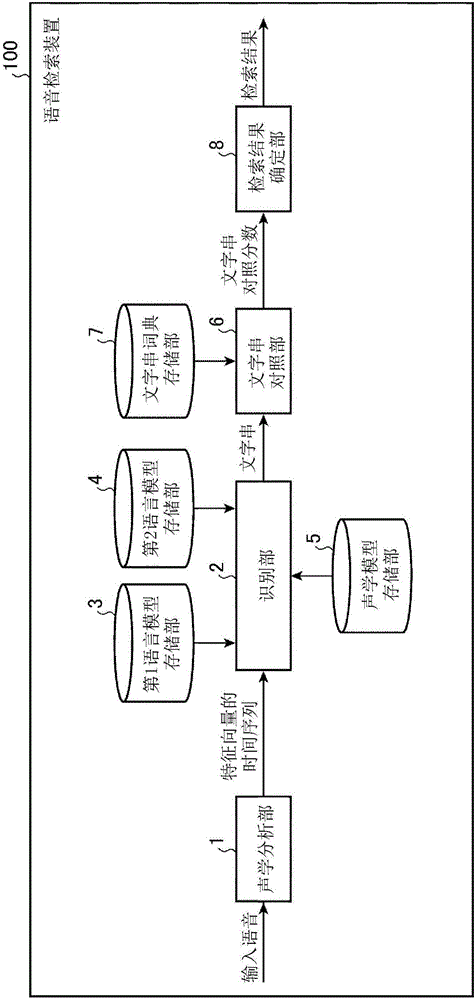

[0025] FIG. 1 is a block diagram showing the configuration of the speech search device according to Embodiment 1 of the present invention.

[0026] The speech search device 100 is composed of an acoustic analysis unit 1, a recognition unit 2, a first language model storage unit 3, a second language model storage unit 4, an acoustic model storage unit 5, a character string comparison unit 6, a character string dictionary storage unit 7, and a search result determination unit 8.

[0027] The acoustic analysis unit 1 performs acoustic analysis of the input speech and converts it into a time series of feature vectors. Each feature vector consists, for example, of the 1st- through N-th-dimensional MFCCs (Mel-Frequency Cepstral Coefficients), where N is, for example, 16.
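To make the feature extraction step concrete, here is a minimal pure-Python MFCC sketch. Only N = 16 comes from the text; the framing parameters (25 ms frames with a 10 ms hop at 16 kHz, a 512-point DFT, 24 mel filters) are common defaults assumed here, and reading "1st- through N-th-dimensional" as cepstral coefficients c1 to cN (dropping c0) is also an assumption. The function names are hypothetical.

```python
import math


def hz_to_mel(f: float) -> float:
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(num_filters: int, n_fft: int, sample_rate: int) -> list[list[float]]:
    """Triangular filters spaced evenly on the mel scale from 0 Hz to Nyquist."""
    low, high = hz_to_mel(0.0), hz_to_mel(sample_rate / 2)
    mels = [low + (high - low) * i / (num_filters + 1) for i in range(num_filters + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sample_rate) for m in mels]
    n_bins = n_fft // 2 + 1
    fbank = []
    for j in range(1, num_filters + 1):
        left, center, right = bins[j - 1], bins[j], bins[j + 1]
        row = [0.0] * n_bins
        for k in range(left, center):      # rising edge of the triangle
            row[k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge of the triangle
            row[k] = (right - k) / (right - center)
        fbank.append(row)
    return fbank


def mfcc(signal: list[float], sample_rate: int = 16000, frame_len: int = 400,
         hop: int = 160, n_fft: int = 512, num_filters: int = 24,
         n_ceps: int = 16) -> list[list[float]]:
    """Return one vector of cepstral coefficients c1..c_n_ceps per frame."""
    window = [0.54 - 0.46 * math.cos(2 * math.pi * i / (frame_len - 1))
              for i in range(frame_len)]                      # Hamming window
    fbank = mel_filterbank(num_filters, n_fft, sample_rate)
    n_bins = n_fft // 2 + 1
    # Precomputed DFT basis (naive O(n^2) DFT; a real system would use an FFT).
    cos_t = [[math.cos(2 * math.pi * k * n / n_fft) for n in range(n_fft)] for k in range(n_bins)]
    sin_t = [[math.sin(2 * math.pi * k * n / n_fft) for n in range(n_fft)] for k in range(n_bins)]
    features = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        # Zero-padding to n_fft adds nothing to the sums, so zip over the real samples only.
        power = []
        for k in range(n_bins):
            re = sum(x * c for x, c in zip(frame, cos_t[k]))
            im = sum(x * s for x, s in zip(frame, sin_t[k]))
            power.append((re * re + im * im) / n_fft)
        # Log mel energies, floored to avoid log(0).
        log_mel = [math.log(max(sum(w * p for w, p in zip(row, power)), 1e-10))
                   for row in fbank]
        # Unnormalized DCT-II; keep c1..c_n_ceps and drop c0 (overall energy).
        ceps = [sum(e * math.cos(math.pi * q * (m + 0.5) / num_filters)
                    for m, e in enumerate(log_mel))
                for q in range(1, n_ceps + 1)]
        features.append(ceps)
    return features


# Example: 50 ms of a 440 Hz tone at 16 kHz gives 3 frames of 16 coefficients each.
tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(800)]
feats = mfcc(tone)
print(len(feats), len(feats[0]))
```

The output is a time series of 16-dimensional vectors, which is the shape of input the recognition unit 2 consumes in this description.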

[0028] The recognition unit 2 uses the first language model stored in the first language model storage unit 3, the second language model stored in the s...
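Paragraph [0028] is truncated here, so the way unit 2 combines the acoustic model with the two language models is not shown. As a rough illustration of the common decoding criterion log P(X|W) + λ·log P(W) only, the sketch below rescores a fixed list of candidate strings once per language model and keeps the best string for each. The toy unigram models, the `lm_weight` parameter (λ), and all names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str              # candidate character string (words separated by spaces)
    acoustic_logp: float   # log P(X | W) from the acoustic model


def unigram_logp(text: str, lm: dict[str, float], unk_logp: float = -20.0) -> float:
    """log P(W) under a toy unigram model, a hypothetical stand-in for a real language model."""
    return sum(lm.get(word, unk_logp) for word in text.split())


def best_per_model(hyps: list[Hypothesis], language_models: dict[str, dict[str, float]],
                   lm_weight: float = 1.0) -> dict[str, tuple[str, float, float]]:
    """For each language model, pick the hypothesis maximizing
    log P(X|W) + lm_weight * log P(W); return (text, acoustic log-lik, language log-lik)."""
    results = {}
    for name, lm in language_models.items():
        scored = [(h, unigram_logp(h.text, lm)) for h in hyps]
        best, lang = max(scored, key=lambda s: s[0].acoustic_logp + lm_weight * s[1])
        results[name] = (best.text, best.acoustic_logp, lang)
    return results


hyps = [Hypothesis("tokyo tower", -119.0), Hypothesis("tokyo power", -118.0)]
lms = {
    "first": {"tokyo": math.log(0.01), "tower": math.log(0.005), "power": math.log(0.001)},
    "second": {"tokyo": math.log(0.02), "tower": math.log(0.0001), "power": math.log(0.02)},
}
for name, (text, ac, lang) in best_per_model(hyps, lms).items():
    print(name, text, ac, round(lang, 2))
```

With the same acoustic evidence, the two models prefer different strings, which is why keeping one result per language model (together with its likelihoods) can be useful downstream.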

Embodiment 2

[0066] FIG. 4 is a block diagram showing the configuration of the speech search device according to Embodiment 2 of the present invention.

[0067] In the speech search device 100a according to Embodiment 2, the recognition unit 2a outputs to the search result determination unit 8a not only the character string obtained as the recognition result but also the acoustic likelihood and language likelihood of that character string. The search result determination unit 8a determines the search result using the acoustic likelihood and the language likelihood in addition to the character string collation score.
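The determination in [0067] can be sketched as a weighted sum over (recognition result, dictionary entry) pairs. The excerpt does not say how the collation score is computed or how the three terms are weighted, so the normalized edit-distance similarity, the weights `w_collation`, `w_acoustic`, `w_language`, and all names below are hypothetical choices.

```python
from dataclasses import dataclass


def edit_distance(a: str, b: str) -> int:
    """Character-level Levenshtein distance (two-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # deletion
                           cur[j - 1] + 1,              # insertion
                           prev[j - 1] + (ca != cb)))   # substitution
        prev = cur
    return prev[-1]


def collation_score(recognized: str, entry: str) -> float:
    """Hypothetical similarity in [0, 1]: 1 - distance / length of the longer string."""
    longest = max(len(recognized), len(entry), 1)
    return 1.0 - edit_distance(recognized, entry) / longest


@dataclass
class RecognitionResult:
    text: str
    acoustic_logp: float   # acoustic log-likelihood of the string
    language_logp: float   # language log-likelihood of the string


def determine_result(results: list[RecognitionResult], dictionary: list[str],
                     w_collation: float = 1.0, w_acoustic: float = 0.01,
                     w_language: float = 0.1) -> tuple[str, float]:
    """Return the dictionary entry with the highest combined score, and that score."""
    best_entry, best_score = "", float("-inf")
    for r in results:
        for entry in dictionary:
            score = (w_collation * collation_score(r.text, entry)
                     + w_acoustic * r.acoustic_logp
                     + w_language * r.language_logp)
            if score > best_score:
                best_entry, best_score = entry, score
    return best_entry, best_score


dictionary = ["tokyo tower", "kyoto tower", "tokyo station"]
results = [RecognitionResult("tokyo towr", -120.0, -10.0),
           RecognitionResult("kyoto tower", -150.0, -12.0)]
print(determine_result(results, dictionary))
```

Here "tokyo towr" wins despite an imperfect collation score, because its acoustic and language likelihoods outweigh those of the exact match "kyoto tower"; this is the kind of trade-off that using the likelihoods alongside the collation score makes possible.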

[0068] Hereinafter, parts that are the same as or correspond to the constituent elements of the speech search device 100 according to Embodiment 1 are given the same reference numerals as those used in FIG. 1, and their descriptions are omitted or simplified.

[0069] The recognition unit 2a performs th...

Embodiment 3

[0080] FIG. 6 is a block diagram showing the configuration of the speech search device according to Embodiment 3 of the present invention.

[0081] Compared with the speech search device 100a of Embodiment 2, the speech search device 100b of Embodiment 3 includes only the second language model storage unit 4 and lacks the first language model storage unit 3. Recognition using the first language model is therefore performed by the external recognition device 200.

[0082] Hereinafter, parts that are the same as or correspond to the constituent elements of the speech search device 100a according to Embodiment 2 are given the same reference numerals as those used in FIG. 4, and their descriptions are omitted or simplified.

[0083] The external recognition device 200 can be constituted by, for example, a server with relatively high computing power, and obtains the time-series character string closest to the feature vectors input from the acous...
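Structurally, Embodiment 3 moves recognition with the first language model out of the device: the feature vectors from the acoustic analysis unit are handed to the external recognition device 200, while the device itself recognizes with the second language model. A minimal sketch of that split follows, assuming a hypothetical `send` transport in place of the real server call and a simple sum of log-likelihoods as the selection rule (the actual determination rule is not shown in this excerpt). All class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Protocol, Sequence

FeatureSeq = Sequence[Sequence[float]]  # time series of feature vectors (e.g. MFCC frames)


@dataclass
class RecognitionResult:
    text: str
    acoustic_logp: float
    language_logp: float


class Recognizer(Protocol):
    def recognize(self, features: FeatureSeq) -> RecognitionResult: ...


class LocalRecognizer:
    """Toy stand-in for on-device recognition with the second language model."""

    def __init__(self, canned: RecognitionResult):
        self.canned = canned

    def recognize(self, features: FeatureSeq) -> RecognitionResult:
        return self.canned


class ExternalRecognizerClient:
    """Stand-in for external recognition device 200 (first language model).
    `send` is a hypothetical transport, e.g. a request to a server."""

    def __init__(self, send: Callable[[FeatureSeq], RecognitionResult]):
        self.send = send

    def recognize(self, features: FeatureSeq) -> RecognitionResult:
        return self.send(features)


class SpeechSearchDevice:
    """Holds only the second language model locally; delegates the first to an external device."""

    def __init__(self, local: Recognizer, external: Recognizer,
                 determine: Callable[[list[RecognitionResult]], str]):
        self.local, self.external, self.determine = local, external, determine

    def search(self, features: FeatureSeq) -> str:
        # Both recognizers receive the same feature vectors from acoustic analysis.
        results = [self.local.recognize(features), self.external.recognize(features)]
        return self.determine(results)


def pick_by_likelihood(results: list[RecognitionResult]) -> str:
    # Hypothetical selection rule: highest acoustic + language log-likelihood.
    return max(results, key=lambda r: r.acoustic_logp + r.language_logp).text


device = SpeechSearchDevice(
    local=LocalRecognizer(RecognitionResult("tokyo power", -118.0, -12.0)),
    external=ExternalRecognizerClient(lambda feats: RecognitionResult("tokyo tower", -119.0, -9.0)),
    determine=pick_by_likelihood,
)
print(device.search([[0.0] * 16] * 3))
```

Keeping the recognizers behind one interface lets the device treat local and remote results identically, so only the transport changes when the first language model lives on a server.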

© 2025 PatSnap. All rights reserved.