A task processing method and server

A task processing method and server, applied in the field of resource optimization. The technology addresses problems such as degraded user performance experience, reduced efficiency of operation centers, and large disparities in resource distribution. It aims to improve resource utilization, avoid congestion, and improve overall performance.


Examples

Embodiment 1

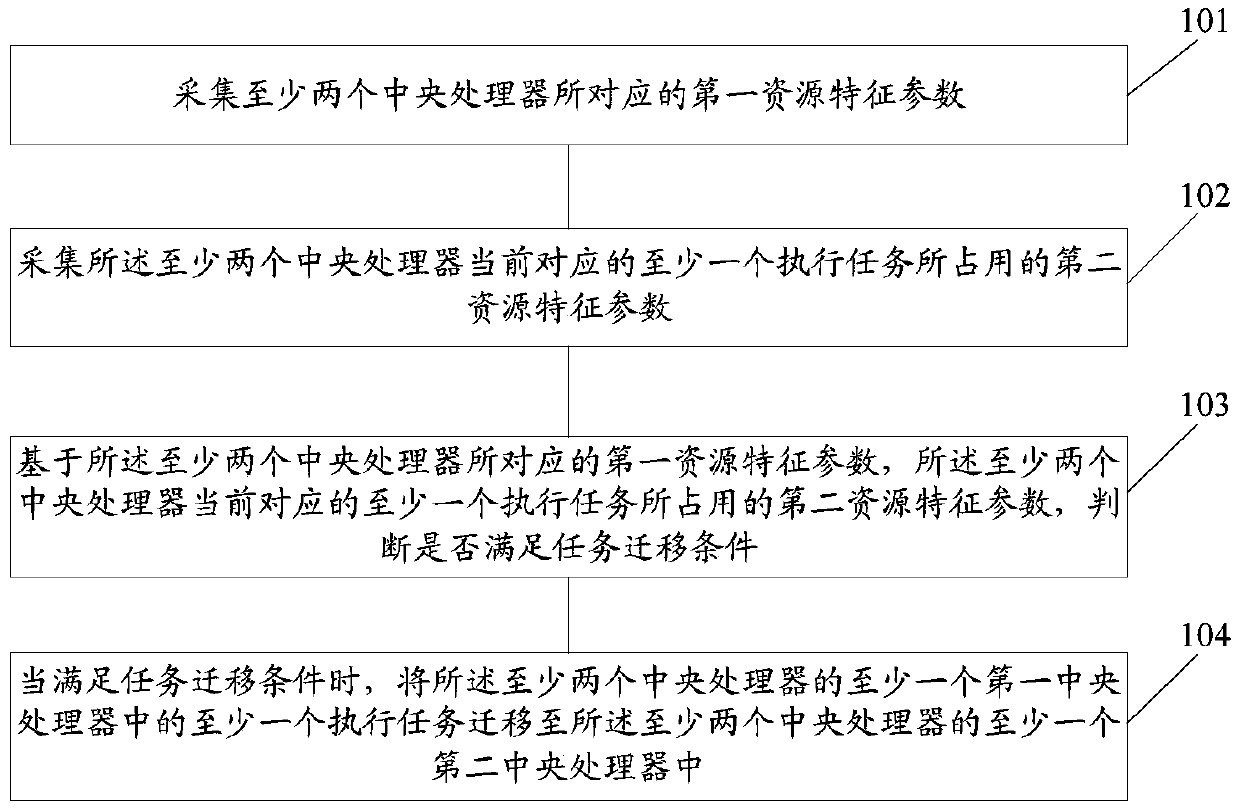

[0022] Figure 1 is a schematic flow diagram of a task processing method in an embodiment of the present invention. As shown in Figure 1, the method includes:

[0023] Step 101: collecting first resource characteristic parameters corresponding to at least two central processing units;

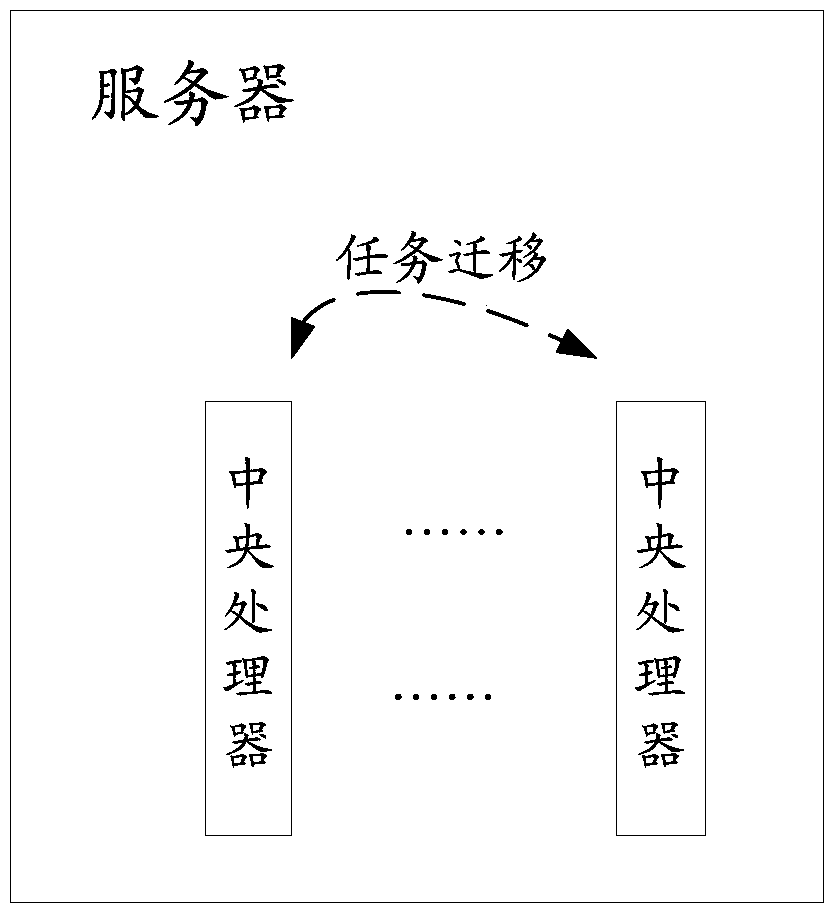

[0024] Here, the method described in this embodiment can be applied to a server or to a server cluster. When the method is applied to a server, as shown in Figure 2, the server may include at least two central processing units (CPUs). In this case, the method can migrate tasks between the at least two CPUs of the server. This balances the memory bandwidth load across those CPUs and thereby improves the overall performance of the server.
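Neither Step 101 nor [0024] states exactly which first resource characteristic parameters are collected. Because [0024] frames the goal as balancing memory bandwidth between CPUs, the minimal sketch below assumes that each CPU's parameters are its consumed and peak memory bandwidth. `CpuResources`, `collect_cpu_resources` and the `sample_bandwidth` hook are hypothetical names. The fixed sample values stand in for real hardware counters.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class CpuResources:
    """Hypothetical 'first resource characteristic parameters' of one CPU."""
    cpu_id: int
    mem_bw_used_gbps: float   # memory bandwidth currently consumed (assumed metric)
    mem_bw_peak_gbps: float   # maximum memory bandwidth of this CPU (assumed metric)

    @property
    def utilization(self) -> float:
        """Fraction of the peak memory bandwidth currently in use."""
        return self.mem_bw_used_gbps / self.mem_bw_peak_gbps


def collect_cpu_resources(
    cpu_ids: List[int],
    sample_bandwidth: Callable[[int], Tuple[float, float]],
) -> Dict[int, CpuResources]:
    """Collect parameters for at least two CPUs via a caller-supplied sampler.

    `sample_bandwidth(cpu_id)` is an assumed hook returning (used, peak) in GB/s.
    """
    if len(cpu_ids) < 2:
        raise ValueError("the method assumes at least two CPUs")
    result: Dict[int, CpuResources] = {}
    for cpu_id in cpu_ids:
        used, peak = sample_bandwidth(cpu_id)
        result[cpu_id] = CpuResources(cpu_id, used, peak)
    return result


if __name__ == "__main__":
    # Fixed sample values stand in for real hardware counters.
    fake_samples = {0: (48.0, 60.0), 1: (12.0, 60.0)}
    resources = collect_cpu_resources([0, 1], lambda c: fake_samples[c])
    for r in resources.values():
        print(r.cpu_id, round(r.utilization, 2))
```

Injecting the sampler as a callback keeps the sketch independent of any particular monitoring interface.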

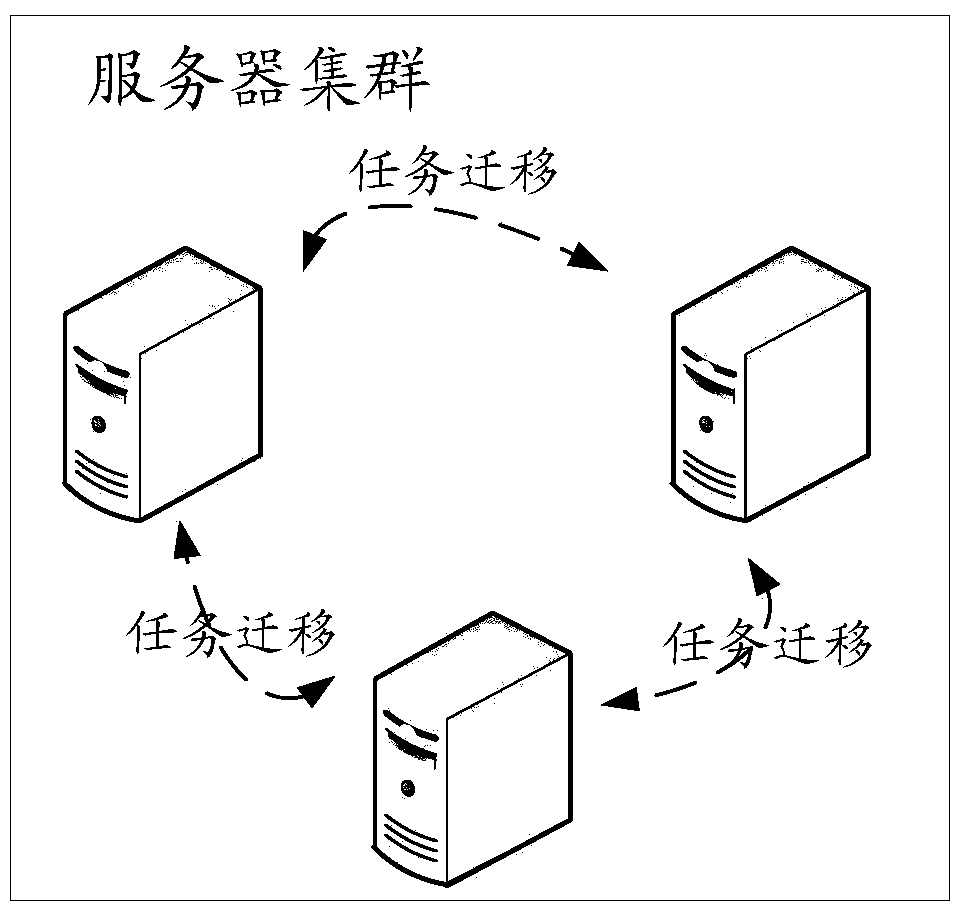

[0025] Alternatively, when the method described in the embodiment of the present invention is applied to a server cl...

Embodiment 2

[0037] Figure 4 is a schematic flow diagram of an implementation of a second task processing method in an embodiment of the present invention. As shown in Figure 4, the method includes:

[0038] Step 401: collecting first resource characteristic parameters corresponding to at least two central processing units;

[0039] Here, the method described in this embodiment can be applied to a server or to a server cluster. When the method is applied to a server, as shown in Figure 2, the server may include at least two central processing units (CPUs). In this case, the method can migrate tasks between the at least two CPUs of the server. This balances the memory bandwidth load across those CPUs and thereby improves the overall performance of the server.

[0040] Alternatively, when the method described in the embodiment of the present inven...

Embodiment 3

[0066] This embodiment provides a server. As shown in Figure 6, the server includes:

[0067] The collection unit 61 is configured to collect first resource characteristic parameters corresponding to at least two central processors. It is also configured to collect second resource characteristic parameters describing the resources currently occupied by at least one execution task corresponding to the at least two central processors;
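A similar sketch for the second resource characteristic parameters follows. Purely as an illustration, it assumes that each execution task reports the memory bandwidth it currently occupies and the CPU it runs on. `TaskUsage`, `tasks_by_cpu` and `bandwidth_per_cpu` are hypothetical names. The sketch indexes tasks by CPU, heaviest consumer first, and totals their bandwidth per CPU.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class TaskUsage:
    """Hypothetical 'second resource characteristic parameter' of one task."""
    task_id: str
    cpu_id: int          # CPU the task is currently executing on
    mem_bw_gbps: float   # memory bandwidth the task currently occupies (assumed)


def tasks_by_cpu(tasks: Iterable[TaskUsage]) -> Dict[int, List[TaskUsage]]:
    """Index tasks by CPU, heaviest bandwidth consumer first."""
    grouped: Dict[int, List[TaskUsage]] = defaultdict(list)
    for task in tasks:
        grouped[task.cpu_id].append(task)
    for task_list in grouped.values():
        task_list.sort(key=lambda t: t.mem_bw_gbps, reverse=True)
    return dict(grouped)


def bandwidth_per_cpu(tasks: Iterable[TaskUsage]) -> Dict[int, float]:
    """Sum the bandwidth occupied by the tracked tasks on each CPU."""
    totals: Dict[int, float] = defaultdict(float)
    for task in tasks:
        totals[task.cpu_id] += task.mem_bw_gbps
    return dict(totals)


if __name__ == "__main__":
    sample = [
        TaskUsage("db", 0, 30.0),
        TaskUsage("etl", 0, 18.0),
        TaskUsage("web", 1, 12.0),
    ]
    print(bandwidth_per_cpu(sample))                     # {0: 48.0, 1: 12.0}
    print([t.task_id for t in tasks_by_cpu(sample)[0]])  # ['db', 'etl']
```

Sorting heaviest-first makes the largest candidates for migration easy to inspect.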

[0068] The processing unit 62 is configured to determine whether a task migration condition is satisfied. When the task migration condition is satisfied, it migrates at least one execution task of at least one first central processor of the at least two central processors to at least one second central processor of the at least two central processors.
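The text does not define the task migration condition. The sketch below shows one plausible reading, purely as an assumption. Migration is triggered when the spread between the most and least loaded CPUs' memory-bandwidth utilization exceeds a threshold. The busiest CPU then acts as the first CPU and the idlest as the second, and the task moved is the one whose share leaves the two closest to equal. The 0.30 threshold, the function names, and the assumption that the CPUs are identical (so a task's utilization share carries over unchanged) are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical threshold: migrate only when the busiest and idlest CPUs differ
# by more than 30 percentage points of memory-bandwidth utilization.
IMBALANCE_THRESHOLD = 0.30


def migration_needed(util: Dict[int, float],
                     threshold: float = IMBALANCE_THRESHOLD) -> bool:
    """Assumed task migration condition: utilization spread exceeds threshold."""
    return max(util.values()) - min(util.values()) > threshold


def plan_migration(
    util: Dict[int, float],
    tasks: Dict[int, List[Tuple[str, float]]],
    threshold: float = IMBALANCE_THRESHOLD,
) -> Optional[Tuple[str, int, int]]:
    """Return (task_id, first_cpu, second_cpu), or None if no move is planned.

    util:  CPU id -> memory-bandwidth utilization (fraction of peak).
    tasks: CPU id -> [(task_id, utilization share the task contributes)].
    Assumes identical CPUs, so a task's share is the same on either CPU.
    """
    if not migration_needed(util, threshold):
        return None
    first_cpu = max(util, key=lambda c: util[c])   # most loaded: source
    second_cpu = min(util, key=lambda c: util[c])  # least loaded: target
    gap = util[first_cpu] - util[second_cpu]
    # Only tasks whose move actually narrows the gap are candidates.
    candidates = [(tid, share) for tid, share in tasks.get(first_cpu, [])
                  if 0 < share < gap]
    if not candidates:
        return None
    # Moving share s leaves a new gap of |gap - 2s|; pick the smallest.
    task_id, _ = min(candidates, key=lambda c: abs(gap - 2 * c[1]))
    return task_id, first_cpu, second_cpu


if __name__ == "__main__":
    util = {0: 0.8, 1: 0.2}
    tasks = {0: [("db", 0.5), ("etl", 0.3)], 1: [("web", 0.2)]}
    print(plan_migration(util, tasks))  # ('etl', 0, 1)
```

In the example, moving the larger task ("db") would simply reverse the imbalance (0.3 versus 0.7). Choosing the task that minimizes `abs(gap - 2 * share)` avoids that overshoot.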

[0069] In an embodiment, the processing unit 62 is further configured to group the at least two central processors when the task migration condition is satisfied, to obtain a first group ...
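The grouping in [0069] is truncated, so its criteria are unknown. The hypothetical sketch below places CPUs whose utilization exceeds the average by more than a margin into a first group (migration sources). CPUs below the average by more than that margin go into a second group (migration targets). It then pairs the busiest source with the idlest target, and so on. The 0.05 margin and the function names are assumptions.

```python
from statistics import mean
from typing import Dict, List, Tuple


def group_cpus(util: Dict[int, float],
               margin: float = 0.05) -> Tuple[List[int], List[int]]:
    """Hypothetical grouping of CPUs by memory-bandwidth utilization.

    First group: CPUs more than `margin` above the average (sources),
    busiest first. Second group: CPUs more than `margin` below the average
    (targets), idlest first. CPUs near the average are left out of both.
    """
    avg = mean(util.values())
    first = sorted((c for c in util if util[c] > avg + margin),
                   key=lambda c: util[c], reverse=True)
    second = sorted((c for c in util if util[c] < avg - margin),
                    key=lambda c: util[c])
    return first, second


def pair_for_migration(util: Dict[int, float],
                       margin: float = 0.05) -> List[Tuple[int, int]]:
    """Pair the busiest source with the idlest target, and so on."""
    first, second = group_cpus(util, margin)
    return list(zip(first, second))


if __name__ == "__main__":
    util = {0: 0.9, 1: 0.7, 2: 0.2, 3: 0.3, 4: 0.5}
    print(group_cpus(util))          # ([0, 1], [2, 3])
    print(pair_for_migration(util))  # [(0, 2), (1, 3)]
```

Because `zip` stops at the shorter group, any unpaired CPUs are simply left for a later balancing round in this sketch.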


© 2025 PatSnap. All rights reserved.