Scene image text detection method based on discriminative dictionary learning and sparse representation

A dictionary learning and sparse representation technique, applied in character and pattern recognition, instruments, computer components, etc., that addresses the difficulty of detecting text in scene images.


Examples

Embodiment 1

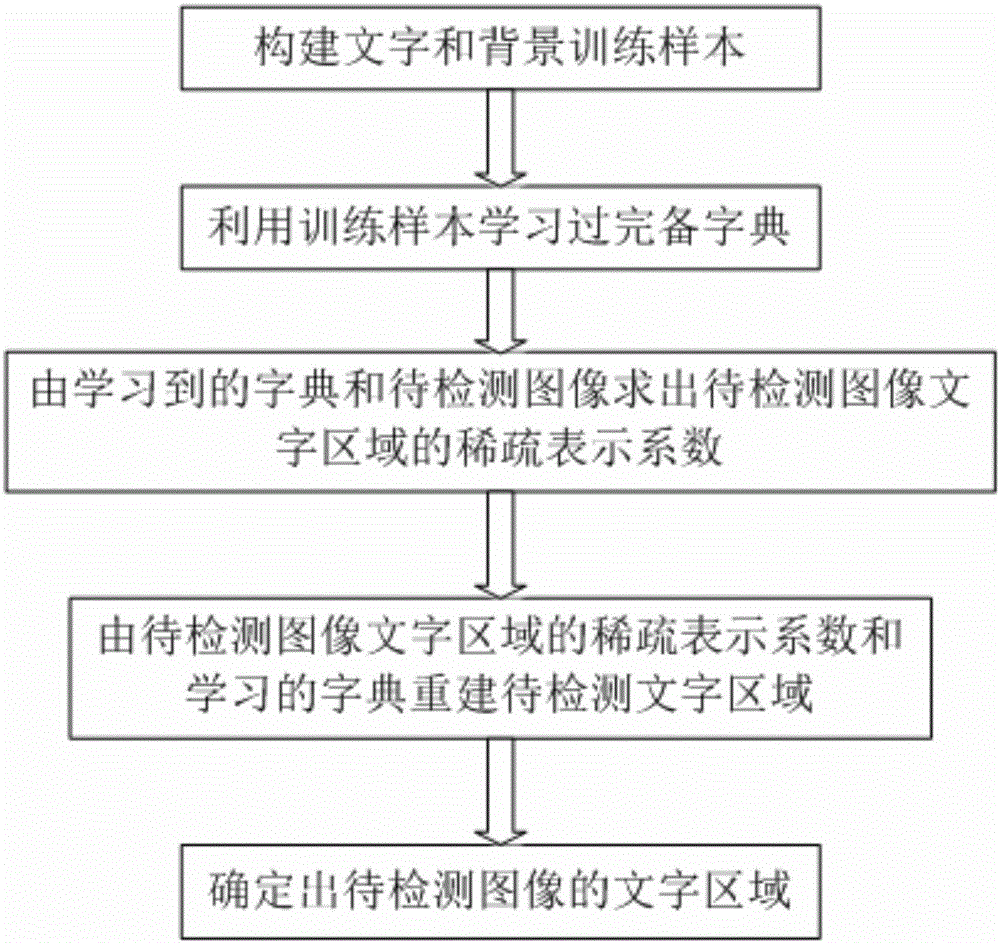

[0059] Embodiment 1: As shown in Figures 1-7, a scene image text detection method based on discriminative dictionary learning and sparse representation works as follows. First, the training data and the proposed discriminative dictionary learning method are used to learn two dictionaries, a text dictionary and a background dictionary, which are then merged in order (text dictionary first, then background dictionary). Next, the sparse representation coefficients of the text and of the background in the image to be detected are computed from the merged dictionary, the image to be detected, and a sparse representation method. The text in the image to be detected is then reconstructed from the learned dictionary and the corresponding computed sparse representation coefficients. Finally, heuristic rules are applied to the text regions of the reconstructed text image to detect candidate text regions in the image to be detected.
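The merge, sparse-coding, and reconstruction stages described above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the patented implementation: the discriminative dictionary learning step is not reproduced, so `D_text` and `D_bg` are taken as given (random unit-norm columns stand in for learned dictionaries); Orthogonal Matching Pursuit (OMP) is swapped in as a generic sparse coder because the excerpt does not name the solver; and the function names `omp`, `separate_text`, and `unit_columns` are hypothetical. The heuristic post-processing rules are not shown.

```python
import numpy as np


def omp(D, x, n_nonzero):
    """Orthogonal Matching Pursuit (a stand-in sparse coder, not necessarily
    the patent's): greedy sparse code of x over dictionary D, whose columns
    (atoms) are assumed to have unit norm."""
    x = np.asarray(x, dtype=float)
    residual = x.copy()
    support = []
    sol = np.zeros(0)
    for _ in range(n_nonzero):
        # Select the atom most correlated with the current residual.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j not in support:
            support.append(j)
        # Refit all selected atoms by least squares, then update the residual.
        sol, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ sol
        if np.linalg.norm(residual) < 1e-10:
            break
    coef = np.zeros(D.shape[1])
    coef[support] = sol
    return coef


def separate_text(D_text, D_bg, patches, n_nonzero=5):
    """Sparse-code every patch (one column of `patches`) over the merged
    dictionary [D_text, D_bg], then rebuild the text and background
    components from their own atoms and coefficients."""
    D = np.hstack([D_text, D_bg])          # merged dictionary: text atoms first
    k = D_text.shape[1]                    # number of text atoms
    A = np.column_stack([omp(D, patches[:, i], n_nonzero)
                         for i in range(patches.shape[1])])
    text_part = D_text @ A[:k]             # reconstruction from text atoms only
    background_part = D_bg @ A[k:]         # reconstruction from background atoms only
    return text_part, background_part, A


def unit_columns(M):
    """Normalize each column to unit L2 norm."""
    return M / np.linalg.norm(M, axis=0, keepdims=True)


# Hypothetical demo: random stand-ins for the learned dictionaries.
rng = np.random.default_rng(0)
D_text = unit_columns(rng.standard_normal((64, 32)))
D_bg = unit_columns(rng.standard_normal((64, 32)))
# One patch built from a text atom, one built from a background atom.
patches = np.column_stack([2.0 * D_text[:, 1], 1.5 * D_bg[:, 4]])
text_part, bg_part, A = separate_text(D_text, D_bg, patches)
print(np.round(np.linalg.norm(text_part, axis=0), 3))  # prints [2. 0.]
```

The design point is that coding over the merged dictionary lets the two sub-dictionaries compete for each patch; keeping only the text-dictionary coefficients then yields the reconstructed text image that the heuristic rules operate on.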

[0060] The specific steps are:

[0061] Step1, fir...

Embodiment 2

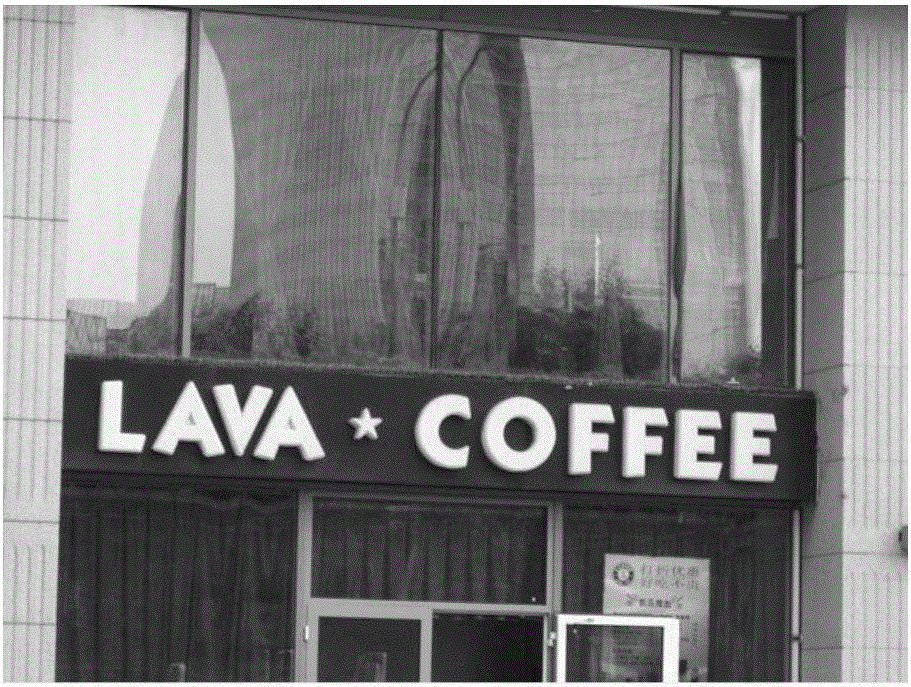

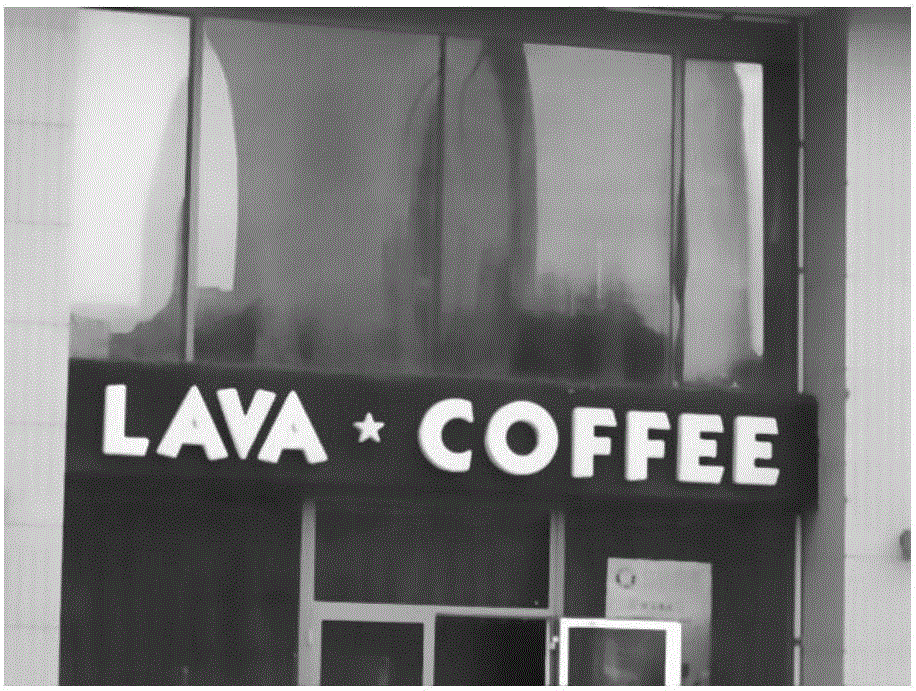

[0095] Embodiment 2: As shown in Figures 1-7, this embodiment detects the text in the source image shown in the accompanying Figure 2. Figure 2 is a scene image with a complex background: the whole image is heavily affected by lighting, and the geometric features of the background closely resemble those of the text, so traditional methods have difficulty detecting its text accurately. The steps for detecting the text regions in Figure 2 are as follows:

[0096] Step1: First, construct the training samples for text and background;

[0097] Step1.1: Collect text images and background images from the Internet, where the text images contain only text, with no background texture, and the background images contain no text.

[0098] Step1.2: Sample the text and background images collected in Step1.1 with a sliding window; the data in each n×n window are collected as a column vector (n²×1) (hereinafter collectively referred to as atoms, n is the size ...
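The sliding-window sampling of Step1.2 can be sketched as follows. This is a minimal sketch under assumptions not stated in the excerpt: the input is a 2-D grayscale NumPy array, the window stride is a hypothetical parameter `step` (the source is truncated before any stride is given), and each n×n window is flattened row by row; `extract_atoms` is a hypothetical name.

```python
import numpy as np


def extract_atoms(image, n, step=1):
    """Slide an n x n window over a 2-D grayscale image and stack each
    window, flattened row by row, as one n^2 x 1 column of the output.
    `step` (the stride) is an assumption, not taken from the source."""
    image = np.asarray(image, dtype=float)
    H, W = image.shape
    cols = []
    for r in range(0, H - n + 1, step):
        for c in range(0, W - n + 1, step):
            cols.append(image[r:r + n, c:c + n].reshape(-1))
    if not cols:
        # Image smaller than the window: no atoms can be extracted.
        return np.empty((n * n, 0))
    return np.stack(cols, axis=1)  # shape (n*n, number_of_windows)


# Usage: a 4x4 toy image with non-overlapping 2x2 windows.
img = np.arange(16).reshape(4, 4)
X = extract_atoms(img, 2, step=2)
print(X.shape)  # prints (4, 4)
```

Each column of `X` is one atom in the sense of Step1.2; a stride smaller than `n` gives overlapping windows and more training atoms per image.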
