Virtual reality interaction method and system

A virtual reality interaction method and system, applicable in fields such as telephone communication, electrical components, and devices with camera functions. It addresses problems concerning the cost of VR glasses and their heavy dependence on the surrounding environment, and achieves low dependence on the surrounding environment, high efficiency, and accuracy.

Embodiment 1

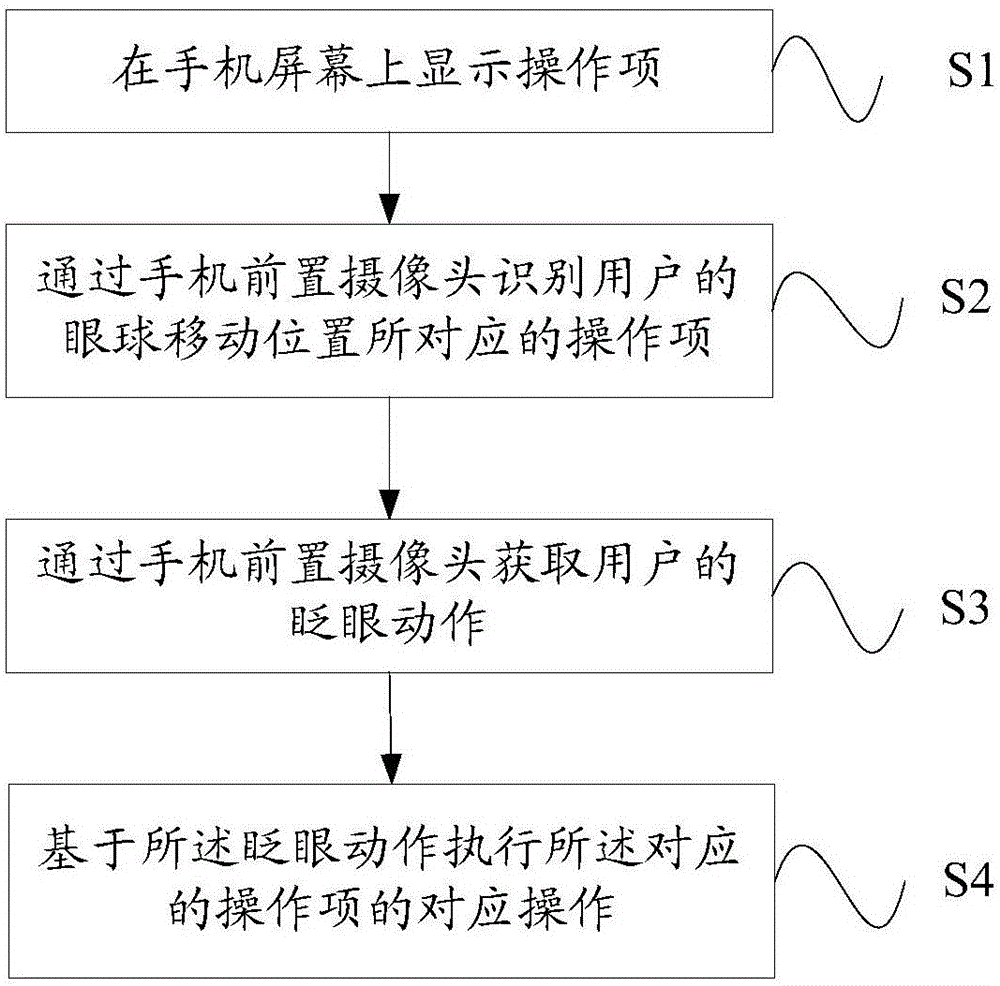

[0029] As shown in Figure 1, the present invention provides a virtual reality interaction method, including:

[0030] Step S1, displaying operation items on the screen of the mobile phone;

[0031] Step S2, identifying the operation item corresponding to the user's eye movement position through the front camera of the mobile phone;

[0032] Step S3, acquiring the blinking action of the user through the front camera of the mobile phone;

[0033] Step S4, performing the operation of the corresponding operation item based on the blinking action. The present invention first recognizes the position of the eyeball through the front camera to select an operation item, and then recognizes a blink through the front camera to confirm that operation item. No device other than the mobile phone is needed for interaction, and operation requires only one eye. Interaction is uninterrupted, requires no hand or mouth operation by the user, and has low dependence on th...
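Steps S3 and S4 do not specify how a blink is recognized from front-camera frames. One widely used heuristic, swapped in here purely for illustration, is the eye aspect ratio (EAR): the eyelid opening divided by the eye width, which drops sharply while the eye is closed. The sketch below assumes six landmarks per eye are already supplied by some face-landmark detector (not shown); the names `eye_aspect_ratio` and `BlinkDetector`, the 0.2 threshold, and the two-frame minimum are hypothetical choices, not values from the application.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 lie on the upper lid and p6/p5 on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class BlinkDetector:
    """Reports a blink when EAR stays below `threshold` for at least
    `min_frames` consecutive frames and then rises again."""

    def __init__(self, threshold: float = 0.2, min_frames: int = 2) -> None:
        self.threshold = threshold  # illustrative value, would need tuning per device
        self.min_frames = min_frames
        self._closed_frames = 0

    def update(self, ear: float) -> bool:
        """Feed one frame's EAR; return True on the frame where the eye reopens."""
        if ear < self.threshold:
            self._closed_frames += 1
            return False
        blinked = self._closed_frames >= self.min_frames
        self._closed_frames = 0
        return blinked


# Synthetic landmarks: a wide-open eye and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
detector = BlinkDetector()
ears = [eye_aspect_ratio(e) for e in (open_eye, closed_eye, closed_eye, open_eye)]
print([detector.update(ear) for ear in ears])  # → [False, False, False, True]
```

Requiring several consecutive low-EAR frames filters out single-frame landmark noise. Because a detected blink triggers an operation, a longer `min_frames` may help distinguish a deliberate confirming blink from an involuntary one.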

Embodiment 2

[0041] According to another aspect of the present application, a virtual reality interactive system is also provided, including:

[0042] A display module, configured to display operation items on the screen of the mobile phone;

[0043] A selection module, configured to identify, through the front camera of the mobile phone, the operation item corresponding to the user's eyeball movement position;

[0044] A confirmation module, configured to acquire the user's blinking action through the front camera of the mobile phone;

[0045] An execution module, configured to execute a corresponding operation of the corresponding operation item based on the blinking action.
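One way to picture how the four modules cooperate is a per-frame loop: the selection module updates the currently gazed item, the confirmation module reports whether a blink occurred, and the execution module runs the item's action only when both are present. This is a minimal sketch under stated assumptions: gaze is already reduced to a horizontal position in [0, 1) (or `None` while the eye is closed), items sit in equal-width columns, and all class, method, and item names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass
class OperationItem:
    name: str
    action: Callable[[], str]  # hypothetical: the action returns a status string


@dataclass
class DisplayModule:
    items: List[OperationItem] = field(default_factory=list)

    def show(self) -> List[str]:
        # Stand-in for rendering on the phone screen: list the visible item names.
        return [item.name for item in self.items]


class SelectionModule:
    """Tracks which item the user is looking at."""

    def __init__(self, display: DisplayModule) -> None:
        self.display = display
        self.current: Optional[OperationItem] = None

    def update(self, gaze_x: Optional[float]) -> Optional[OperationItem]:
        items = self.display.items
        # Only move the selection when there is a valid gaze reading.
        if gaze_x is not None and items and 0.0 <= gaze_x < 1.0:
            index = min(int(gaze_x * len(items)), len(items) - 1)
            self.current = items[index]
        return self.current


class ConfirmationModule:
    """Stand-in: a real system would run blink detection on camera frames."""

    def confirmed(self, blink_detected: bool) -> bool:
        return blink_detected


class ExecutionModule:
    def execute(self, item: Optional[OperationItem], confirmed: bool) -> Optional[str]:
        # Run the selected item's action only when a blink confirms it.
        if item is not None and confirmed:
            return item.action()
        return None


def run(display: DisplayModule,
        frames: Sequence[Tuple[Optional[float], bool]]) -> List[str]:
    """Process (gaze_x, blink) pairs frame by frame and collect executed results."""
    selection = SelectionModule(display)
    confirmation = ConfirmationModule()
    execution = ExecutionModule()
    results: List[str] = []
    for gaze_x, blink in frames:
        item = selection.update(gaze_x)
        result = execution.execute(item, confirmation.confirmed(blink))
        if result is not None:
            results.append(result)
    return results


display = DisplayModule([
    OperationItem("back", lambda: "went back"),
    OperationItem("play", lambda: "playing"),
    OperationItem("menu", lambda: "menu opened"),
])
print(display.show())  # → ['back', 'play', 'menu']
print(run(display, [(0.1, False), (0.5, False), (None, True)]))  # → ['playing']
```

Keeping the last selection when the gaze reading is `None` reflects that the eye is closed during the confirming blink, so its position cannot be measured at that moment.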

[0046] In the virtual reality interactive system according to an embodiment of the present application, the selection items displayed on the screen of the mobile phone by the display module are operation areas.
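Displaying selection items as operation areas means a gaze estimate only has to land somewhere inside a region rather than on a precise point. A minimal hit-testing sketch, assuming rectangular areas laid out as an equal grid in screen pixels and a gaze point already converted to pixel coordinates; `OperationArea`, `grid_layout`, `area_at`, and the 1080 × 1920 screen are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class OperationArea:
    """A rectangular operation area in screen pixels."""
    name: str
    left: int
    top: int
    width: int
    height: int

    def contains(self, x: float, y: float) -> bool:
        # Half-open bounds, so a point on a shared edge belongs to one area only.
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def grid_layout(names: Sequence[str], cols: int,
                screen_w: int, screen_h: int) -> List[OperationArea]:
    """Tile the screen into equal cells, one per name, filled row by row."""
    if not names:
        return []
    rows = -(-len(names) // cols)  # ceiling division
    cell_w, cell_h = screen_w // cols, screen_h // rows
    return [OperationArea(name, (i % cols) * cell_w, (i // cols) * cell_h, cell_w, cell_h)
            for i, name in enumerate(names)]


def area_at(areas: Sequence[OperationArea], x: float, y: float) -> Optional[OperationArea]:
    """Return the operation area containing the gaze point, or None if outside all areas."""
    for area in areas:
        if area.contains(x, y):
            return area
    return None


areas = grid_layout(["back", "play", "menu", "exit"], cols=2, screen_w=1080, screen_h=1920)
hit = area_at(areas, 600, 100)
print(hit.name if hit else None)  # → play
```

Larger areas trade the number of items per screen for tolerance to gaze-estimation error, which matters when the only sensor is a phone's front camera.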

[0047] In the virtual reality interactive system according to an embodiment of the present application, t...
