Visual odometry method based on convolutional neural network

Convolutional neural network and visual odometry technology, applied in the field of navigation and positioning, addresses problems such as insufficient matched point pairs, odometry estimation errors, and GPS signals being unable to provide positioning and navigation. The method achieves more robust feature points and more accurate odometry or pose estimation.

Detailed Description of the Embodiments

[0037] The present invention will be further described below in conjunction with the accompanying drawings.

[0038] Referring to Figures 1 to 5, a visual odometry method based on a convolutional neural network includes the following steps:

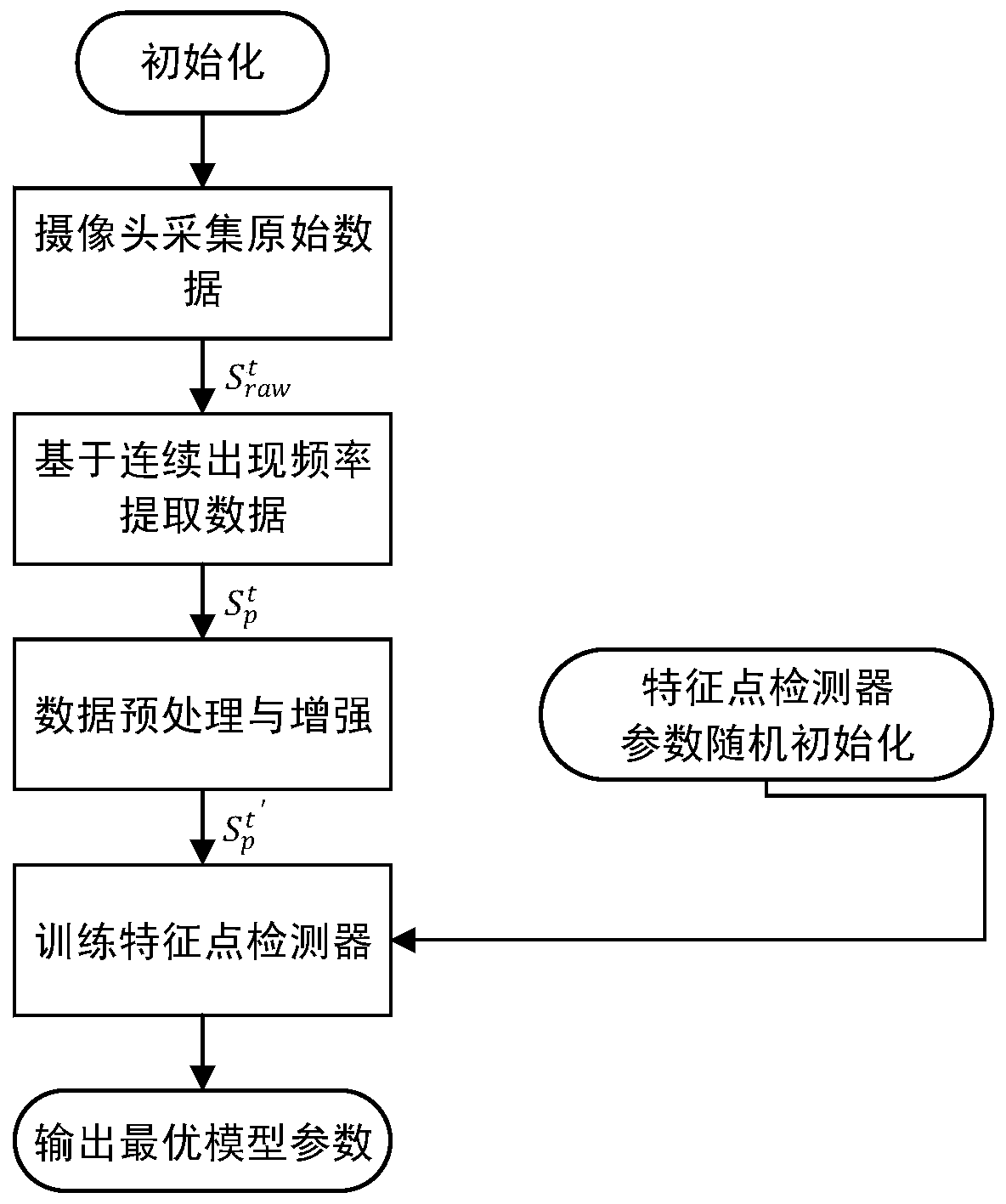

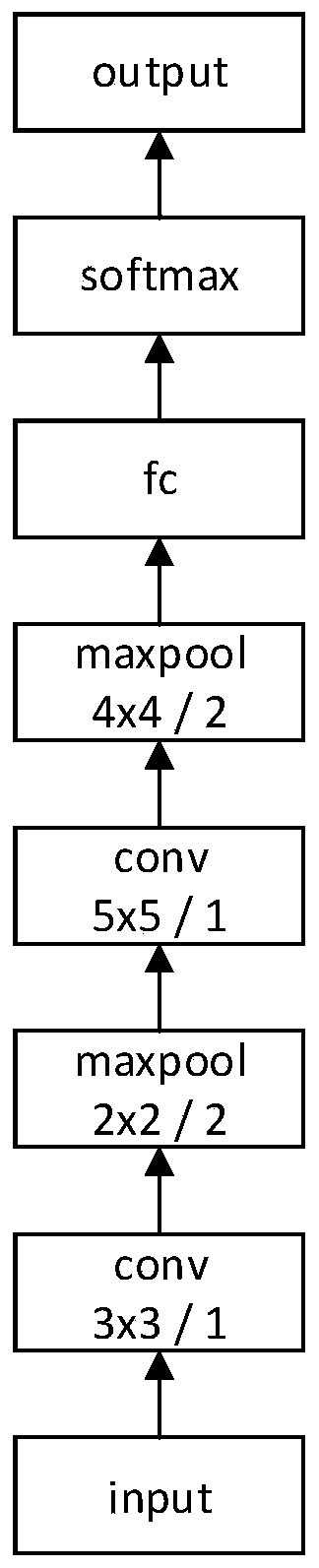

[0039] Step 1, collect raw environment data with the camera mounted on the mobile robot, and train a feature point detector A based on a convolutional neural network;

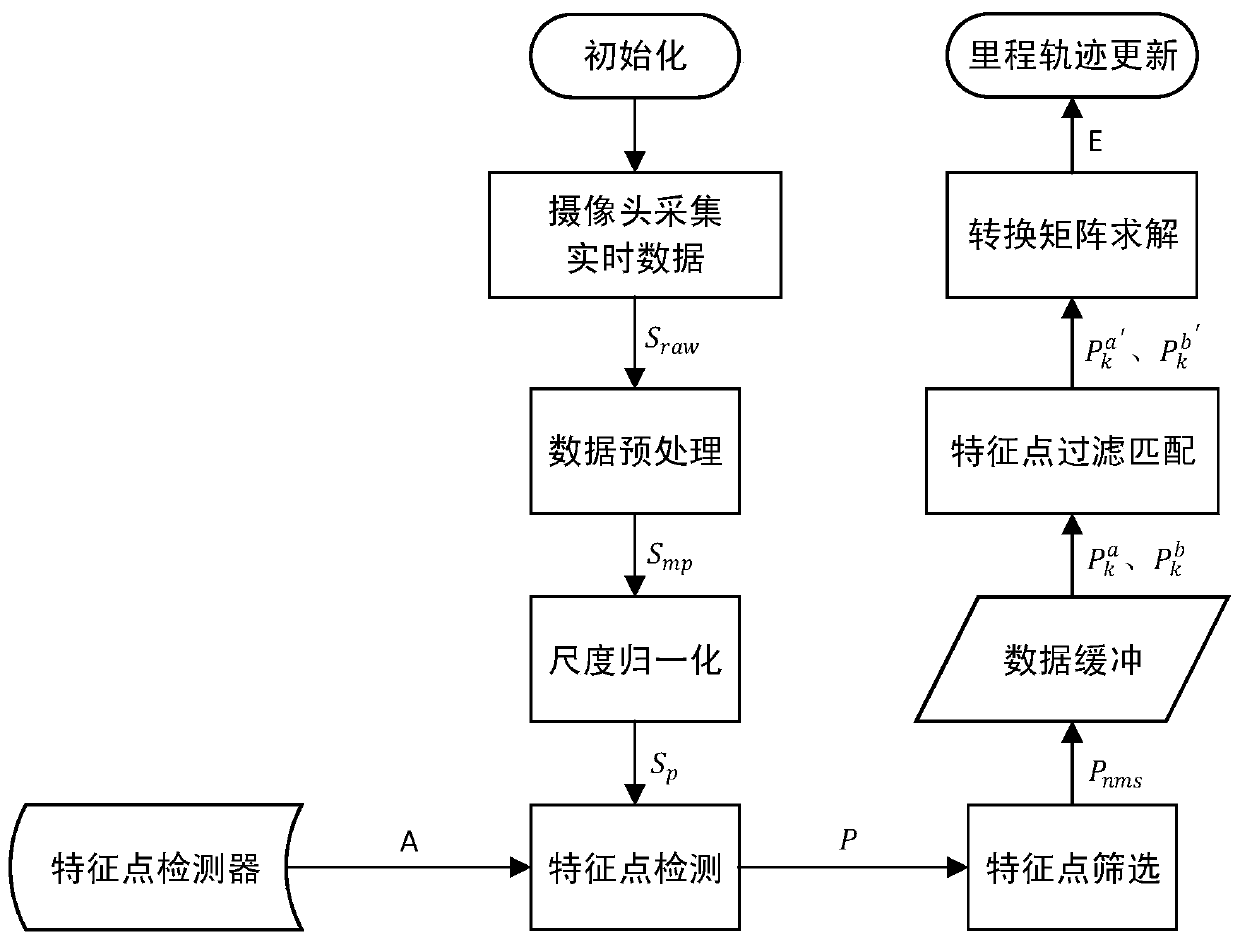

[0040] Step 2, the mobile robot performs the motion whose odometry is to be estimated, and the onboard camera collects the raw data to be estimated;

[0041] Step 3, apply sampling and cropping preprocessing to the raw data to be estimated collected by the camera to obtain the data to be detected;

[0042] Step 4, use the feature point detector A to screen the data to be detected and obtain feature point information;

[0043] Step 5, use the feature point information combined with the epipolar constraint method to solve the mot...
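
The epipolar constraint named in Step 5 can be illustrated with a small sketch. It is not the patent's own procedure (the text of Step 5 is truncated here); it is a generic linear eight-point estimate of the essential matrix E, which satisfies x2ᵀ E x1 = 0 for matched points x1, x2 in normalized image coordinates. The function names `skew`, `estimate_essential`, and `make_synthetic_pairs`, and the synthetic camera motion are all assumptions made for illustration; in the method described above the matched points would come from feature point detector A, and a practical system would normally add robust outlier rejection such as RANSAC.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def estimate_essential(x1, x2):
    """Linear eight-point estimate of E from N >= 8 matches.

    x1, x2: (N, 2) arrays of matched points in normalized image coordinates.
    The result is defined only up to scale and sign.
    """
    n = len(x1)
    x1h = np.column_stack([x1, np.ones(n)])
    x2h = np.column_stack([x2, np.ones(n)])
    # Each match contributes one row of A e = 0, taken from x2h^T E x1h = 0.
    A = np.einsum("ni,nj->nij", x2h, x1h).reshape(n, 9)
    _, _, vt = np.linalg.svd(A)
    E = vt[-1].reshape(3, 3)
    # Enforce the essential-matrix structure: two equal singular values, one zero.
    u, _, vt = np.linalg.svd(E)
    return u @ np.diag([1.0, 1.0, 0.0]) @ vt


def make_synthetic_pairs(n=20, seed=0):
    """Hypothetical test data: 3D points seen before and after a known motion."""
    rng = np.random.default_rng(seed)
    X1 = np.column_stack([rng.uniform(-1, 1, n),
                          rng.uniform(-1, 1, n),
                          rng.uniform(4, 8, n)])
    angle = 0.1  # rotation about the camera y axis, in radians (assumed)
    R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
                  [0.0, 1.0, 0.0],
                  [-np.sin(angle), 0.0, np.cos(angle)]])
    t = np.array([0.5, 0.0, 0.1])  # assumed translation between frames
    X2 = X1 @ R.T + t
    x1 = X1[:, :2] / X1[:, 2:]
    x2 = X2[:, :2] / X2[:, 2:]
    return x1, x2, R, t


x1, x2, R_true, t_true = make_synthetic_pairs()
E_est = estimate_essential(x1, x2)

# Epipolar residuals x2h^T E x1h, which should vanish for noise-free matches.
x1h = np.column_stack([x1, np.ones(len(x1))])
x2h = np.column_stack([x2, np.ones(len(x2))])
residuals = np.abs(np.einsum("ni,ij,nj->n", x2h, E_est, x1h))
print("max epipolar residual:", residuals.max())
```

The relative rotation and translation direction can then be recovered by decomposing E, for example with OpenCV's `cv2.recoverPose`; the translation obtained from a single camera is known only up to scale.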
