Texture mapping method, device and apparatus based on three-dimensional model

A texture mapping technology for 3D models, applied in the field of computer vision, that addresses problems such as poor results when mapping texture features onto a 3D model.

Problems solved by technology

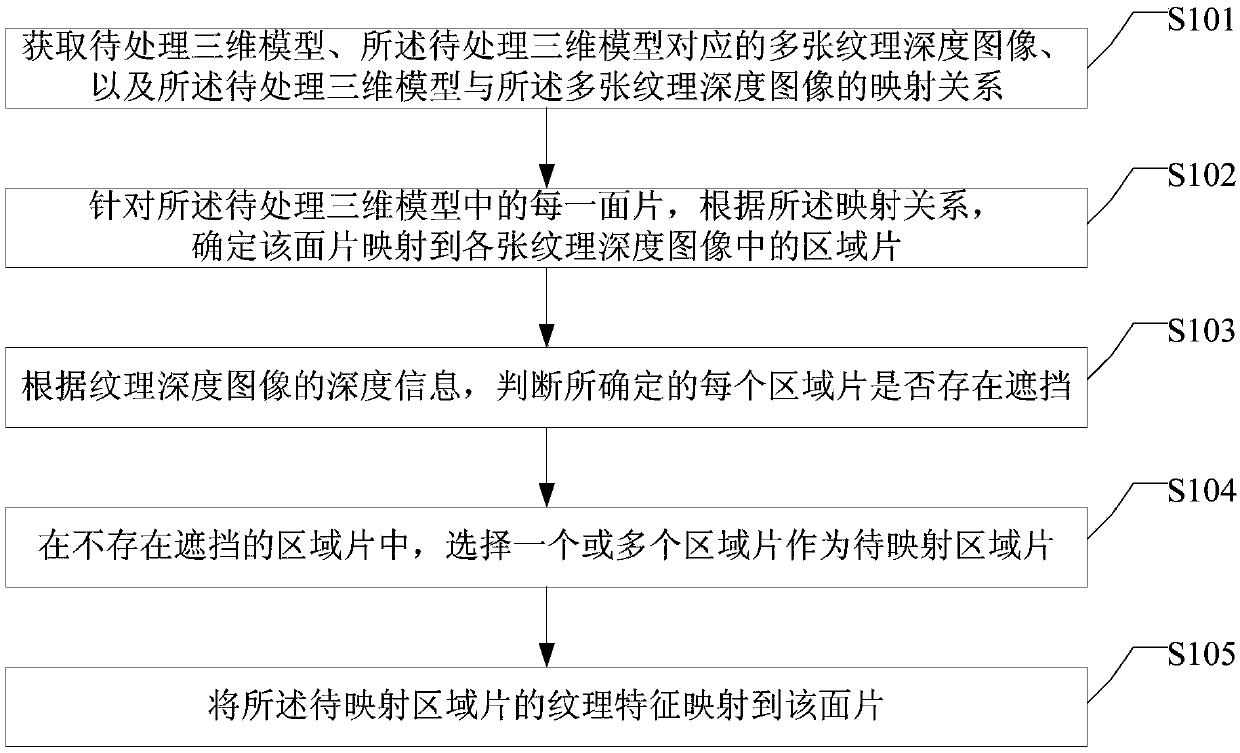

Examples

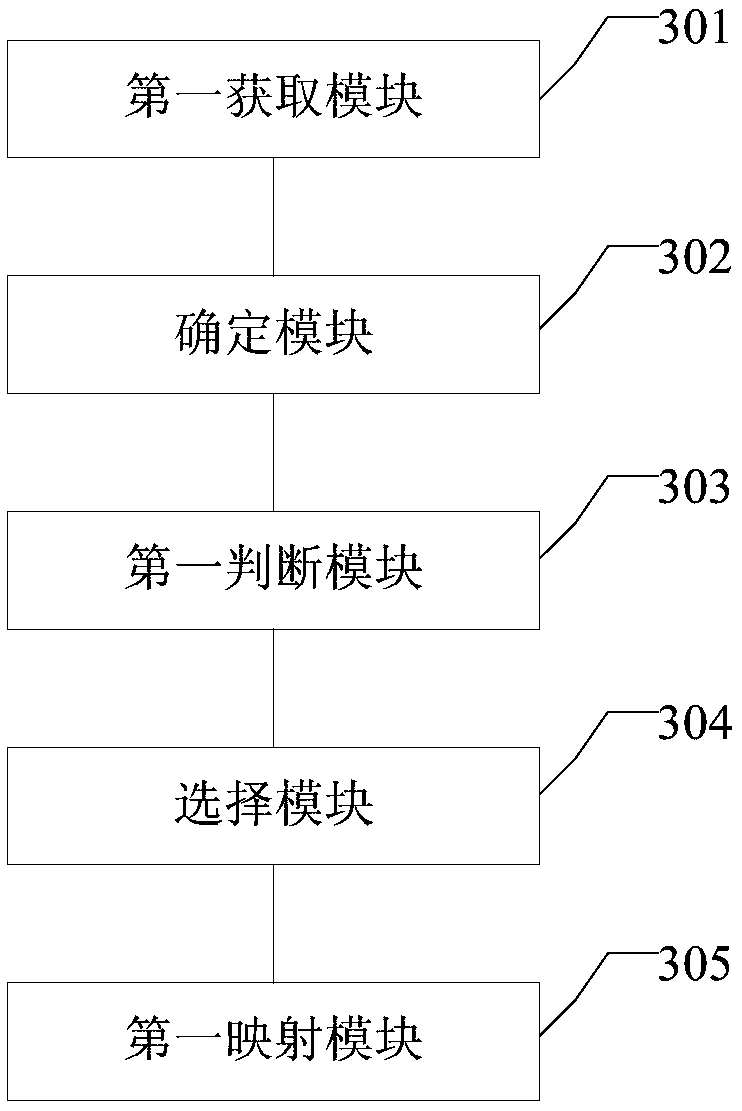

Embodiment approach

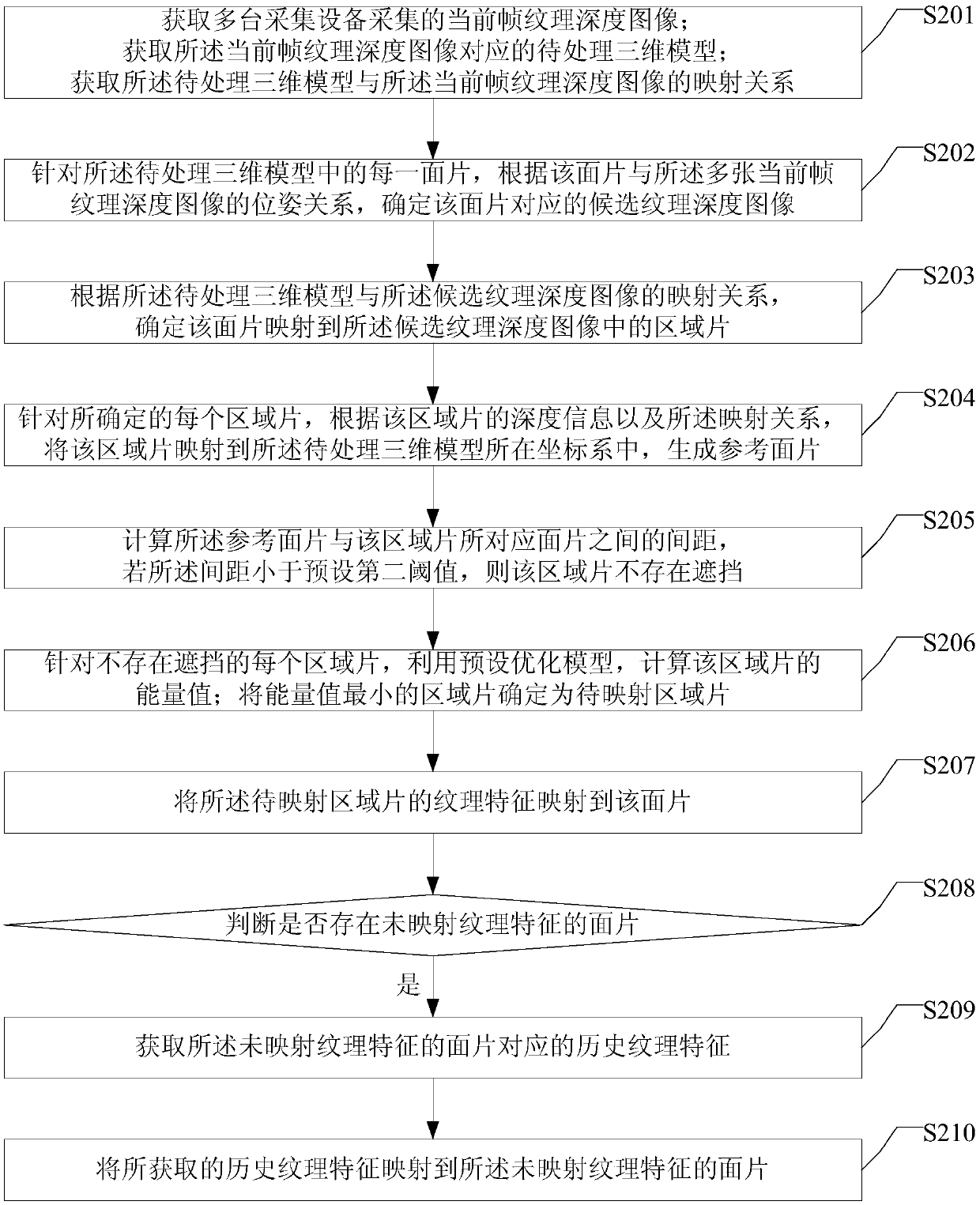

[0138] In one implementation, S103 may include:

[0139] For each determined region patch, map the region patch into the three-dimensional model to be processed, according to the depth information of the region patch and the mapping relationship, to generate a reference patch. Calculate the distance between the reference patch and the patch corresponding to the region patch, where the patch corresponding to the region patch is the patch that is mapped to that region patch according to the mapping relationship. If the distance is less than the preset second threshold, the region patch is not occluded.

[0140] Take the triangular patch F as an example again. The patch F contains three vertices A, B, and C. For vertex A, the projection point mapped onto the texture depth image I is A'. Suppose the depth value of A' in the texture depth image I is d(u_A, v_A); then A' is reverse-mapped according to the above mapping relationship, assum...
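The occlusion check in [0139] can be illustrated with a minimal Python sketch. It is not the patent's implementation. The excerpt does not give the mapping relationship, so a simple pinhole camera model with hypothetical intrinsics (`FX`, `FY`, `CX`, `CY`) stands in for it. The excerpt also does not define the distance between two patches, so the sketch assumes a per-vertex Euclidean distance. The names `project`, `back_project`, `is_unoccluded` and the `depth_at` lookup are all illustrative.

```python
from math import dist

# Hypothetical pinhole intrinsics; the actual mapping relationship
# is not given in this excerpt.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

def project(point):
    """Map a 3D point in camera coordinates to pixel coordinates (u, v)."""
    x, y, z = point
    return (FX * x / z + CX, FY * y / z + CY)

def back_project(u, v, depth):
    """Reverse-map a pixel and its depth value to a 3D point."""
    return ((u - CX) * depth / FX, (v - CY) * depth / FY, depth)

def is_unoccluded(patch, depth_at, threshold):
    """Return True when every vertex lies within `threshold` of its
    reference point (assumed per-vertex distance between patches)."""
    for vertex in patch:
        u, v = project(vertex)
        # Depth value read from the texture depth image at the projection.
        reference = back_project(u, v, depth_at(u, v))
        if dist(vertex, reference) >= threshold:
            return False
    return True

# Triangular patch F with vertices A, B, C, all at depth 2.0.
patch_f = [(0.0, 0.0, 2.0), (0.1, 0.0, 2.0), (0.0, 0.1, 2.0)]

# The depth image agrees with the model: no occlusion.
visible = is_unoccluded(patch_f, lambda u, v: 2.0, threshold=0.05)

# The depth image sees a nearer surface (depth 1.0): the patch is occluded.
hidden = is_unoccluded(patch_f, lambda u, v: 1.0, threshold=0.05)
```

In this sketch `threshold` plays the role of the preset second threshold. A real depth image would be sampled at integer pixel positions rather than through a constant function.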
