Video face recognition method based on aggregation adversarial network

A face recognition and aggregation network technology, applied in character and pattern recognition, instruments, computer components, etc., that addresses the low accuracy and low efficiency of video face recognition and improves recognition efficiency and performance.



Examples

Embodiment 1

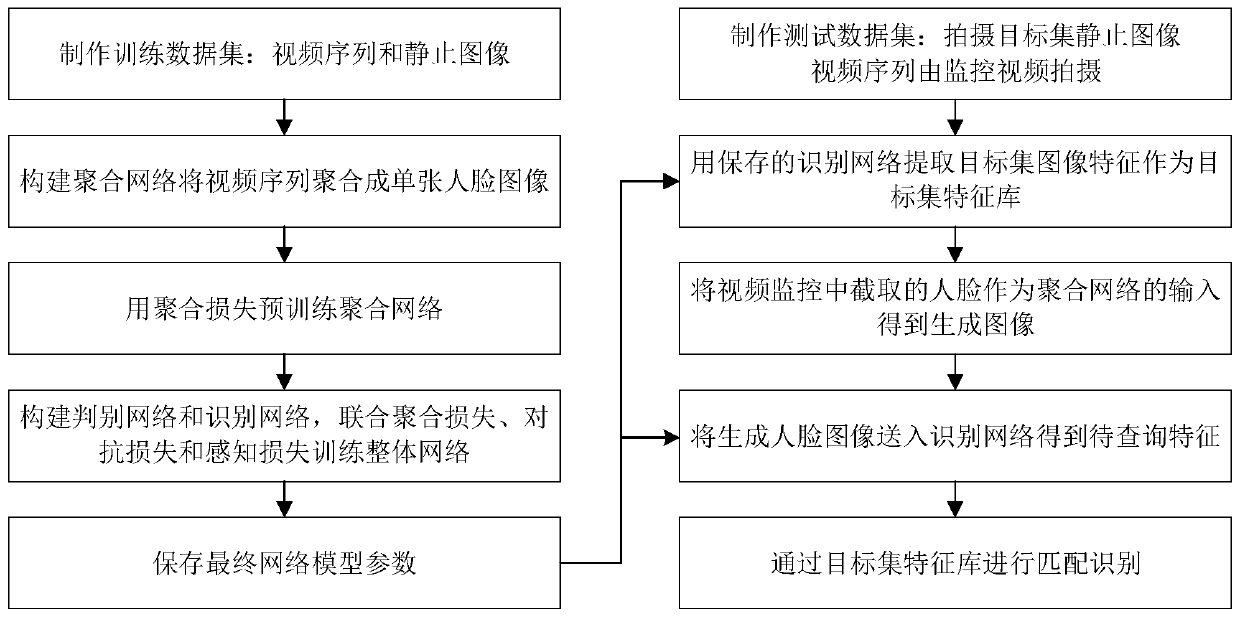

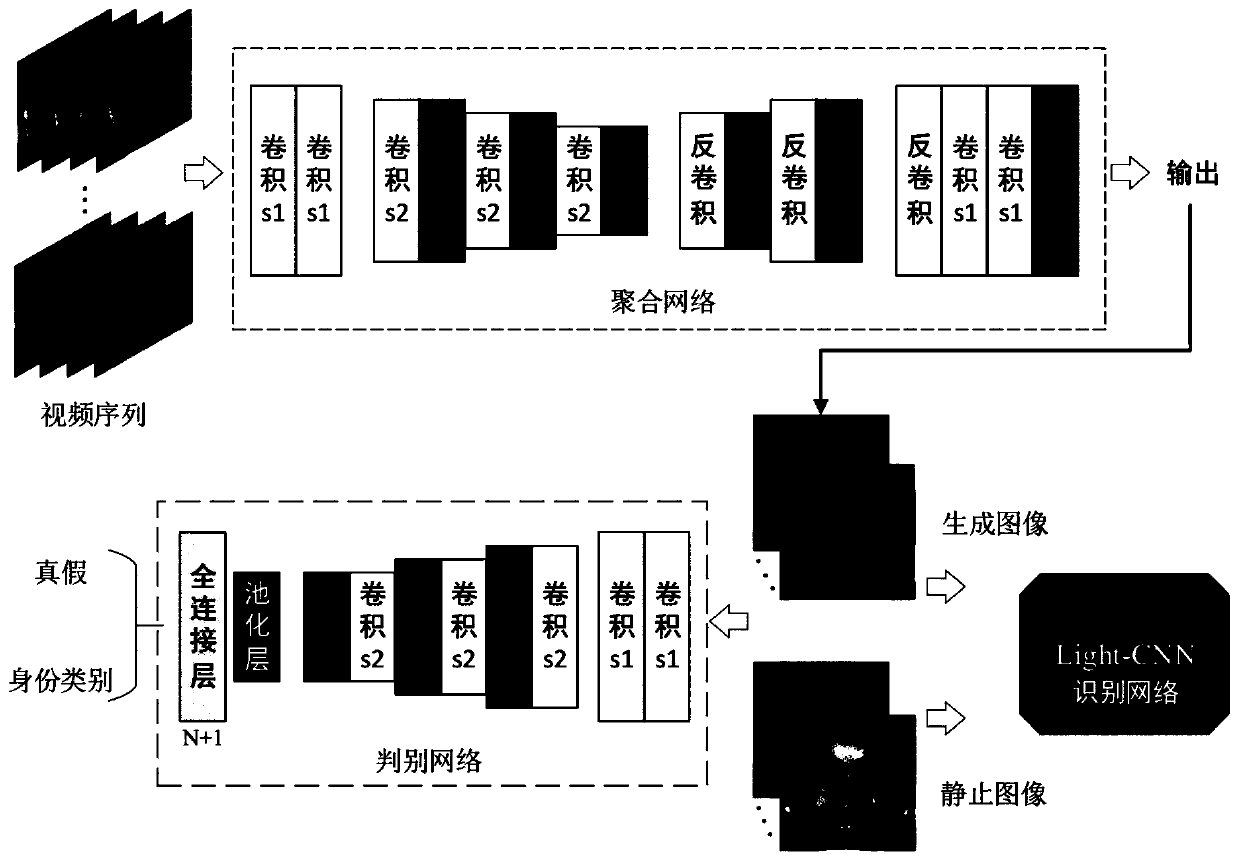

[0045] This embodiment provides a video face recognition method based on an aggregation adversarial network (see Figure 1). The method includes:

[0046] Step 1: Obtain the training set, which consists of the video sequence dataset V and the corresponding static image dataset S:

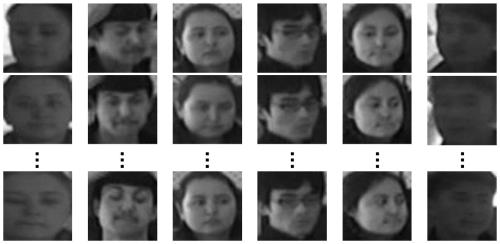

[0047] Step 1.1: Obtain the training video sequence dataset, denoted $V=\{v_1, v_2, \ldots, v_i, \ldots, v_N\}$, where $v_i$ represents the video sequences of the $i$-th class, $i=1,2,\ldots,N$, and $N$ is the number of video sequence classes;

[0048] In practice, $N$ is the number of distinct people appearing in $V$, and all video sequences of the same person form one class.
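
The grouping of video sequences into per-person classes can be sketched in Python. This is a minimal illustration under assumptions the source does not state: each clip arrives tagged with a person identifier, a class $v_i$ is simply the list of clips sharing that identifier, and classes are numbered in order of first appearance. The function name `build_video_classes` is hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Hashable, Iterable, List, Tuple

def build_video_classes(
    clips: Iterable[Tuple[Hashable, Any]],
) -> Tuple[List[Hashable], List[List[Any]]]:
    """Group (person_id, clip) records into classes v_1, ..., v_N (hypothetical helper).

    Returns the class identities in order of first appearance and, for each
    identity, the list of video clips of that person (one class per person).
    """
    by_person: Dict[Hashable, List[Any]] = defaultdict(list)
    for person_id, clip in clips:
        # All clips of the same person go into the same class
        by_person[person_id].append(clip)
    # dicts keep insertion order (Python 3.7+), so class order = first appearance
    person_ids = list(by_person)
    V = [by_person[pid] for pid in person_ids]
    return person_ids, V

# Usage: three clips of two people yield N = 2 classes
ids, V = build_video_classes([("alice", ["a0", "a1"]), ("bob", ["b0"]), ("alice", ["a2"])])
print(ids, len(V))  # ['alice', 'bob'] 2
```

Here `len(V)` plays the role of $N$; indices are 0-based in the code, whereas the text numbers classes from 1.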

[0049] Step 1.2: Obtain the static image dataset corresponding to $V$, denoted $S=\{s_1, s_2, \ldots, s_i, \ldots, s_N\}$, where $s_i$ denotes the static image corresponding to the $i$-th class;
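
One way to hold $V$ and $S$ together so that every class $i$ has exactly one static image $s_i$ is sketched below. The container `PairedTrainingSet` and its `pairs` method are hypothetical; the source does not specify how the two datasets are stored or fed to the network, and classes are 0-based here rather than 1-based as in the text.

```python
from dataclasses import dataclass
from typing import Any, Iterator, List, Tuple

@dataclass
class PairedTrainingSet:
    """Hypothetical container pairing video classes with their static images."""
    V: List[List[Any]]  # V[i]: video sequences of class i
    S: List[Any]        # S[i]: static image of class i

    def __post_init__(self) -> None:
        # Each class needs exactly one static image, so |V| must equal |S| (= N)
        if len(self.V) != len(self.S):
            raise ValueError(f"V has {len(self.V)} classes but S has {len(self.S)} images")

    @property
    def num_classes(self) -> int:
        return len(self.V)

    def pairs(self) -> Iterator[Tuple[int, Any, Any]]:
        """Yield (class index, video sequence, static image of that class)."""
        for i, (sequences, image) in enumerate(zip(self.V, self.S)):
            for seq in sequences:
                yield i, seq, image

# Usage: two classes, the first with two video sequences
ds = PairedTrainingSet(V=[[["a0"], ["a1"]], [["b0"]]], S=["img_a", "img_b"])
for i, seq, img in ds.pairs():
    print(i, seq, img)
```

Validating the class count at construction time catches a missing or extra static image before any training starts.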

[0050] In practice, $S$ can be captured with a high-definition camera, but in some actual video sur...
