Action recognition method based on a dual-stream spatio-temporal attention mechanism

An action recognition and attention technology, applied in the fields of video classification and computer vision, that addresses the problems of complex temporal attention network structures, low recognition efficiency, and inaccurate regions.

Embodiment Construction

[0044] The technical solution of the present invention will be further described in detail below in conjunction with the accompanying drawings.

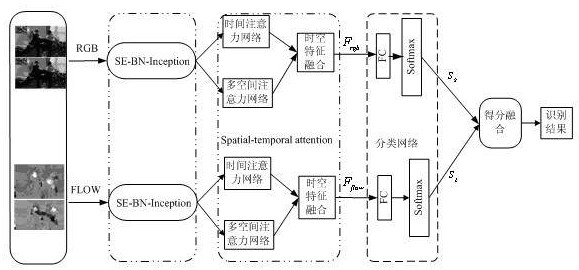

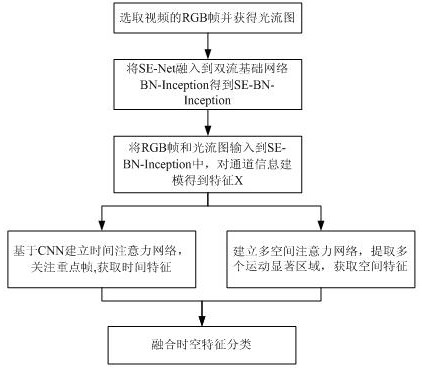

[0045] The present invention provides T-STAM, an action recognition method based on a dual-stream spatio-temporal attention mechanism. Referring to Figure 1, the method includes the following steps.

[0046] S1: Process the video to select RGB frames, and compute the optical flow maps of the selected RGB frames;
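The text does not say how the RGB frames in S1 are chosen. As a minimal sketch, assuming a segment-based sparse sampling scheme (one frame per equal-length segment), the selection and the frame pairs that an optical flow routine would consume could look like the following. The function names `sample_segment_indices` and `flow_pairs`, the centre-of-segment rule, and the choice to pair each frame with its successor are all illustrative assumptions, not details taken from the patent; the optical flow algorithm itself is left out.

```python
def sample_segment_indices(num_frames, num_segments):
    """Pick one frame index per equal-length segment (the segment centre).

    Assumption: the patent only says RGB frames are "selected"; this
    segment-based rule is a hypothetical stand-in for that step.
    """
    if num_frames <= 0 or num_segments <= 0:
        raise ValueError("num_frames and num_segments must be positive")
    seg_len = num_frames / num_segments
    return [
        min(num_frames - 1, int(seg_len * i + seg_len / 2))
        for i in range(num_segments)
    ]


def flow_pairs(indices, num_frames):
    """Pair each selected frame with a neighbour for optical flow.

    Assumption: flow for frame t is computed between t and t + 1; at the
    last frame of the video the pair falls back to (t - 1, t).
    """
    pairs = []
    for t in indices:
        if t + 1 < num_frames:
            pairs.append((t, t + 1))
        else:
            pairs.append((max(0, t - 1), t))
    return pairs


# Example: a 30-frame clip split into 3 segments.
selected = sample_segment_indices(30, 3)
pairs = flow_pairs(selected, 30)
print(selected)
print(pairs)
```

Each pair would then be passed to whichever optical flow estimator the implementation uses, producing the flow maps that feed the temporal stream.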

[0047] S2: A channel attention mechanism learns the importance of each feature channel, then uses that importance to enhance the channel features that are useful for the current recognition task and suppress those that contribute little. The present invention therefore introduces the channel attention network SE-Net into the dual-stream base network BN-Inception, yielding SE-BN-Inception, which can model channel features. The channel attention network SE-Net is introduced into the dual-stream basic...
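The channel reweighting that S2 attributes to SE-Net can be illustrated with a squeeze-and-excitation block: global average pooling per channel, two small fully connected layers, a sigmoid gate, and a per-channel rescale. The sketch below is plain Python on nested lists so that it runs without dependencies. The function name `se_block`, the omission of biases, and the hand-picked weights are assumptions for illustration; it does not show how the patent wires SE-Net into BN-Inception.

```python
import math


def se_block(feature_map, w1, w2):
    """Apply a squeeze-and-excitation gate to a C x H x W feature map.

    feature_map: list of C channels, each a list of H rows of W floats.
    w1: reduction weights, shape (C // r) x C.
    w2: expansion weights, shape C x (C // r).
    Biases are omitted for brevity (an assumption of this sketch).
    Returns (rescaled feature map, per-channel gates).
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excitation, part 1: fully connected layer followed by ReLU.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed)))
        for row in w1
    ]
    # Excitation, part 2: fully connected layer followed by sigmoid.
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [
        [[v * g for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
    return scaled, gates


# Example with two 2x2 channels and hypothetical weights (reduction to 1 unit).
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
w1 = [[1.0, 1.0]]
w2 = [[1.0], [-1.0]]
scaled, gates = se_block(fmap, w1, w2)
print(gates)
```

With these weights the first channel receives a gate close to 1 and the second a gate close to 0, which is the enhance-or-suppress behaviour the paragraph describes. In a trained network the weights are learned rather than set by hand.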
