Real-time human body action recognition and counting method

A human action recognition and counting method, applied in fields such as character and pattern recognition, calculation, and counting input signals from several signal sources. Its technical effect is accurate counting and real-time recognition of human movements.

Embodiment Construction

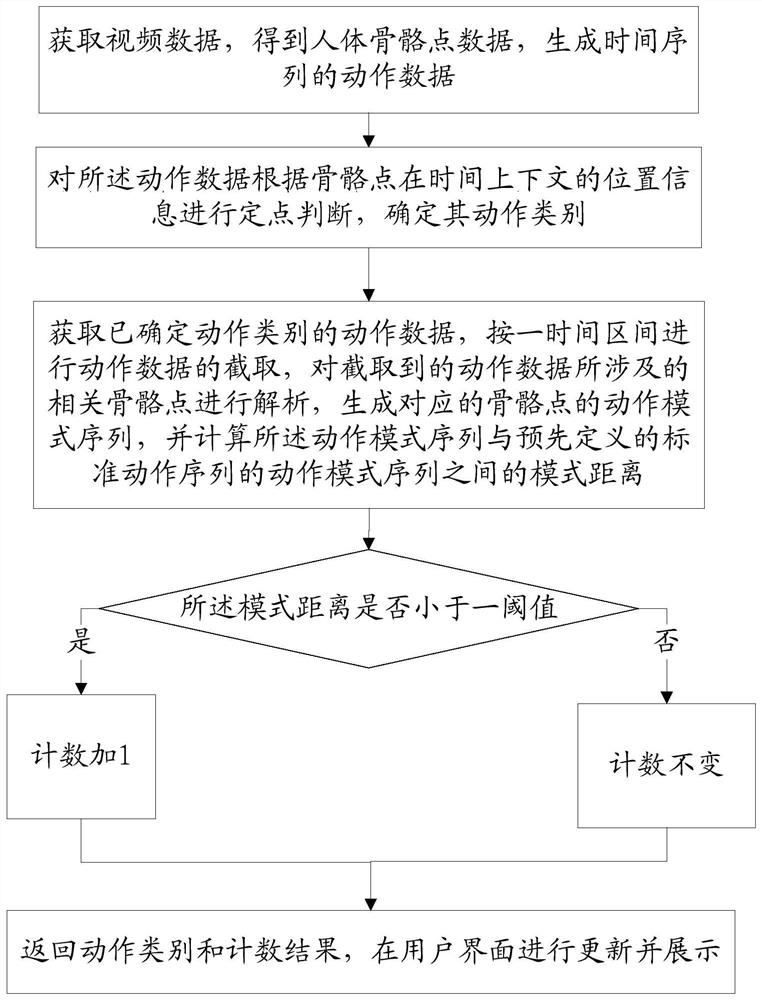

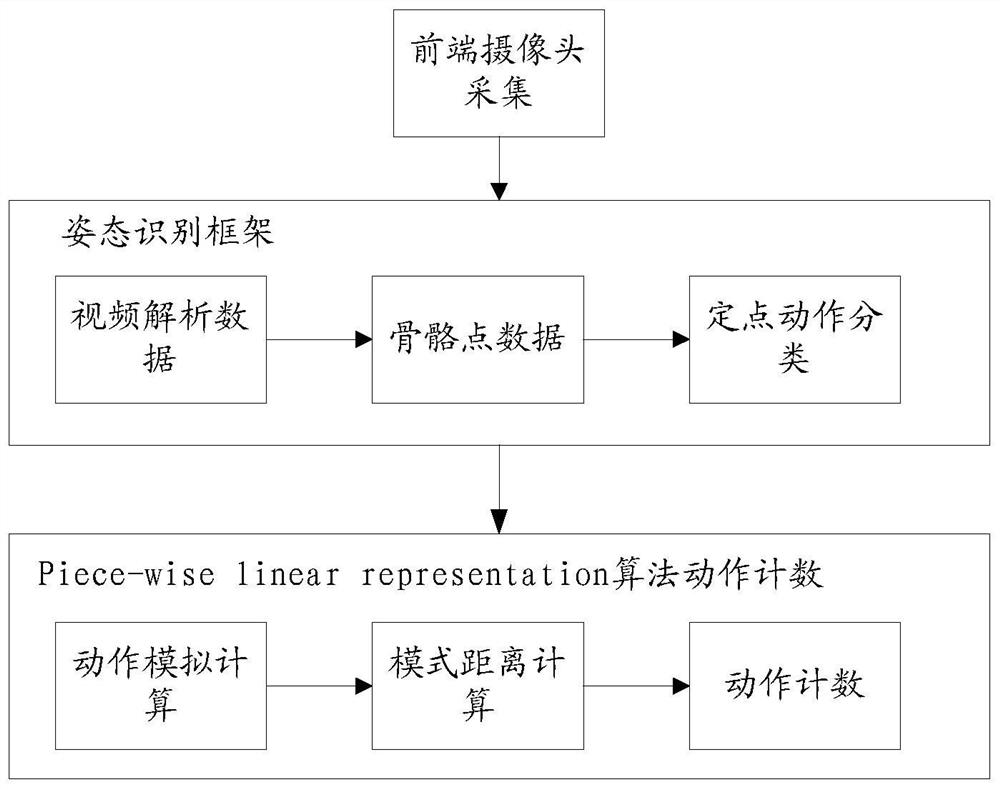

[0032] As shown in Figure 1 and Figure 2, the real-time human action recognition and counting method of the present invention comprises:

[0033] Step 10: Obtain video data, obtain human skeleton point data from it, and generate time-series action data;

[0034] Step 20: Perform fixed-point judgment on the action data according to the position information of the skeleton points in their temporal context, and determine the action category;

[0035] Step 30: Acquire the action data of the determined action category, segment the action data according to a time interval, analyze the skeleton points involved in each segment, generate the action pattern sequence of those skeleton points, and calculate the pattern distance between this action pattern sequence and the action pattern sequence of the predefined standard action sequence. Judge whether the pattern distance is less than a threshold; if so, add 1 to the count, otherwise the count remains...
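The counting logic of Step 30 can be illustrated with a minimal Python sketch. The excerpt does not define how the action pattern sequence is encoded or which pattern distance is used, so everything specific here is an assumption made for illustration: the pattern sequence is taken to be the rise/fall trend (`"U"`/`"D"`) of a single skeleton-point coordinate, the pattern distance is taken to be the Levenshtein edit distance, and the names `pattern_sequence`, `pattern_distance`, `count_actions`, `eps`, `window`, and `threshold` are hypothetical.

```python
from typing import List, Sequence


def pattern_sequence(values: Sequence[float], eps: float = 0.01) -> List[str]:
    """Encode a 1-D skeleton-point trajectory as trend symbols (assumed encoding).

    'U' marks a rise and 'D' a fall; changes no larger than eps are ignored
    and consecutive identical symbols are merged.
    """
    pattern: List[str] = []
    for prev, cur in zip(values, values[1:]):
        delta = cur - prev
        if abs(delta) <= eps:
            continue  # treat tiny movements as noise
        symbol = "U" if delta > 0 else "D"
        if not pattern or pattern[-1] != symbol:
            pattern.append(symbol)
    return pattern


def pattern_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein edit distance, used here as a stand-in pattern distance."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution
        prev = cur
    return prev[-1]


def count_actions(trajectory: Sequence[float],
                  standard_pattern: Sequence[str],
                  window: int,
                  threshold: int) -> int:
    """Cut the trajectory into fixed-length segments and count each segment
    whose pattern distance to the standard pattern is below the threshold."""
    count = 0
    for start in range(0, len(trajectory) - window + 1, window):
        segment = trajectory[start:start + window]
        if pattern_distance(pattern_sequence(segment), standard_pattern) < threshold:
            count += 1  # distance below threshold: add 1 to the count
        # otherwise the count is left unchanged
    return count


# Hypothetical hip-height samples: three squat-like repetitions, then standing still.
repetition = [1.0, 0.8, 0.6, 0.8, 1.0]
hip_height = repetition * 3 + [1.0] * 5
print(count_actions(hip_height, ["D", "U"], window=5, threshold=1))
```

In this sketch each five-sample segment that falls and then rises matches the assumed standard pattern `["D", "U"]` exactly, while the final motionless segment yields an empty pattern and is not counted. A real system would derive patterns from several skeleton points at once and would need segments aligned to the actual repetition boundaries.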
