Image fusion method based on local contrast preprocessing

A technology involving local contrast and image fusion, applied in the field of image fusion, that avoids spatial discontinuity.

Embodiment Construction

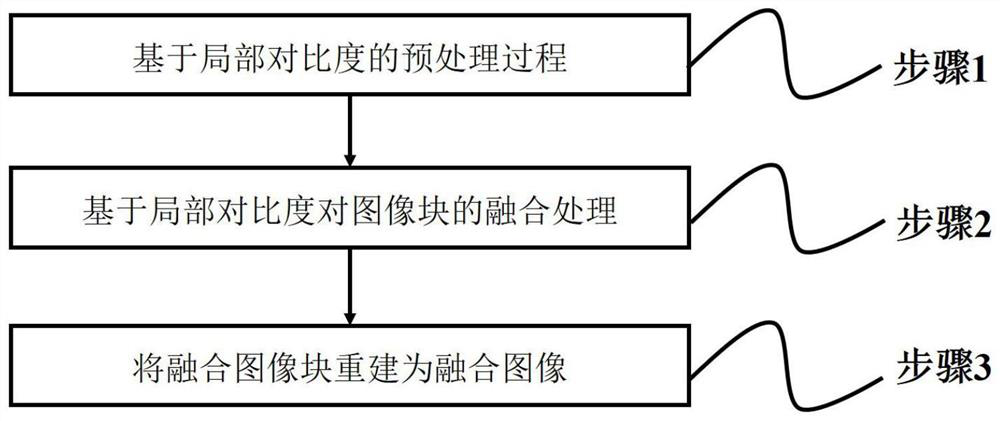

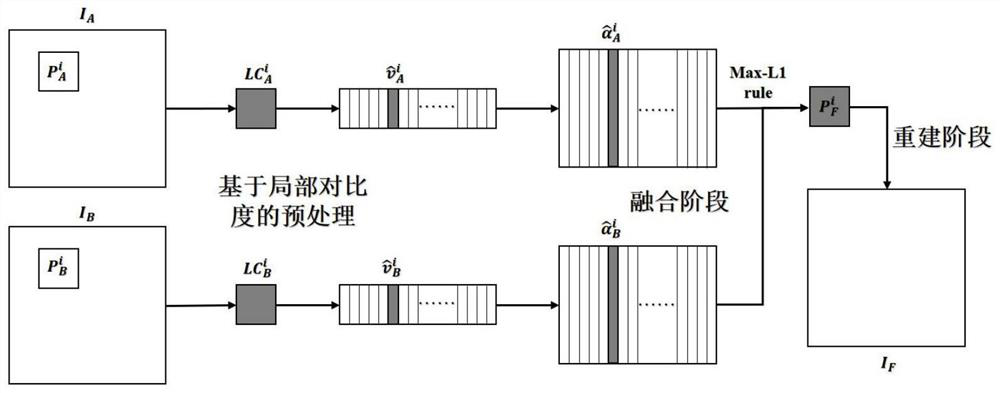

[0038] In this embodiment, the sparse representation image fusion method based on local contrast preprocessing is described for the case of two source images, as shown in Figure 2. When there are more than two source images, the method extends by analogy. Specifically, referring to Figure 1, the method includes the following steps:

[0039] Step 1, preprocessing stage:

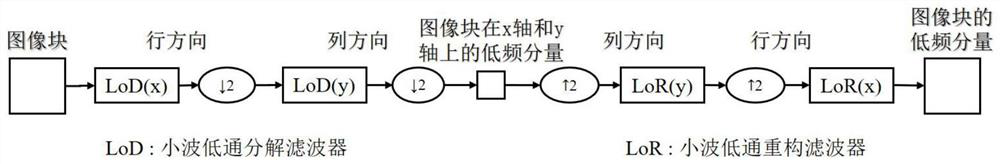

[0040] Step 1.1. Obtain two registered source images of the same scene, $I_A, I_B \in \mathbb{R}^{M\times N}$, each of size M×N, where $\mathbb{R}^{M\times N}$ denotes the set of real matrices with M rows and N columns. Using a sliding window, divide each of the two source images $I_A$ and $I_B$ into a series of image blocks. This yields K segmented image blocks for each source image: the i-th block of $I_A$ is the i-th image block of source image $I_A$ after segmentation, and the i-th block of $I_B$ is the i-th image block of source image $I_B$ after segmentation. Here m denotes the size of a dictionary atom.
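
The sliding-window segmentation in Step 1.1 can be sketched as follows. This is a minimal illustration, not the patented implementation: the source text does not state the block size or the window stride, so the sketch assumes square blocks of side √m (so that each flattened block has length m, matching the dictionary atom size), a stride of 1, and row-major vectorization of each block into a column. The function names `extract_patches` and `patches_for_sources` are hypothetical. Passing a list of images also covers the case of more than two registered source images.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def extract_patches(image, patch_side, stride=1):
    """Hypothetical helper: slide a patch_side x patch_side window over a 2-D image.

    Assumption: blocks are square with side sqrt(m), so each block flattens to a
    column of length m = patch_side**2. Returns an (m, K) array whose i-th column
    is the i-th block, with windows ordered row by row.
    """
    img = np.asarray(image, dtype=float)
    if img.ndim != 2:
        raise ValueError("expected a 2-D (M x N) image")
    if patch_side > min(img.shape):
        raise ValueError("window is larger than the image")
    # Shape (M - p + 1, N - p + 1, p, p): one p x p view per window position.
    windows = sliding_window_view(img, (patch_side, patch_side))
    windows = windows[::stride, ::stride]  # assumed stride along rows and columns
    m = patch_side * patch_side
    # Flatten each block row-major and place it in a column.
    return windows.reshape(-1, m).T


def patches_for_sources(images, patch_side, stride=1):
    """Segment any number of registered source images with the same window grid."""
    shapes = {np.shape(im) for im in images}
    if len(shapes) != 1:
        raise ValueError("registered source images must share the same M x N size")
    return [extract_patches(im, patch_side, stride) for im in images]


# Usage: two random 8 x 10 "source images" with 4 x 4 blocks (m = 16).
rng = np.random.default_rng(0)
I_A = rng.random((8, 10))
I_B = rng.random((8, 10))
P_A, P_B = patches_for_sources([I_A, I_B], patch_side=4)
print(P_A.shape)  # (16, 35): K = (8 - 4 + 1) * (10 - 4 + 1) = 35
```

With stride 1, every pixel position that can host a full window contributes one block, so K = (M − √m + 1)(N − √m + 1). Because the i-th column of each matrix comes from the same spatial location in every source image, corresponding blocks can be compared or fused column by column.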

[0041] Step 1.2, respectively calcula...
