Video encoding and decoding methods, video encoder, and video decoder

A video encoding and decoding technology, applied in the fields of video encoding methods, decoding methods, video encoders, and decoders, with an effect on transmission efficiency.


Examples

Embodiment 1

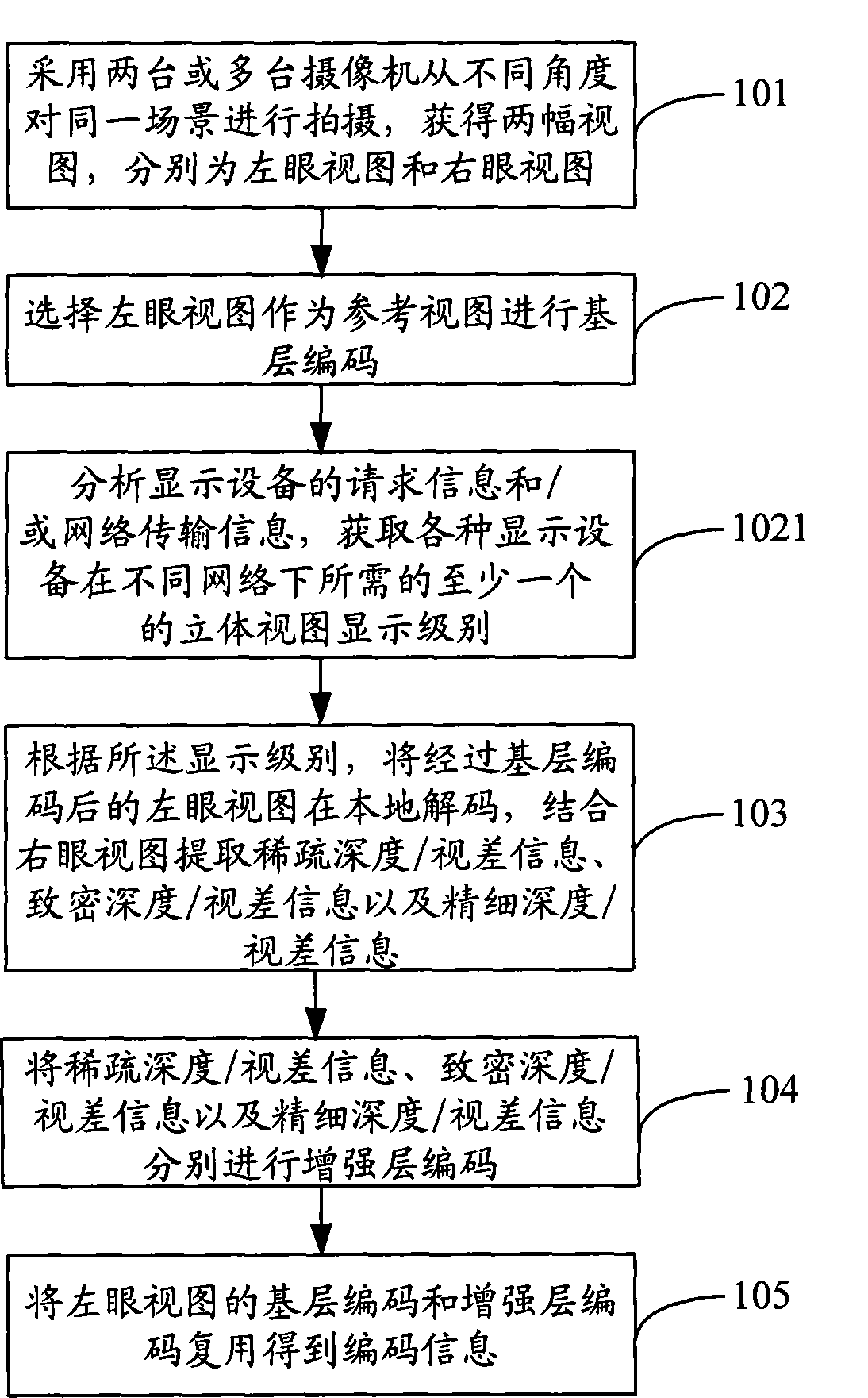

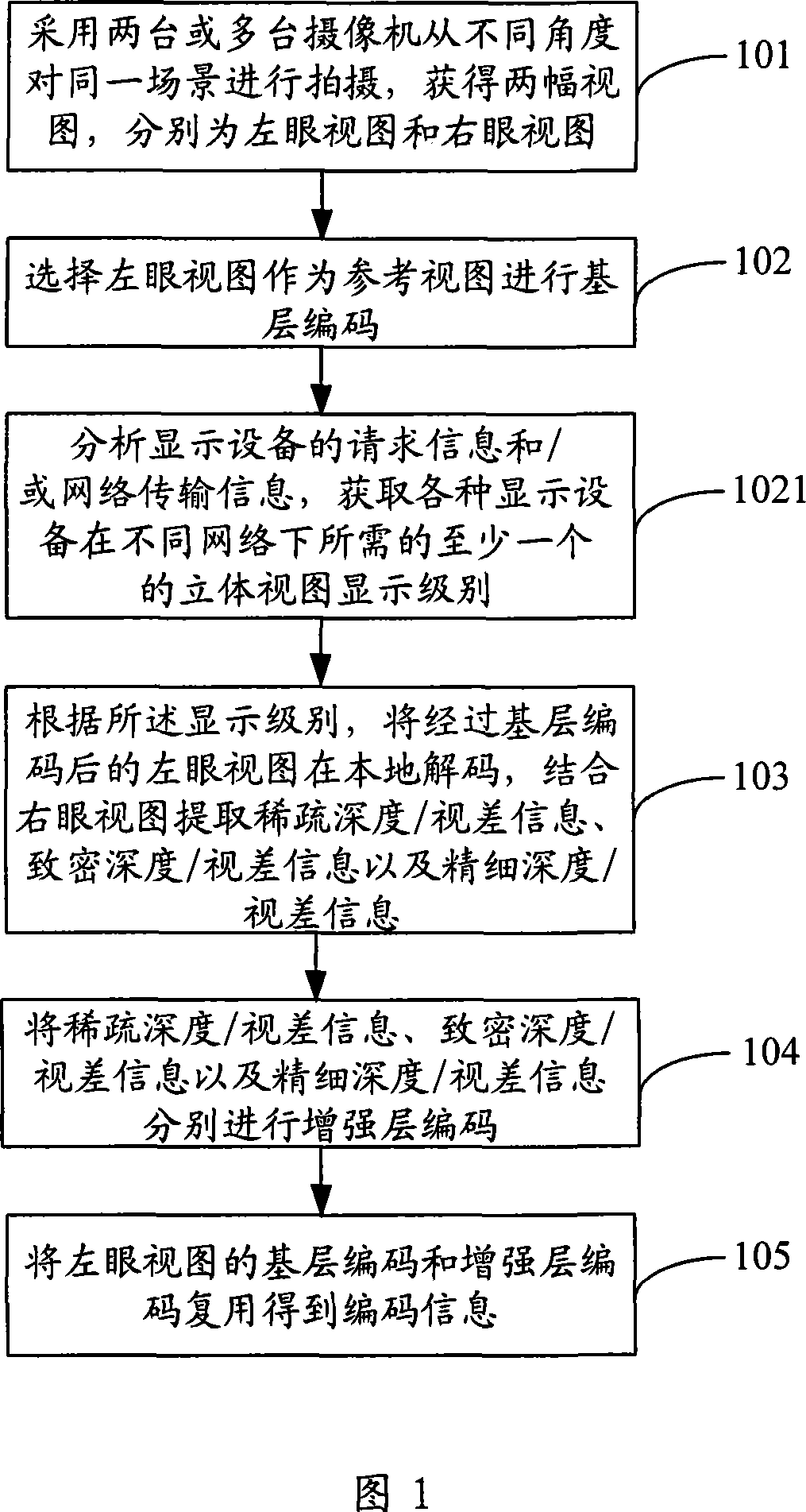

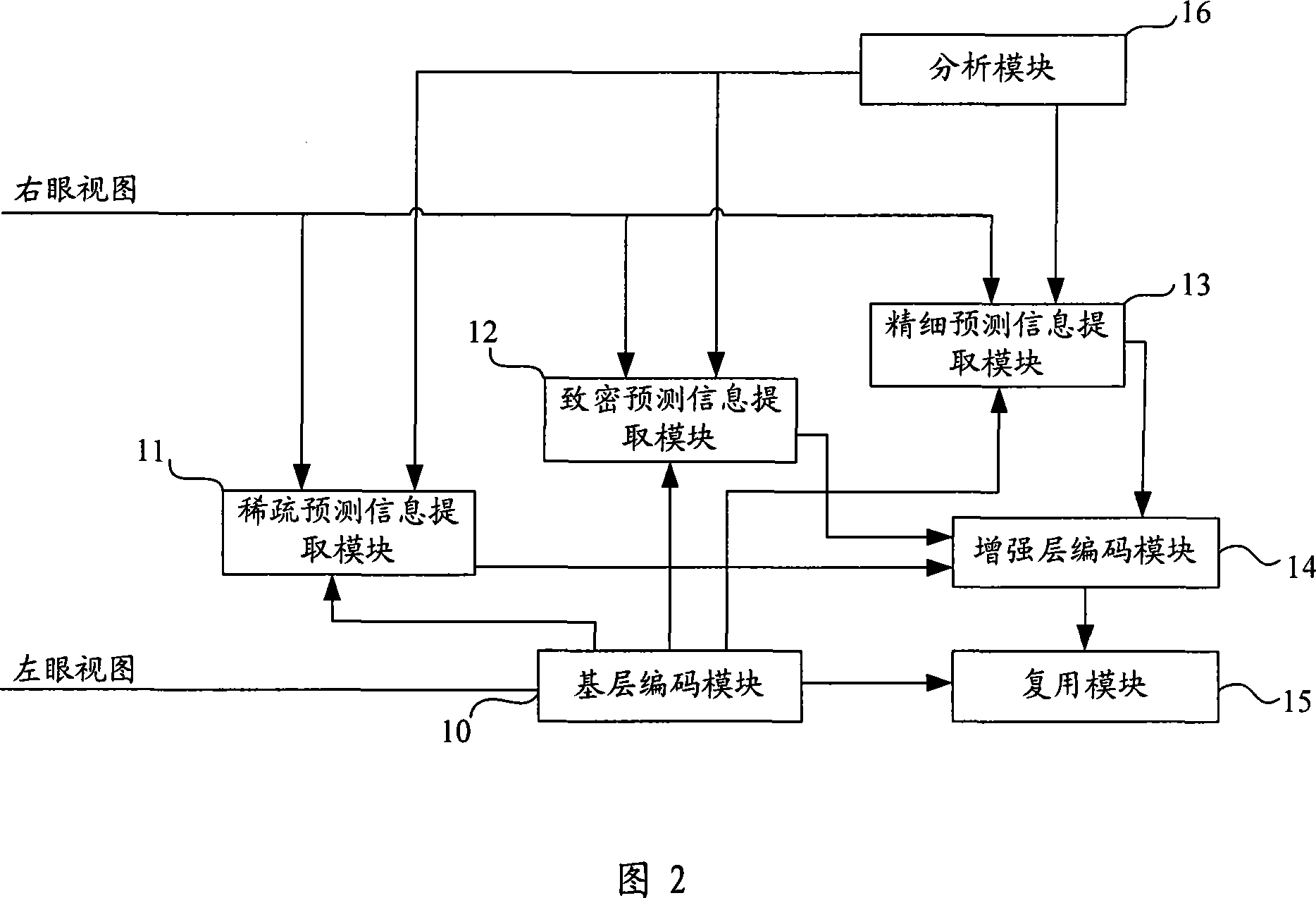

[0070] FIG. 1 is a flowchart of a video encoding method according to an embodiment of the present invention. In this embodiment, depth/disparity information is used as prediction information. Before the steps shown in FIG. 1 are performed, the number of layers and levels of depth/disparity information to be extracted can be preset. This embodiment takes the extraction of three layers of depth/disparity information as an example to further describe the technical solution; ordered from coarse to fine, the three layers are sparse depth/disparity information, dense depth/disparity information, and fine depth/disparity information. The video encoding method of this embodiment performs the following steps:

[0071] Step 101: Use two or more cameras to shoot the same scene from different angles to obtain two views, namely a left-eye view and a right-eye view;

[0072] Step 102: Select one of the left-eye view and the right-eye view as a reference view to perform b...
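The idea of presetting three layers of depth/disparity information, ordered from coarse to fine, can be illustrated with a minimal sketch. The excerpt does not state how each layer is extracted, so the sketch substitutes a simple technique: block matching with a sum-of-absolute-differences (SAD) cost on a rectified left/right pair, with the left-eye view taken as the reference. The function names (`sad`, `disparity_layer`, `extract_layers`), the grid steps, the block sizes, and `max_disp` are all hypothetical choices made for illustration and are not taken from the patent.

```python
from typing import Dict, List

Image = List[List[int]]  # grayscale image stored as rows of pixel values


def sad(left: Image, right: Image, y: int, x: int, d: int, block: int) -> int:
    """SAD cost between the left block at (y, x) and the right block at (y, x - d)."""
    total = 0
    for dy in range(block):
        for dx in range(block):
            total += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return total


def disparity_layer(left: Image, right: Image, step: int, block: int,
                    max_disp: int) -> List[List[int]]:
    """Estimate one disparity per grid position; a larger `step` gives a sparser layer."""
    h, w = len(left), len(left[0])
    layer = []
    for y in range(0, h - block + 1, step):
        row = []
        for x in range(0, w - block + 1, step):
            best_d, best_cost = 0, None
            # Only test shifts that keep the right-view block inside the image.
            for d in range(0, min(max_disp, x) + 1):
                cost = sad(left, right, y, x, d, block)
                if best_cost is None or cost < best_cost:
                    best_d, best_cost = d, cost
            row.append(best_d)
        layer.append(row)
    return layer


def extract_layers(left: Image, right: Image,
                   max_disp: int = 4) -> Dict[str, List[List[int]]]:
    """Return three layers from coarse to fine (assumed settings, not from the patent)."""
    # (layer name, grid step, block size): illustrative values only
    settings = [("sparse", 4, 4), ("dense", 2, 2), ("fine", 1, 2)]
    return {name: disparity_layer(left, right, step, block, max_disp)
            for name, step, block in settings}
```

In this sketch the number of layers is fixed by the `settings` list, which plays the role of the preset described in paragraph [0070]; adding or removing an entry changes how many layers are extracted.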

Embodiment 2

[0104] FIG. 5 is a flowchart of a video encoding method according to Embodiment 2 of the present invention. In this embodiment, depth/disparity information is used as prediction information. Before the steps shown in FIG. 5 are performed, the number of layers and levels of depth/disparity information to be extracted can be preset. This embodiment takes the extraction of three layers of depth/disparity information as an example to further describe the technical solution; ordered from coarse to fine, the three layers are sparse depth/disparity information, dense depth/disparity information, and fine depth/disparity information. The video encoding method of this embodiment performs the following steps:

[0105] Step 301: Use two or more cameras to shoot the same scene from different angles, and obtain two views, namely the left-eye view and the right-eye view;

[0106] Step 302: Select one of the left-eye view and the right-eye view as a reference view to perfo...
