Managing a succession of deployments of an application programming interface (API) server configuration in the software lifecycle development

A pre-launch predictor technology, applied to areas such as program loading/starting, instrumentation, and computation, can address problems such as insufficient responsiveness to users, lower-than-expected performance, and reduced effectiveness.


Examples

Embodiment 1

[0091] Example 1: Overall Rate Predictor

[0092] If the predictor has observed the user for a duration d and the event has occurred n times, a natural estimate of the rate is n/d. If t_0 is the time when the predictor starts to observe the user, t_i are the times at which the target event was observed, and t is the current time, then the predictor can take:

[0093] $n(t) = |\{\, i : t_i \le t \,\}|, \quad d(t) = t - t_0$

[0094] giving

[0095] $\hat{\lambda}(t) = n(t) / d(t)$
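As an illustration of the overall rate estimate described in Example 1, the following is a minimal Python sketch. The function name `overall_rate`, the use of seconds as the time unit, and the choice to return 0.0 before any time has elapsed are assumptions made here for illustration and are not taken from the patent text.

```python
from bisect import bisect_right


def overall_rate(t0, event_times, t):
    """Estimate the event rate (events per unit time) observed from t0 up to t.

    event_times must be sorted ascending and contain only times >= t0.
    """
    d = t - t0  # observed duration d(t)
    if d <= 0:
        return 0.0  # assumption: no estimate before any time has elapsed
    n = bisect_right(event_times, t)  # n(t): number of events with t_i <= t
    return n / d


# Observation starts at t0 = 0 s; the target event occurs at 10, 40, 70 and 200 s.
events = [10.0, 40.0, 70.0, 200.0]
rate_at_100 = overall_rate(0.0, events, 100.0)  # 3 events over 100 s
```

Because only the count and the elapsed time are needed, the estimate can be refreshed at any query time without storing more than the sorted event times.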

Embodiment 2

[0096] Example 2: Per-Context Rate Predictor

[0097] Assume that the predictor knows that the rate can differ across contexts. For example, suppose the rate varies with the day of the week. Then, if t = "13:21.02, Friday, May 31, 2013", you might use:

[0098]

[0099] If the rate of events of interest varies within the first minute of login, you might use:

[0100]

[0101] In general, if the context at time t (e.g., day of the week, time of day, or whether the user is within or past a certain time since login) is c(t), then for each context c (e.g., Sunday, Monday, ..., Saturday) it is possible to compute the number of times the event of interest was observed, denoted n_c(t), and the total duration for which the user was observed in that context, denoted d_c(t), and use:

[0102] $\hat{\lambda}(t) = n_{c(t)}(t) / d_{c(t)}(t)$
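The per-context estimate can be sketched as follows, taking the day of the week as the context c(t). This is only an illustrative sketch: the names `context_of` and `per_context_rate`, the use of naive `datetime` values, and the rule of splitting the observed interval at midnight are assumptions, not details given in the patent text.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def context_of(ts):
    """Hypothetical context function c(t): day of the week, 0 = Monday."""
    return ts.weekday()


def per_context_rate(t0, event_times, t):
    """Return the estimated rate (events per second) for the context at time t."""
    count = defaultdict(int)       # n_c(t): events seen in each context
    duration = defaultdict(float)  # d_c(t): seconds observed in each context

    for ts in event_times:
        if t0 <= ts <= t:
            count[context_of(ts)] += 1

    # Split [t0, t] at midnight boundaries and credit each piece to its day.
    cursor = t0
    while cursor < t:
        next_midnight = datetime.combine(cursor.date(), datetime.min.time()) + timedelta(days=1)
        piece_end = min(next_midnight, t)
        duration[context_of(cursor)] += (piece_end - cursor).total_seconds()
        cursor = piece_end

    c = context_of(t)
    return count[c] / duration[c] if duration[c] > 0 else 0.0


# Observation starts one week before the query time used in the text.
t0 = datetime(2013, 5, 24, 0, 0, 0)            # a Friday
t = datetime(2013, 5, 31, 13, 21, 2)           # the following Friday
events = [
    datetime(2013, 5, 24, 9, 0, 0),            # Friday
    datetime(2013, 5, 24, 18, 0, 0),           # Friday
    datetime(2013, 5, 27, 12, 0, 0),           # Monday
    datetime(2013, 5, 31, 8, 0, 0),            # Friday
]
friday_rate = per_context_rate(t0, events, t)  # uses only Friday counts and Friday time
```

Only the three Friday events and the time observed on Fridays enter the estimate; the Monday event affects the Monday rate alone.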

[0103] If I_c(t) is a function equal to 1 when the context at time t is c, and 0 otherwise, you might use:

[0104] ...

Embodiment 3

[0105] Example 3: Decayed Rate Predictor

[0106] As t increases, the counts n_c(t) and durations d_c(t) grow larger and become dominated by increasingly outdated user behavior. To overcome this problem, recency weighting may be introduced so that more recent behavior is weighted more heavily in the counts and durations. One option is exponential weighting, in which behavior at a given time receives a weight that decays exponentially with the elapsed time Δ when counts and durations are estimated at a time Δ later. In this case it is possible to use:

[0107]

[0108]

[0109]

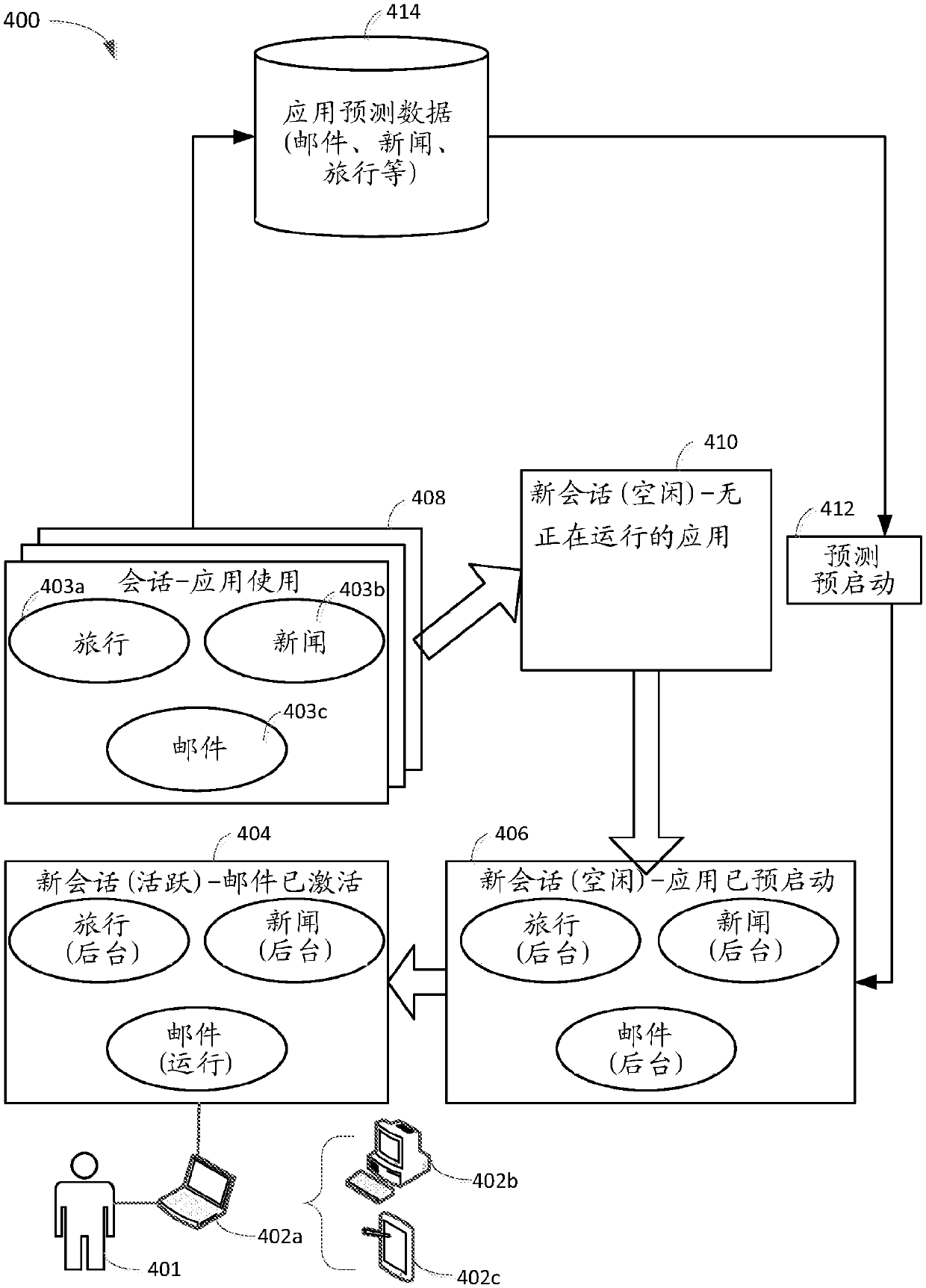

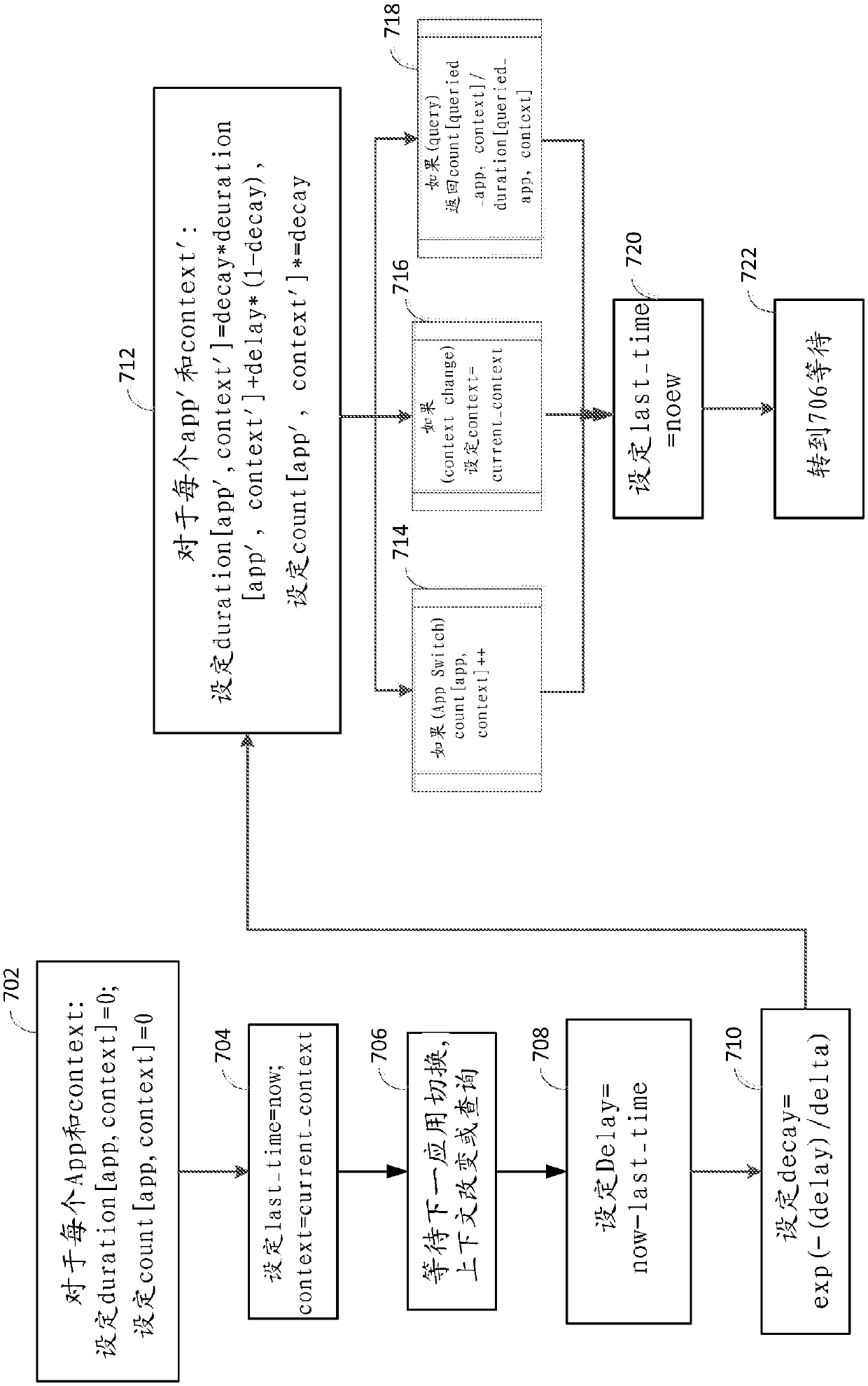
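One way to realize exponential recency weighting is sketched below. The formulas for this example are not available in this text, so the specific weight exp(-Δ/τ), the time constant `tau`, and the class name `DecayedRate` are assumptions chosen for illustration; the patent may define the weights differently. Under this assumption the decayed count is the sum of exp(-(t - t_i)/τ) over observed events, and the decayed duration is the integral of the same weight over the observed interval, which equals τ(1 - exp(-(t - t_0)/τ)).

```python
import math


class DecayedRate:
    """Exponentially recency-weighted rate estimate (illustrative sketch)."""

    def __init__(self, t0, tau):
        self.tau = tau        # assumed decay time constant, same unit as time
        self.last = t0        # time up to which the state has been advanced
        self.count = 0.0      # decayed event count
        self.duration = 0.0   # decayed observed duration

    def _advance(self, t):
        delta = t - self.last
        if delta < 0:
            raise ValueError("time must not move backwards")
        decay = math.exp(-delta / self.tau)
        # Old contributions shrink by the decay factor; the newly observed
        # interval adds the integral of the weight over that interval.
        self.count *= decay
        self.duration = self.duration * decay + self.tau * (1.0 - decay)
        self.last = t

    def observe_event(self, t):
        self._advance(t)
        self.count += 1.0

    def rate(self, t):
        self._advance(t)
        return self.count / self.duration if self.duration > 0 else 0.0


predictor = DecayedRate(t0=0.0, tau=100.0)
predictor.observe_event(50.0)
current_rate = predictor.rate(150.0)
```

Keeping only the decayed count, the decayed duration, and the last update time means the estimate can be updated in constant time per event or query, without storing the event history.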

[0110] These counts, rates, and durations can be computed by moving forward in time as events (in this case, app switches), context changes (e.g., a change of day), and queries (i.e., the PLM querying the predictor) occur. This is illustrated in flowchart form in Figure 7 and repeated here as follows:

[0111] 1. For each application (app) and context (context): set duration[app, context]=0, set count[app, con...
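The step list is truncated here, so the following Python sketch shows only one plausible shape for an event-driven update of the `duration[app, context]` and `count[app, context]` tables. Everything beyond the zero initialization in step 1 is an assumption: the class name `AppSwitchPredictor`, its method names, and the rule that elapsed time is credited to every app in the current context are hypothetical and may differ from the flowchart in Figure 7.

```python
class AppSwitchPredictor:
    """Event-driven per-app, per-context rate tracking (illustrative sketch)."""

    def __init__(self, apps, contexts, start_time, start_context):
        self.apps = list(apps)
        # Step 1 from the text: zero every duration and count.
        self.duration = {(a, c): 0.0 for a in self.apps for c in contexts}
        self.count = {(a, c): 0 for a in self.apps for c in contexts}
        self.context = start_context
        self.last_time = start_time

    def _advance(self, now):
        # Assumption: time elapsed since the last update is credited to
        # every app under the context that was active during that time.
        elapsed = now - self.last_time
        for app in self.apps:
            self.duration[app, self.context] += elapsed
        self.last_time = now

    def on_app_switch(self, app, now):
        self._advance(now)
        self.count[app, self.context] += 1

    def on_context_change(self, new_context, now):
        self._advance(now)
        self.context = new_context

    def query(self, app, now):
        """Return the estimated switch rate for app in the current context."""
        self._advance(now)
        d = self.duration[app, self.context]
        return self.count[app, self.context] / d if d > 0 else 0.0


p = AppSwitchPredictor(["mail", "maps"], ["weekday", "weekend"], 0.0, "weekday")
p.on_app_switch("mail", 10.0)
p.on_app_switch("mail", 30.0)
p.on_context_change("weekend", 50.0)
p.on_app_switch("maps", 60.0)
weekend_mail_rate = p.query("mail", 70.0)
```

All three triggers first bring the duration table up to the current time, so counts and durations stay consistent regardless of the order in which switches, context changes, and queries arrive.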
