Augmented reality-based human face interaction entertainment method

A technique based on real human faces and facial features, applied to augmented reality face interactive entertainment. It addresses problems such as a limited scope of use, and the method is easy to promote, produces natural-looking results, and follows the face well.

Examples

Embodiment 1

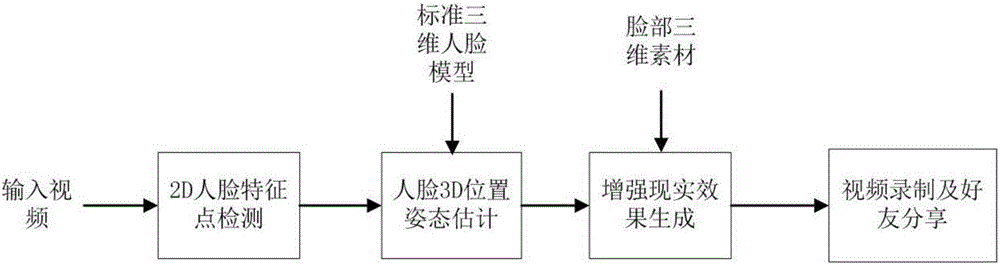

[0033] As shown in Figure 1, an augmented reality face interactive entertainment method comprises the following steps:

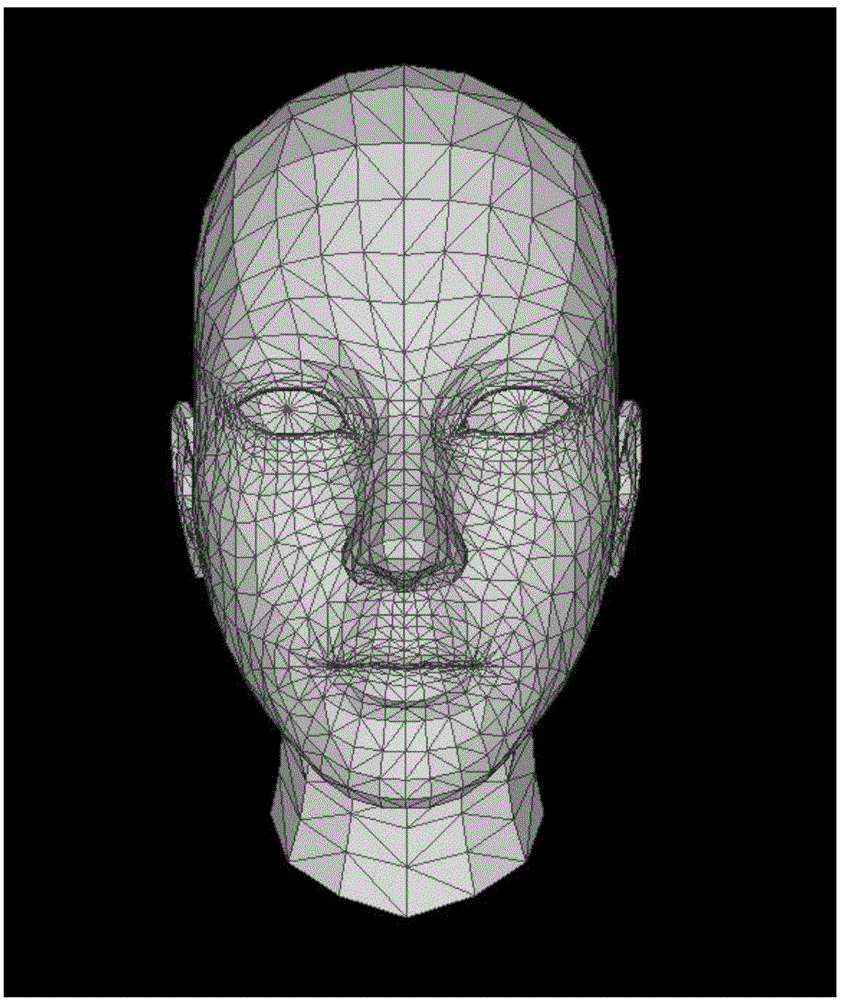

[0034] Step 1: prepare a standard 3D face model and 3D facial materials in advance;

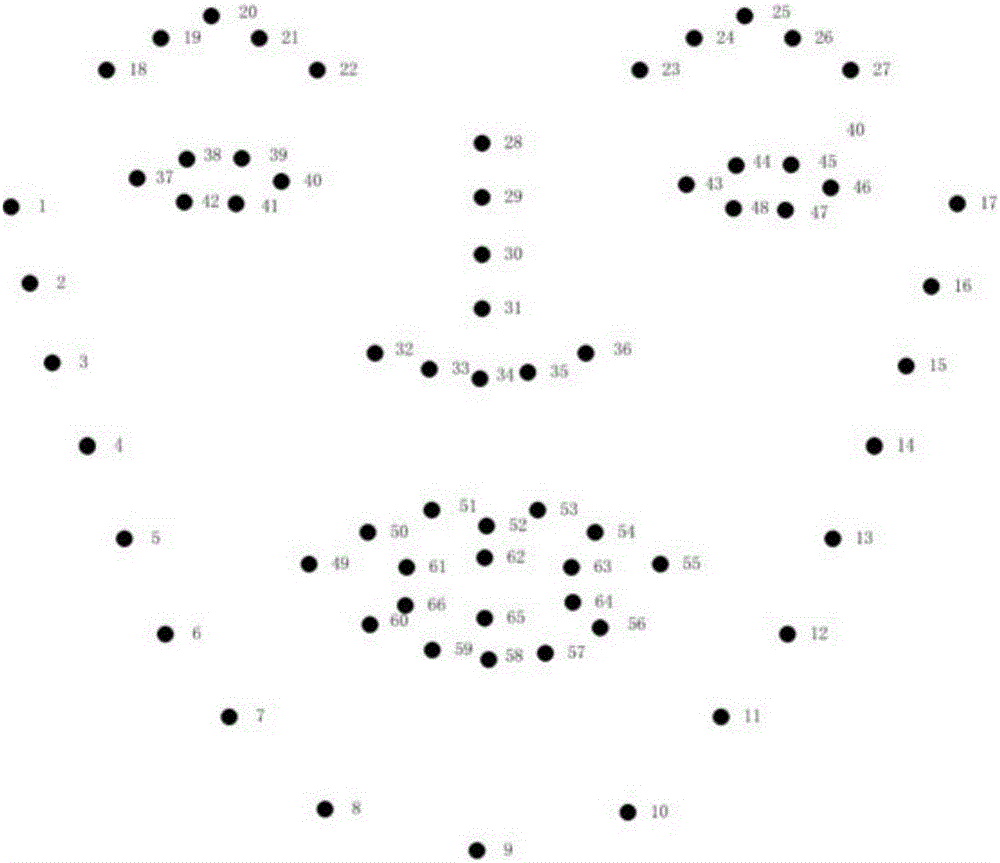

[0035] Step 2: input the face video and detect 2D facial feature points in each frame of the input video;

[0036] Step 3: estimate the 3D position and pose of the face in each video frame by combining the standard 3D face model with the 2D facial feature points;

[0037] Step 4: using the 3D position and pose of the face obtained in step 3, transform the pre-made 3D facial material to the same pose as the face in the video, then render it and overlay it on the face video to achieve the augmented reality effect;

[0038] Step 5: record and save the face augmented reality video and share it with friends for interaction.
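Steps 3 and 4 can be illustrated with a minimal sketch. The source does not say which camera model or pose solver is used, so everything here is an assumption made for illustration: a pinhole camera with a known focal length, a pose restricted to a yaw rotation plus a known translation, and a brute-force search in place of a real pose solver. All names (`MODEL_LANDMARKS`, `GLASSES_VERTICES`, `estimate_yaw`, `place_material`) and all coordinate values are hypothetical. The sketch picks the pose that minimizes the reprojection error between the standard model's landmarks and the detected 2D feature points, then applies the same pose to the material's vertices to obtain their pixel positions for overlay.

```python
import math

# Hypothetical landmark positions on a standard 3D face model (model units).
MODEL_LANDMARKS = [
    (-30.0, -30.0, 0.0),   # left eye
    (30.0, -30.0, 0.0),    # right eye
    (0.0, 0.0, -30.0),     # nose tip, closer to the camera
    (-25.0, 30.0, 0.0),    # left mouth corner
    (25.0, 30.0, 0.0),     # right mouth corner
]

# Hypothetical 3D material: four corner vertices of a pair of glasses.
GLASSES_VERTICES = [
    (-45.0, -40.0, -5.0), (45.0, -40.0, -5.0),
    (45.0, -20.0, -5.0), (-45.0, -20.0, -5.0),
]

def rotation_yaw(angle_rad):
    """Rotation matrix for a head turn about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def transform(point, R, t):
    """Apply the rigid pose (R, t) to one model-space point."""
    return [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]

def project(point_cam, focal, cx, cy):
    """Pinhole projection of a camera-space point to pixel coordinates."""
    x, y, z = point_cam
    return (focal * x / z + cx, focal * y / z + cy)

def place_material(vertices, R, t, focal, cx, cy):
    """Step 4: move vertices into the face pose and project them to pixels."""
    return [project(transform(v, R, t), focal, cx, cy) for v in vertices]

def reprojection_error(model_points, image_points, R, t, focal, cx, cy):
    """Mean pixel distance between projected model landmarks and detections."""
    projected = place_material(model_points, R, t, focal, cx, cy)
    total = sum(math.dist(p, q) for p, q in zip(projected, image_points))
    return total / len(model_points)

def estimate_yaw(model_points, image_points, t, focal, cx, cy, steps=181):
    """Step 3 (toy version): try yaw angles from -90 to +90 degrees and keep
    the one with the lowest reprojection error. A real system would solve
    for the full rotation and translation instead."""
    candidates = [math.radians(-90 + i * 180 / (steps - 1)) for i in range(steps)]
    return min(
        candidates,
        key=lambda a: reprojection_error(
            model_points, image_points, rotation_yaw(a), t, focal, cx, cy
        ),
    )

# Assumed camera: 640x480 image, focal length 600 px, face 500 units away.
FOCAL, CX, CY = 600.0, 320.0, 240.0
TRANSLATION = [0.0, 0.0, 500.0]

# Simulate step 2: the 2D feature points a detector would report for a face
# turned 20 degrees.
detected = place_material(
    MODEL_LANDMARKS, rotation_yaw(math.radians(20)), TRANSLATION, FOCAL, CX, CY
)

yaw = estimate_yaw(MODEL_LANDMARKS, detected, TRANSLATION, FOCAL, CX, CY)
overlay_pixels = place_material(
    GLASSES_VERTICES, rotation_yaw(yaw), TRANSLATION, FOCAL, CX, CY
)
print("estimated yaw (degrees):", round(math.degrees(yaw), 3))
print("glasses corners in the image:", overlay_pixels)
```

The `overlay_pixels` list gives the image positions at which a renderer would draw the material over the current frame. Restricting the search to one rotation axis keeps the sketch short; it is not a substitute for estimating the full six-degree-of-freedom pose that the method describes.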

[0039] In this embodiment, the standard 3D face model is made ...
