Image style transfer method based on convolutional neural network

An image style transfer method based on a convolutional neural network, applied in the fields of graphic image conversion, image data processing, and 2D image generation.

Embodiment Construction

[0025] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

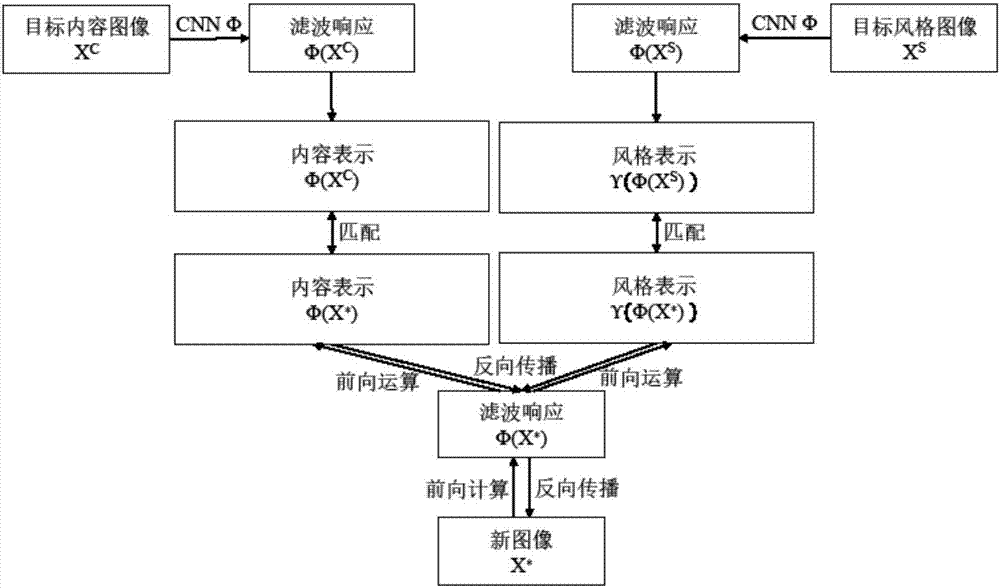

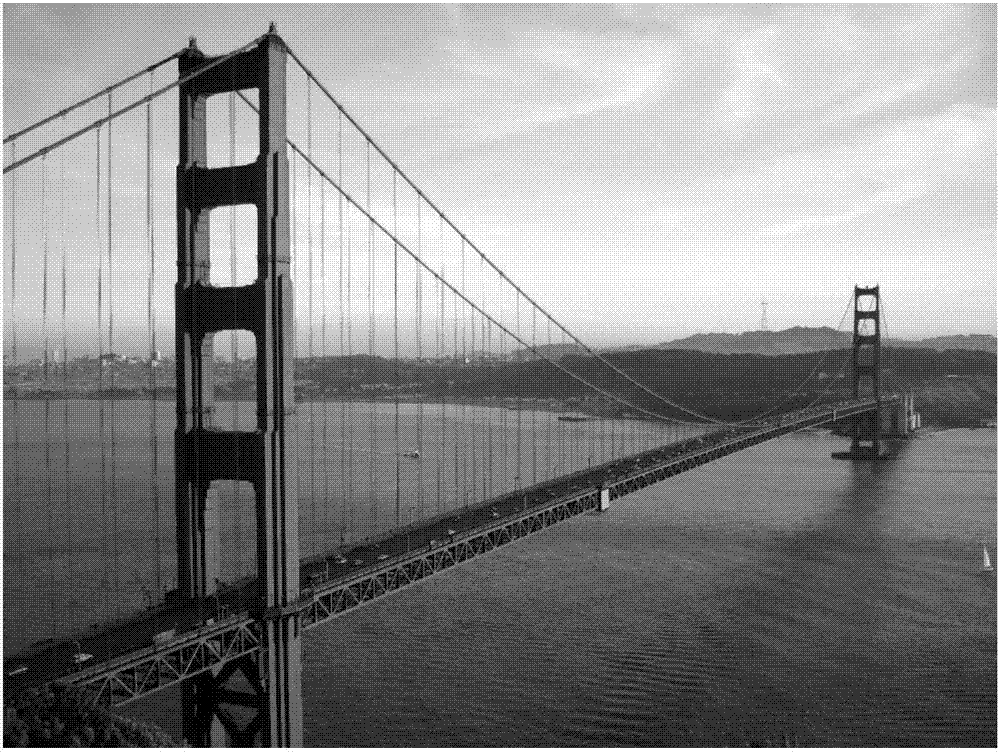

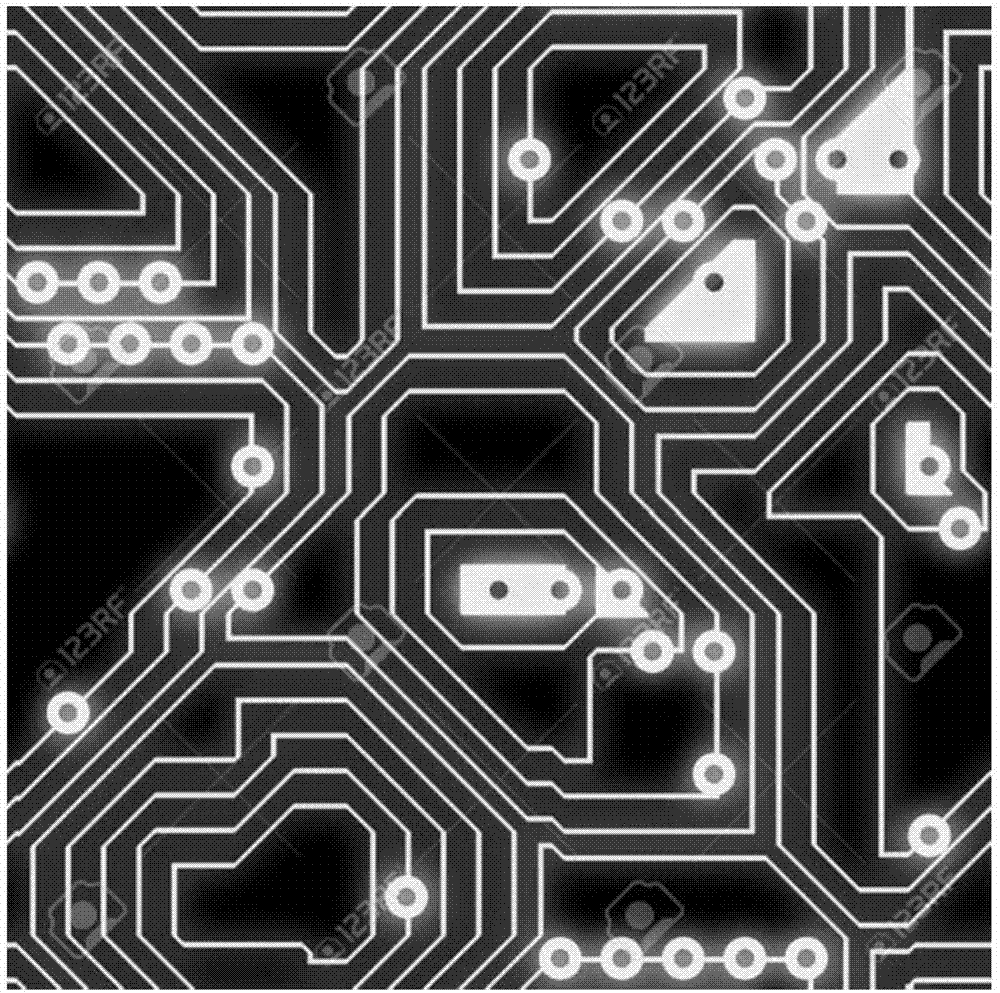

[0026] Figure 2 is the target content image and Figure 3 is the target style image. Our goal is to generate Figure 4 such that it fuses the content of Figure 2 with the style of Figure 3.

[0027] Step 1. Select the deep convolutional neural network VGG-19, which achieved excellent results in the 2014 ImageNet image classification competition, as the high-level semantic feature extraction model Φ. Take Figure 2 as the target content image X_C and Figure 3 as the target style image X_S. Select ReLU2_2 as the content constraint layer, and select ReLU1_1, ReLU2_1, ReLU3_1, ReLU4_1 and ReLU5_1 as the style constraint layers. Set the threshold ε = 5e-3 and the maximum number of iterations th = 200;
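The settings in Step 1 can be collected into a small configuration, sketched below in Python. This is only an illustration, not the implementation described in the patent. The dictionary keys and the helper `relu_layer_indices` are hypothetical names introduced here. The helper assumes the VGG-19 feature extractor is stored as a flat sequence in which every convolution is immediately followed by its ReLU and each block ends with one max-pooling layer, which is how common implementations lay it out; under that assumption it resolves the layer names to positions in the sequence.

```python
# VGG-19 convolutional layout: numbers are output channels, "M" is max pooling.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]

# Step 1 settings from the text; the key names are illustrative only.
CONFIG = {
    "content_layer": "ReLU2_2",
    "style_layers": ["ReLU1_1", "ReLU2_1", "ReLU3_1", "ReLU4_1", "ReLU5_1"],
    "epsilon": 5e-3,        # threshold ε
    "max_iterations": 200,  # maximum number of iterations th
}


def relu_layer_indices(cfg=VGG19_CFG):
    """Map names such as 'ReLU2_2' to positions in a flat conv/ReLU/pool sequence.

    Assumes each convolution is directly followed by its ReLU and each block
    ends with a single pooling layer.
    """
    indices = {}
    position = 0          # index of the next layer in the flat sequence
    block = 1             # current VGG block (1..5)
    conv_in_block = 0     # convolutions seen so far in this block
    for item in cfg:
        if item == "M":
            position += 1         # the pooling layer takes one slot
            block += 1
            conv_in_block = 0
        else:
            conv_in_block += 1
            position += 1         # skip the convolution; now at its ReLU
            indices[f"ReLU{block}_{conv_in_block}"] = position
            position += 1         # move past the ReLU
    return indices


LAYER_INDEX = relu_layer_indices()
content_index = LAYER_INDEX[CONFIG["content_layer"]]
style_indices = [LAYER_INDEX[name] for name in CONFIG["style_layers"]]
```

With this layout the content constraint layer resolves to position 8 and the style constraint layers to positions 1, 6, 11, 20 and 29. A different framework or a variant with batch normalization would shift these positions, so they should be checked against the actual model before use.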

[0028] Step 2. Input the target content image X_C into the convolutional neural network VGG-19 to calculate the filter response Φ(X ...
