Spoken language pronunciation evaluation method based on deep neural network posterior probability algorithm

The method applies a deep neural network and posterior probabilities to pronunciation evaluation. It addresses three problems: the low phoneme recognition rate of the acoustic model, the low accuracy of the likelihoods, and the inaccurate scores that result. Its effect is to improve the phoneme recognition rate.

Embodiment Construction

[0019] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0020] To apply the spoken-pronunciation evaluation method based on the deep neural network posterior probability algorithm, first select a set of audio clips from the one or more voices to be evaluated. The number of clips is preferably no more than 10,000, and the number of words in each clip is kept within a fixed range, preferably 1 to 20. Each word contains multiple phonemes.
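The selection constraints in [0020] can be sketched as a simple filter. This is only an illustration under stated assumptions: the `(audio_id, transcript)` tuple layout, the function name `select_clips`, and the whitespace-based word count are hypothetical and do not come from the patent text.

```python
from typing import List, Tuple

MAX_CLIPS = 10_000             # preferred upper bound on the number of clips
MIN_WORDS, MAX_WORDS = 1, 20   # preferred word-count range per clip

def select_clips(clips: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Keep clips whose transcript has 1 to 20 words, up to 10,000 clips.

    Each clip is a hypothetical (audio_id, transcript) pair; words are
    counted by splitting the transcript on whitespace (an assumption).
    """
    selected: List[Tuple[str, str]] = []
    for audio_id, transcript in clips:
        n_words = len(transcript.split())
        if MIN_WORDS <= n_words <= MAX_WORDS:
            selected.append((audio_id, transcript))
            if len(selected) == MAX_CLIPS:
                break  # stop once the preferred maximum is reached
    return selected
```

A transcript that is empty or longer than 20 words is skipped, and selection stops as soon as 10,000 clips have been kept.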

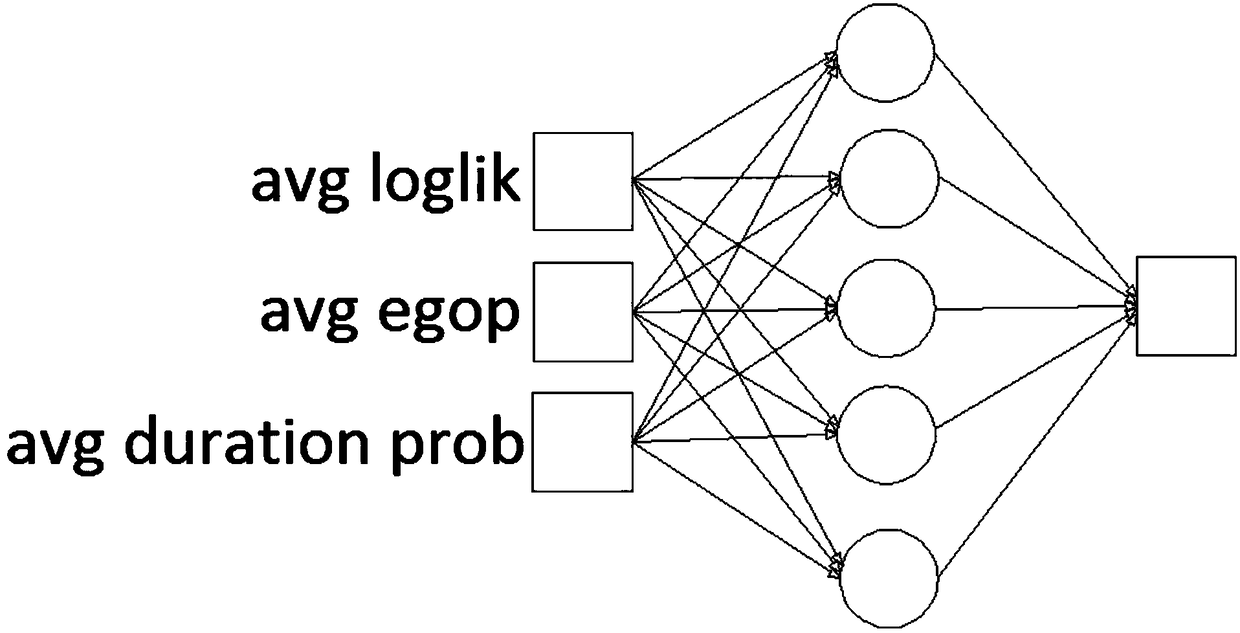

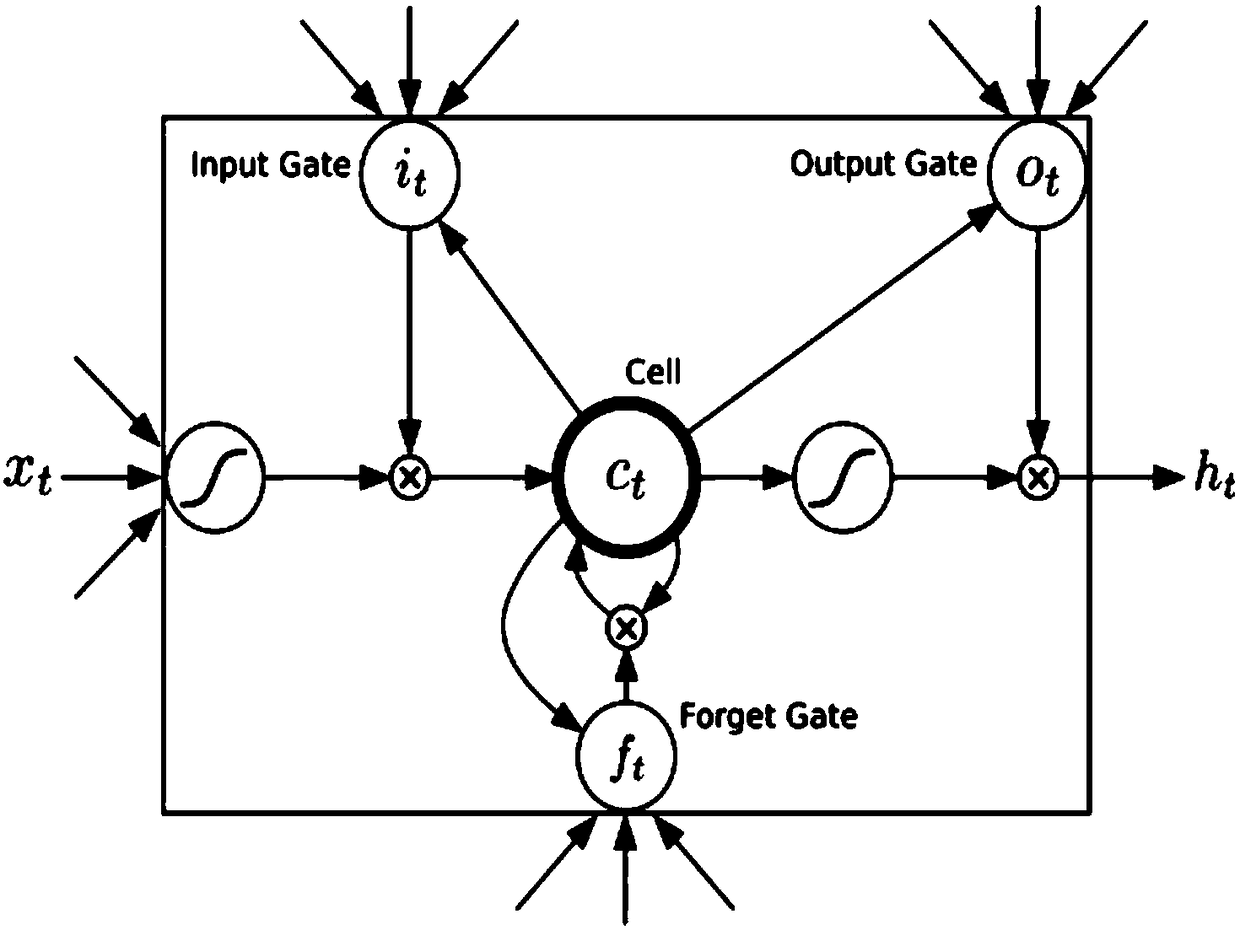

[0021] Suppose a word W contains k phonemes, $\{P_1, P_2, \dots, P_k\}$, and denote the log-likelihood of each phoneme by $\mathrm{loglik}(P_i)$. The traditional GOP (Goodness Of Pronunciation) method measures pronunciation with the feature loglik(numerator) - loglik(denominator), where the numerator is the average likelihood obtained in the FA process and the denominator is the average likelihood obtained in the FP decoding process...
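The traditional GOP feature described in [0021] can be sketched as follows. This is a minimal illustration, not the patent's implementation: the `PhonemeScore` record, its field names, and the function names are hypothetical, and averaging the per-phoneme values into a word-level score is an assumption, since the source text is truncated before it says how the k phonemes of W are combined.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PhonemeScore:
    phoneme: str
    fa_loglik: float  # average log-likelihood from the FA process (numerator)
    fp_loglik: float  # average log-likelihood from FP decoding (denominator)

def phoneme_gop(score: PhonemeScore) -> float:
    """Traditional GOP feature: loglik(numerator) - loglik(denominator)."""
    return score.fa_loglik - score.fp_loglik

def word_gop(scores: List[PhonemeScore]) -> float:
    """Mean GOP over the k phonemes of a word (averaging is an assumption)."""
    if not scores:
        raise ValueError("a word must contain at least one phoneme")
    return sum(phoneme_gop(s) for s in scores) / len(scores)

# Usage with made-up log-likelihood values for a two-phoneme word.
word = [
    PhonemeScore("HH", fa_loglik=-4.0, fp_loglik=-3.0),
    PhonemeScore("AY", fa_loglik=-2.5, fp_loglik=-2.0),
]
print(word_gop(word))
```

Because the values are log-likelihoods, the subtraction corresponds to a likelihood ratio. A value near zero means the FA and FP passes score the segment about equally.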
