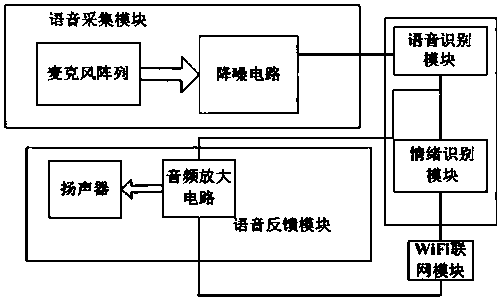

Intelligent voice recognition interactive lighting method and system based on emotion judgment

An intelligent voice-controlled lighting method, applied in the field of lighting, intended to soothe the user's emotions, yield accurate analysis results, save energy, and make feature extraction convenient.



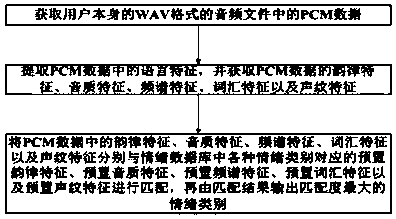


Embodiment Construction

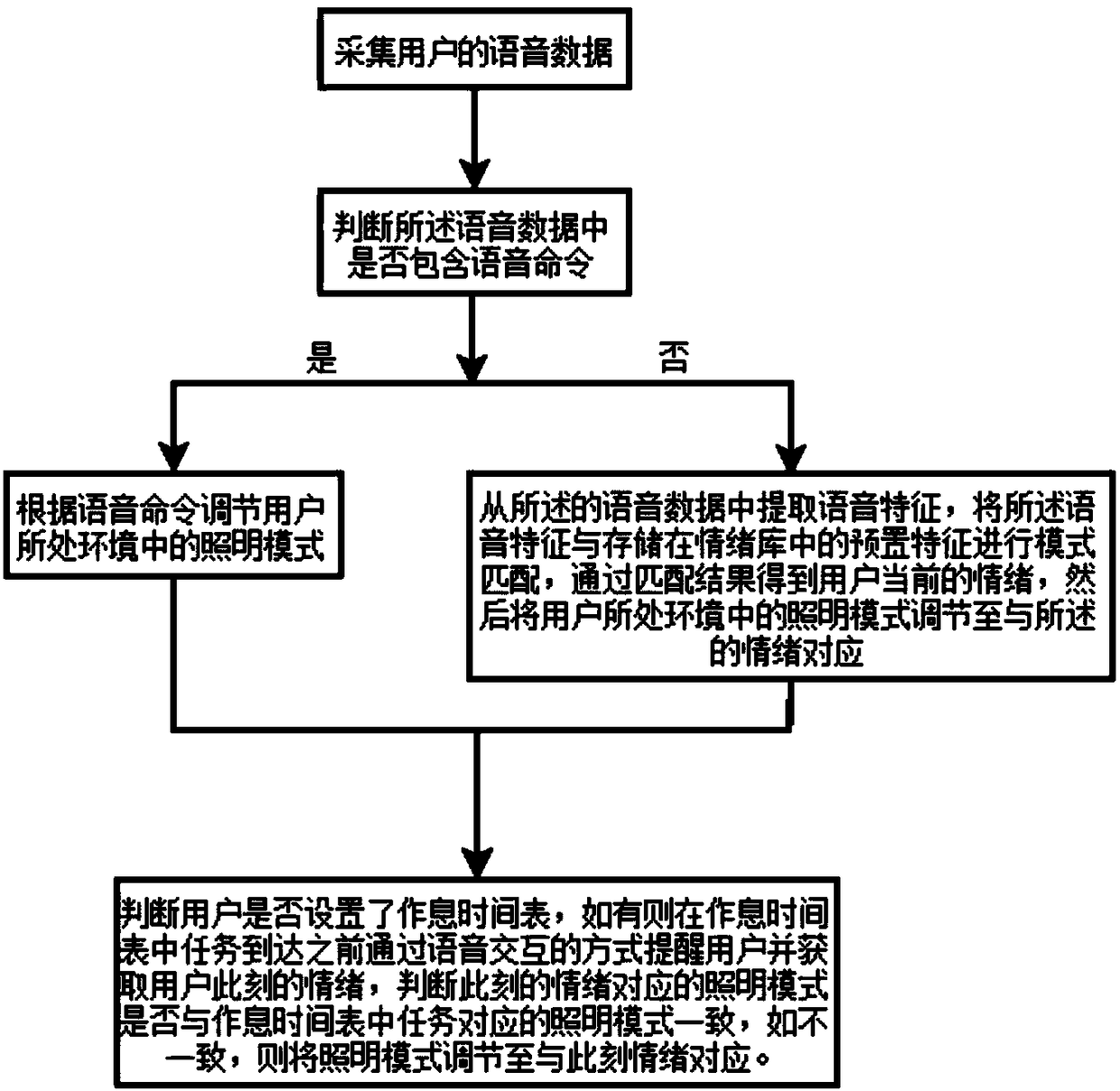

[0051] The invention discloses an intelligent speech recognition interactive lighting method based on emotion judgment, comprising the following steps:

[0052] Step 1: collect the user's voice data.

[0053] In this solution, voice data refers to the audio captured by the voice collection module while the user is speaking. The voice data can be saved in WAV format.
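As an illustration of saving collected voice data in WAV format, the following is a minimal sketch using Python's standard `wave` module. The source does not specify a sample rate, bit depth, or channel count, so the 16 kHz, 16-bit, mono settings are assumptions, and the function names `save_pcm_as_wav` and `synthetic_tone` are hypothetical. A synthetic tone stands in for real microphone input.

```python
import io
import math
import struct
import wave


def save_pcm_as_wav(samples, target, sample_rate=16000):
    """Write mono 16-bit PCM samples to a WAV file path or file-like object.

    `samples` is a sequence of ints in [-32768, 32767]. The 16 kHz mono
    16-bit layout is an assumption; the source only says "wav format".
    """
    with wave.open(target, "wb") as wav_file:
        wav_file.setnchannels(1)           # mono (assumed)
        wav_file.setsampwidth(2)           # 2 bytes per sample = 16-bit (assumed)
        wav_file.setframerate(sample_rate)
        # Pack the samples as little-endian signed 16-bit integers.
        wav_file.writeframes(struct.pack(f"<{len(samples)}h", *samples))


def synthetic_tone(duration_s=0.1, freq_hz=440.0, sample_rate=16000):
    """Generate a short sine tone as a stand-in for captured speech."""
    n = int(duration_s * sample_rate)
    amplitude = 0.3 * 32767
    return [
        int(amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate))
        for i in range(n)
    ]


if __name__ == "__main__":
    # Write to an in-memory buffer; pass a path such as "voice.wav" to write to disk.
    buffer = io.BytesIO()
    save_pcm_as_wav(synthetic_tone(), buffer)
    print(f"wrote {len(buffer.getvalue())} bytes of WAV data")
```

In a real system the sample list would come from the voice collection module rather than from a generated tone.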

[0054] Step 2: judge whether the voice data contains a voice command. If it does, adjust the lighting mode of the user's environment according to that command.

[0055] After the voice collection module obtains the user's voice data, the speech recognition module applies speech recognition to extract the words in that data. These words are then compared with a preset command vocabulary to judge whether the voice data contains a voice command.
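The comparison against a preset command vocabulary can be sketched as follows. This is only an illustration under stated assumptions: the transcript is taken as already produced by a speech recognizer, the phrases and action names in `PRESET_COMMANDS` are hypothetical and not taken from the source, and whole-word phrase matching is one possible comparison strategy among several.

```python
import re

# Hypothetical preset command vocabulary: spoken phrase -> lighting action.
# Neither the phrases nor the action names come from the source document.
PRESET_COMMANDS = {
    "lights on": "LIGHTS_ON",
    "lights off": "LIGHTS_OFF",
    "brighter": "BRIGHTEN",
    "dimmer": "DIM",
}


def tokenize(text):
    """Lowercase the transcript and split it into words, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def find_command(transcript, commands=PRESET_COMMANDS):
    """Return the action for the first matching command phrase, or None.

    Phrases are matched as consecutive whole words, longest phrase first,
    so a short command does not shadow a longer one that contains it.
    """
    words = tokenize(transcript)
    for phrase in sorted(commands, key=lambda p: -len(p.split())):
        target = phrase.split()
        n = len(target)
        # Slide a window of n words across the transcript.
        if any(words[i:i + n] == target for i in range(len(words) - n + 1)):
            return commands[phrase]
    return None


if __name__ == "__main__":
    for utterance in ["Please, lights on!", "I had a long day"]:
        action = find_command(utterance)
        if action is not None:
            print(f"{utterance!r}: adjust lighting with action {action}")
        else:
            print(f"{utterance!r}: no voice command found")
```

Returning `None` when nothing matches lets the caller distinguish speech that carries a command from speech that does not.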

[0056] For example, voice commands such as "tu...
