Sign language video translation method based on fusion of temporal convolutional network and recurrent neural network

A technology involving recurrent neural networks and convolutional networks, applied in natural language translation, instruments, and computing. It addresses the problems of ignoring related and changing information, degraded recognition performance, and the difficulty of learning from videos.

Detailed Description of the Embodiments

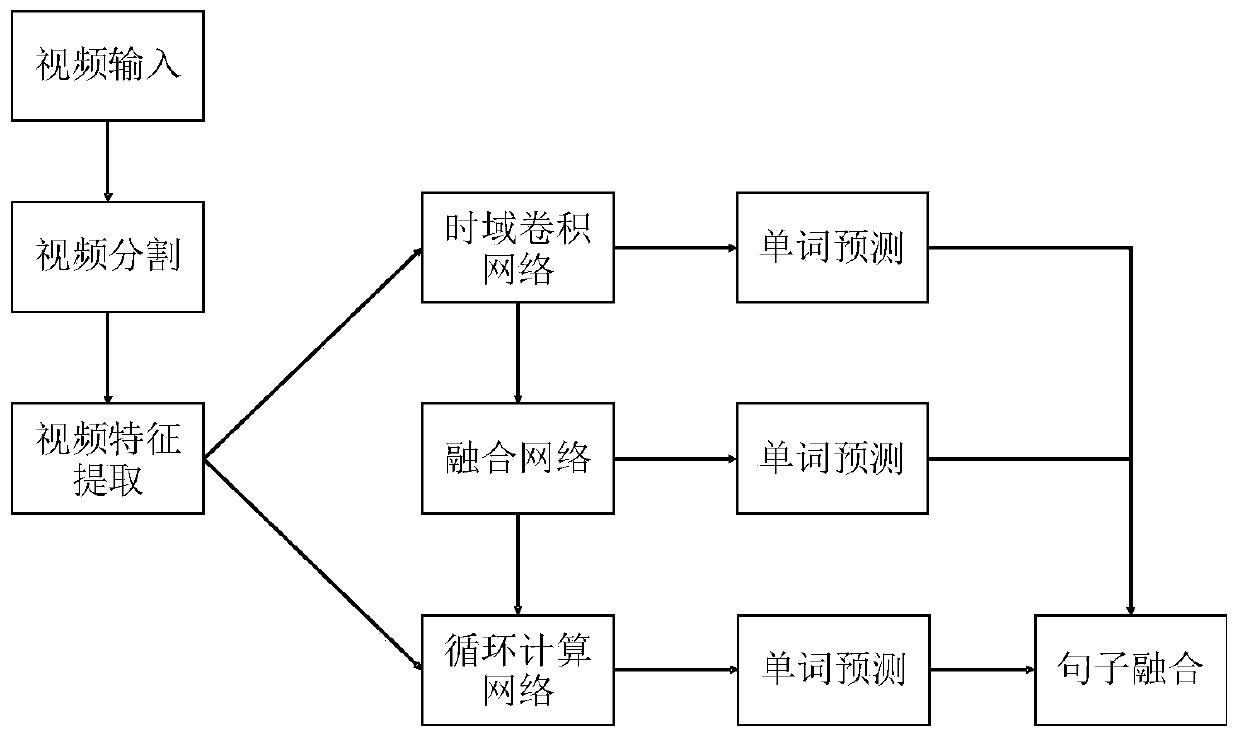

[0051] In this embodiment, as shown in Figure 1, the sign language video translation method based on the fusion of a temporal convolutional network and a recurrent neural network aims to fully extract the spatial and temporal features of sign language video, effectively learn the features of highly discriminative key actions, and prevent the model from being disturbed during training by factors such as the signer's body shape, signing speed, and signing habits. Its steps include:

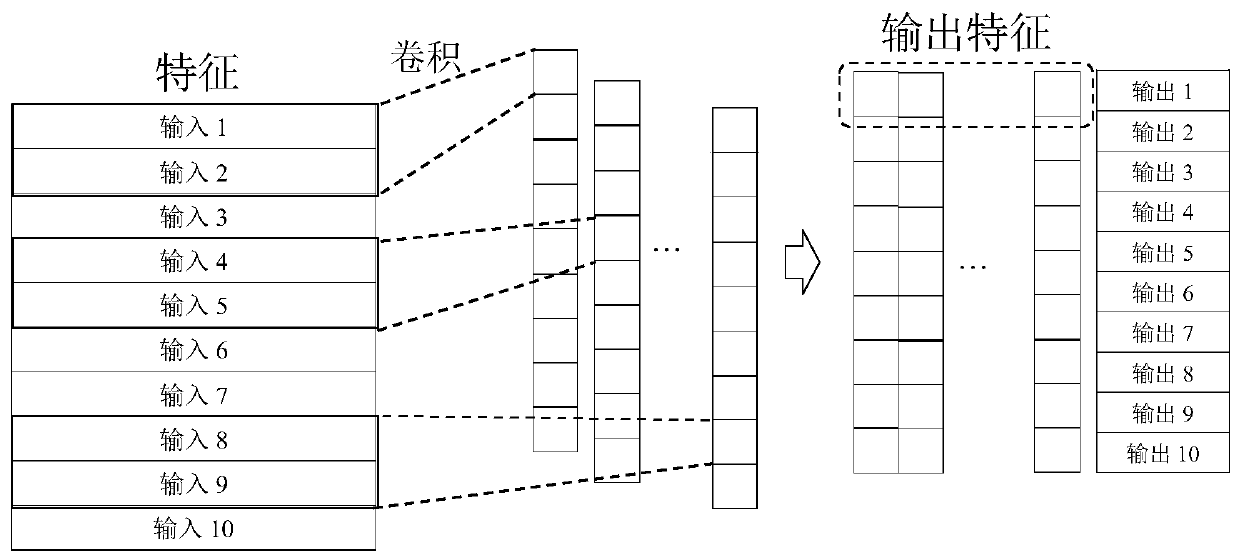

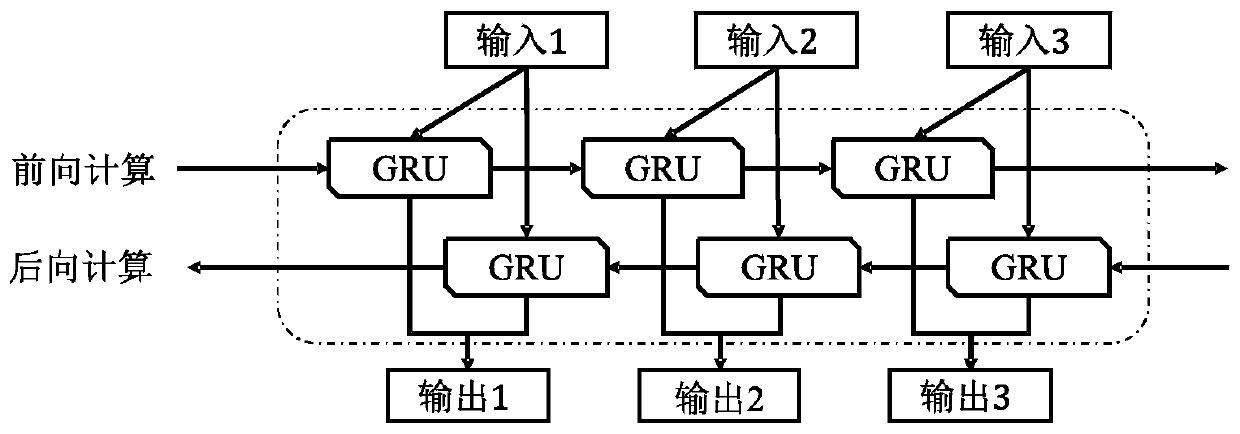

[0052] First, the original sign language video is preprocessed to extract sign language video features. Then two different network structures, a temporal convolutional network (TCN) and a bidirectional recurrent neural network (BGRU), are used in parallel to encode the continuous sign language video features and output the generation probability of each word slice. Next, the outputs of the middle layers of the two sub-networks are concatenated and fed into the fusion network (FL) to learn and generate a word s...
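The two-branch encoding and fusion step can be sketched as below. This is a minimal NumPy illustration under stated assumptions, not the patent's implementation. The excerpt does not give layer counts, kernel sizes, hidden sizes, the vocabulary, or the internals of the fusion network (FL). So the three residual dilated causal convolution layers (kernel 3, dilations 1/2/4), the GRU gate formulation, the sizes `T`, `D`, `H`, `V`, the random weights, and the single linear layer standing in for FL are all hypothetical. "Middle layer output" is taken to mean each branch's encoder output before its per-step word classifier, which is also an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical sizes: frames, per-frame feature dim, hidden size, vocabulary size.
T, D, H, V = 12, 64, 32, 10

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def dilated_causal_conv(x, w, b, dilation):
    """Causal dilated 1-D conv. x: (T, C_in), w: (K, C_in, C_out)."""
    K = w.shape[0]
    pad = (K - 1) * dilation
    xp = np.concatenate([np.zeros((pad, x.shape[1])), x], axis=0)  # left-pad only
    out = np.zeros((x.shape[0], w.shape[2]))
    for t in range(x.shape[0]):
        for k in range(K):
            out[t] += xp[t + pad - k * dilation] @ w[k]  # tap k reads frame t - k*dilation
    return out + b

def tcn_encode(x, layers):
    """Stack of residual blocks: ReLU(causal conv) + linear projection of the input."""
    h = x
    for layer in layers:
        y = np.maximum(dilated_causal_conv(h, layer["w"], layer["b"], layer["dilation"]), 0.0)
        h = y + h @ layer["proj"]
    return h

def gru_step(x_t, h, p):
    """One GRU update (reset gate applied to h before the recurrent matrix)."""
    z = sigmoid(x_t @ p["Wz"] + h @ p["Uz"] + p["bz"])
    r = sigmoid(x_t @ p["Wr"] + h @ p["Ur"] + p["br"])
    n = np.tanh(x_t @ p["Wn"] + (r * h) @ p["Un"] + p["bn"])
    return (1 - z) * n + z * h

def bgru_encode(x, fwd, bwd, hidden):
    """Run a forward and a backward GRU over time and concatenate their states."""
    out_f, out_b = np.zeros((x.shape[0], hidden)), np.zeros((x.shape[0], hidden))
    hf, hb = np.zeros(hidden), np.zeros(hidden)
    for t in range(x.shape[0]):
        hf = gru_step(x[t], hf, fwd)
        out_f[t] = hf
    for t in reversed(range(x.shape[0])):
        hb = gru_step(x[t], hb, bwd)
        out_b[t] = hb
    return np.concatenate([out_f, out_b], axis=1)  # (T, 2H)

def init_gru(d_in, hidden):
    p = {}
    for g in "zrn":
        p["W" + g] = rng.normal(0, 0.1, (d_in, hidden))
        p["U" + g] = rng.normal(0, 0.1, (hidden, hidden))
        p["b" + g] = np.zeros(hidden)
    return p

def build_params():
    tcn, d_in = [], D
    for dilation in (1, 2, 4):
        tcn.append({"w": rng.normal(0, 0.1, (3, d_in, H)), "b": np.zeros(H),
                    "proj": rng.normal(0, 0.1, (d_in, H)), "dilation": dilation})
        d_in = H
    return {"tcn": tcn, "tcn_head": rng.normal(0, 0.1, (H, V)),
            "gru_f": init_gru(D, H), "gru_b": init_gru(D, H),
            "gru_head": rng.normal(0, 0.1, (2 * H, V)),
            "fusion": rng.normal(0, 0.1, (3 * H, V))}

def forward(x, P):
    h_tcn = tcn_encode(x, P["tcn"])                    # (T, H) TCN branch features
    h_gru = bgru_encode(x, P["gru_f"], P["gru_b"], H)  # (T, 2H) BGRU branch features
    p_tcn = softmax(h_tcn @ P["tcn_head"])             # per-step word probabilities, TCN
    p_gru = softmax(h_gru @ P["gru_head"])             # per-step word probabilities, BGRU
    fused = np.concatenate([h_tcn, h_gru], axis=1)     # splice the intermediate features
    p_fused = softmax(fused @ P["fusion"])             # hypothetical FL: one linear layer
    return p_tcn, p_gru, p_fused

features = rng.normal(size=(T, D))  # stand-in for preprocessed per-frame video features
P = build_params()
p_tcn, p_gru, p_fused = forward(features, P)
print(p_tcn.shape, p_gru.shape, p_fused.shape)
```

Each branch yields a `(T, V)` matrix of per-time-step word probabilities, and the fusion input is the concatenation of the two branches' intermediate features. Left-only padding keeps the TCN causal, so changing a later frame cannot affect earlier TCN outputs. The BGRU, by contrast, sees both past and future context at every step.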
