A method for identifying and locating fruits to be picked based on deep target detection

A target detection and fruit identification technology applied to identifying and locating fruits to be picked. It addresses problems such as feature-extraction algorithms that lack robustness and images with uneven illumination. It overcomes insufficient feature representation and poor robustness, and it achieves effective and reliable identification and positioning.


AI Technical Summary

Problems solved by technology

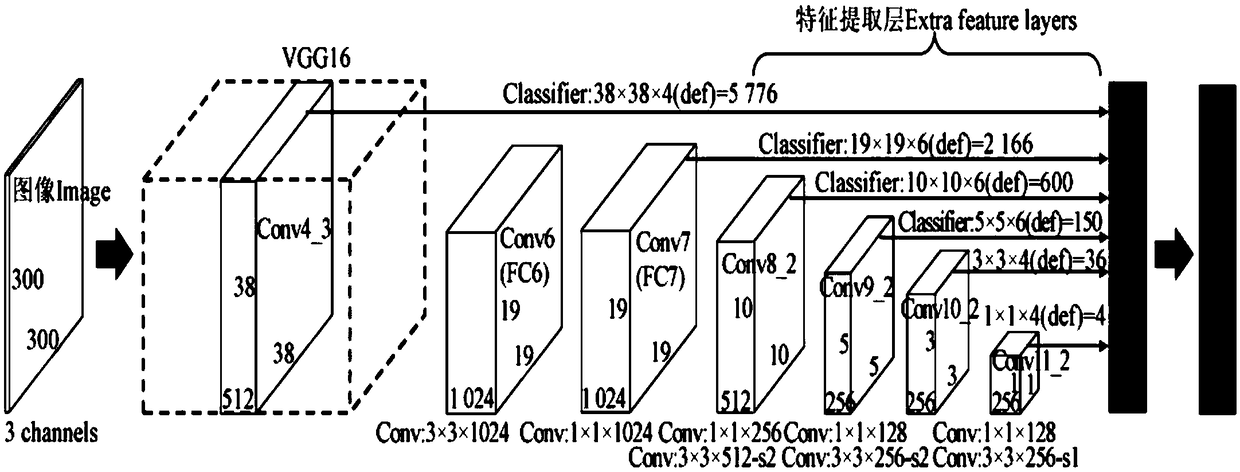

Method used

Image

Examples

Embodiment approach

[0036] The specific implementation is as follows:

[0037] Step 1 Image acquisition

[0038] A Canon digital camera is used to collect images of apples in natural growing scenes. During shooting, the camera is held at roughly the height of an adult (about 1.8 meters), and the angle of the lens is random. Throughout sample capture, the apple target is kept in the middle of the image and in sharp focus, and the focal length is held constant.

[0039] Step 2 Image annotation

[0040] The apples in the 1,000 training-set images are annotated manually: for each apple, its ground-truth minimum circumscribed (bounding) rectangle is marked, and the coordinates of the rectangle's upper-left and lower-right vertices are recorded.
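Because each label is stored as just two vertices, it describes an axis-aligned rectangle. The following is a minimal sketch of such a record, assuming pixel coordinates with y increasing downward. The names `AppleBox`, `min_bounding_box` and `to_normalized_center` are hypothetical, and so is the idea of deriving the box from outline points clicked around the apple. The normalized center/width/height export is only one common detector input format and is not stated in the source.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class AppleBox:
    """Axis-aligned box stored by its upper-left (x1, y1) and lower-right (x2, y2) vertices, in pixels."""
    x1: float
    y1: float
    x2: float
    y2: float

    def __post_init__(self) -> None:
        # Image coordinates: x grows to the right and y grows downward,
        # so the upper-left vertex must have the smaller x and y.
        if self.x2 < self.x1 or self.y2 < self.y1:
            raise ValueError("lower-right vertex must lie below and to the right of the upper-left vertex")

    @property
    def width(self) -> float:
        return self.x2 - self.x1

    @property
    def height(self) -> float:
        return self.y2 - self.y1


def min_bounding_box(points: Iterable[Tuple[float, float]]) -> AppleBox:
    """Smallest axis-aligned rectangle enclosing the given (x, y) outline points (hypothetical input)."""
    pts = list(points)
    if not pts:
        raise ValueError("need at least one point")
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return AppleBox(min(xs), min(ys), max(xs), max(ys))


def to_normalized_center(box: AppleBox, img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """Convert to (cx, cy, w, h) normalized by image size; an assumed export format, not from the source."""
    cx = (box.x1 + box.x2) / 2 / img_w
    cy = (box.y1 + box.y2) / 2 / img_h
    return cx, cy, box.width / img_w, box.height / img_h
```

For example, outline points (120, 80), (200, 95), (180, 170) and (110, 150) give a box with upper-left vertex (110, 80) and lower-right vertex (200, 170). Only those two vertices need to be saved.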

[0041] Step 3 Data set preparation

[0042] Of the 2,000 apple images collected in Step 1, 600 were randomly selected as the validation set, 400 were used as the test set, and the remaining 1,000 were ...
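The split can be sketched as a seeded random partition. The source text is truncated at this point, but Step 2 refers to a 1,000-image training set, which matches the images left after the validation and test sets are drawn. The function name, the fixed seed and the file names below are hypothetical. Seeding the shuffle makes the split reproducible, and slicing one shuffled list guarantees that the three sets are disjoint.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(image_ids: Sequence[str], n_val: int = 600, n_test: int = 400,
                  seed: int = 0) -> Dict[str, List[str]]:
    """Randomly partition image IDs into disjoint validation, test and training sets."""
    if n_val + n_test > len(image_ids):
        raise ValueError("validation + test sizes exceed the number of images")
    rng = random.Random(seed)          # local RNG so the split is reproducible
    shuffled = list(image_ids)
    rng.shuffle(shuffled)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]  # whatever remains becomes the training set
    return {"train": train, "val": val, "test": test}


# Hypothetical file names for the 2,000 collected images.
ids = [f"apple_{i:04d}.jpg" for i in range(2000)]
splits = split_dataset(ids, seed=42)
```

With 2,000 images this yields 600 validation, 400 test and 1,000 training images. Only the training images then need the manual annotation described in Step 2.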

