Neural machine translation inference acceleration method based on attention mechanism

A machine translation and attention technology, applied in the field of neural machine translation inference acceleration, that addresses the problem that decoding speed struggles to meet real-time response requirements.

Embodiment Construction

[0036] The present invention is further described below with reference to the accompanying drawings.

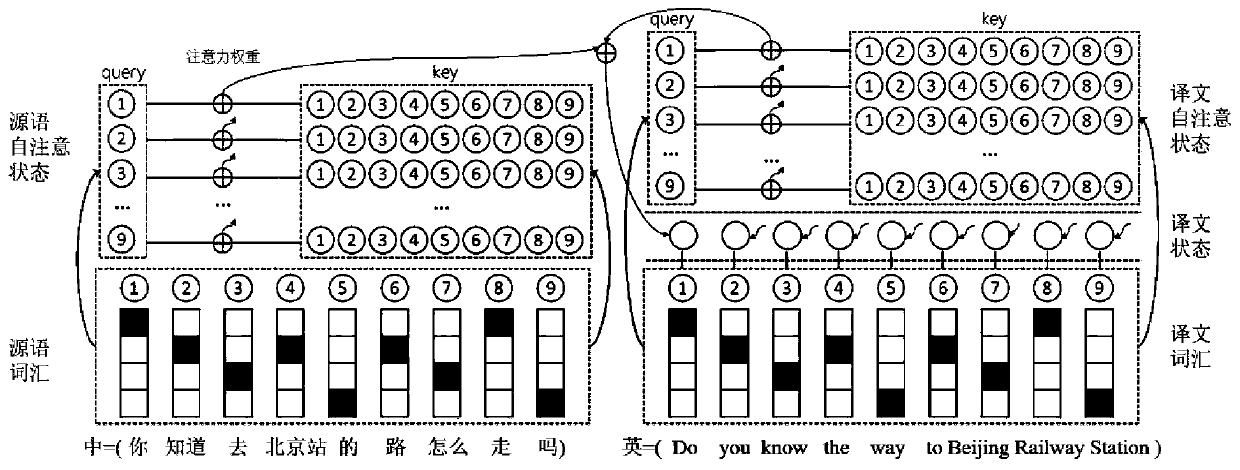

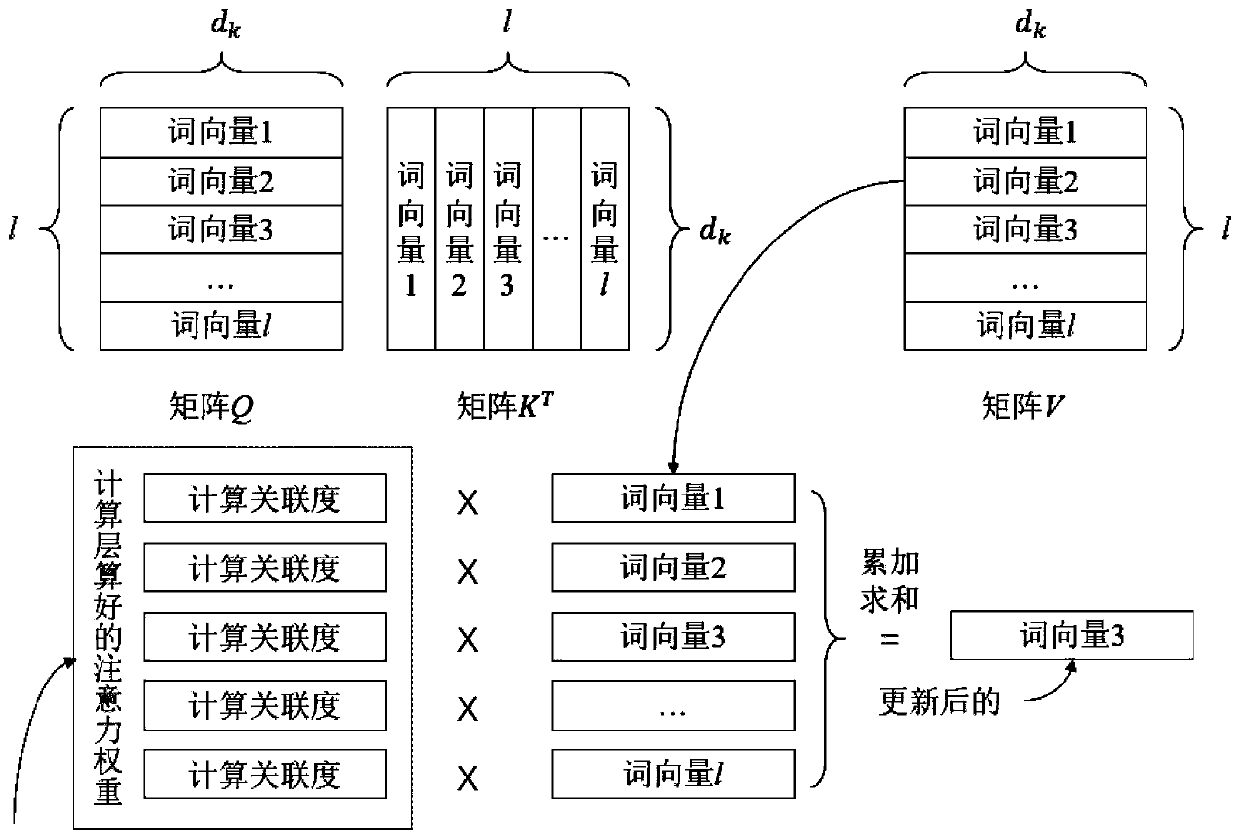

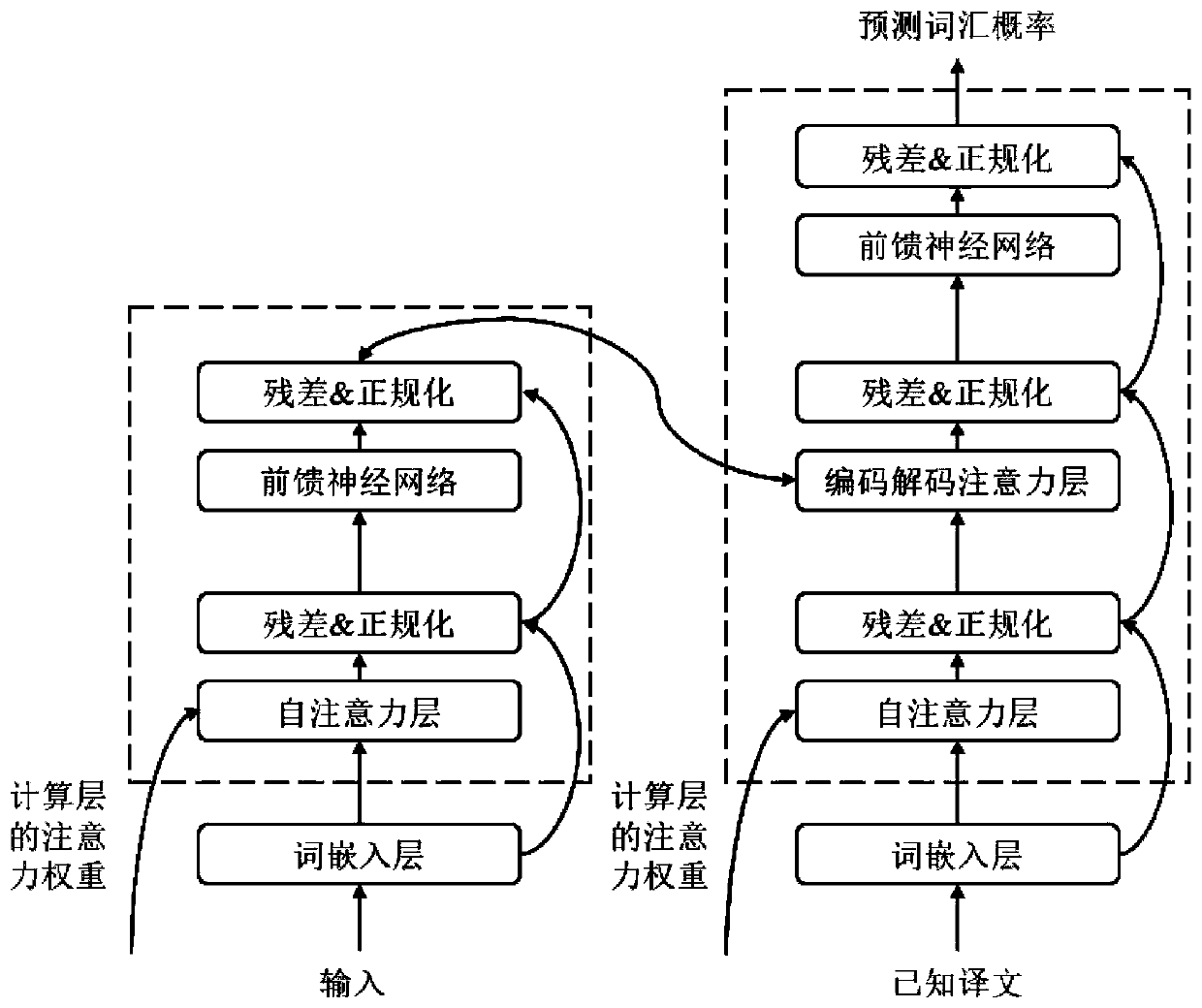

[0037] The present invention optimizes the decoding speed of attention-based neural machine translation systems from the perspective of attention sharing. It aims to greatly increase the decoding speed of the translation system at the cost of a small performance loss, striking a balance between performance and speed.
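To make the idea of attention sharing concrete, the following is a minimal sketch, not the method claimed in the patent. It assumes that "sharing" means a layer reuses the attention weights already computed by an earlier layer instead of recomputing them. The names `decode_step` and `share_from` are hypothetical, and the query is held fixed across layers purely for brevity.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def apply_weights(weights, values):
    """Weighted sum of the value vectors."""
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def decode_step(query, layers, share_from):
    """Run one decoding step through a stack of attention layers.

    layers: list of (keys, values) pairs, one per layer.
    share_from: hypothetical mapping from a layer index to the earlier
        layer whose attention weights it reuses (an assumption of this sketch).
    Returns the per-layer outputs and how many times weights were computed.
    """
    cache, outputs, computed = {}, [], 0
    for idx, (keys, values) in enumerate(layers):
        if idx in share_from:
            # Reuse cached weights: no dot products or softmax for this layer.
            weights = cache[share_from[idx]]
        else:
            weights = attention_weights(query, keys)
            computed += 1
        cache[idx] = weights
        outputs.append(apply_weights(weights, values))
    return outputs, computed
```

In this sketch, a three-layer stack with `share_from={1: 0, 2: 0}` computes attention weights once rather than three times, which is where the decoding speed-up would come from under these assumptions.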

[0038] The attention-based neural machine translation inference acceleration method of the present invention comprises the following steps:

[0039] 1) Construct a parallel training corpus and a multi-layer attention-based neural machine translation model, use the parallel corpus to generate the machine translation vocabulary, and train the model until convergence to obtain the model parameters;

[0040] 2) Calculate the parameter similarity between any two layers of the decoder...
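The pairwise comparison in step 2 can be sketched as follows. The excerpt does not state which similarity measure is used or which parameters are compared, so this example assumes cosine similarity over flattened per-layer parameter vectors. The function names are hypothetical.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def pairwise_layer_similarity(layer_params):
    """Compare every pair of decoder layers.

    layer_params: list of flat parameter vectors, one per decoder layer.
    Returns a dict mapping (i, j) with i < j to the similarity score.
    """
    return {
        (i, j): cosine_similarity(layer_params[i], layer_params[j])
        for i, j in combinations(range(len(layer_params)), 2)
    }
```

For a decoder with N layers this yields N(N-1)/2 scores. How those scores are used afterwards is not shown in this excerpt.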
