Image recognition method, device, terminal equipment and readable storage medium

An image recognition technology, applied in the field of image recognition, that addresses problems such as a prolonged development cycle for terminal equipment, large amounts of training data, and long training times.

Examples

Embodiment 1

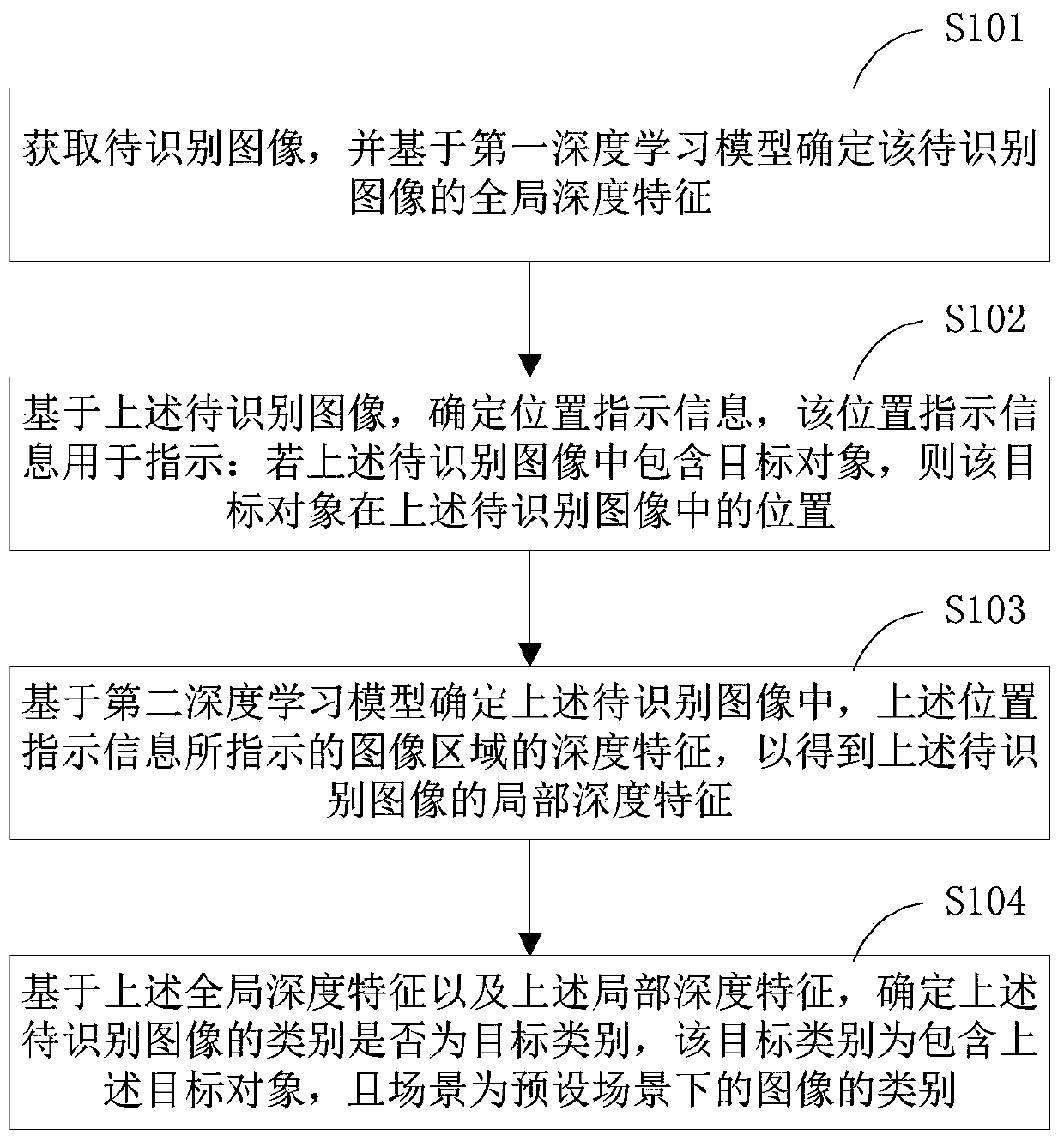

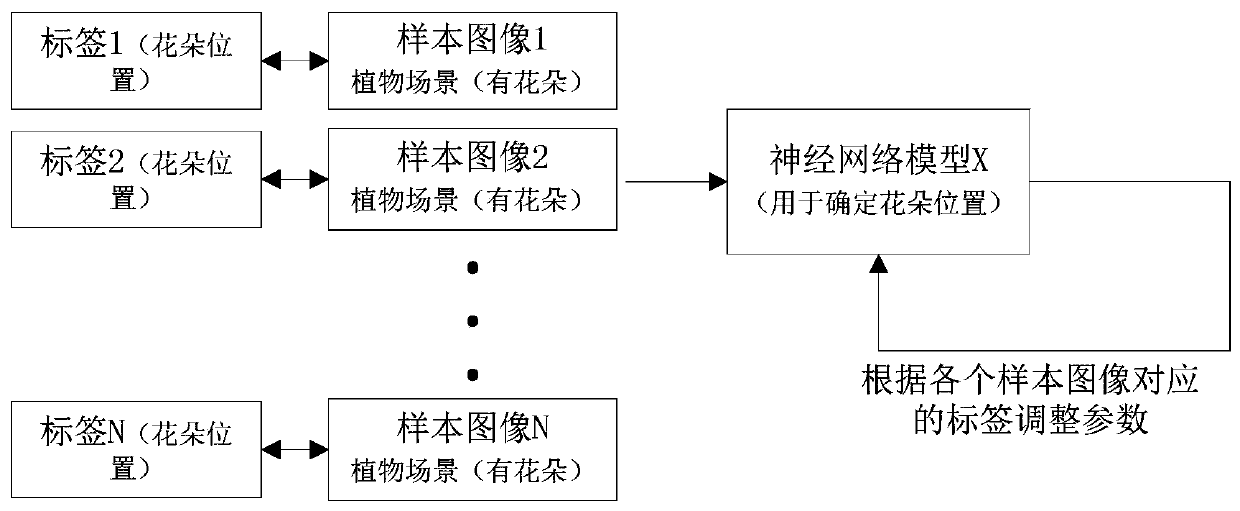

[0037] The image recognition method provided by Embodiment 1 of this application is described below with reference to Figure 1. The method includes:

[0038] In step S101, the image to be recognized is acquired, and the global depth feature of the image to be recognized is determined based on the first deep learning model;

[0039] At present, a convolutional neural network (CNN) model is usually used to learn image features: the entire image is input into the CNN model, and the global depth feature of the image output by the CNN model is obtained. Commonly used CNN models include the AlexNet, VGGNet, Google Inception Net, and ResNet models. The specific model architectures are prior art and are not described again here.

[0040] In step S101, an AlexNet, VGGNet, Google Inception Net, or ResNet model commonly used in the prior art can be used to obt...
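The idea of "whole image in, fixed-length global feature out" can be illustrated with a toy sketch. This is not the first deep learning model of the application and not any of the named architectures: it assumes a single convolution layer with ReLU followed by global average pooling, and the names `conv2d_valid`, `global_average_pool`, and `global_depth_feature` are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """Slide `kernel` over `image` (no padding, stride 1), then apply ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(max(acc, 0.0))  # ReLU keeps only positive responses
        out.append(row)
    return out


def global_average_pool(feature_map: Image) -> float:
    """Collapse one feature map to a single number by averaging it."""
    values = [v for row in feature_map for v in row]
    return sum(values) / len(values)


def global_depth_feature(image: Image, kernels: List[Image]) -> List[float]:
    """One pooled value per kernel: a fixed-length vector for the whole image."""
    return [global_average_pool(conv2d_valid(image, k)) for k in kernels]


# Toy input: a 3x3 image and two hand-picked 2x2 kernels (illustrative only).
image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
kernels = [[[1.0, 0.0], [0.0, 1.0]], [[-1.0, 0.0], [0.0, -1.0]]]
feature = global_depth_feature(image, kernels)
```

The length of the resulting vector depends only on the number of kernels, not on the image size. A real CNN stacks many such layers with learned kernels.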

Embodiment 2

[0066] Another image recognition method, provided in Embodiment 2 of the present application, is described below with reference to Figure 6. The method includes:

[0067] In step S201, the image to be recognized is acquired, and the global depth feature of the image to be recognized is determined based on the first deep learning model;

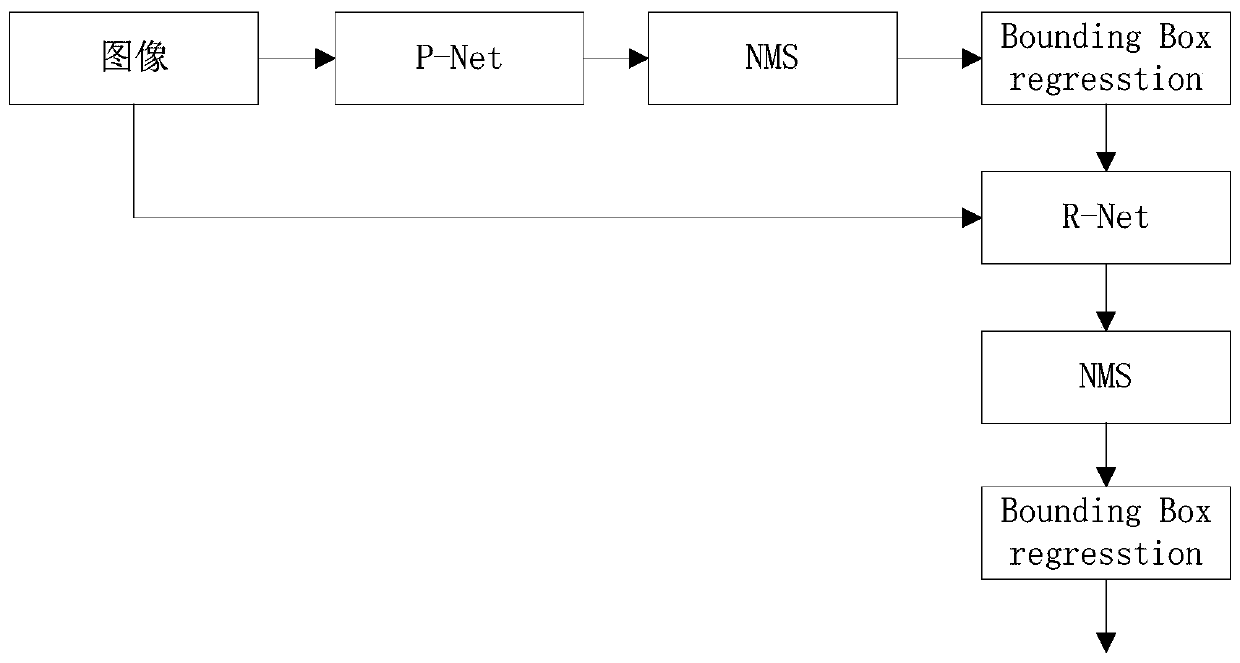

[0068] In step S202, position indication information is determined based on the image to be recognized; if the image to be recognized contains a target object, the position indication information indicates the position of the target object in the image to be recognized;

[0069] In step S203, the depth feature of the image region indicated by the position indication information in the image to be recognized is determined based on the second deep learning model, so as to obtain the local depth feature of the image to be recognized;

[0070] The specific implementation ...
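The data flow of steps S201 to S203 can be sketched as follows. This is a minimal illustration under several assumptions. The position indication information is represented as a `(top, left, height, width)` box. The case where no target object is found is represented as `None`, which the excerpt does not specify. Both "models" are trivial stand-in functions, not deep learning models. All function names are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Image = List[List[float]]
Feature = List[float]
Box = Tuple[int, int, int, int]  # (top, left, height, width); assumed format


def crop(image: Image, box: Box) -> Image:
    """Return the image region indicated by the position information."""
    top, left, height, width = box
    return [row[left:left + width] for row in image[top:top + height]]


def extract_features(
    image: Image,
    first_model: Callable[[Image], Feature],
    locate: Callable[[Image], Optional[Box]],
    second_model: Callable[[Image], Feature],
) -> Tuple[Feature, Optional[Feature]]:
    global_feature = first_model(image)             # S201: whole image
    box = locate(image)                             # S202: position indication
    if box is None:                                 # assumption: no target found
        return global_feature, None
    local_feature = second_model(crop(image, box))  # S203: indicated region only
    return global_feature, local_feature


# Stand-ins for illustration only.
def mean_intensity(image: Image) -> Feature:
    values = [v for row in image for v in row]
    return [sum(values) / len(values)]


def bright_region(image: Image, threshold: float = 0.5) -> Optional[Box]:
    """Bounding box of all pixels above `threshold`, or None if there are none."""
    coords = [
        (r, c)
        for r, row in enumerate(image)
        for c, v in enumerate(row)
        if v > threshold
    ]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    top, left = min(rows), min(cols)
    return (top, left, max(rows) - top + 1, max(cols) - left + 1)


# A 4x4 image with a bright 2x2 "target" in the middle.
image = [[0.0] * 4 for _ in range(4)]
for r in (1, 2):
    for c in (1, 2):
        image[r][c] = 1.0

global_feature, local_feature = extract_features(
    image, mean_intensity, bright_region, mean_intensity
)
```

The global feature summarizes the whole image, while the local feature is computed only from the cropped region, so the two can differ substantially for the same input.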

Embodiment 3

[0086] Embodiment 3 of the present application provides an image recognition device. For ease of description, only the parts relevant to this application are shown. As shown in Figure 7, the recognition device 300 includes:

[0087] A global feature module 301, configured to acquire an image to be recognized, and determine the global depth feature of the image to be recognized based on the first deep learning model;

[0088] A position determination module 302, configured to determine position indication information based on the image to be recognized, where, if the image to be recognized contains a target object, the position indication information indicates the position of the target object in the image to be recognized;

[0089] A local feature module 303, configured to determine, based on a second deep learning model, the depth feature of the image region indicated by the position indication information in the image to be recognized, so as to obtain the...
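One way to picture the modular structure of device 300 is as a container that wires together three interchangeable components. The sketch below is an assumption about how such a device might be organized in software, not the structure claimed in the application. The class name `RecognitionDevice`, the field names, and the dictionary returned by `extract` are all hypothetical, and only modules 301 to 303 are modeled because the remaining description is truncated.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Image = List[List[float]]
Feature = List[float]
Box = Tuple[int, int, int, int]  # assumed (top, left, height, width) format


@dataclass
class RecognitionDevice:
    """Hypothetical software counterpart of modules 301, 302, and 303."""

    global_feature_module: Callable[[Image], Feature]      # module 301
    position_module: Callable[[Image], Optional[Box]]      # module 302
    local_feature_module: Callable[[Image, Box], Feature]  # module 303

    def extract(self, image: Image) -> Dict[str, object]:
        position = self.position_module(image)
        local = (
            self.local_feature_module(image, position)
            if position is not None  # assumption: skip 303 when no target
            else None
        )
        return {
            "global": self.global_feature_module(image),
            "position": position,
            "local": local,
        }


# Trivial stand-in modules, for illustration only.
device = RecognitionDevice(
    global_feature_module=lambda img: [float(len(img))],
    position_module=lambda img: (0, 0, 1, 1) if img[0][0] > 0 else None,
    local_feature_module=lambda img, box: [img[box[0]][box[1]]],
)
result = device.extract([[2.0]])
```

Because each module is passed in as a callable, any one of them could be replaced (for example, with a different second deep learning model) without touching the others.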
