Cross-view person identification method based on shape and posture with wearable devices

The invention applies wearable-device and person recognition technology to the field of cross-view person identification (CVPI). It addresses problems that reduce CVPI accuracy, such as inaccurate pose estimation and neglected pose consistency, and achieves the effects of improving accuracy, handling occlusion, and improving performance.



Embodiment Construction

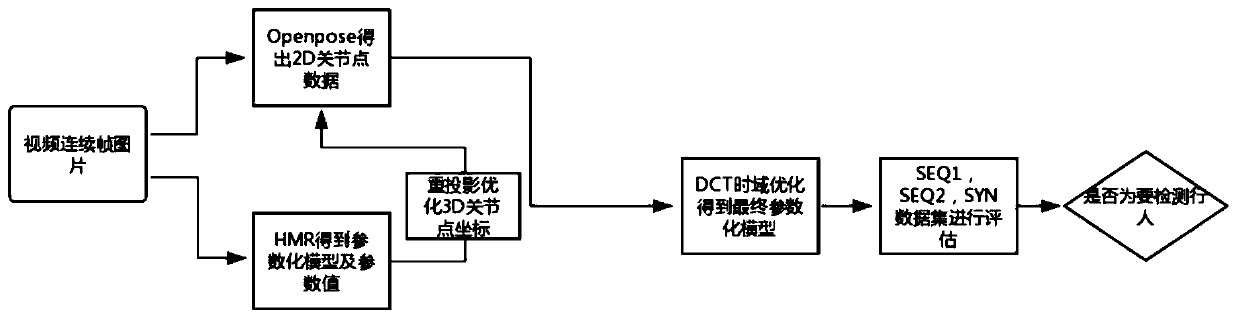

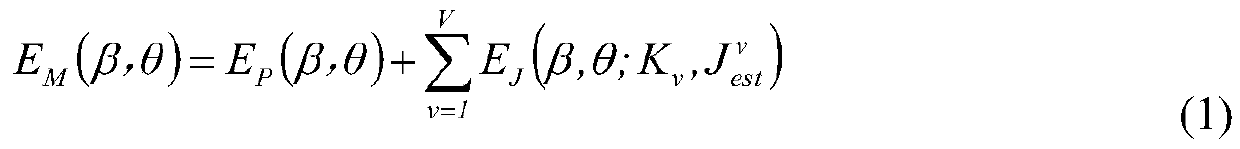

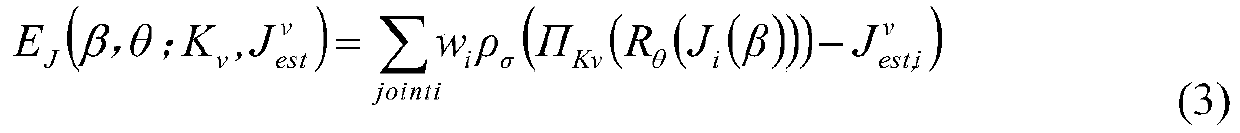

[0032] To overcome the shortcomings of the existing technology, a higher-accuracy CVPI method is proposed that uses human body posture information to improve person re-identification. To this end, the solution adopted by the present invention is a cross-view person recognition method based on re-optimization of shape and posture. The overall process of cross-view person recognition is as follows: given the video frame image of the pedestrian to be detected in camera No. For the video obtained by the camera, for all the video frames of the first two, the parameterized human body model and the 2D joint positions corresponding to each frame image are detected. The 2D joint positions are used to optimize the SMPL parameterized human body model (the 3D joint reprojection optimization operation), and the final parameterized human body model is then obtained through the 3D joint reprojection optimization op...
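The reprojection idea in the paragraph above can be illustrated with a minimal sketch. It is not the patented procedure: it assumes a weak-perspective camera and fits only a scale and a 2D translation so that projected 3D joints match the detected 2D joints, whereas the described method optimizes the SMPL model itself. The function names `project`, `reprojection_error`, and `fit_scale_translation`, and the sample joint values, are hypothetical.

```python
# Sketch only: weak-perspective reprojection fitting (an assumption, not the
# patent's exact optimization). Joints are plain tuples; no SMPL model is used.

def project(joints_3d, scale, trans):
    """Project 3D joints (x, y, z) to 2D as (scale * x + tx, scale * y + ty)."""
    return [(scale * x + trans[0], scale * y + trans[1]) for x, y, _z in joints_3d]


def reprojection_error(joints_3d, joints_2d, scale, trans):
    """Mean squared distance between projected 3D joints and detected 2D joints."""
    projected = project(joints_3d, scale, trans)
    total = sum((pu - u) ** 2 + (pv - v) ** 2
                for (pu, pv), (u, v) in zip(projected, joints_2d))
    return total / len(joints_2d)


def fit_scale_translation(joints_3d, joints_2d):
    """Closed-form least-squares scale and translation minimizing the error."""
    n = len(joints_3d)
    # Centroids of the 3D (x, y) coordinates and of the 2D detections.
    mx = sum(p[0] for p in joints_3d) / n
    my = sum(p[1] for p in joints_3d) / n
    mu = sum(q[0] for q in joints_2d) / n
    mv = sum(q[1] for q in joints_2d) / n
    # Optimal scale is the ratio of cross-covariance to variance after centering.
    num = sum((p[0] - mx) * (q[0] - mu) + (p[1] - my) * (q[1] - mv)
              for p, q in zip(joints_3d, joints_2d))
    den = sum((p[0] - mx) ** 2 + (p[1] - my) ** 2 for p in joints_3d)
    scale = num / den
    # Optimal translation aligns the two centroids under that scale.
    trans = (mu - scale * mx, mv - scale * my)
    return scale, trans


# Hypothetical data: four 3D joints and their detected 2D positions.
example_joints_3d = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0), (1.0, 1.0, 2.5)]
example_joints_2d = project(example_joints_3d, 2.0, (10.0, -3.0))
fitted_scale, fitted_trans = fit_scale_translation(example_joints_3d, example_joints_2d)
```

In a fuller pipeline the same reprojection error would serve as the objective while the body model's pose and shape parameters are updated, typically with an iterative optimizer; the closed-form fit here only shows how detected 2D joints constrain the 3D estimate.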

