An Action Recognition Method Based on Two-Stream Convolutional Attention

The invention applies attention and convolution techniques in the computer field. It addresses problems in existing methods, such as not considering the fusion of spatial feature and motion feature information, and not accounting for differing degrees of importance or for differences in key information. Its effects are to alleviate the lack of time-series information, increase diversity, and enrich the amount of feature information.


Embodiment Construction

[0038] The present invention is further described below with reference to the accompanying drawing.

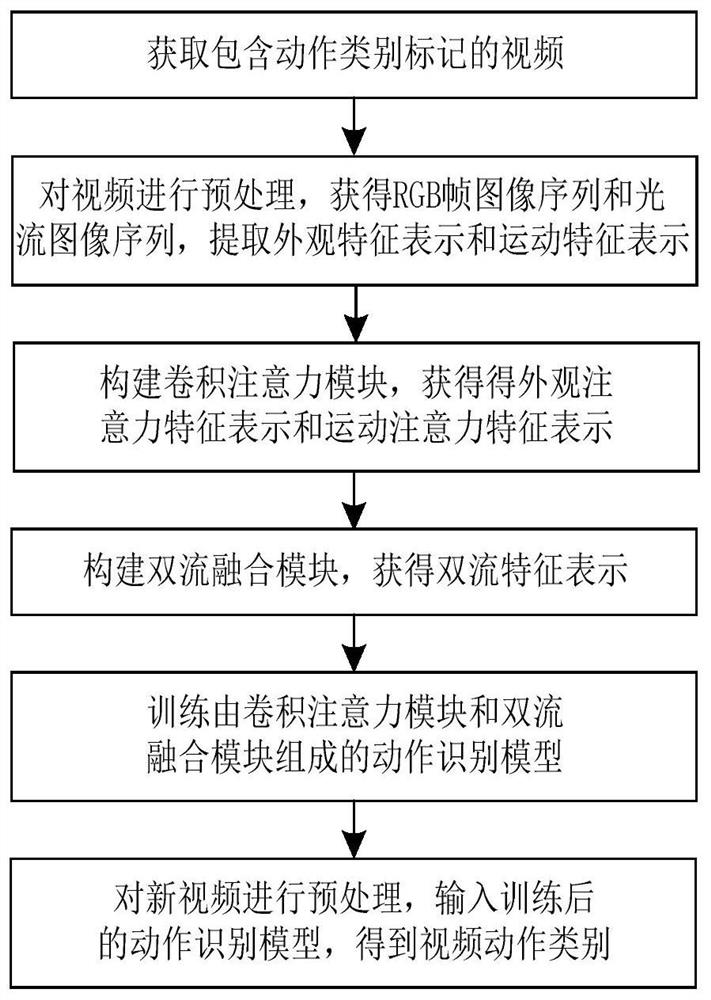

[0039] The action recognition method based on two-stream convolutional attention proceeds as follows. First, the given video is preprocessed, and an appearance feature representation and a motion feature representation are extracted. Next, the two feature representations are input into the convolutional attention module to capture the appearance attention feature representation and the motion attention feature representation of the key content of the video. The two attention feature representations are then fused with each other through the two-stream fusion module to obtain a two-stream feature representation that combines appearance and motion information. Finally, the two-stream feature representation is used to determine the action category of the video content. The method uses the convolutional attention mechanism to capture the latent patterns of video actions and to effectively characterize the temporal relation...
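The four stages described above (feature extraction, convolutional attention, two-stream fusion, classification) can be illustrated with a minimal sketch in plain Python. It is not the patented implementation: the excerpt does not specify the internals of the modules, so everything here is an assumption made for illustration. In particular, `conv_attention` scores frames with a hypothetical 1-D temporal convolution followed by a softmax, `fuse` substitutes simple concatenation for the fusion module, and `classify` is a plain linear layer. The per-frame feature vectors are assumed to have been extracted already (for example from RGB frames and optical flow).

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def conv_attention(features, kernel):
    """Toy convolutional attention over time (illustrative assumption).

    features: list of T per-frame vectors, each of length D.
    kernel:   odd-length list of 1-D convolution weights (hypothetical).
    Returns (attended vector of length D, attention weights of length T).
    """
    num_frames = len(features)
    half = len(kernel) // 2
    # Summarise each frame by the mean of its feature vector.
    summary = [sum(f) / len(f) for f in features]
    # Zero-padded 1-D convolution over time gives one score per frame.
    scores = []
    for t in range(num_frames):
        s = 0.0
        for k, w in enumerate(kernel):
            idx = t + k - half
            if 0 <= idx < num_frames:
                s += w * summary[idx]
        scores.append(s)
    weights = softmax(scores)
    dim = len(features[0])
    # Attention-weighted sum of the frame features.
    attended = [
        sum(weights[t] * features[t][d] for t in range(num_frames))
        for d in range(dim)
    ]
    return attended, weights


def fuse(appearance, motion):
    """Stand-in for the fusion module: concatenate the two streams."""
    return list(appearance) + list(motion)


def classify(fused, weight_rows, bias):
    """Linear classifier: returns (predicted class index, probabilities)."""
    logits = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weight_rows, bias)
    ]
    probs = softmax(logits)
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, probs


# Hypothetical inputs: 4 frames, 2-dimensional features per stream.
appearance_feats = [[0.1, 0.3], [0.4, 0.2], [0.9, 0.7], [0.2, 0.1]]
motion_feats = [[0.0, 0.1], [0.5, 0.6], [0.8, 0.9], [0.1, 0.0]]
kernel = [0.25, 0.5, 0.25]

app_att, _ = conv_attention(appearance_feats, kernel)
mot_att, _ = conv_attention(motion_feats, kernel)
two_stream = fuse(app_att, mot_att)

# Hypothetical classifier parameters for 3 action classes.
weights = [[0.2, -0.1, 0.4, 0.3], [-0.3, 0.5, 0.1, -0.2], [0.1, 0.1, -0.4, 0.2]]
bias = [0.0, 0.0, 0.0]
label, probs = classify(two_stream, weights, bias)
print(label, probs)
```

In this sketch each stream is attended independently and the streams only interact at the fusion step. A fusion module in which the two attention representations influence each other, as the description suggests, would replace `fuse` without changing the other stages.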
