Video super-resolution method based on temporal attention and a cyclic feedback network

A video super-resolution technique using temporal attention and a feedback network, applied in the field of video processing. It addresses two problems: different pieces of visual information contribute unequally to the super-resolution reconstruction, and the feedback mechanism has not been fully exploited. The method aims to improve both overall video super-resolution quality and the reconstruction of fine detail.


Examples

Embodiment 1

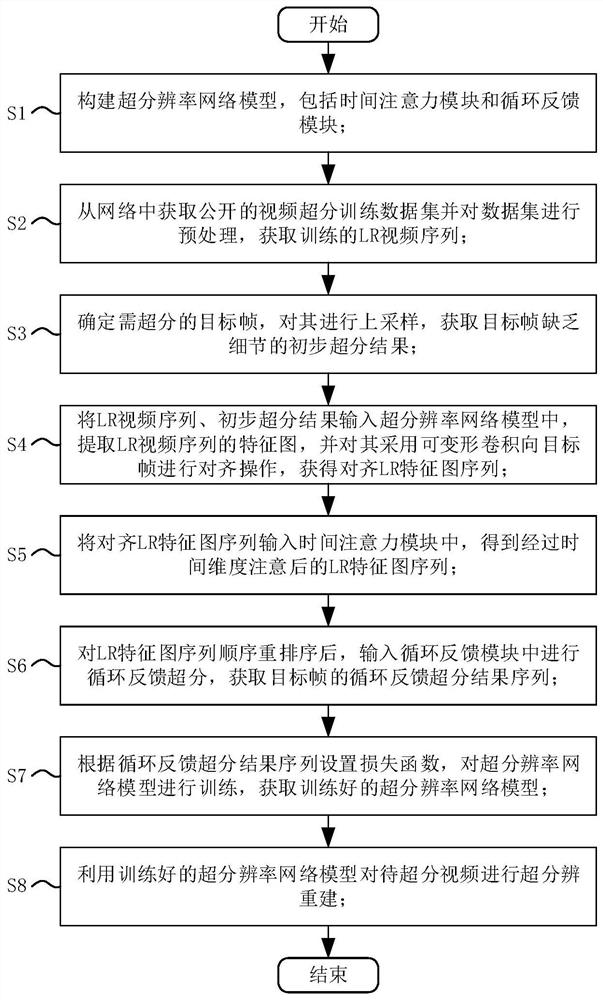

[0064] As shown in Figure 1, a video super-resolution method based on temporal attention and a loop feedback network includes the following steps:

[0065] S1: Build a super-resolution network model, including a temporal attention module and a loop feedback module;
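The text names a temporal attention module but does not describe its internals. As a rough illustration of the general idea (frames contributing unequally to the reconstruction), the sketch below weights each frame's feature vector by a softmax over its dot-product similarity to the target frame's features. The function name `temporal_attention` and the dot-product/softmax scoring are assumptions for illustration, not the patented module; real networks operate on convolutional feature maps rather than flat vectors.

```python
import math
from typing import List, Tuple


def temporal_attention(
    target: List[float], frames: List[List[float]]
) -> Tuple[List[float], List[float]]:
    """Hypothetical generic temporal attention over per-frame feature vectors."""
    # Similarity of each frame's features to the target frame's features
    scores = [sum(t * f for t, f in zip(target, feat)) for feat in frames]
    # Softmax over frames (subtract the max score for numerical stability)
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of frame features: frames similar to the target contribute more
    fused = [
        sum(w * feat[i] for w, feat in zip(weights, frames))
        for i in range(len(target))
    ]
    return weights, fused


if __name__ == "__main__":
    target = [1.0, 0.0]
    frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
    weights, fused = temporal_attention(target, frames)
    print(weights)  # weights ≈ [0.422, 0.155, 0.422]
```

The dissimilar middle frame receives a lower weight, which is the behaviour an attention module is meant to provide; a learned module would compute the scores from trained embeddings instead of raw features.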

[0066] S2: Obtain a public video super-resolution training dataset from the Internet and preprocess it to obtain low-resolution (LR) video sequences for training;

[0067] In this embodiment, videos from the existing public high-resolution Vimeo-90k dataset are selected as the training data and preprocessed.
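The embodiment does not say how the LR sequences are derived from the high-resolution Vimeo-90k frames. A common practice is to downscale each HR frame by a fixed factor (often bicubic downsampling by ×4); the sketch below uses simple block averaging instead and assumes a ×4 factor and grayscale frames stored as nested lists. `downsample_box` and `make_lr_sequence` are hypothetical helpers, not part of the patent.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame as rows of pixel values


def downsample_box(frame: Frame, scale: int = 4) -> Frame:
    """Downscale a frame by averaging non-overlapping scale x scale blocks.

    Trailing rows/columns that do not fill a whole block are cropped.
    """
    h = len(frame) // scale
    w = len(frame[0]) // scale
    out: Frame = []
    for by in range(h):
        row = []
        for bx in range(w):
            block = [
                frame[by * scale + dy][bx * scale + dx]
                for dy in range(scale)
                for dx in range(scale)
            ]
            row.append(sum(block) / (scale * scale))
        out.append(row)
    return out


def make_lr_sequence(hr_frames: List[Frame], scale: int = 4) -> List[Frame]:
    """Apply the same (assumed) degradation to every frame of an HR clip."""
    return [downsample_box(f, scale) for f in hr_frames]
```

Applying the same degradation to every frame keeps the LR clip temporally consistent, which matters when later steps compare neighbouring frames.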

[0068] S3: Determine the target frame to be super-resolved and upsample it to obtain a preliminary super-resolution result of the target frame, which still lacks detail;

[0069] In this embodiment, the training video data consist of 5-frame sequences; the middle frame is selected as the target frame to be super-resolved, and the bicubic interpolation and upsamp...
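Paragraph [0069] is truncated, but its first part (take the middle frame of a 5-frame clip and enlarge it by bicubic interpolation) can be sketched as below. The ×4 scale factor, the Keys cubic kernel with a = -0.5, the half-pixel coordinate mapping and the edge clamping are all assumptions; library resizers make their own choices for these, so results may differ slightly from theirs. `bicubic_upsample` and `preliminary_sr` are hypothetical names.

```python
import math
from typing import List

Frame = List[List[float]]  # grayscale frame as rows of pixel values


def cubic_kernel(x: float, a: float = -0.5) -> float:
    """Keys cubic convolution kernel (a = -0.5 assumed)."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def bicubic_upsample(frame: Frame, scale: int) -> Frame:
    """Enlarge a frame by an integer factor using 4x4 bicubic interpolation."""
    h, w = len(frame), len(frame[0])
    out: Frame = []
    for oy in range(h * scale):
        sy = (oy + 0.5) / scale - 0.5  # source row coordinate (half-pixel centres)
        y0 = math.floor(sy)
        row = []
        for ox in range(w * scale):
            sx = (ox + 0.5) / scale - 0.5  # source column coordinate
            x0 = math.floor(sx)
            acc = 0.0
            for m in range(-1, 3):
                wy = cubic_kernel(sy - (y0 + m))
                yy = min(max(y0 + m, 0), h - 1)  # clamp rows at the border
                for n in range(-1, 3):
                    wx = cubic_kernel(sx - (x0 + n))
                    xx = min(max(x0 + n, 0), w - 1)  # clamp columns at the border
                    acc += wy * wx * frame[yy][xx]
            row.append(acc)
        out.append(row)
    return out


def preliminary_sr(clip: List[Frame], scale: int = 4) -> Frame:
    """Select the middle frame of the clip as the target and bicubic-upsample it."""
    target = clip[len(clip) // 2]
    return bicubic_upsample(target, scale)
```

For a 5-frame clip, `len(clip) // 2` selects index 2, the middle frame. The result is smooth but lacks high-frequency detail, matching the "preliminary super-resolution result" described in S3.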
