30 results about "Human learning" patented technology

Research by Peter Belohlavek on the human learning process as a complex adaptive system showed that the concept an individual holds drives the accommodation process by which new knowledge is assimilated into long-term memory, defining learning as an intrinsically freedom-oriented and active process.

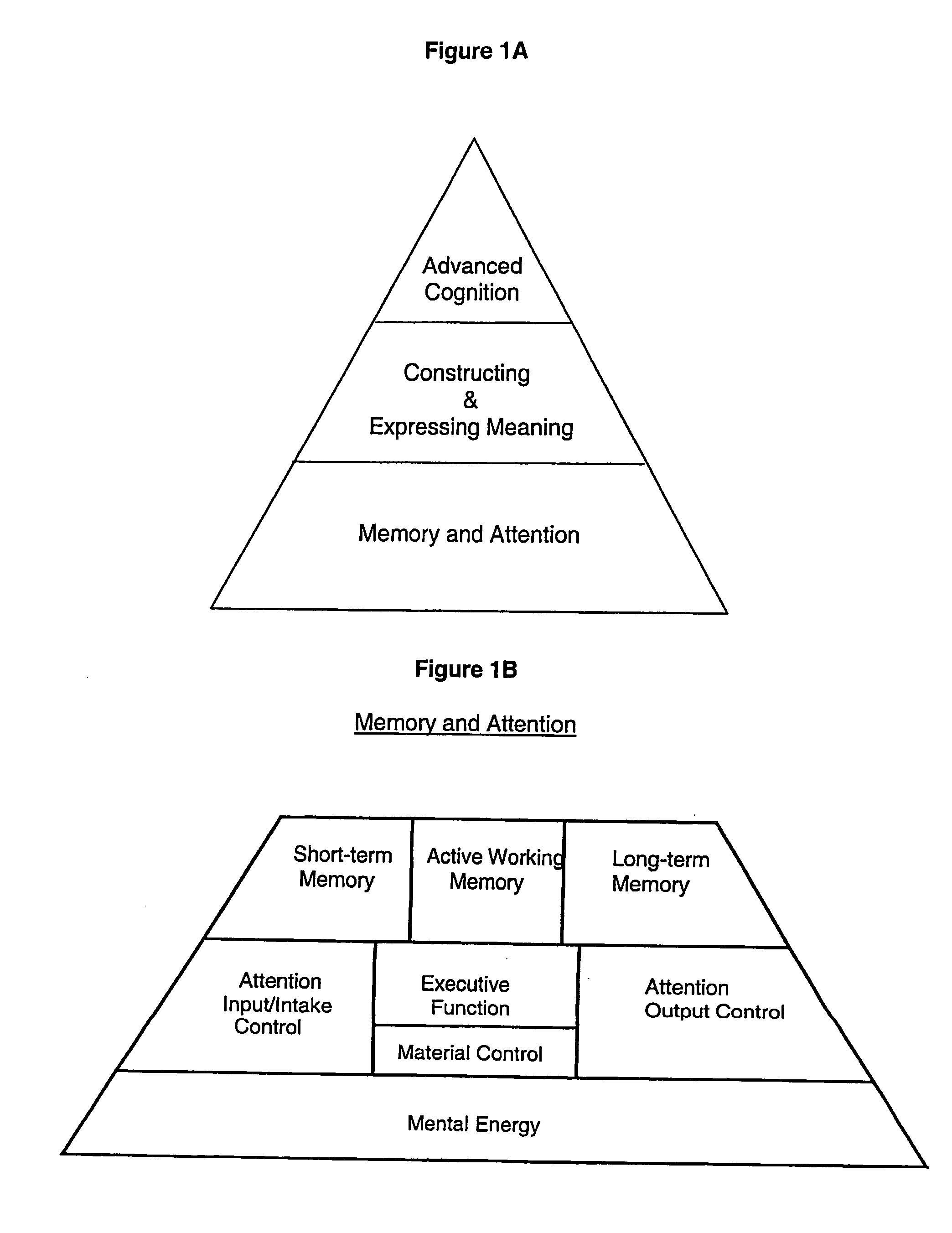

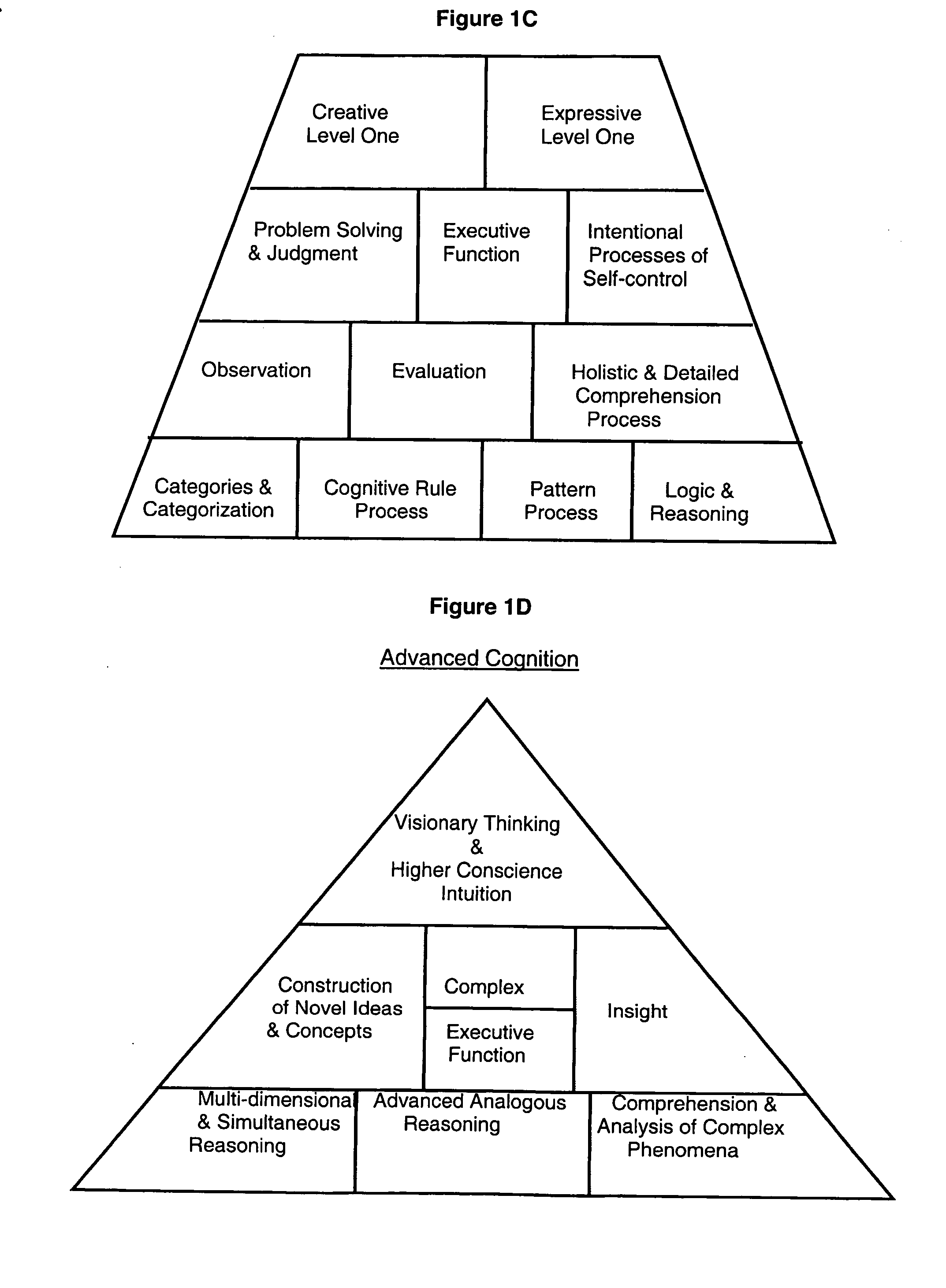

Cognitive learning video game

Inactive | US20080003553A1 | Affordable and accessible | Electrical appliances | Teaching apparatus | Weakness | Human–computer interaction

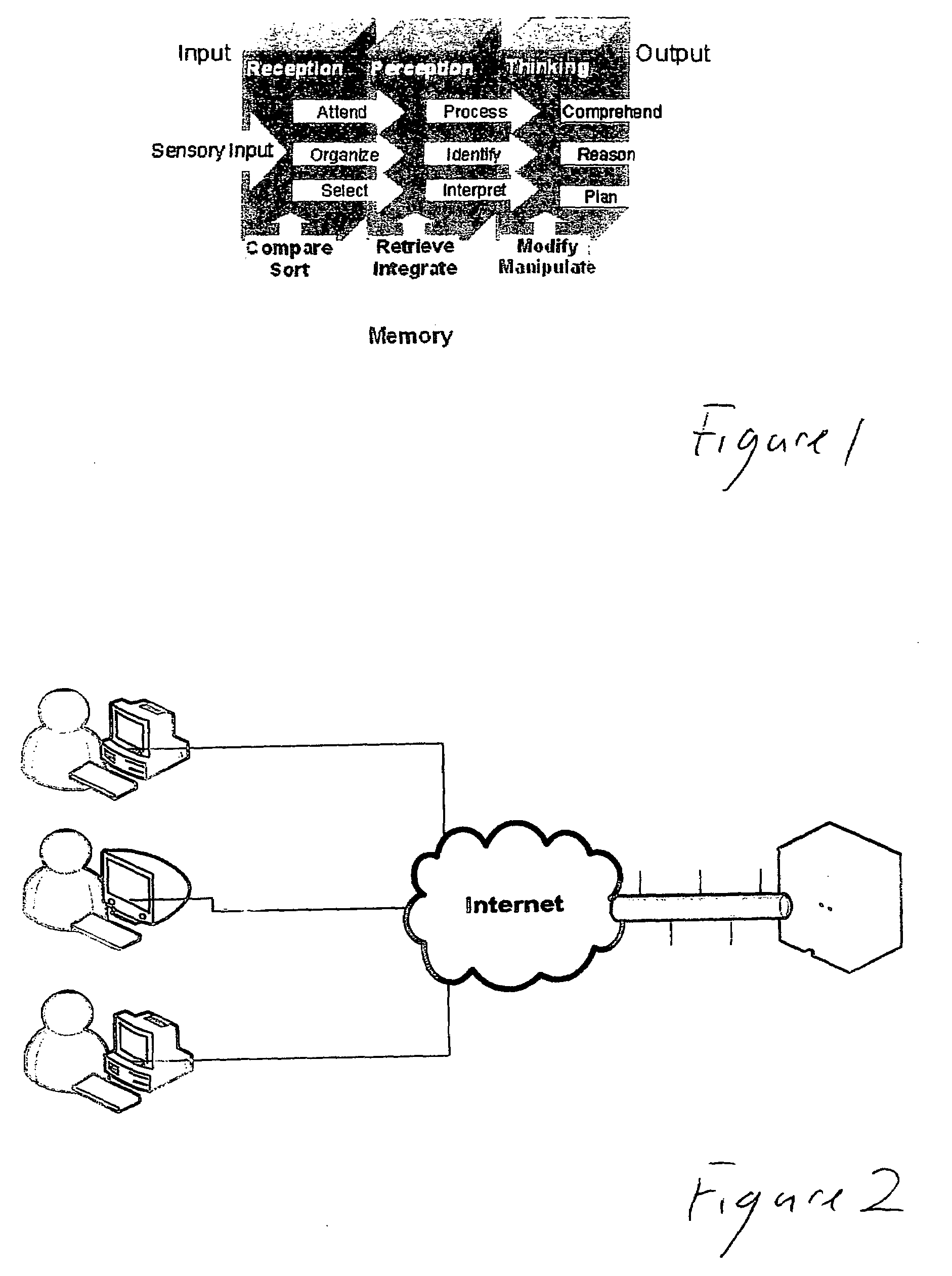

In accordance with the principles of the present invention, a video game is provided having multimedia graphics in an interactive interface. The video game is a cognitive development program made up of a sequence of challenges that address a range of cognitive strengths and weaknesses to provide appropriate levels of challenge and intensity, whereby the ability of the mind to assimilate and process information quickly and accurately is enhanced. The hierarchical nature of human learning is utilized, with the challenges progressing from simpler to more complex neurological processes. The cognitive skills that are developed include cognitive attention skills, cognitive visual processing skills, cognitive sensory integration skills, cognitive auditory processing skills, cognitive memory skills, and cognitive thinking skills.

Owner:THE KARLIN LAW FIRM LLC +1

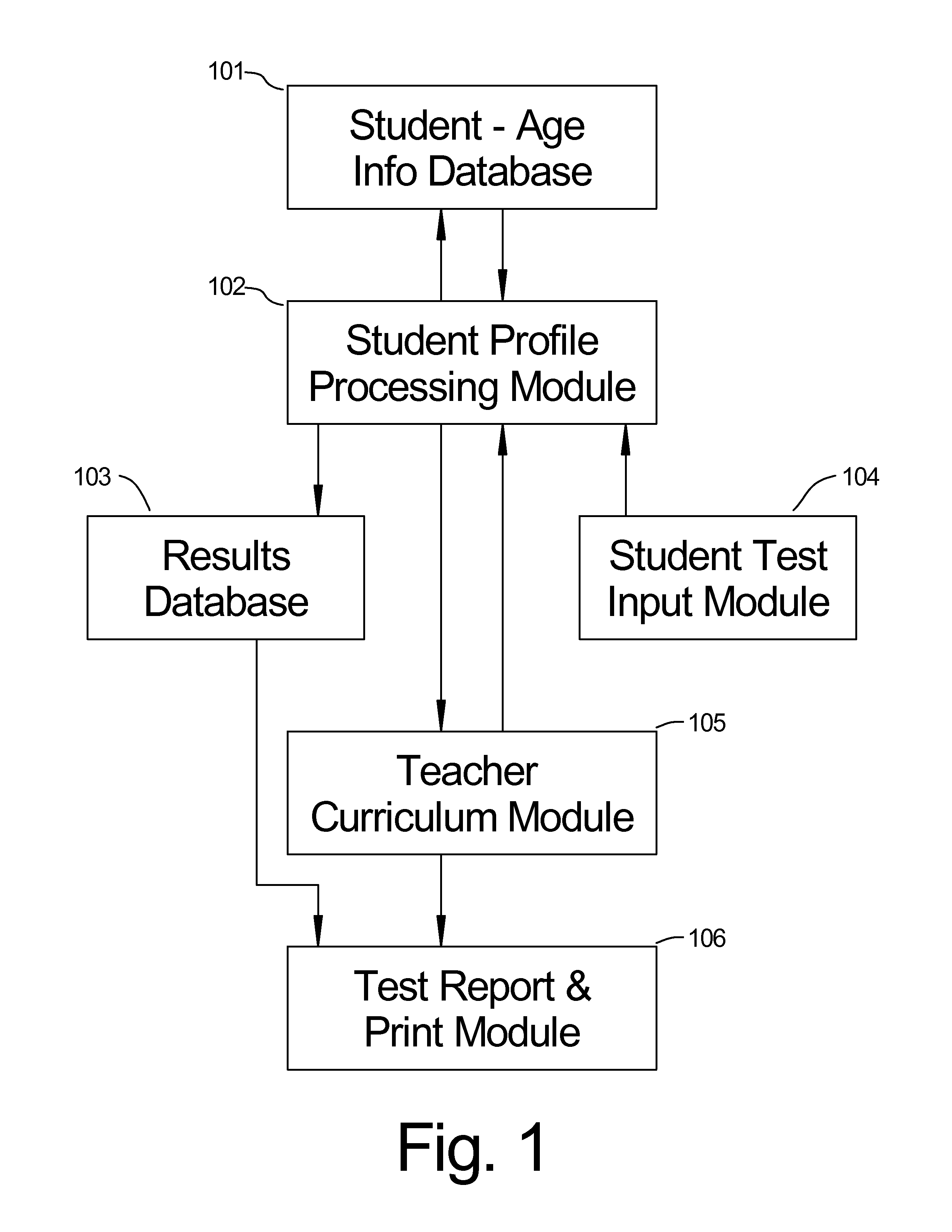

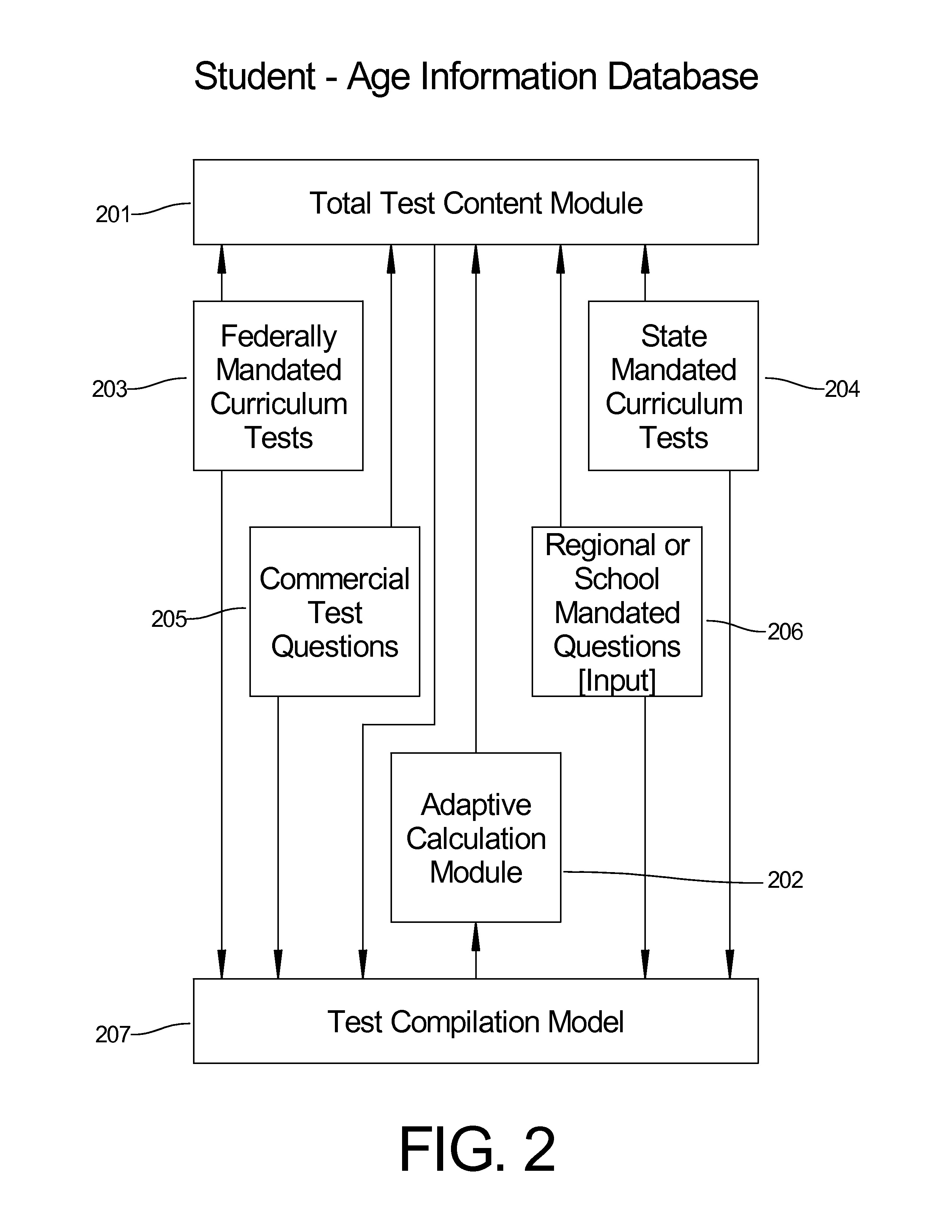

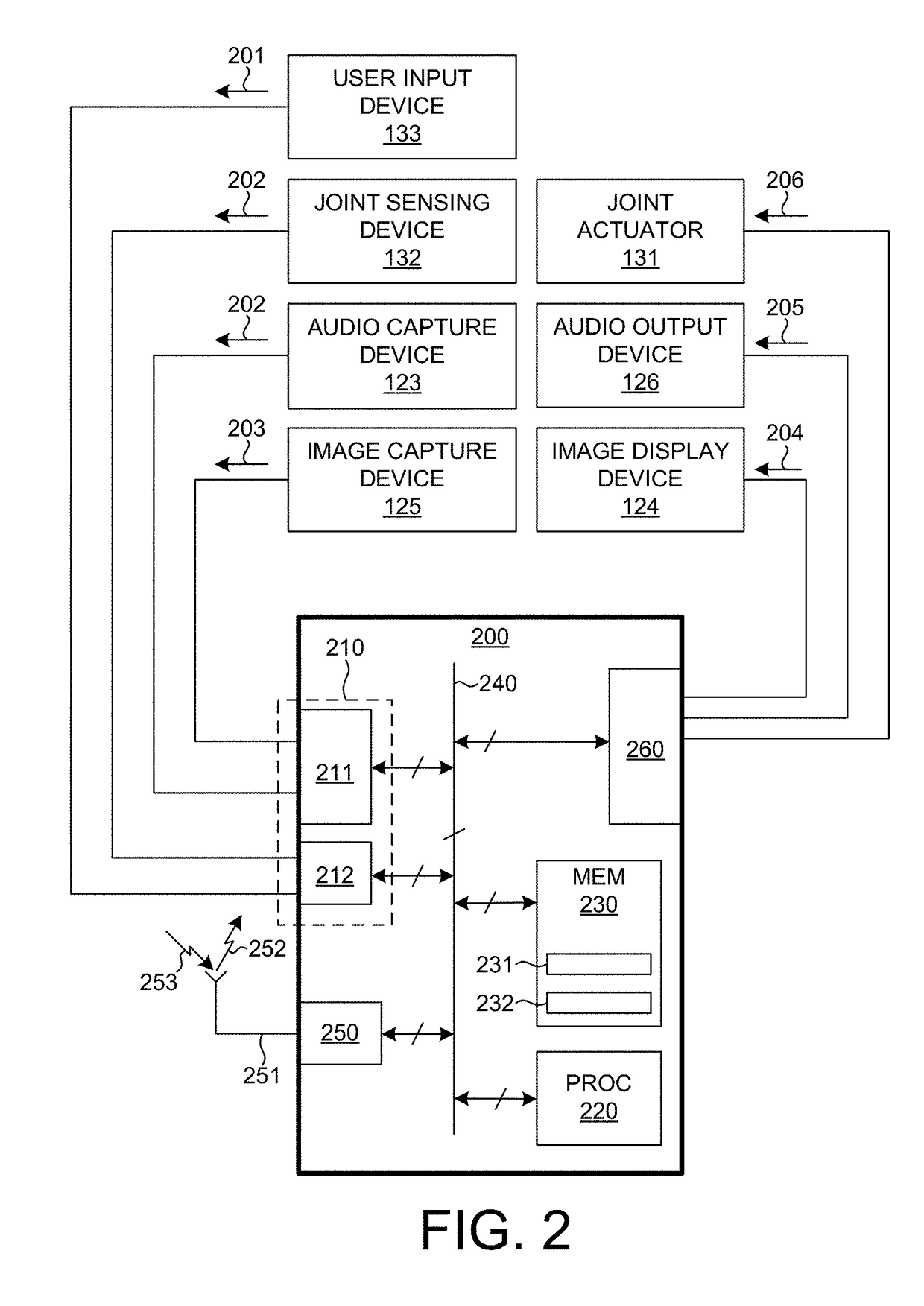

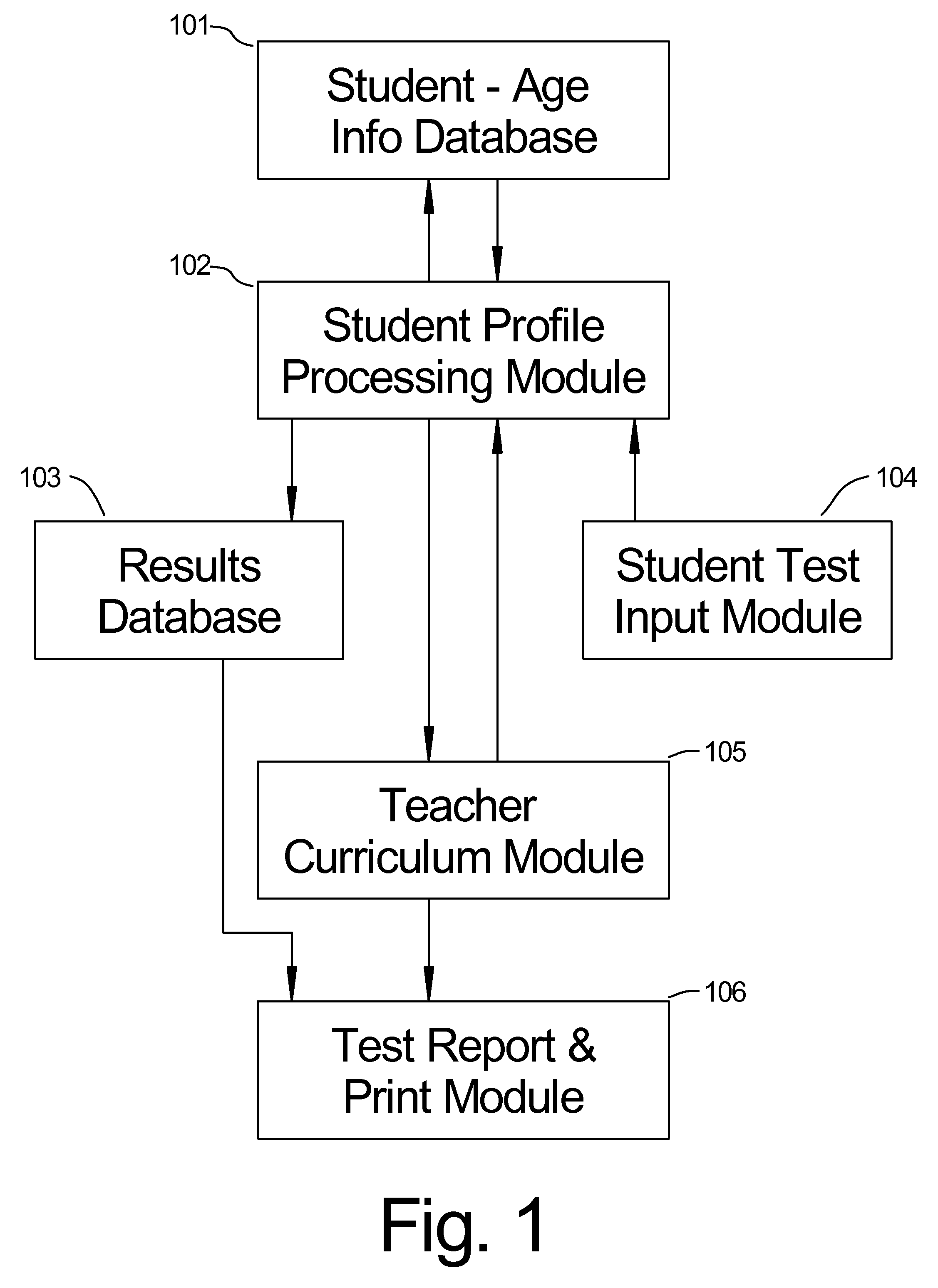

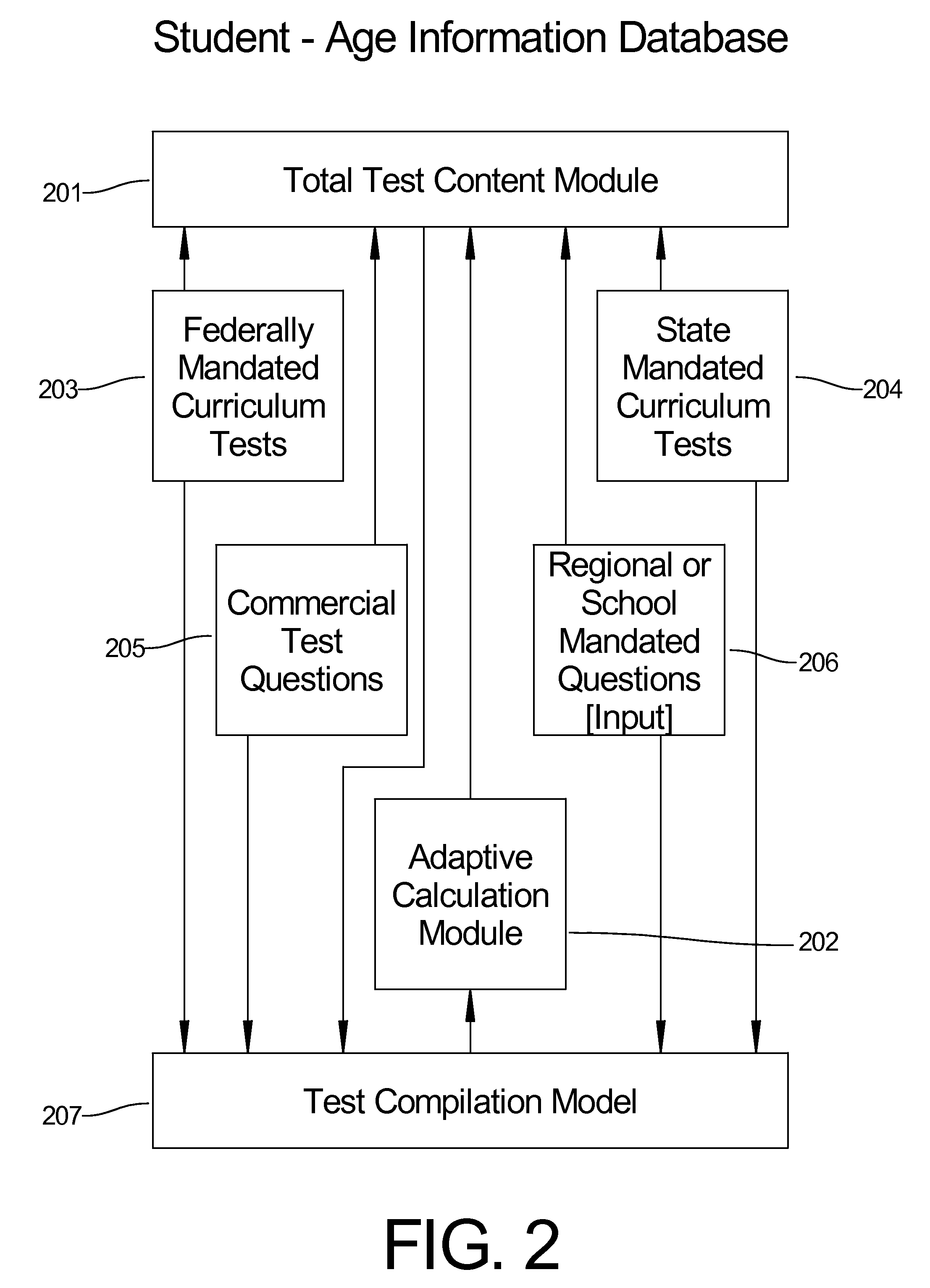

Student profile grading system

Active | US8465288B1 | Measurement performance | Electrical appliances | Teaching apparatus | Measure outcomes | File system

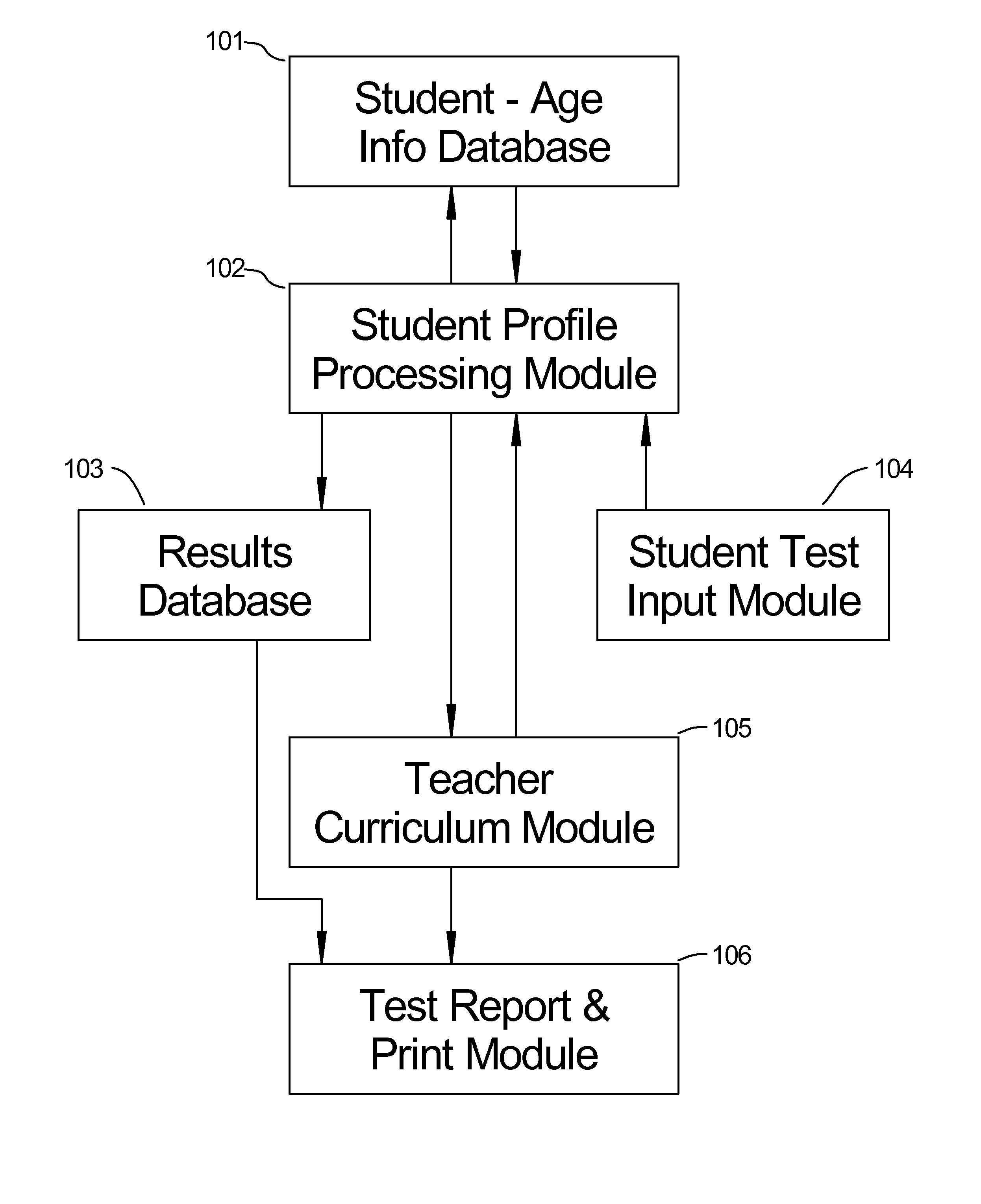

The invention relates to a method and means of grading students. This invention is a software application utilizing profiling techniques that, when combined or embedded with available knowledge on the human learning experience, will individually evaluate each student based on their current ability and capacity to learn, by subject. Students' tests are graded electronically against their personal ability and capability profile. The profile system software is applicable to many learning situations requiring a measured outcome.

Owner:ROERS PATRICK G

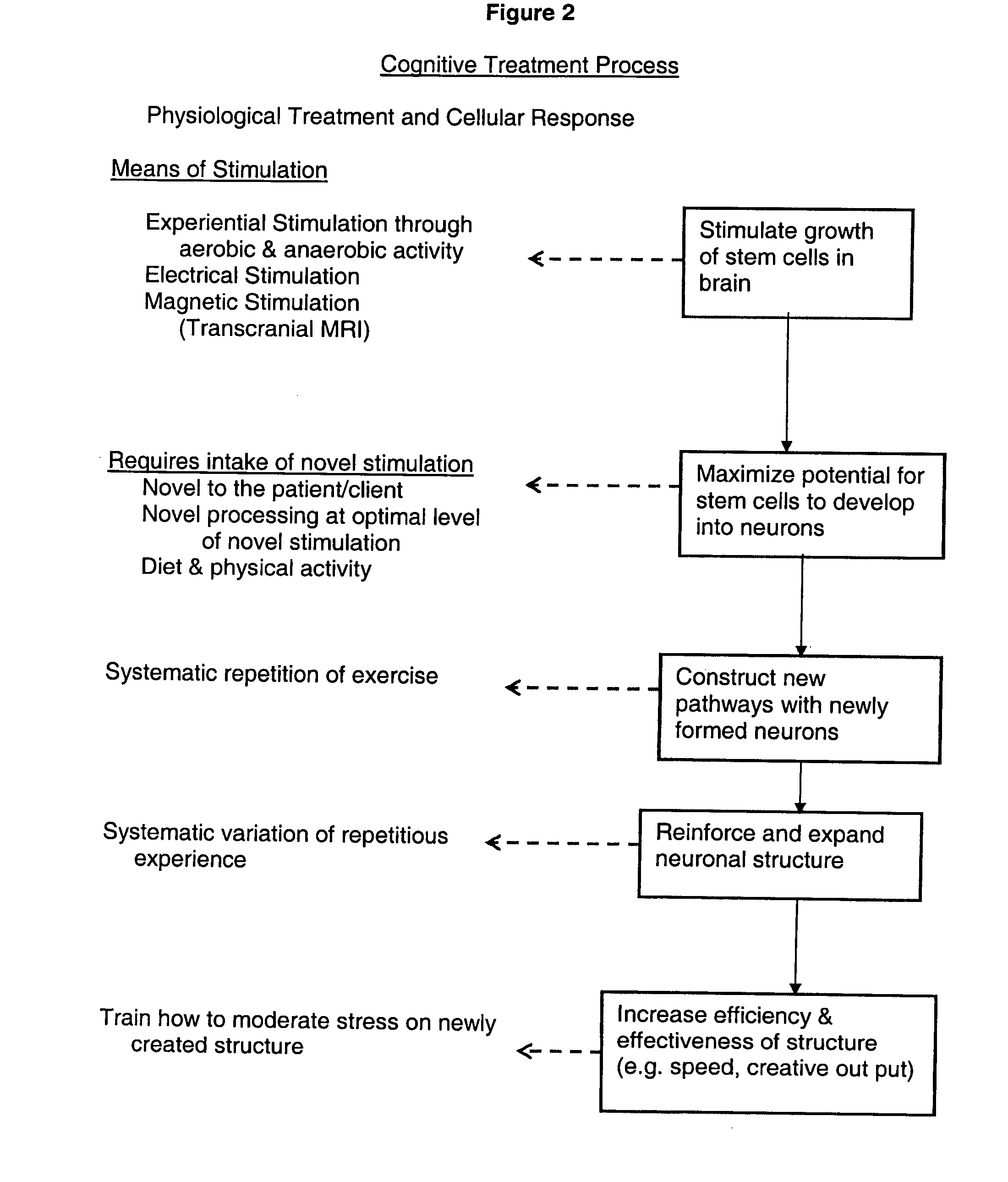

Methods for cognitive treatment

The present invention comprises methods and systems for enhancing learning skills and development of cognitive functions in humans. The methods comprise a defined set of steps or modules that include cognitive function enhancement activities, such as enhancing memory and pattern recognition, and that build the neuronal pathways necessary for overall cognitive improvement.

Owner:HARDWICKE SUSAN B

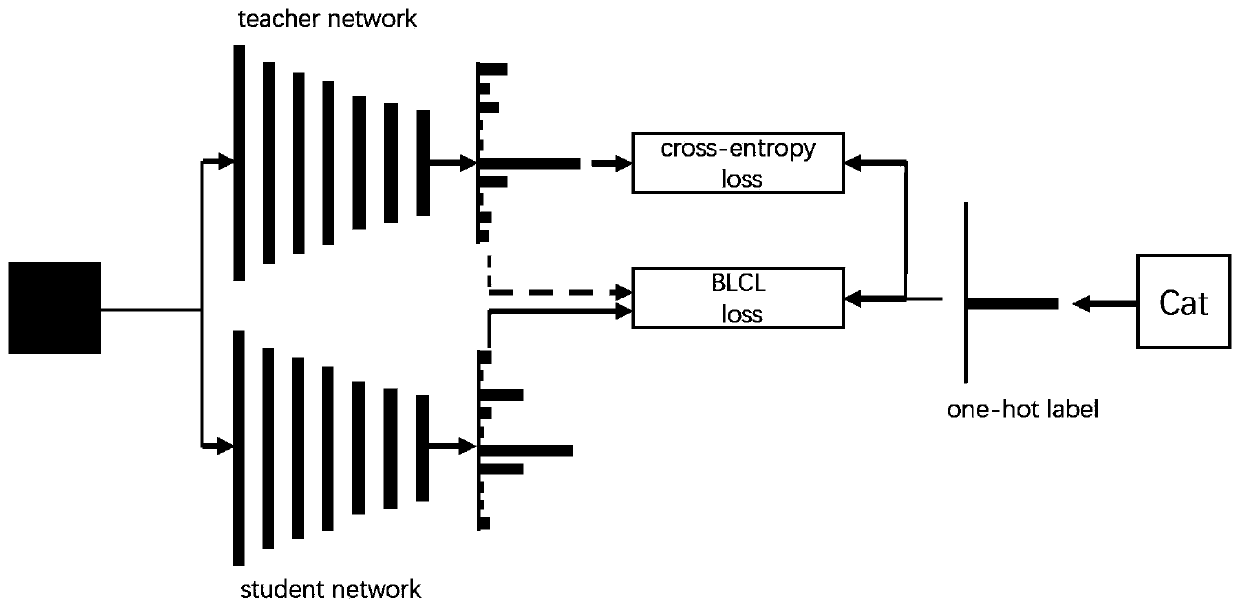

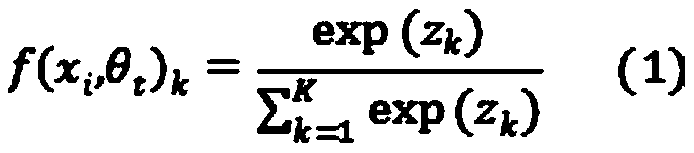

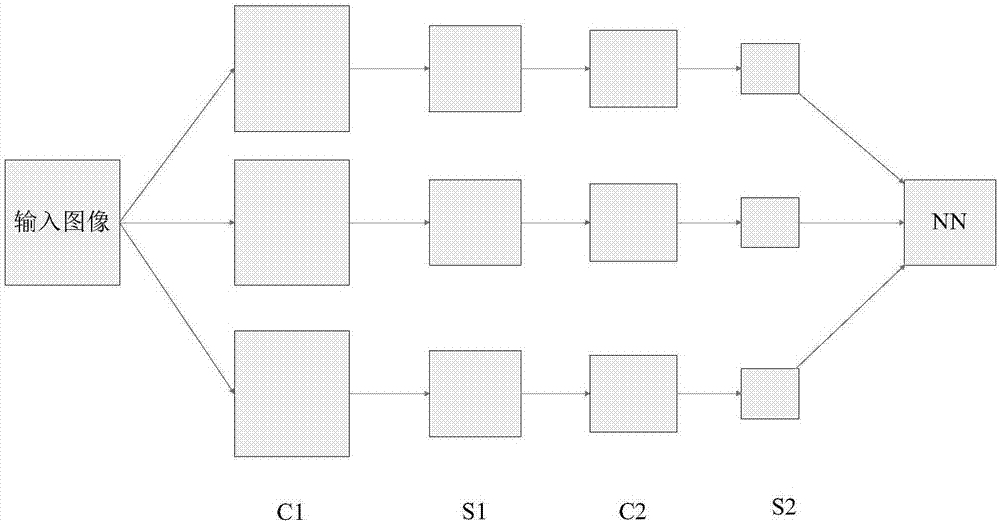

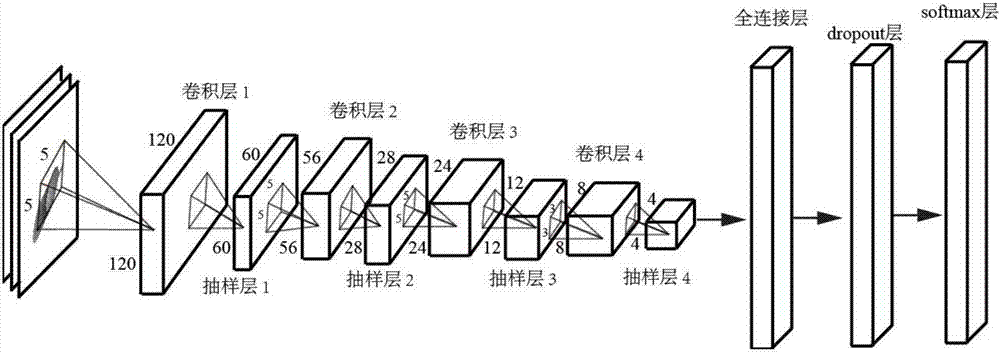

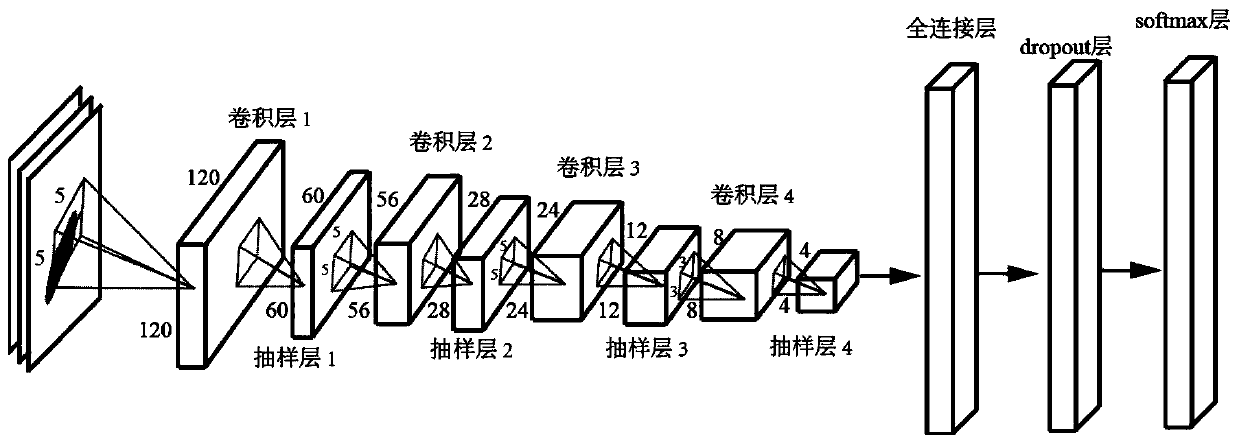

Image recognition method based on deep course learning

Active | CN111160474A | The training process is reasonable | Reduce training workload | Internal combustion piston engines | Character and pattern recognition | Network classification | Coursework

The invention discloses an image recognition method based on deep course learning, and belongs to the field of image recognition. The method comprises the following steps: constructing teacher and student networks based on a deep convolutional neural network; performing image classification training on the teacher network by using a training sample, and predicting the probability that the training sample belongs to each category; calculating the difference between the prediction of the teacher network and the labels to update the parameters; transmitting the prediction information to a student network; training the student network; guiding student network training according to the prediction information result of the teacher network; calculating a difference updating parameter between the student network prediction result and the label; completing student network classification training; and the trained student network realizes recognition and classification of the images. According to the method, the process of human learning from easiness to difficulty is simulated, the training process is reasonable, the workload is greatly reduced, the network parameters are updated quickly, the influence of the samples is balanced by gradient differences generated by different samples, the prediction precision is higher, and the performance is more reliable and stable.

Owner:HEFEI UNIV OF TECH +1
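
The teacher-guided student training in the CN111160474A abstract can be illustrated with a small sketch. The abstract does not state the loss function, so the version below swaps in a common knowledge-distillation formulation as an assumption: the student is penalized both for disagreeing with the label and for diverging from the teacher's softened predictions. The names `student_loss`, `alpha`, and `temperature` are hypothetical and do not come from the patent.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature softens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """Negative log-probability assigned to the true class index."""
    return -math.log(probs[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def student_loss(student_logits, teacher_logits, label,
                 alpha=0.5, temperature=2.0):
    """Assumed distillation loss: label term plus teacher-guidance term."""
    hard = cross_entropy(softmax(student_logits), label)
    soft = kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    return alpha * hard + (1.0 - alpha) * soft

# A student that agrees with the teacher and the label is penalized less
# than one that ranks the classes in the opposite order.
agree = student_loss([2.0, 1.0, 0.0], [2.0, 1.0, 0.0], label=0)
disagree = student_loss([0.0, 1.0, 2.0], [2.0, 1.0, 0.0], label=0)
print(agree < disagree)
```

In a real implementation the gradients of this loss would update the student network's parameters; the sketch only shows how the two sources of supervision could be combined.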

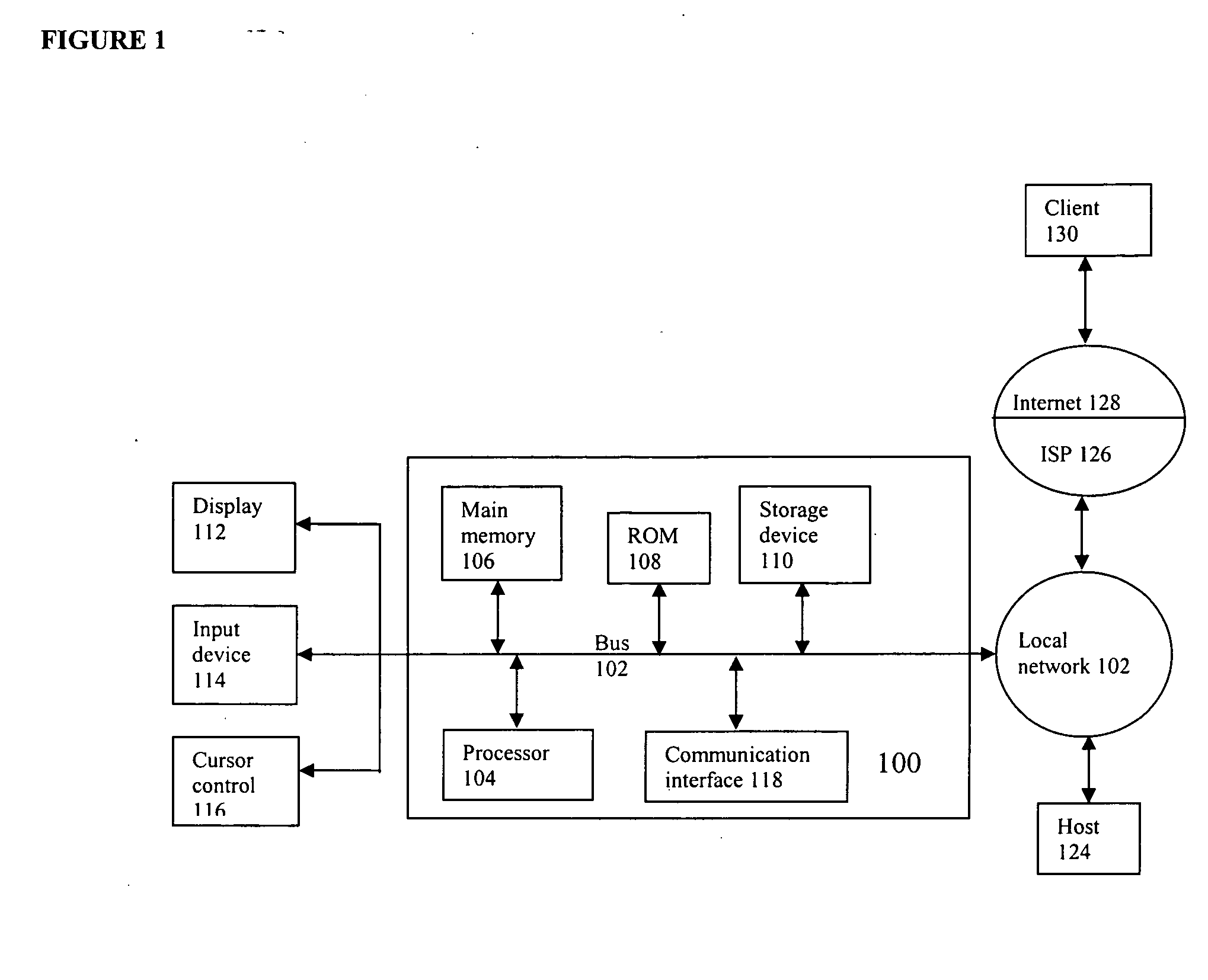

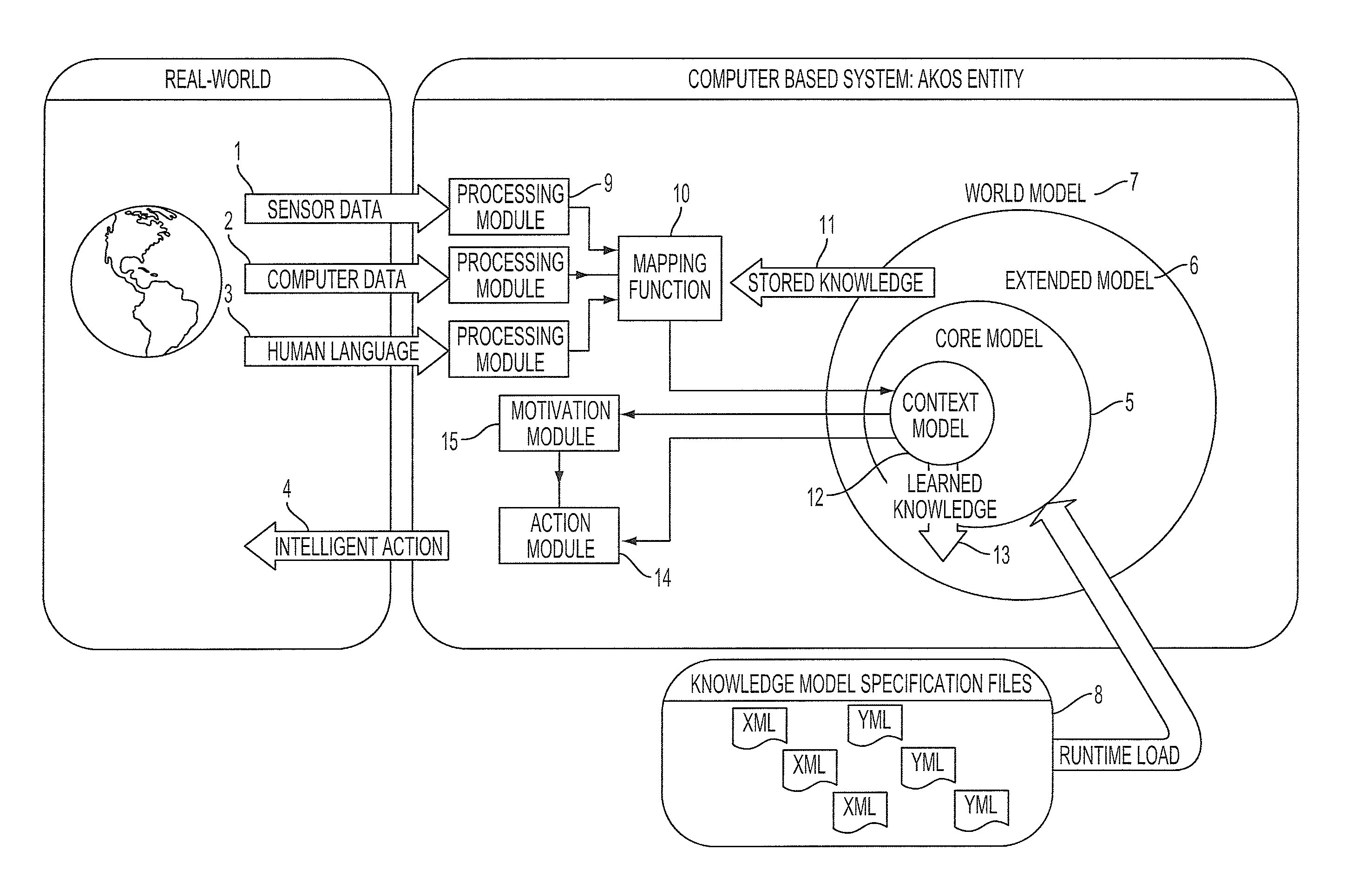

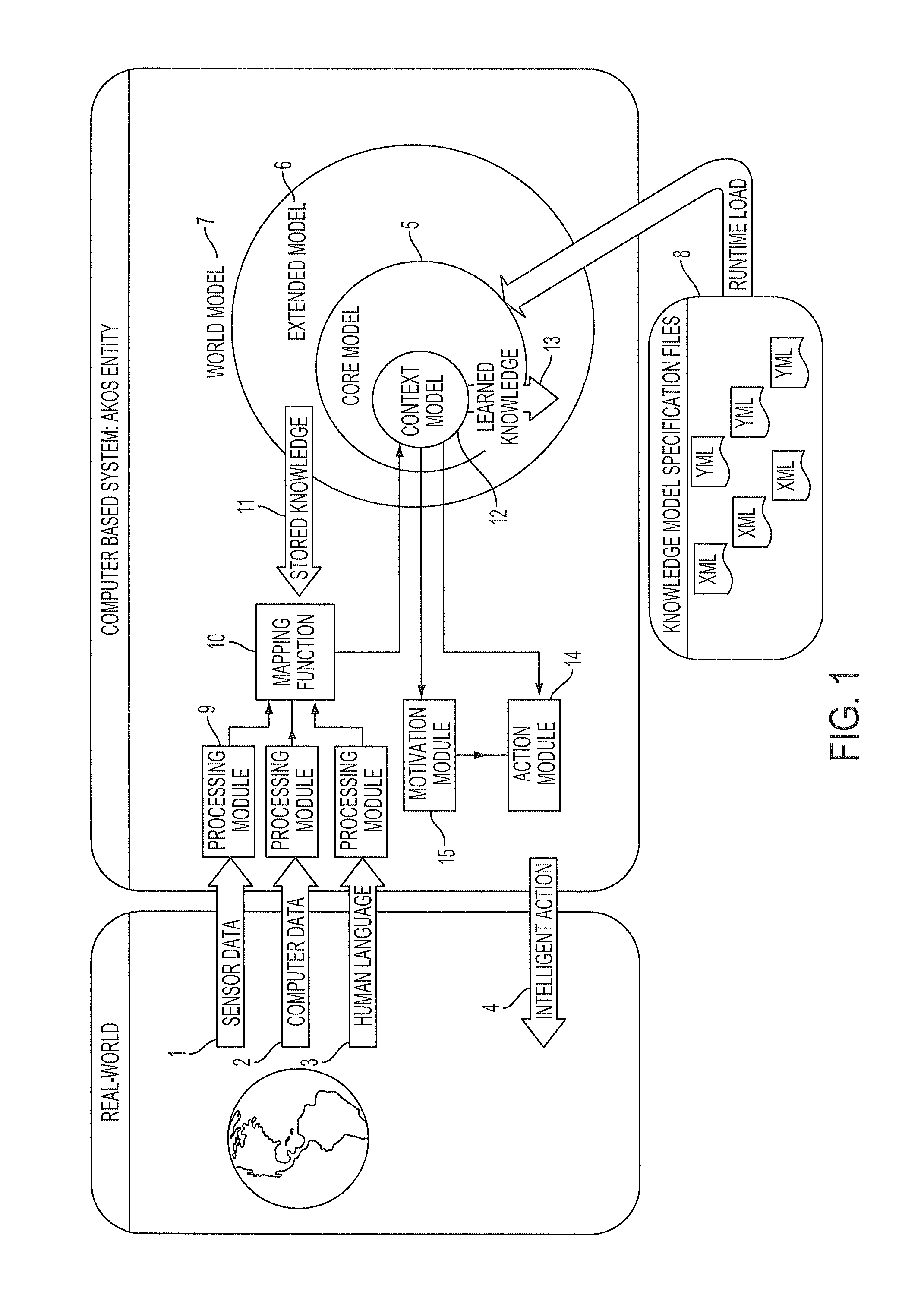

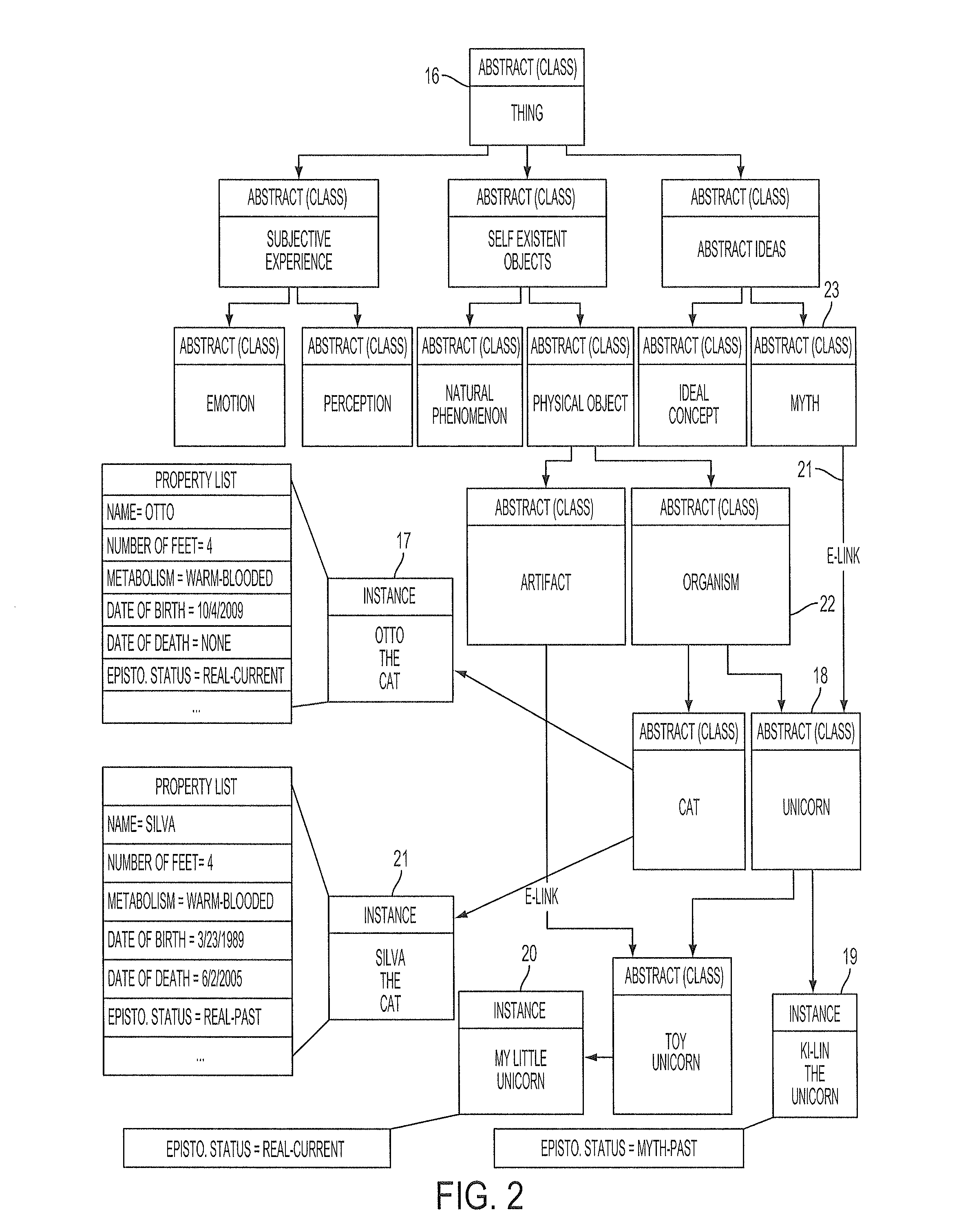

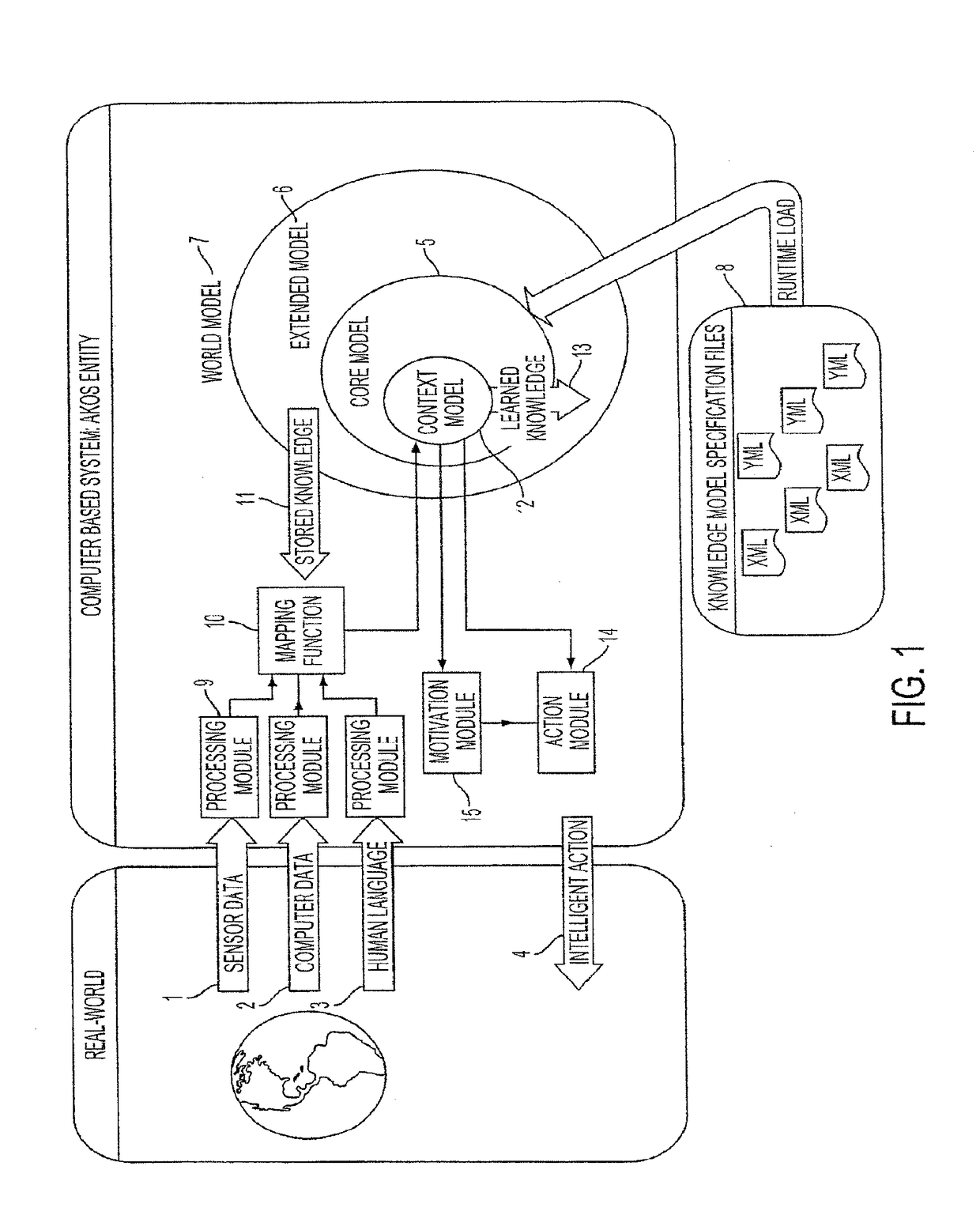

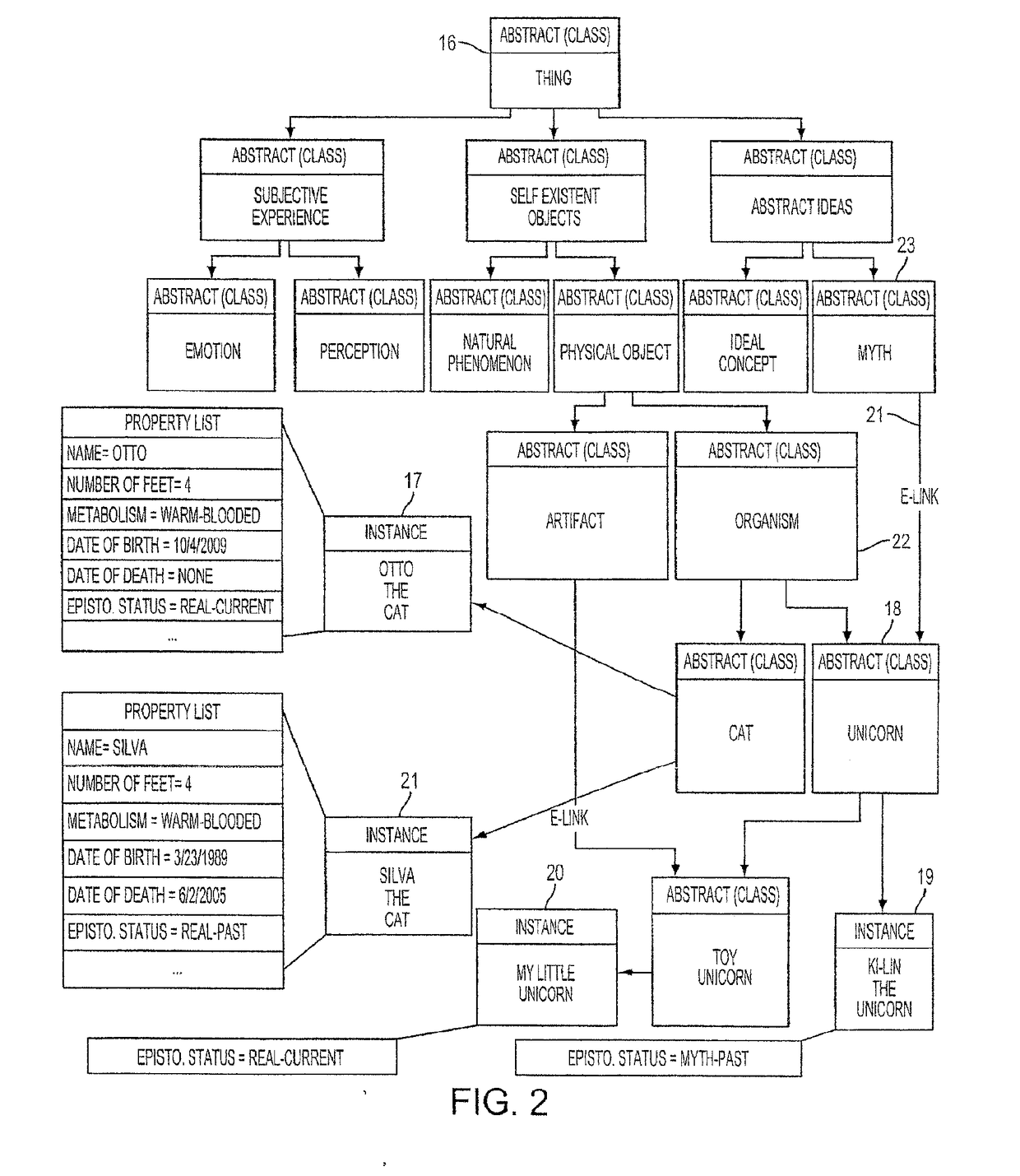

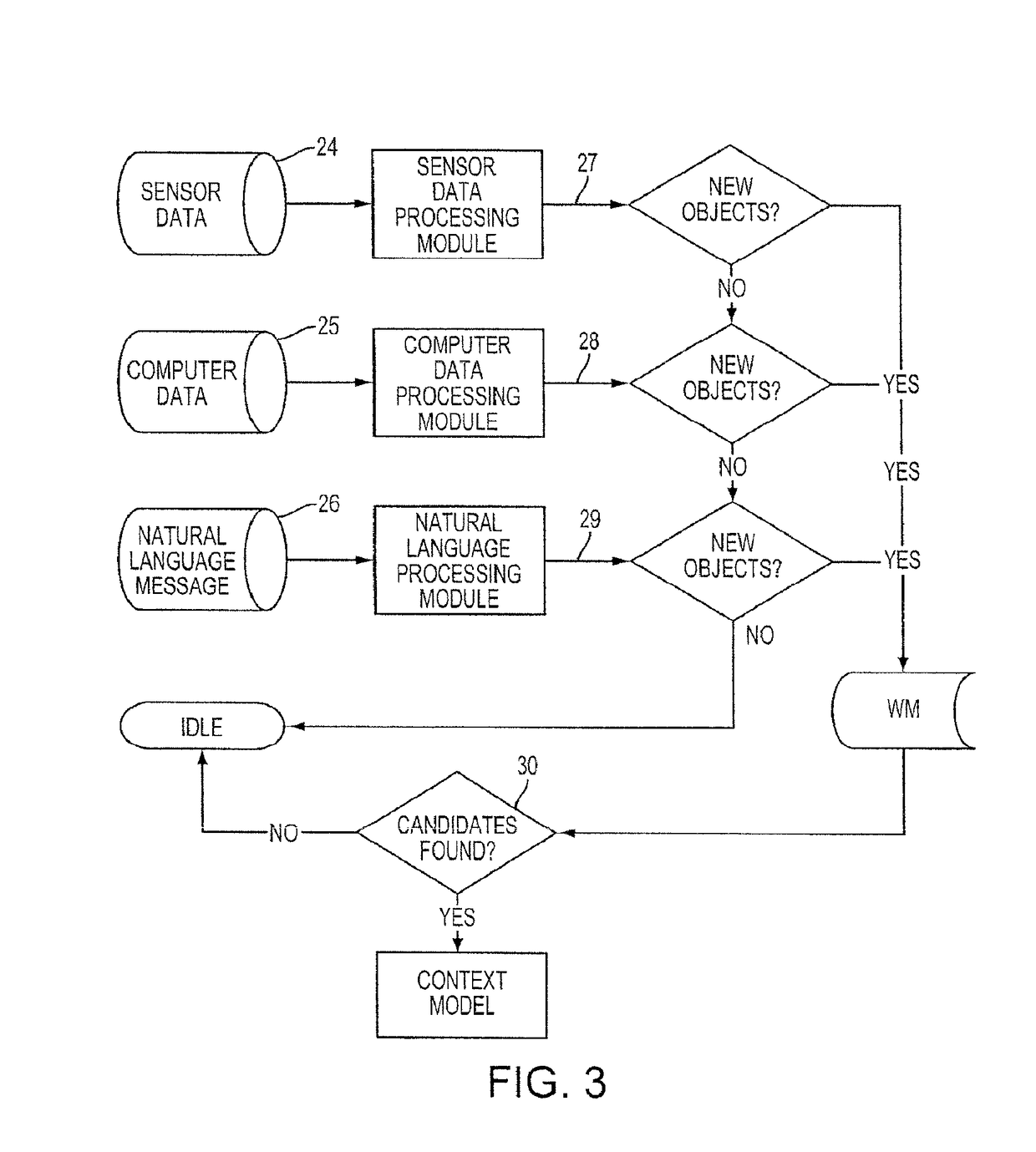

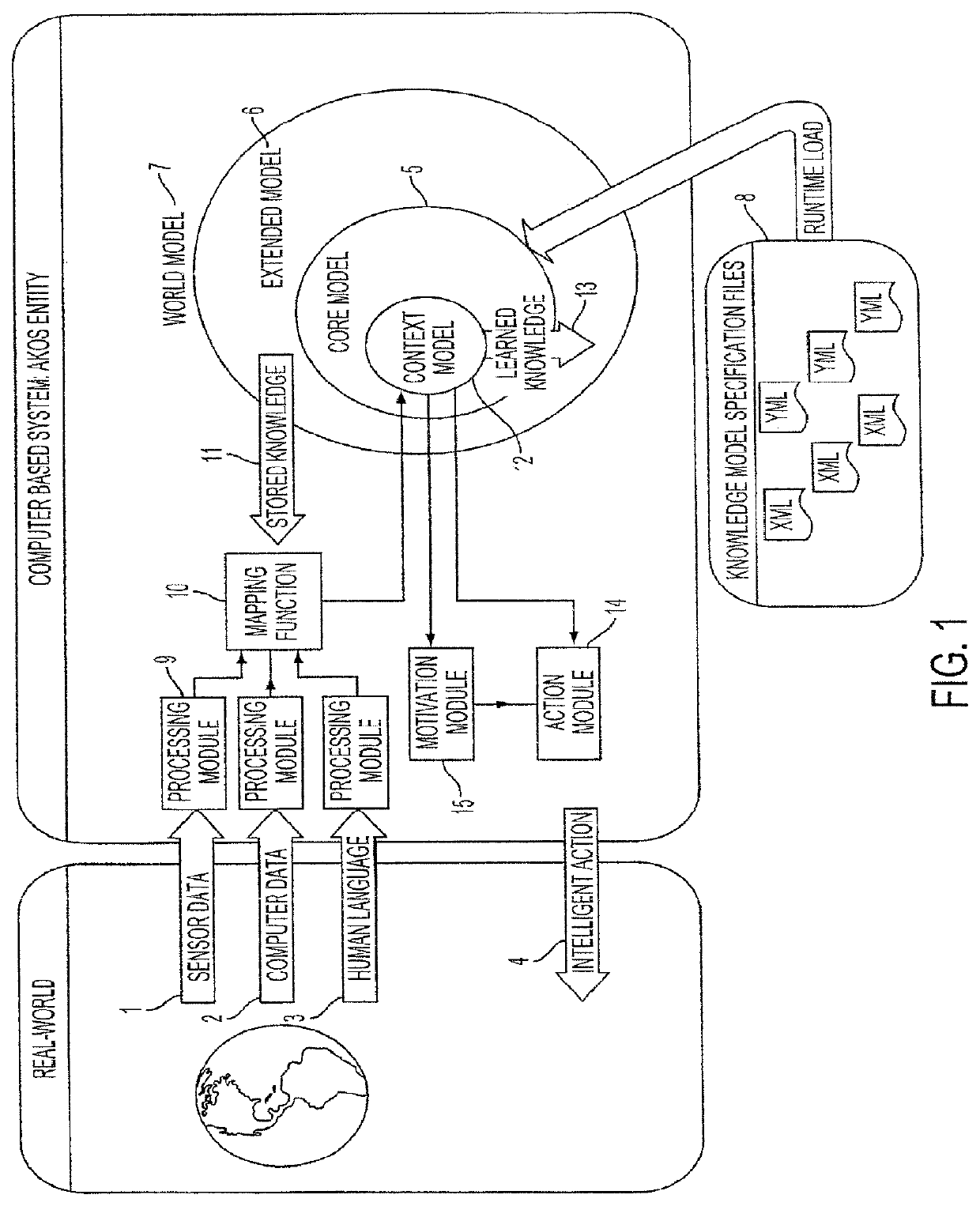

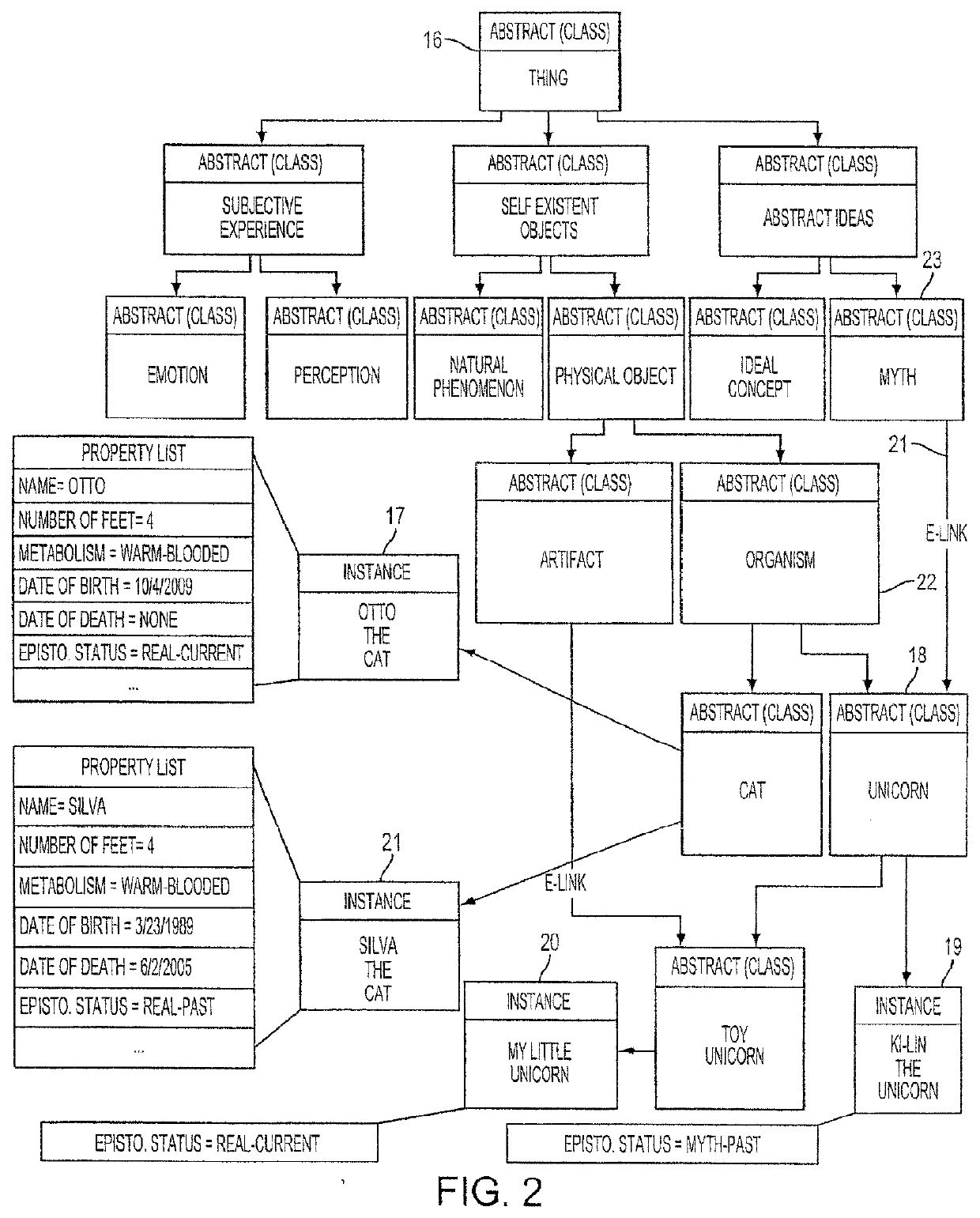

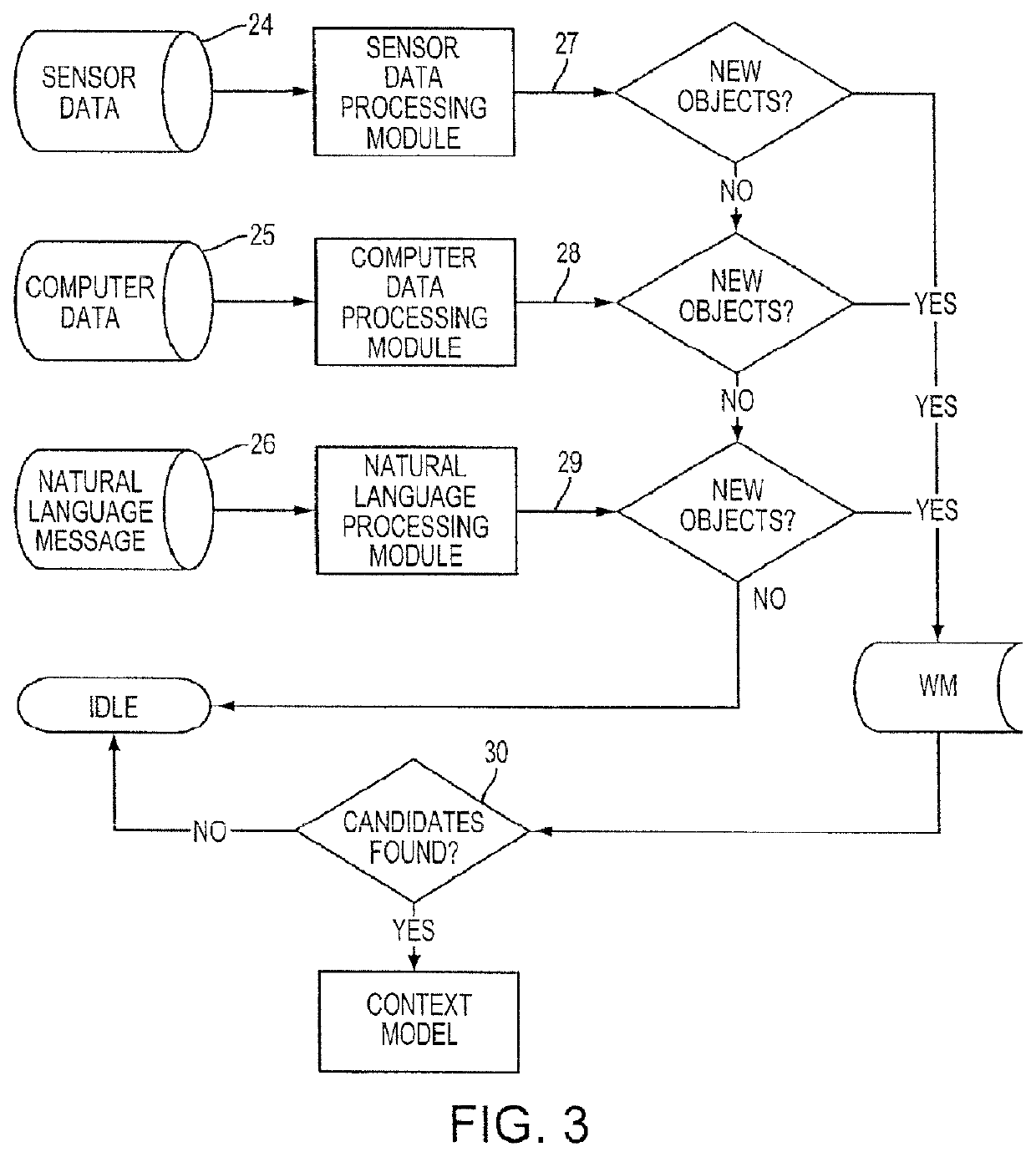

Method and system for machine comprehension

Active | US9275341B2 | Natural language translation | Knowledge representation | Control signal | Direct communication

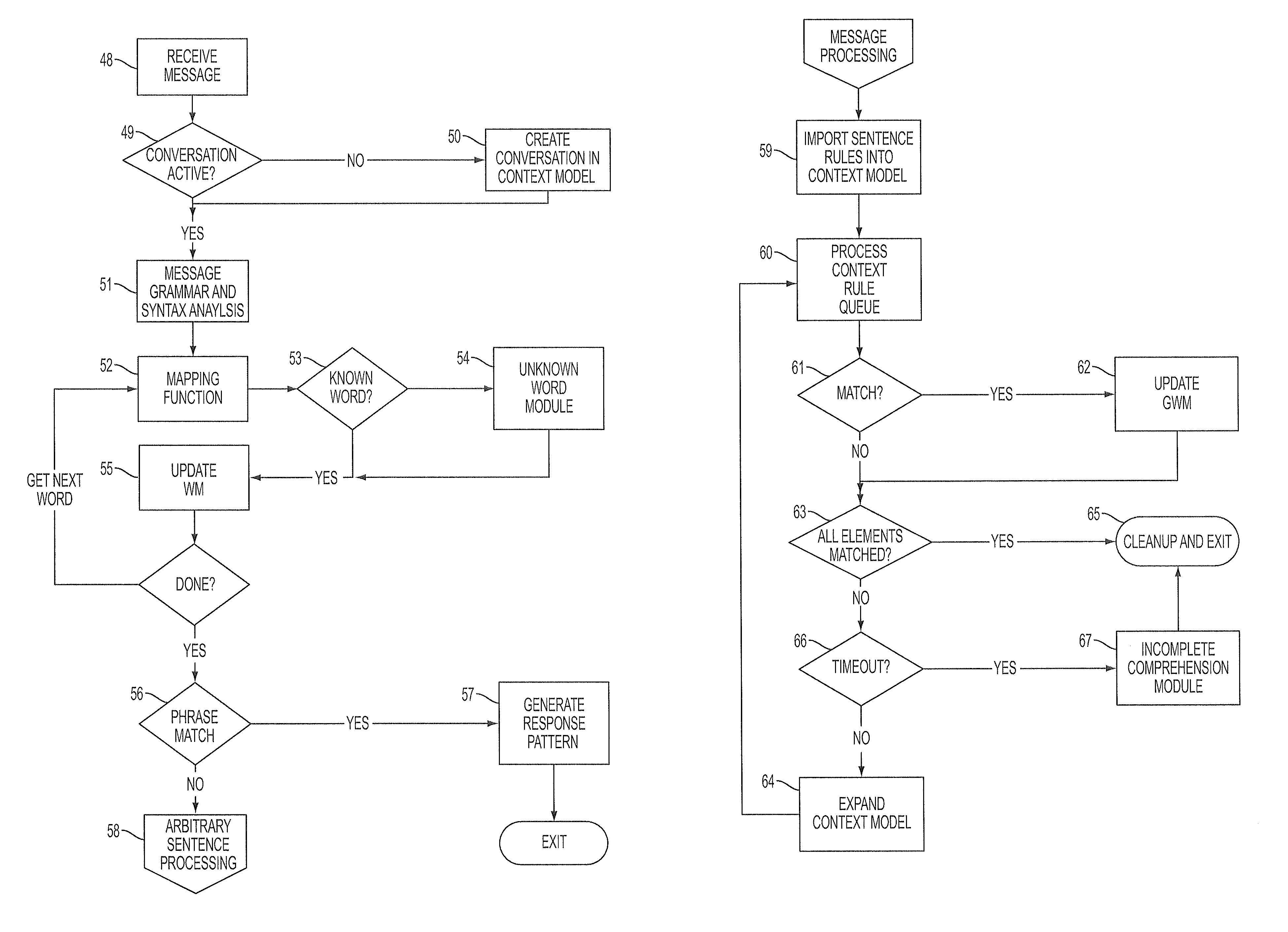

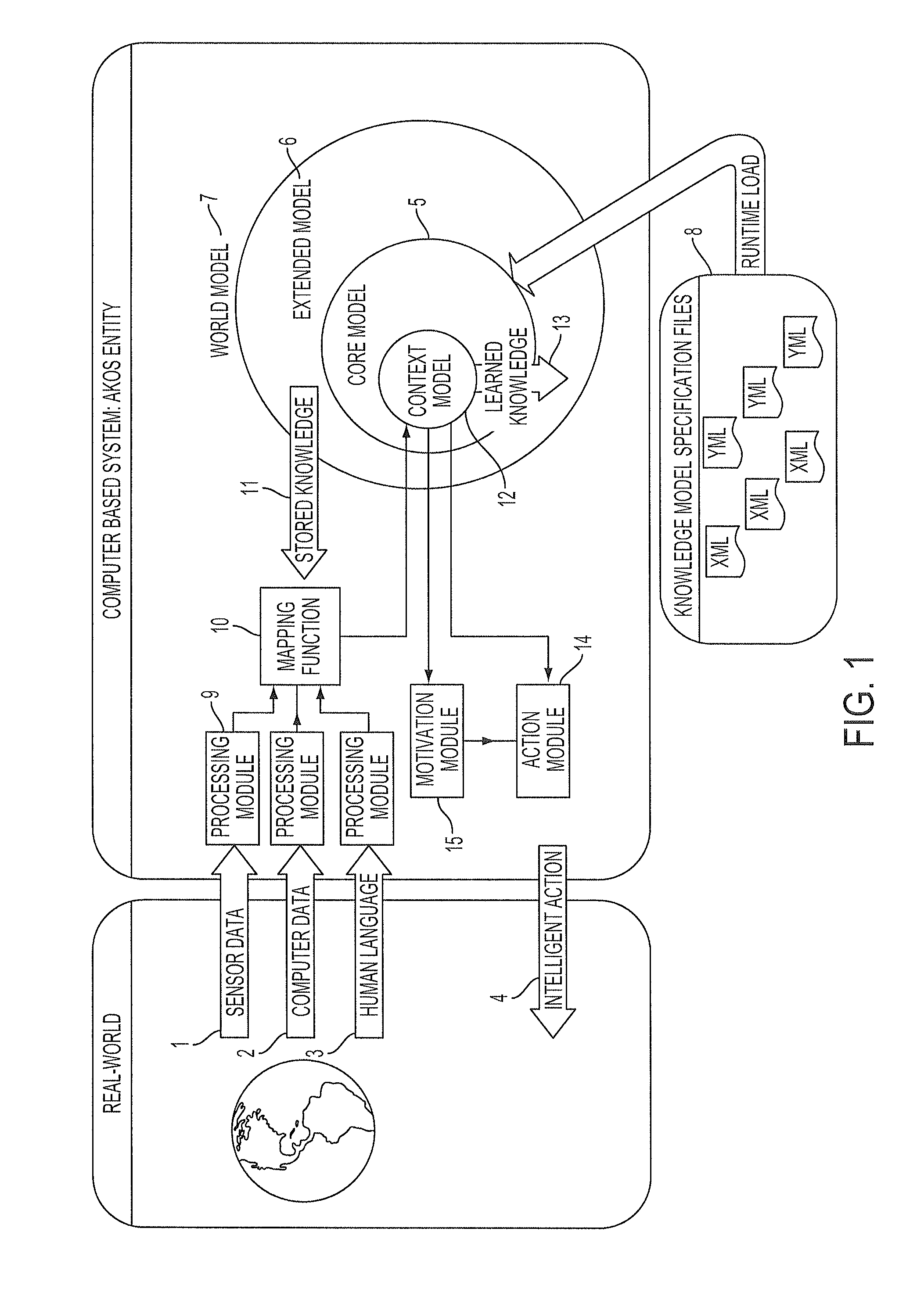

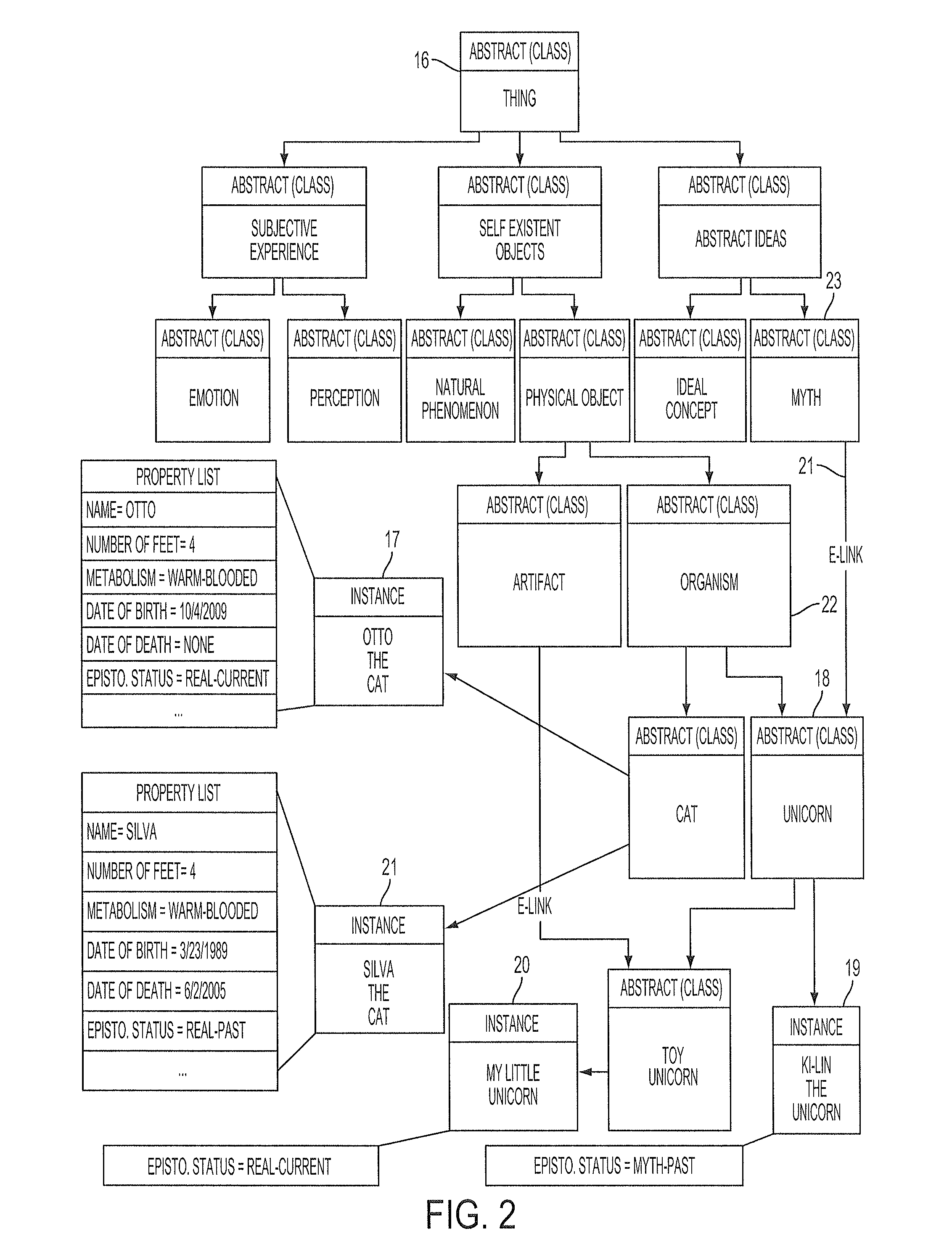

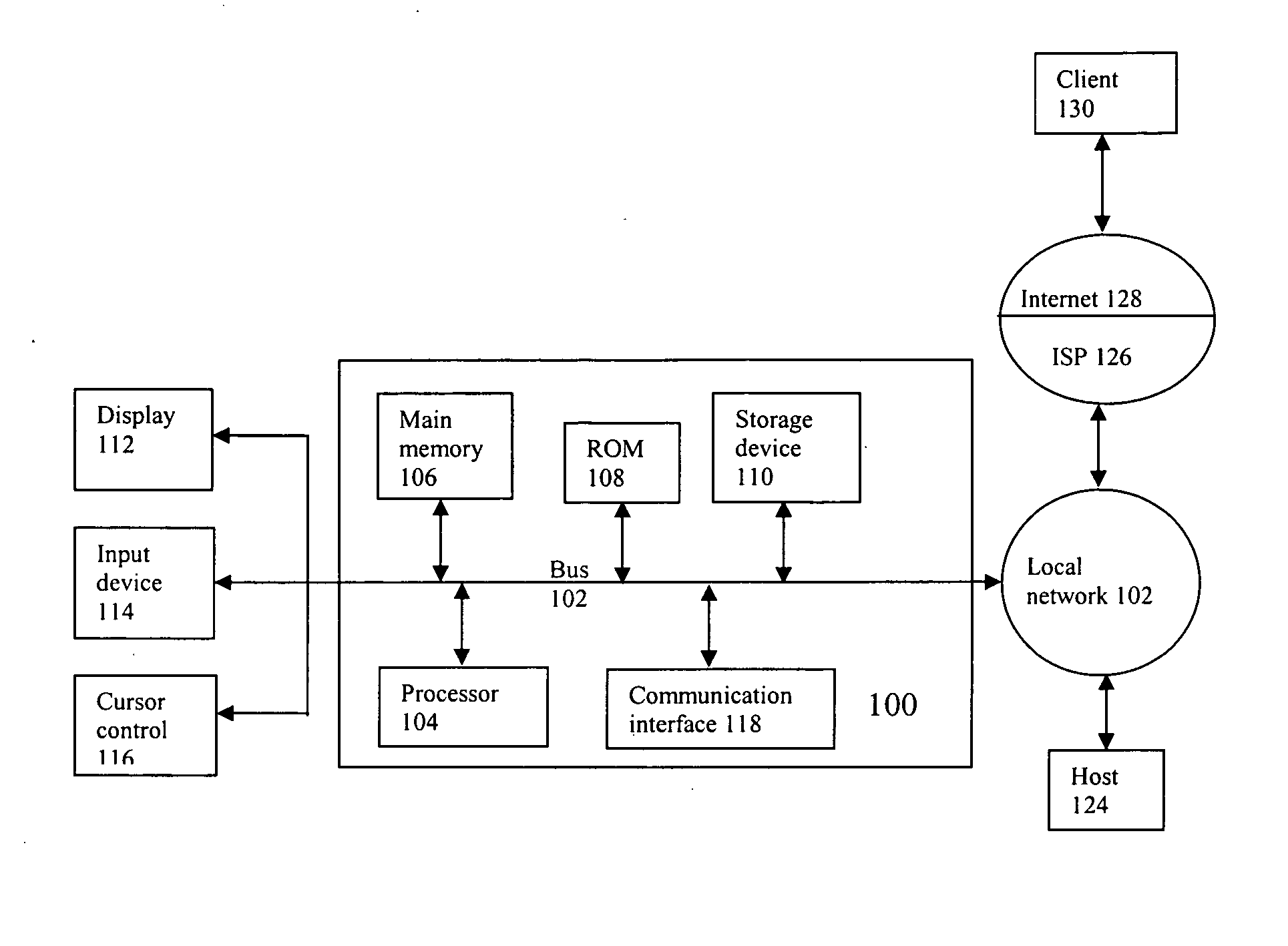

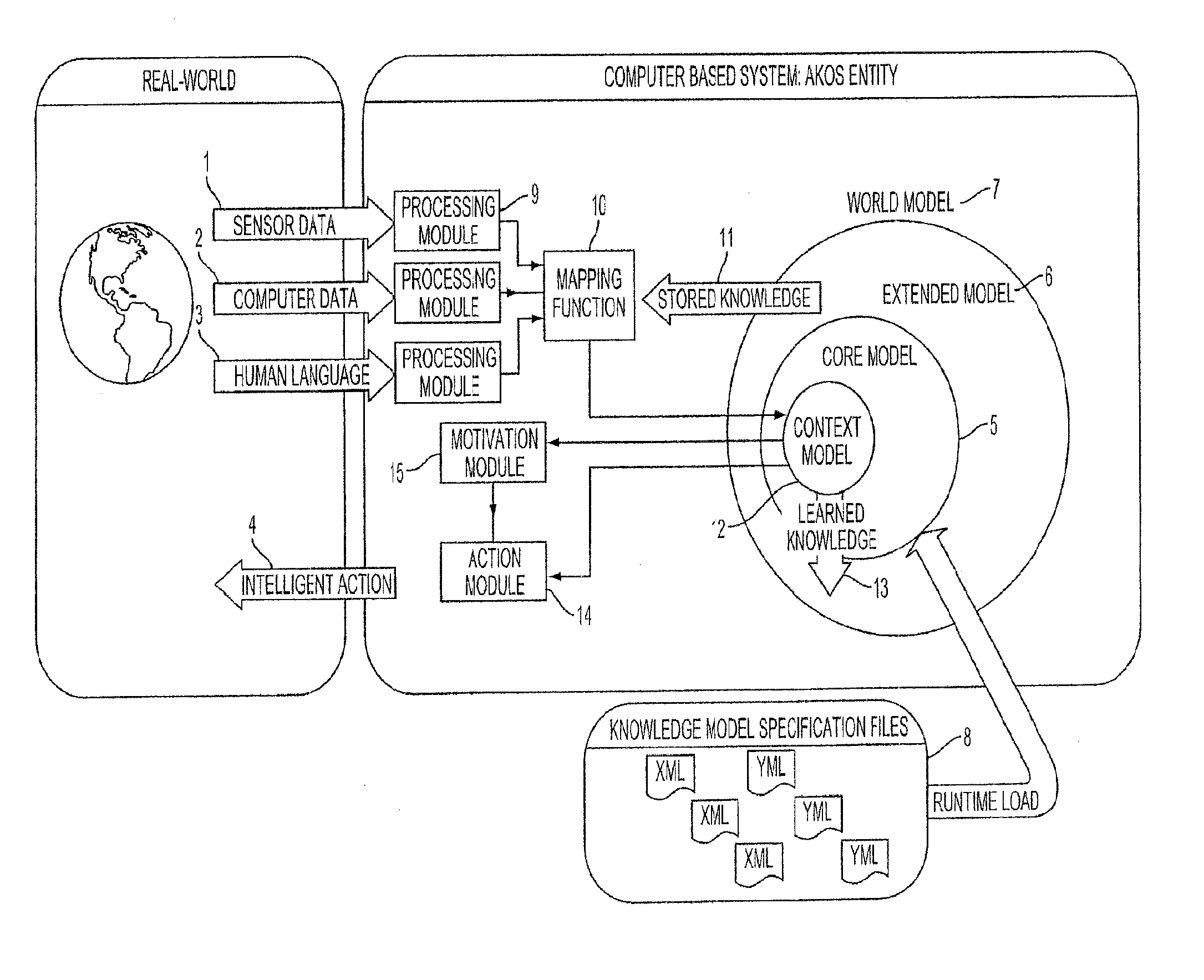

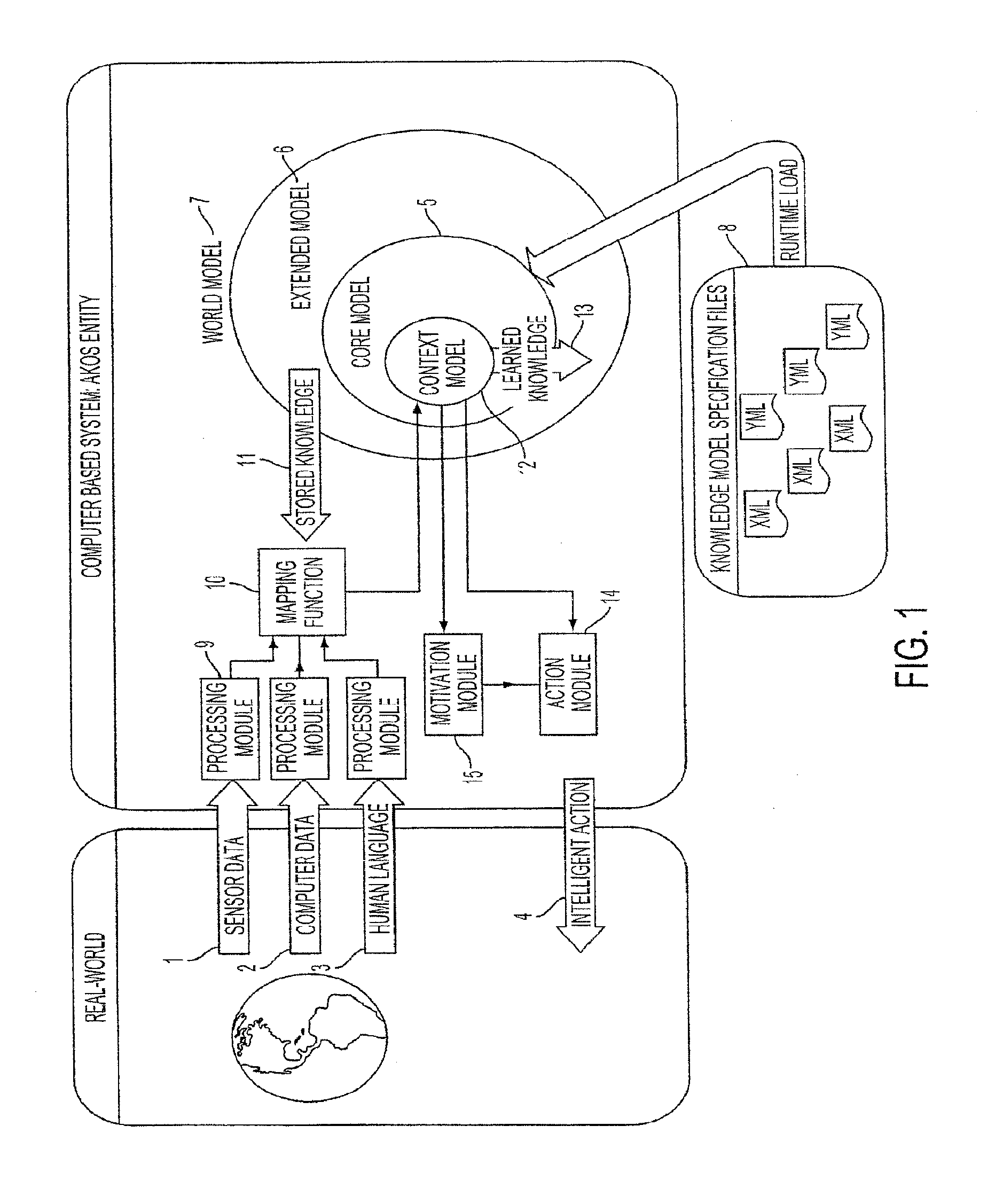

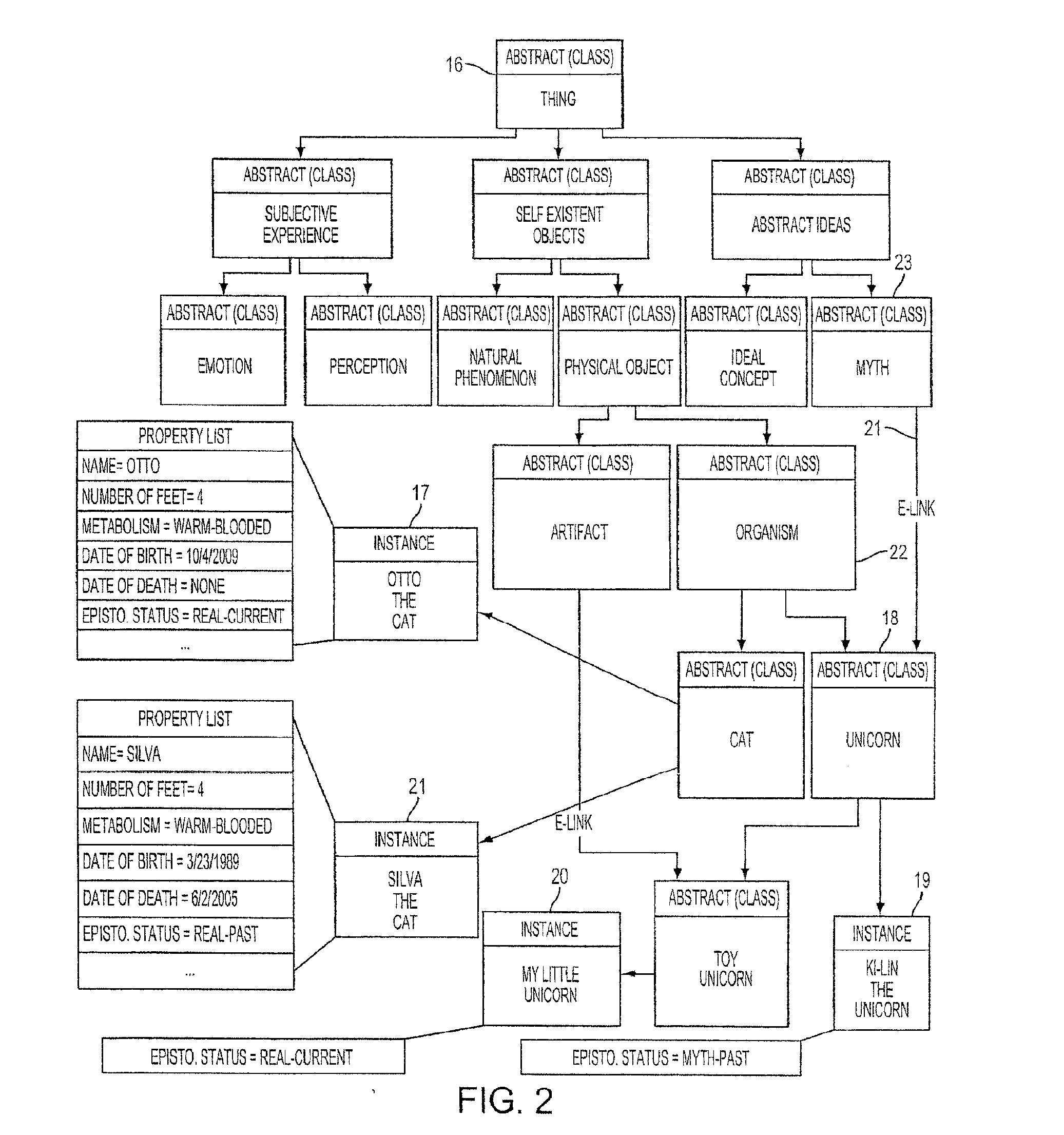

The AKOS (Artificial Knowledge Object System) of the invention is a software processing engine that relates incoming information to pre-existing stored knowledge in the form of a world model and, through a process analogous to human learning and comprehension, updates or extends the knowledge contained in the model, based on the content of the new information. Incoming information can come from sensors, computer-to-computer communication, or natural human language in the form of text messages. The software creates intelligent action as an output. Intelligent action is defined as an output to the real world accompanied by an alteration to the internal world model which accurately reflects an expected, specified outcome from the action. These actions may be control signals across any standard electronic computer interface or may be direct communications to a human in natural language.

Owner:NEW SAPIENCE

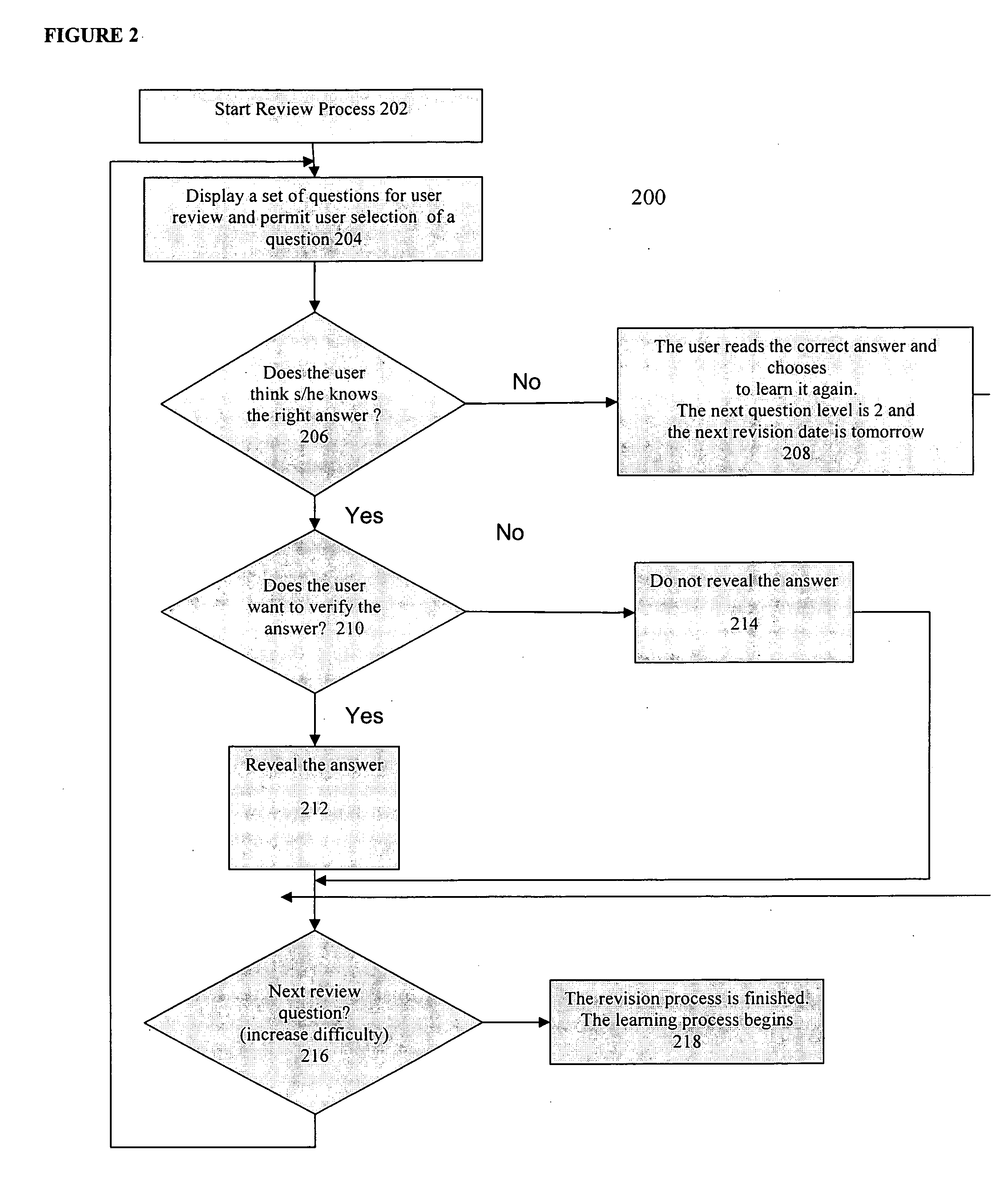

Method for establishing knowledge in long-term memory

A knowledge management system to optimize the human learning and memorization process. Memorization of desired information is achieved through knowledge transfer and rehearsal, whereby a learning system provides a series of associated elements to the user using a dynamical and reactive algorithm that responds to the user's abilities to prepare an efficient rehearsal process. The instant system combines a dynamic learning system with a database that comprises content provided by multiple users and which is overseen by individuals to ensure the validity of the content within the system. Information may be provided in a multimedia format which can include music, video, text and other auditory and visual stimuli as part of the rehearsal process.

Owner:POLARIS IND INC
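
The abstract above describes a rehearsal process that reacts to the user's abilities but does not name the algorithm. As an illustration only, the sketch below swaps in a Leitner-style box scheme, a well-known spaced-repetition technique: items recalled correctly move to a higher box and are rehearsed less often, and missed items return to the first box. The box count and the doubling intervals are assumptions, and the function names are hypothetical.

```python
# Hypothetical Leitner-style scheduler; box count and intervals are
# illustrative assumptions, not taken from the patent.
MAX_BOX = 5

def next_box(box, recalled):
    """Promote an item on correct recall, otherwise send it back to box 1."""
    return min(box + 1, MAX_BOX) if recalled else 1

def interval_days(box):
    """Days until the next rehearsal; doubles with each box (1, 2, 4, 8, 16)."""
    return 2 ** (box - 1)

def rehearse(boxes, answers):
    """Apply one rehearsal round.

    boxes maps item -> current box, answers maps item -> True/False.
    Items that were not rehearsed in this round keep their box.
    """
    return {
        item: next_box(box, answers[item]) if item in answers else box
        for item, box in boxes.items()
    }

state = {"capital of France": 1, "boiling point of water": 3}
state = rehearse(state, {"capital of France": True,
                         "boiling point of water": False})
for item, box in state.items():
    print(item, "-> box", box, "| next rehearsal in", interval_days(box), "day(s)")
```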

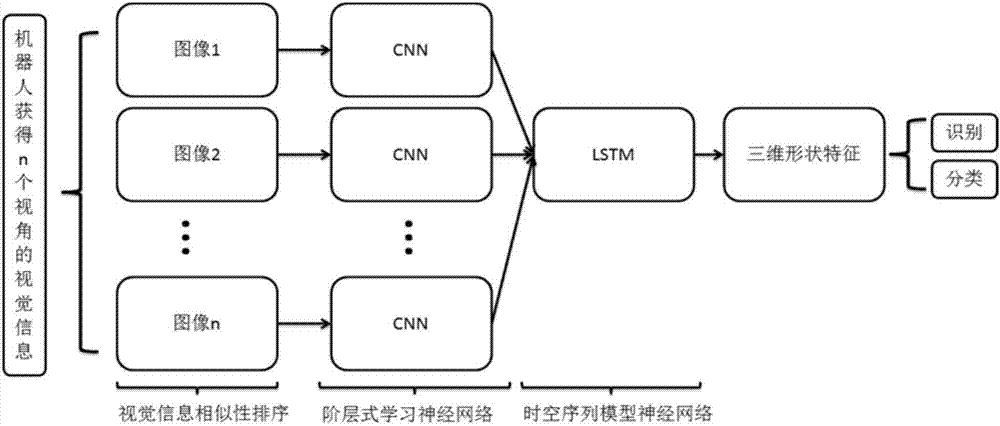

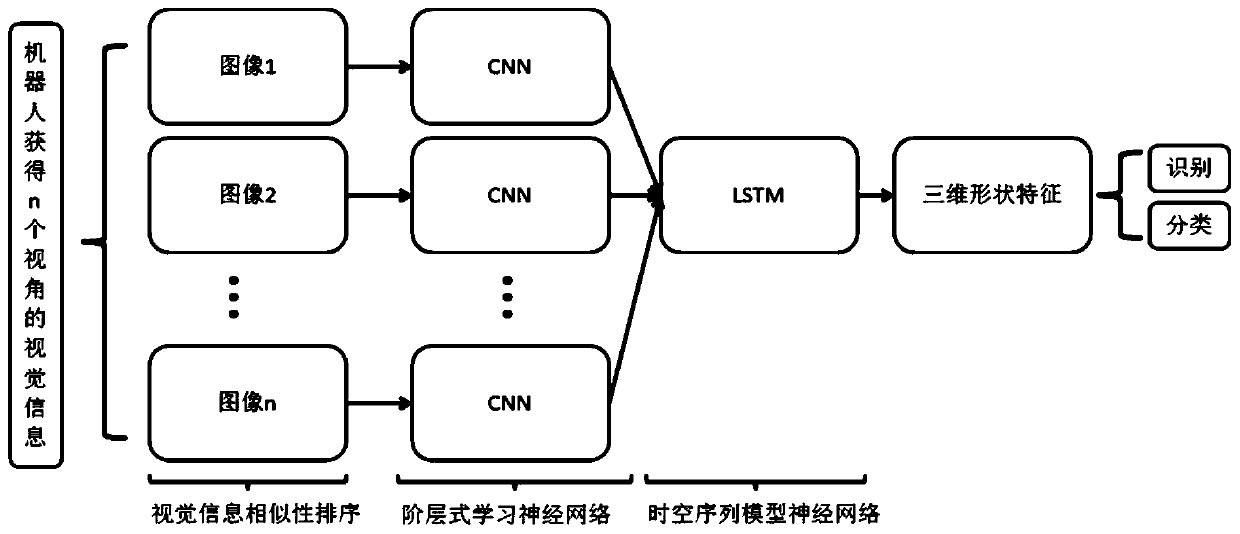

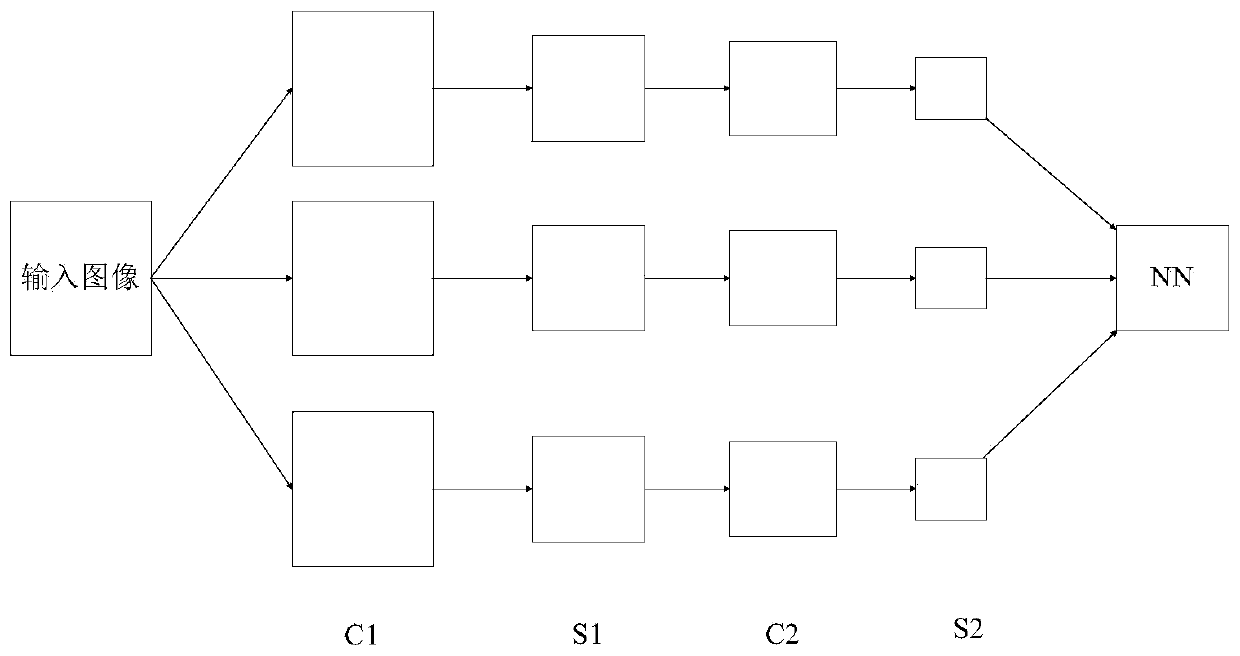

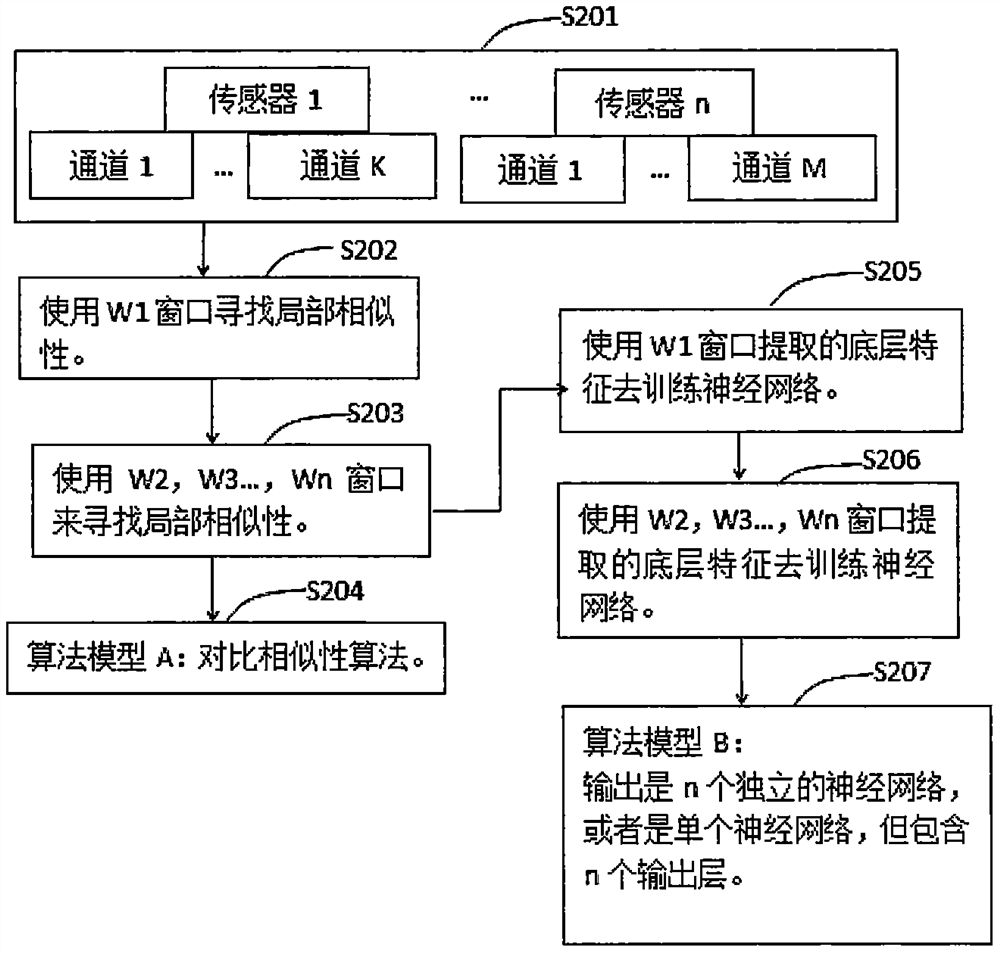

Robot 3D shape recognition method based on multi-view information fusion

Active | CN106951923A | Improve recognition accuracy | Efficient sorting | Character and pattern recognition | Neural learning methods | 3d shapes | Abstract space

The invention provides a robot 3D shape recognition method based on multi-view information fusion. The method combines the advantages of a full-view method and a single-view method, overcomes the disadvantages of the two methods, and comprises steps of: firstly, performing image similarity ordering by using an image similarity detection technique by means of the multi-view information of a 3D shape acquired by a robot in motion, so as to obtain a hierarchical depth feature by a convolutional neural network; finally, learning the visual feature with a certain time and spatial sequence by using a long short term memory model to obtain a highly abstract space-time feature. The method not only simulates the hierarchical learning mechanism of human beings, but also adds the space-time sequence learning mechanism for simulating the human learning, and realizes the high-precision classification and recognition of 3D shape by multi-view information fusion.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Method and system for machine comprehension

Active | US20130226847A1 | Easy to learn | Accurate location | Natural language translation | Digital computer details | Control signal | Direct communication

The AKOS (Artificial Knowledge Object System) of the invention is a software processing engine that relates incoming information to pre-existing stored knowledge in the form of a world model and, through a process analogous to human learning and comprehension, updates or extends the knowledge contained in the model, based on the content of the new information. Incoming information can come from sensors, computer-to-computer communication, or natural human language in the form of text messages. The software creates intelligent action as an output. Intelligent action is defined as an output to the real world accompanied by an alteration to the internal world model which accurately reflects an expected, specified outcome from the action. These actions may be control signals across any standard electronic computer interface or may be direct communications to a human in natural language.

Owner:NEW SAPIENCE

Method and system for machine comprehension

The AKOS (Artificial Knowledge Object System) of the invention is a software processing engine that relates incoming information to pre-existing stored knowledge in the form of a world model and, through a process analogous to human learning and comprehension, updates or extends the knowledge contained in the model, based on the content of the new information. Incoming information can come from sensors, computer-to-computer communication, or natural human language in the form of text messages. The software creates intelligent action as an output. Intelligent action is defined as an output to the real world accompanied by an alteration to the internal world model which accurately reflects an expected, specified outcome from the action. These actions may be control signals across any standard electronic computer interface or may be direct communications to a human in natural language.

Owner:NEW SAPIENCE

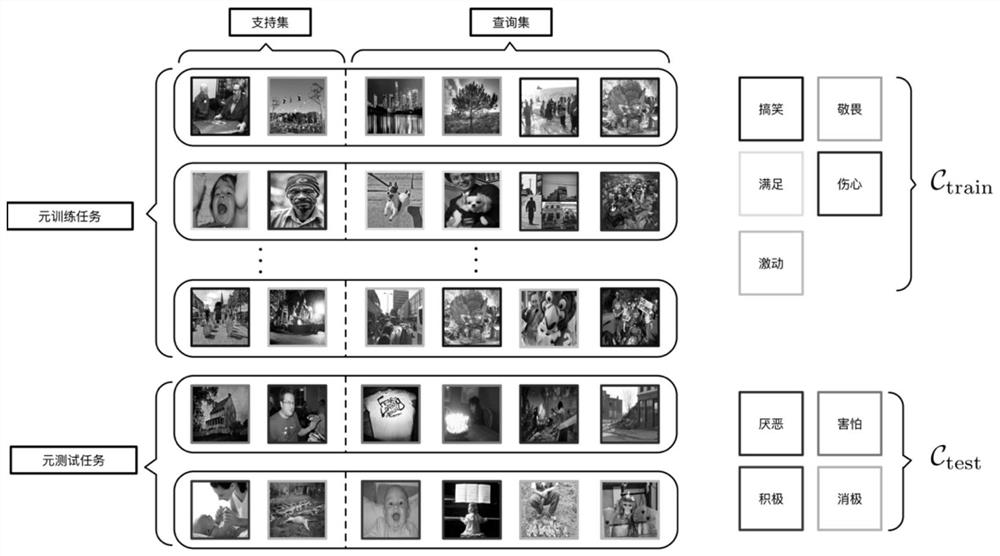

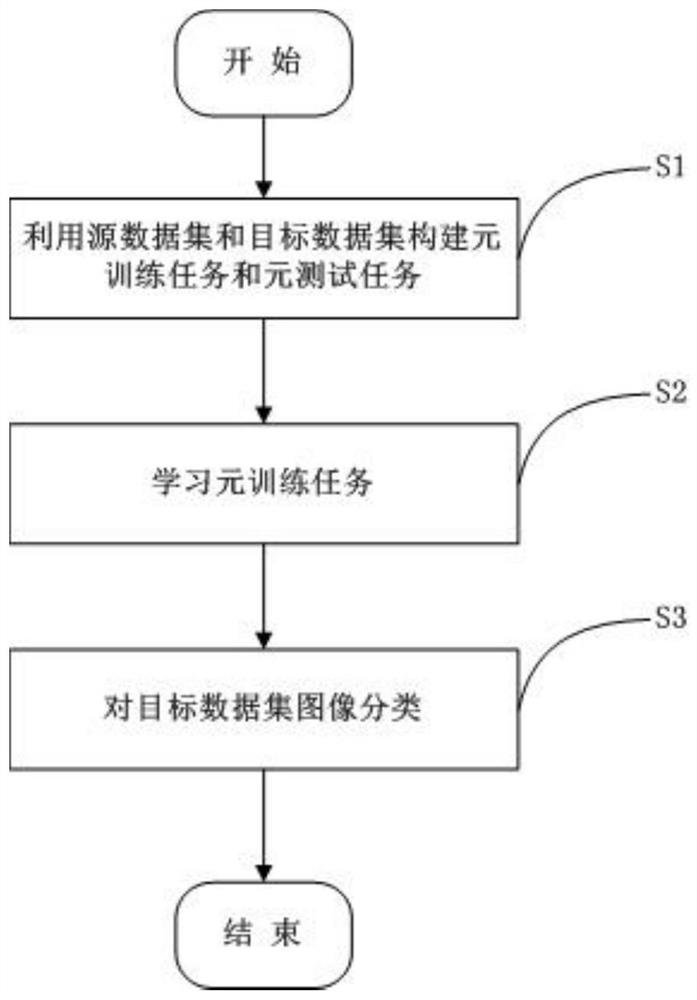

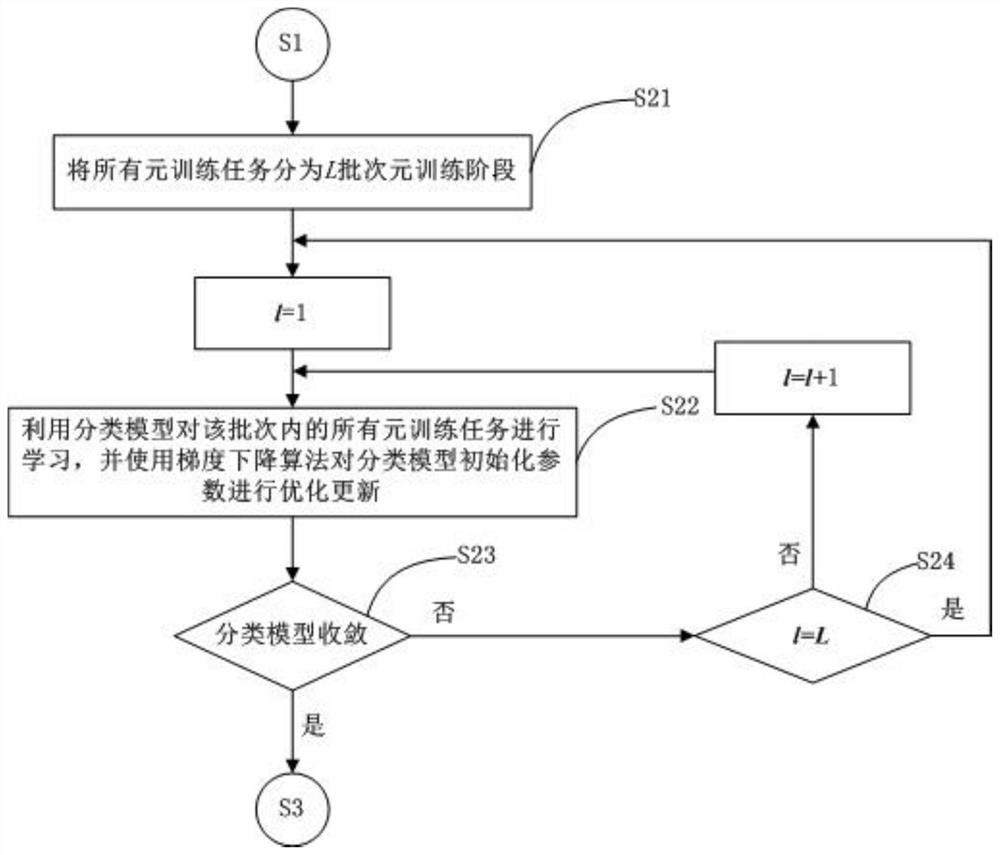

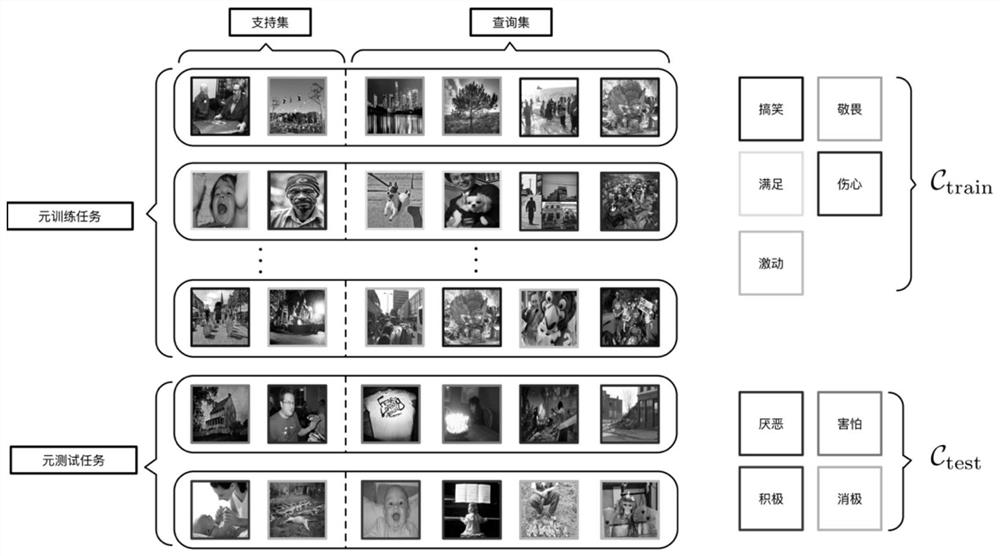

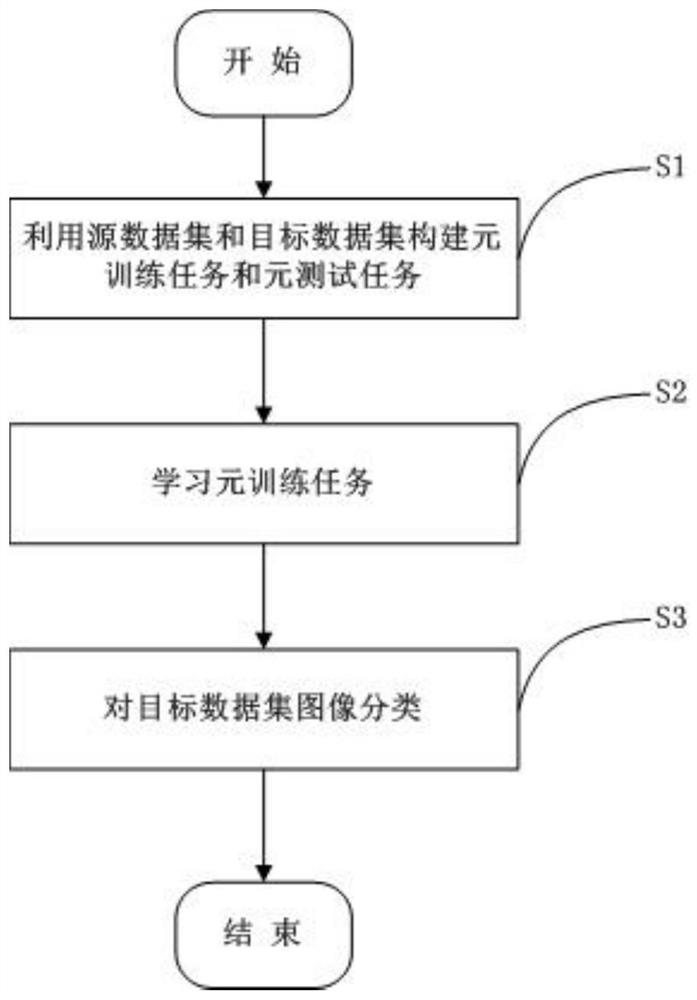

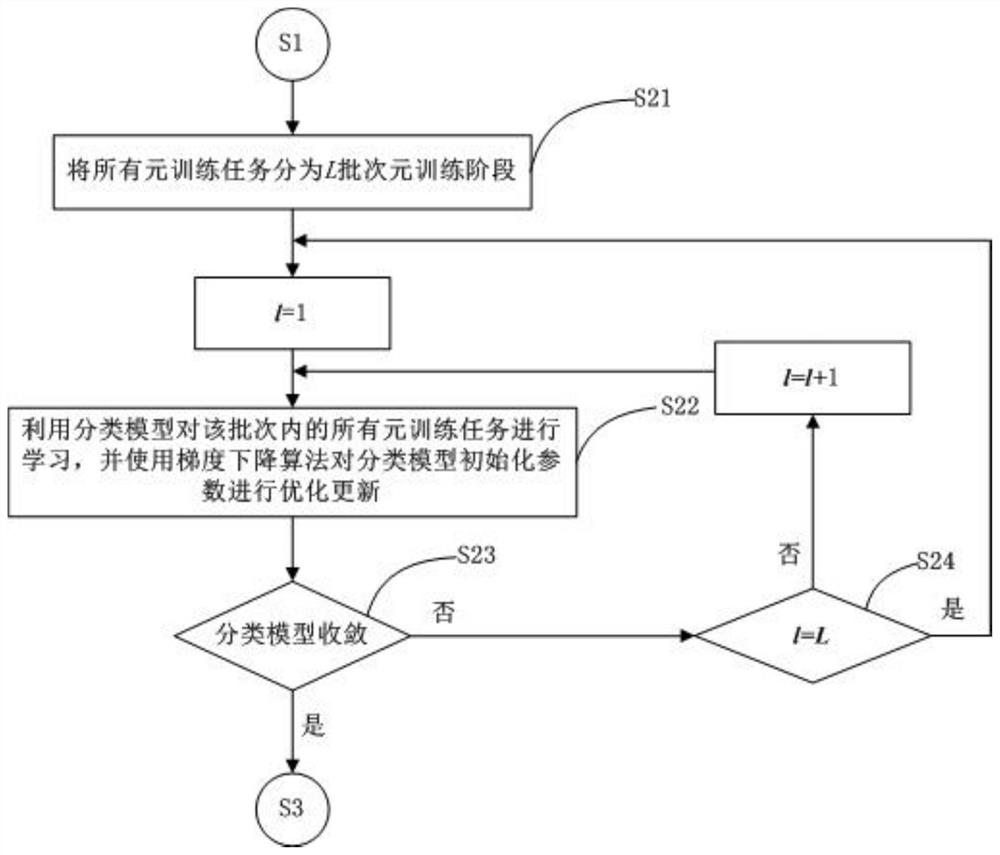

Few-sample image sentiment classification method based on meta-learning

Active | CN112613556A | Good initialization parameters | Improve classification effect | Character and pattern recognition | Neural architectures | Sample graph | Data set

The invention discloses a few-sample image sentiment classification method based on meta-learning, and the method comprises the steps: firstly constructing a plurality of meta-learning tasks similar to few-sample images with sentiment label information in a target data set on a source data set, obtaining a good classification model initialization parameter through the learning of the meta-learning tasks, so as to enable the classification model to obtain a better classification effect when facing emotion images in a few-sample target data set. According to the method, the need for annotation data can be greatly relieved, the mode based on meta-learning better conforms to the human learning mode (new human learning tasks are learned based on learned tasks), and the neural network model can be more intelligent.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

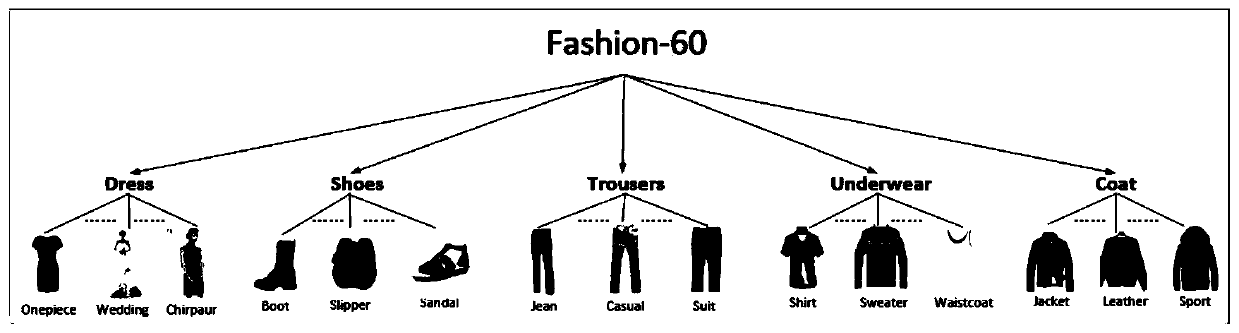

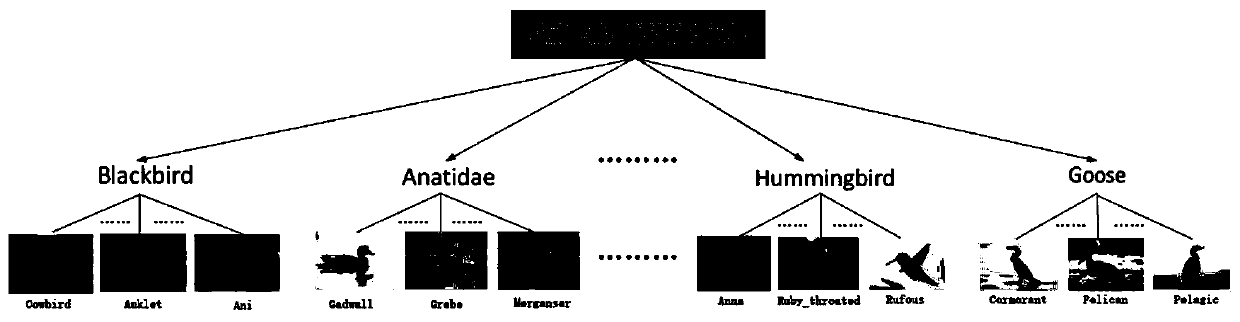

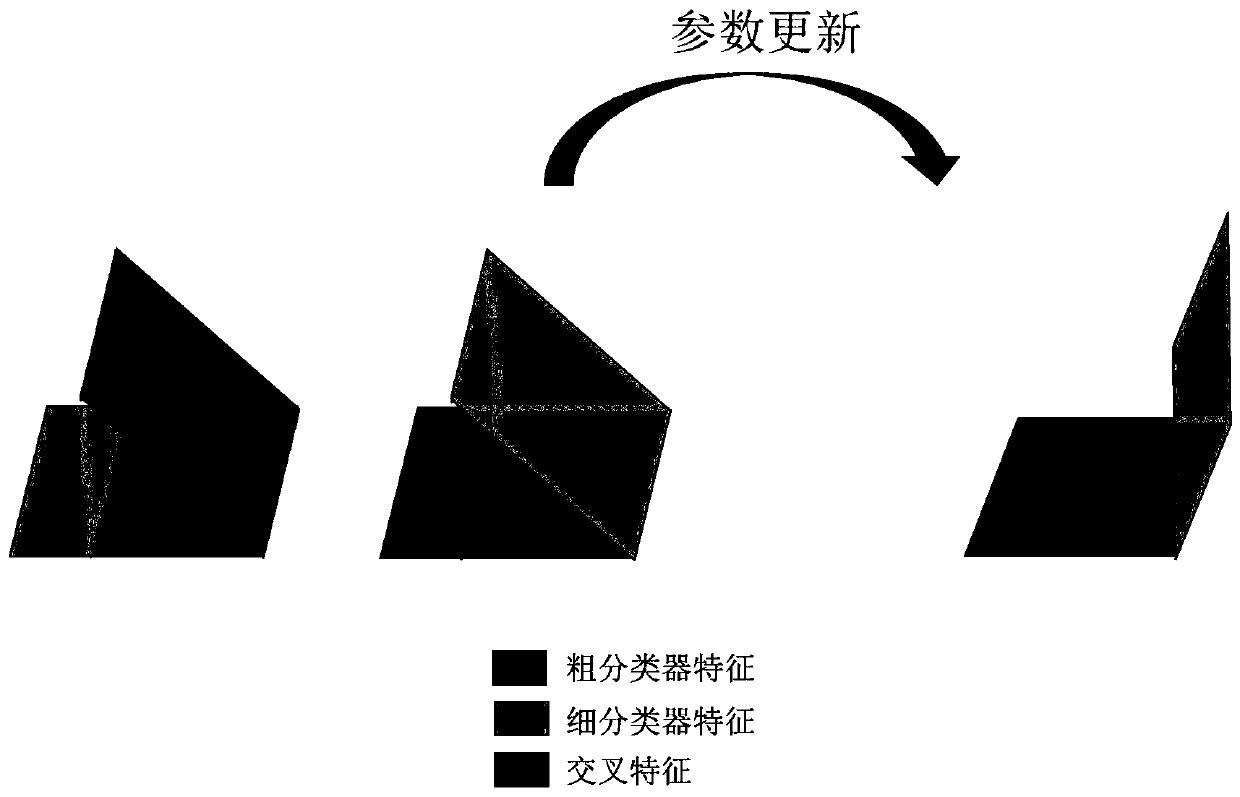

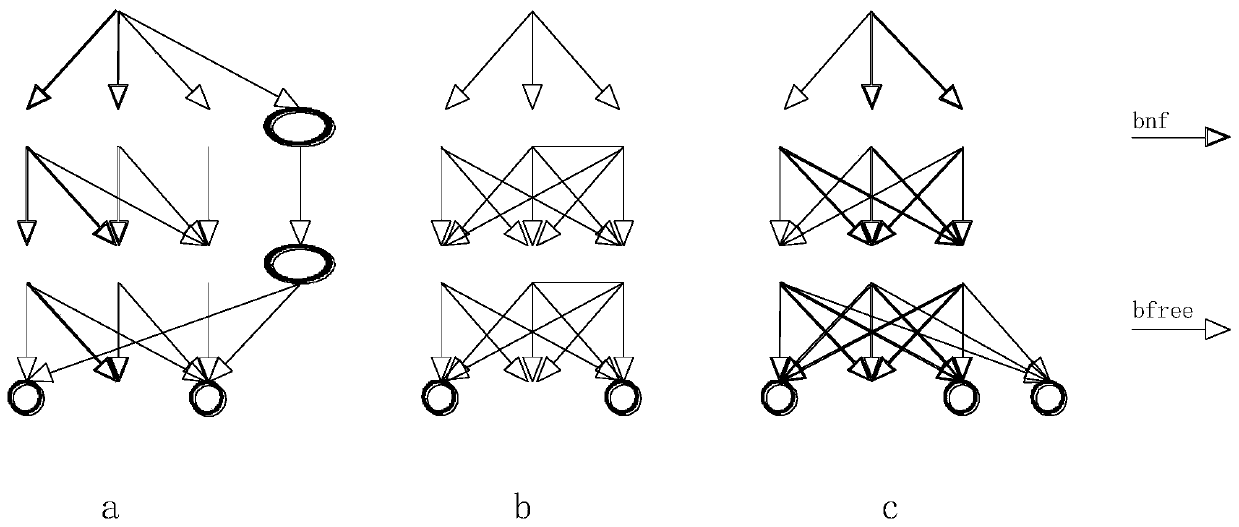

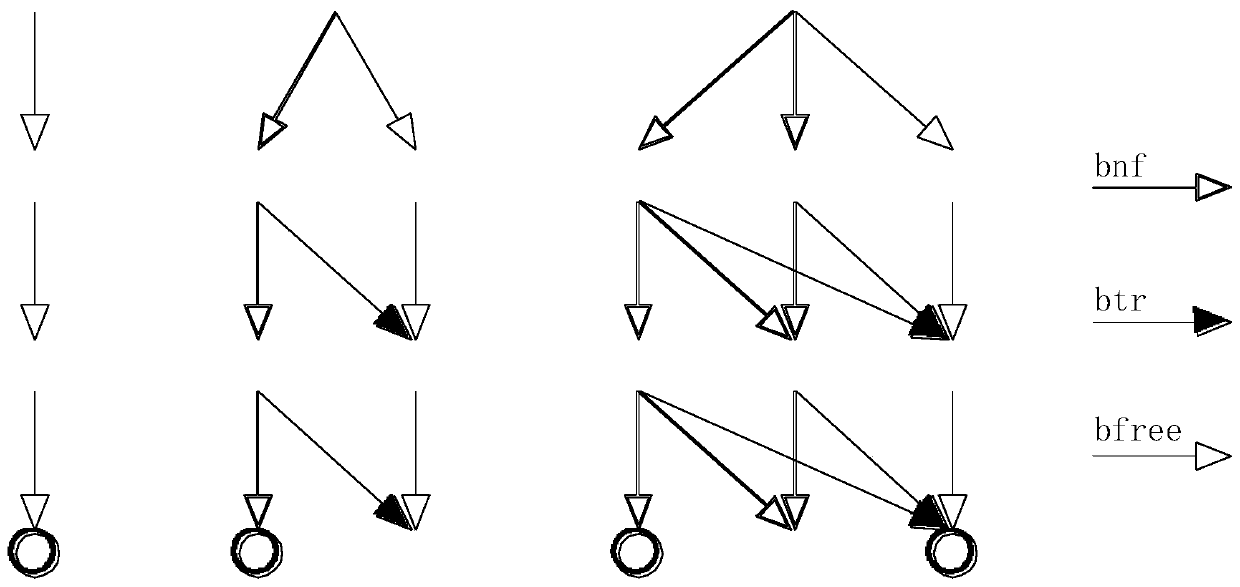

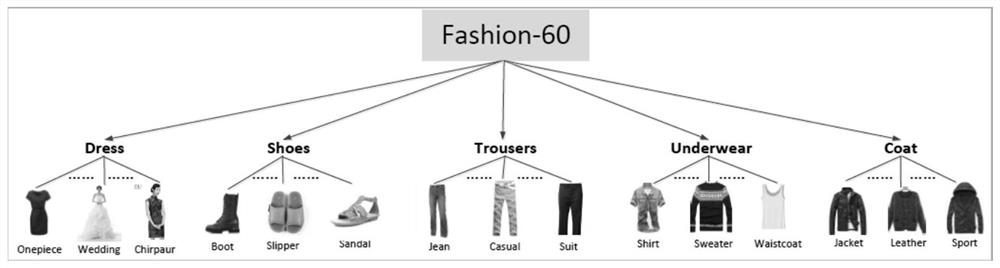

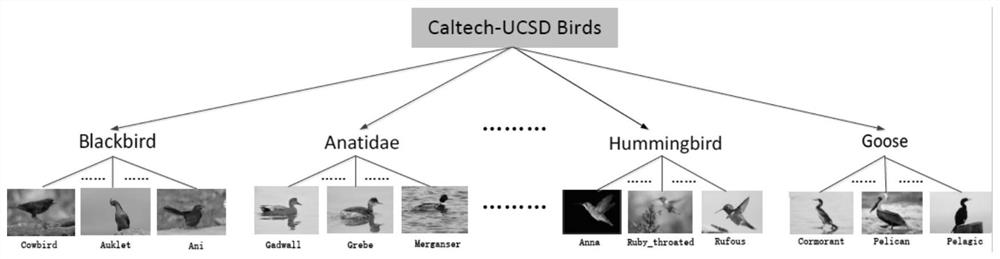

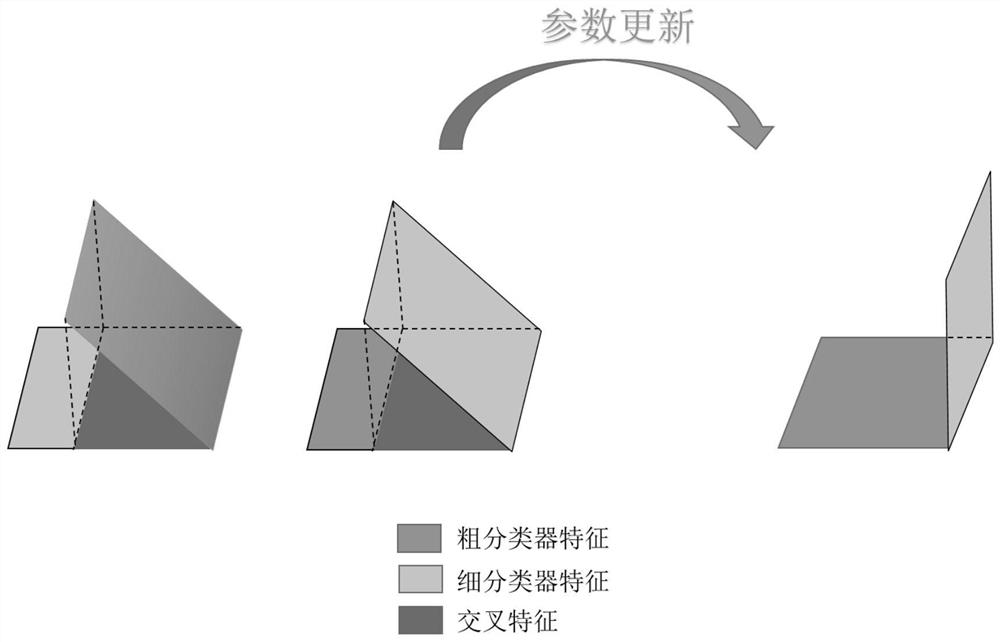

Construction method of multi-task classification network based on orthogonal loss function

Active | CN110929624A | Meet study | Improve separability | Character and pattern recognition | Neural architectures | Hidden layer | Feature extraction

The invention provides a construction method of a multi-task classification network based on an orthogonal loss function. The constructed multi-task classification network simulates a human learning process: a deep convolutional neural network is used as a hidden layer to simulate the human brain performing deep feature extraction, a tree classifier is used as a task-related output layer to perform progressive classification, and the recognition process is composed into different learning tasks. The features obtained by different tasks better meet their respective requirements. When the classifier completes a coarse classification task, the depth features of the same coarse class are more aggregated; when the fine classification task is completed, the depth features of different fine classes are more discrete. The task output layer features of different classification tasks are distinguished, so that classifiers of different hierarchies can obtain features better matched with different classification tasks, useless features are removed, and the classification accuracy is improved.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Method and system for machine comprehension

Inactive | US20190079739A1 | Easy to learn | Accurate location | Artificial life | Model driven code | Control signal | Direct communication

The AKOS (Artificial Knowledge Object System) of the invention is a software processing engine that relates incoming information to pre-existing stored knowledge in the form of a world model and, through a process analogous to human learning and comprehension, updates or extends the knowledge contained in the model, based on the content of the new information. Incoming information can come from sensors, computer-to-computer communication, or natural human language in the form of text messages. The software creates intelligent action as an output. Intelligent action is defined as an output to the real world accompanied by an alteration to the internal world model which accurately reflects an expected, specified outcome from the action. These actions may be control signals across any standard electronic computer interface or may be direct communications to a human in natural language.

Owner:NEW SAPIENCE

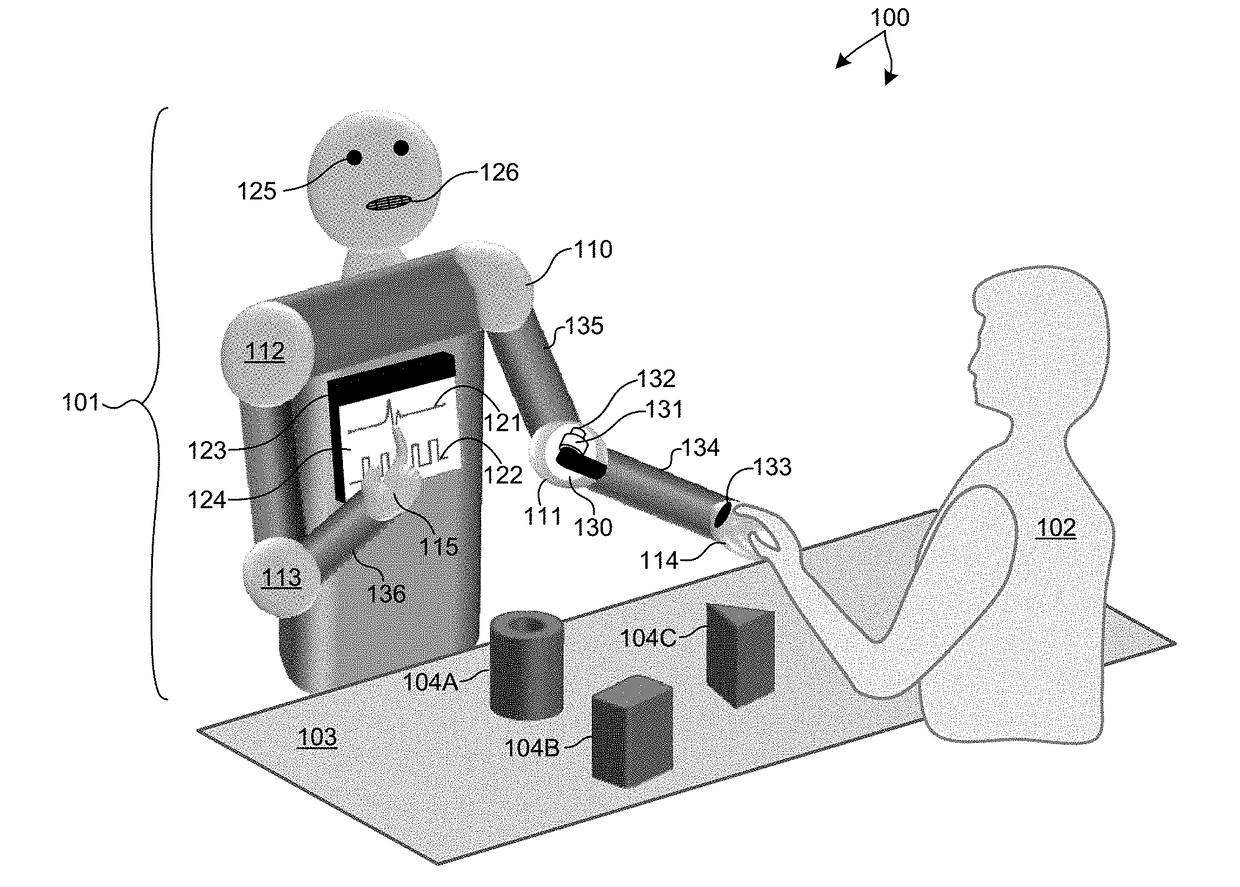

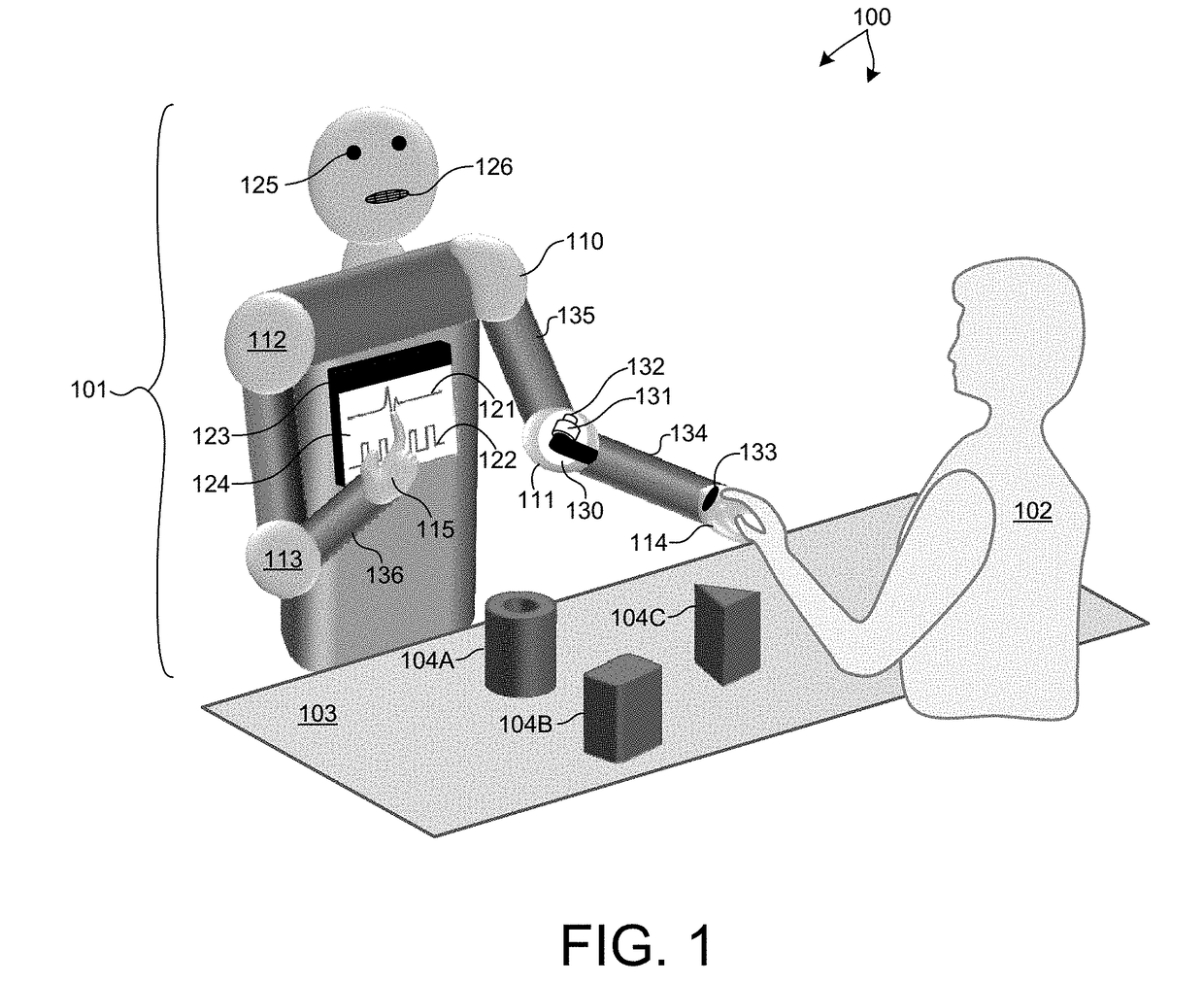

Robotic Instructor And Demonstrator To Train Humans As Automation Specialists

Methods and systems for training a broad population of learners in the field of robotics and automation technology based on physical interactions with a robotic training system are described herein. Specifically, a robotic instructor provides audio-visual instruction and physically interacts with human learners to effectively teach robotics and automation concepts and evaluate learner understanding of those concepts. In some examples, a training robot instructs and demonstrates encoder operation, feedback control, and robot motion coordination with external objects and events while physically interacting with a human learner. In some examples, interlock logic and waypoints of the training robot are programmed by the human user while the training robot physically interacts with the human learner. In a further aspect, a training robot evaluates the proficiency of a human learner with respect to particular robotic concepts. Future instruction by the training robot is determined in part by the measured proficiency.

Owner:BOSTON INCUBATOR CENT LLC

Student profile grading system

The invention relates to a method and means of grading students. This invention is a software application utilizing profiling techniques that, when combined or embedded with available knowledge on the human learning experience, will individually evaluate each student based on their current ability and capacity to learn, by subject. Students' tests are graded electronically against their personal ability and capability profile. The profile system software is applicable to many learning situations requiring a measured outcome.

Owner:ROERS PATRICK G

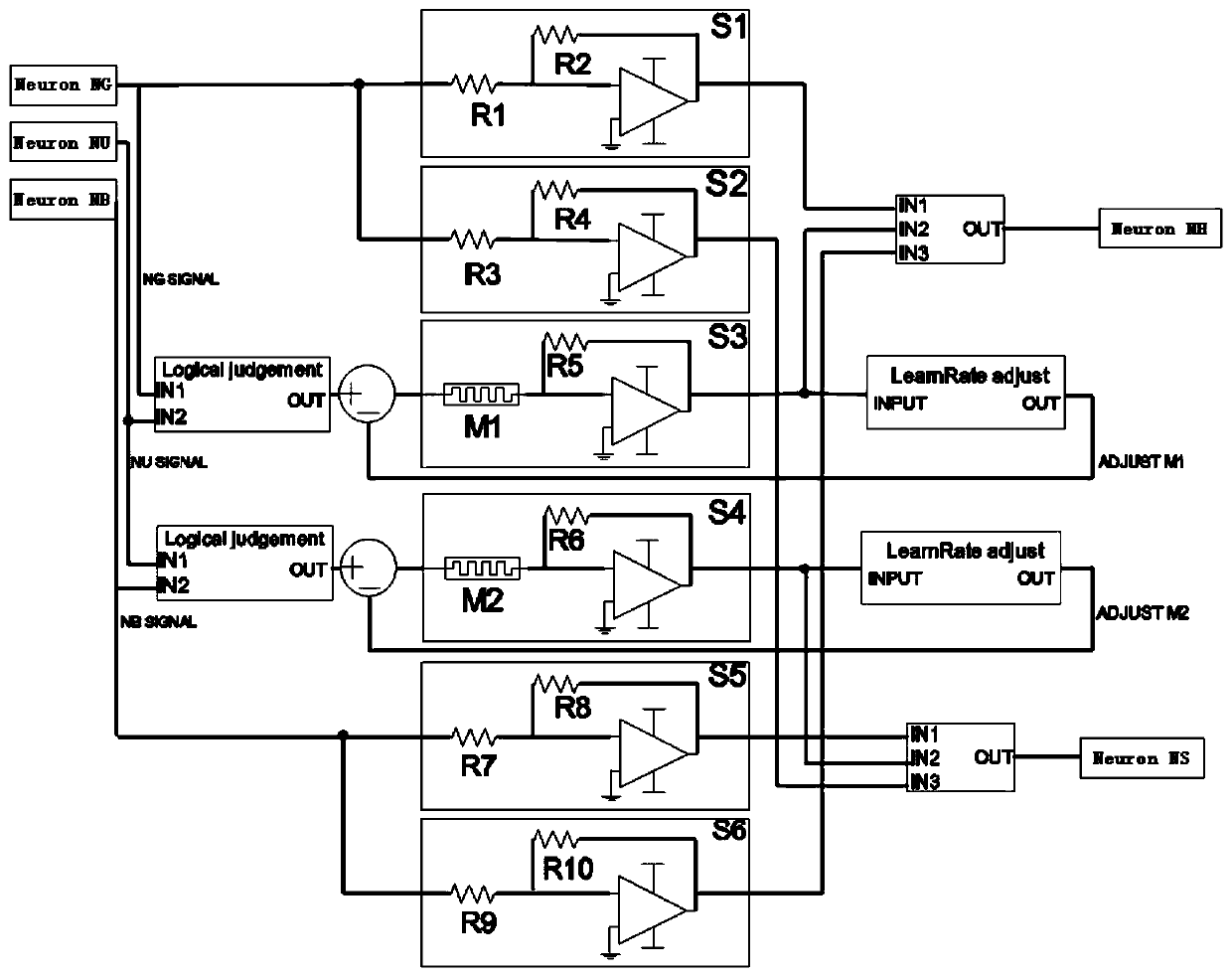

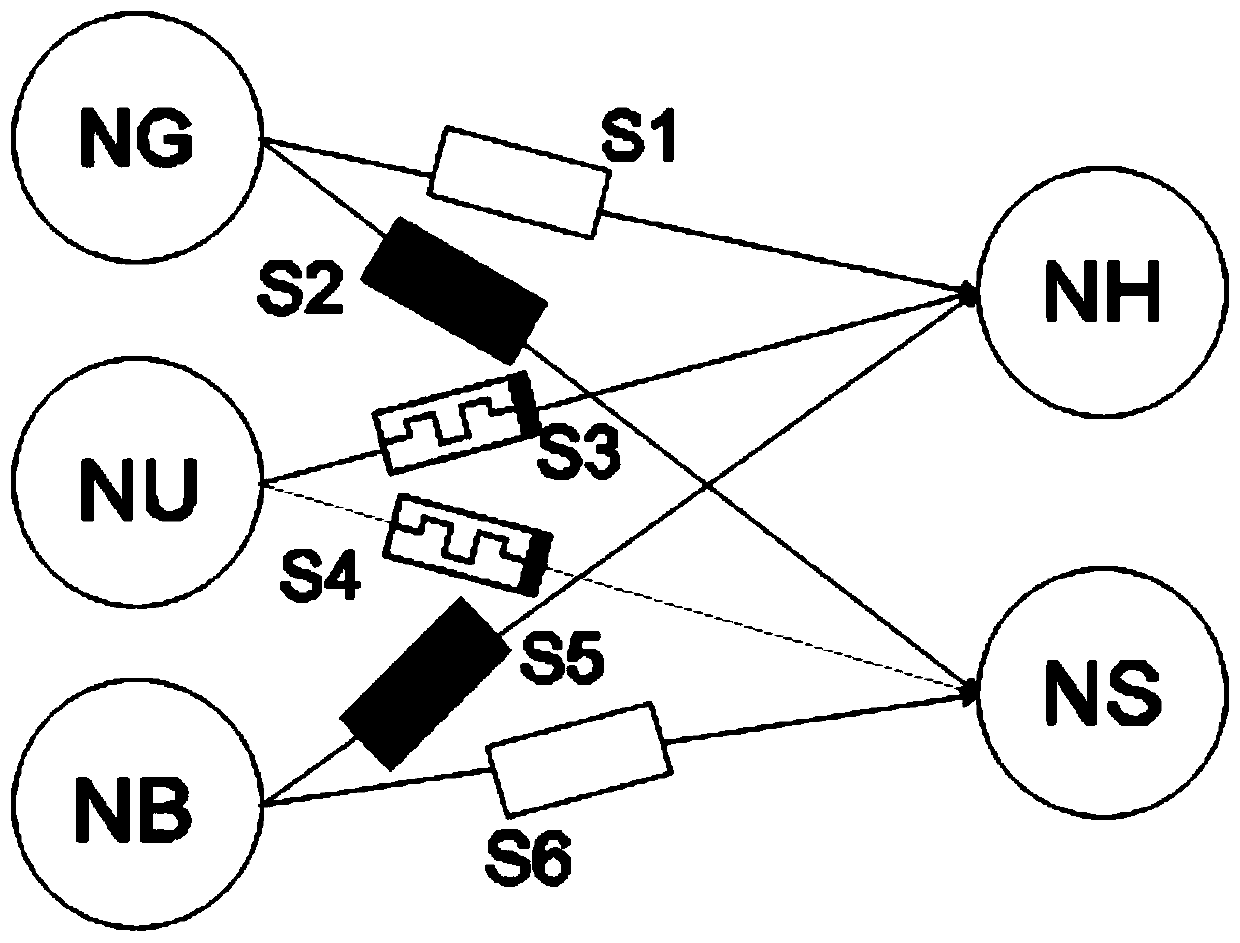

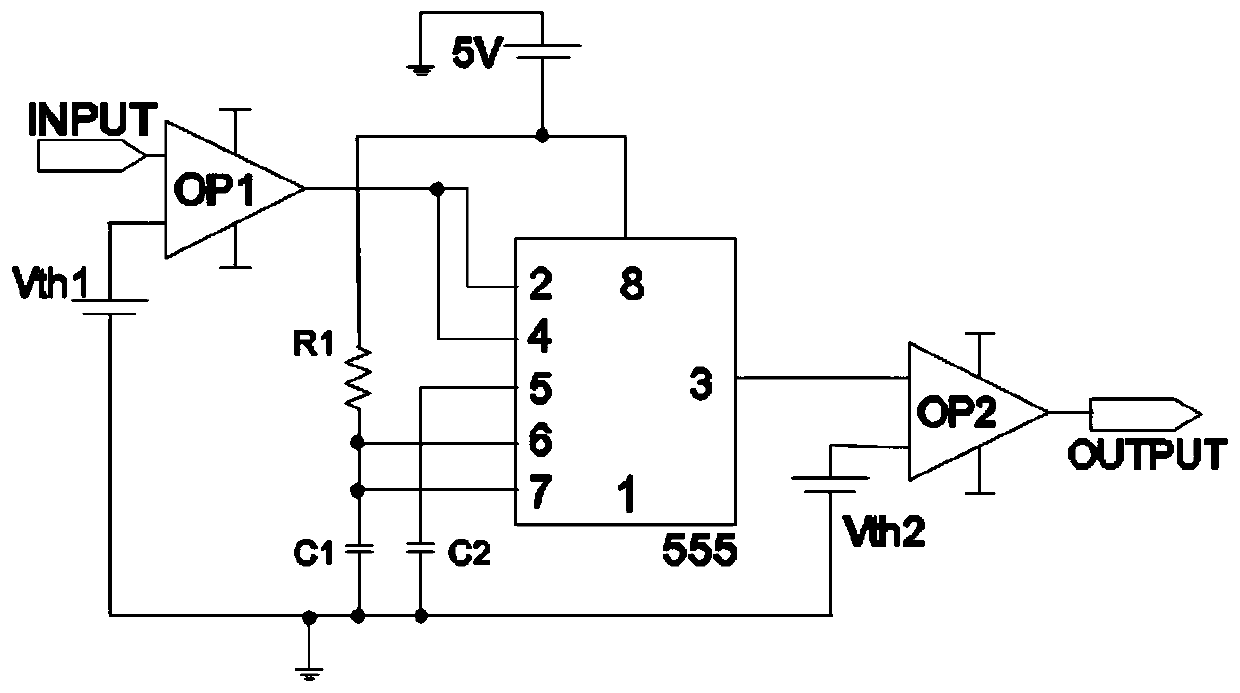

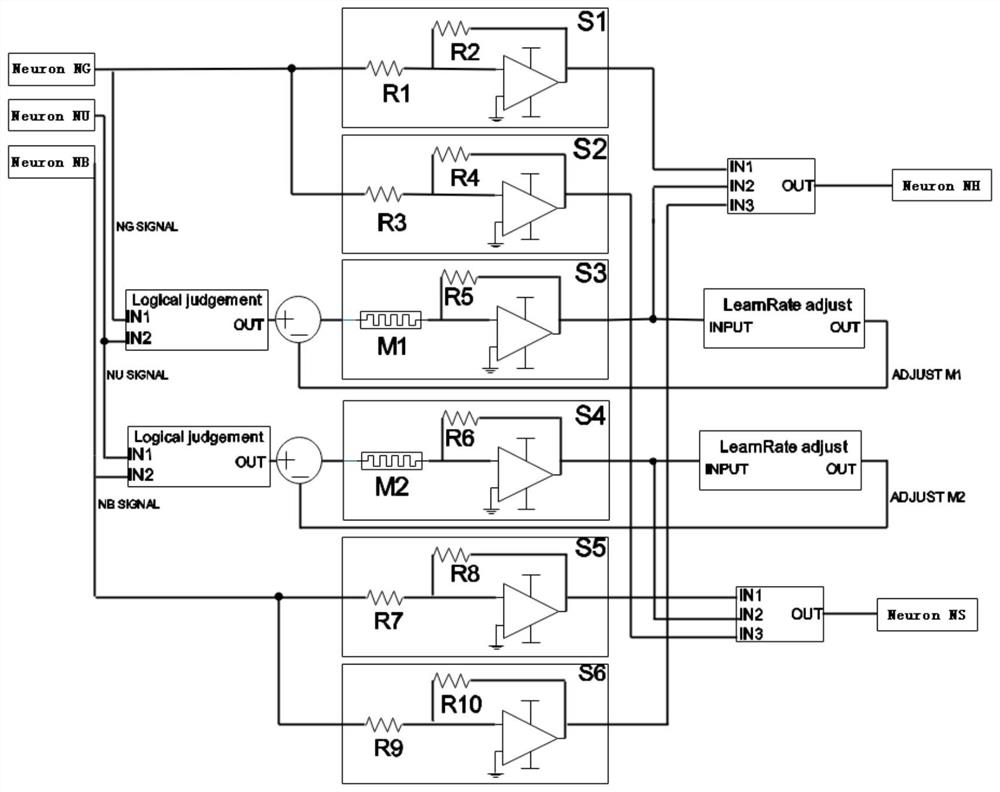

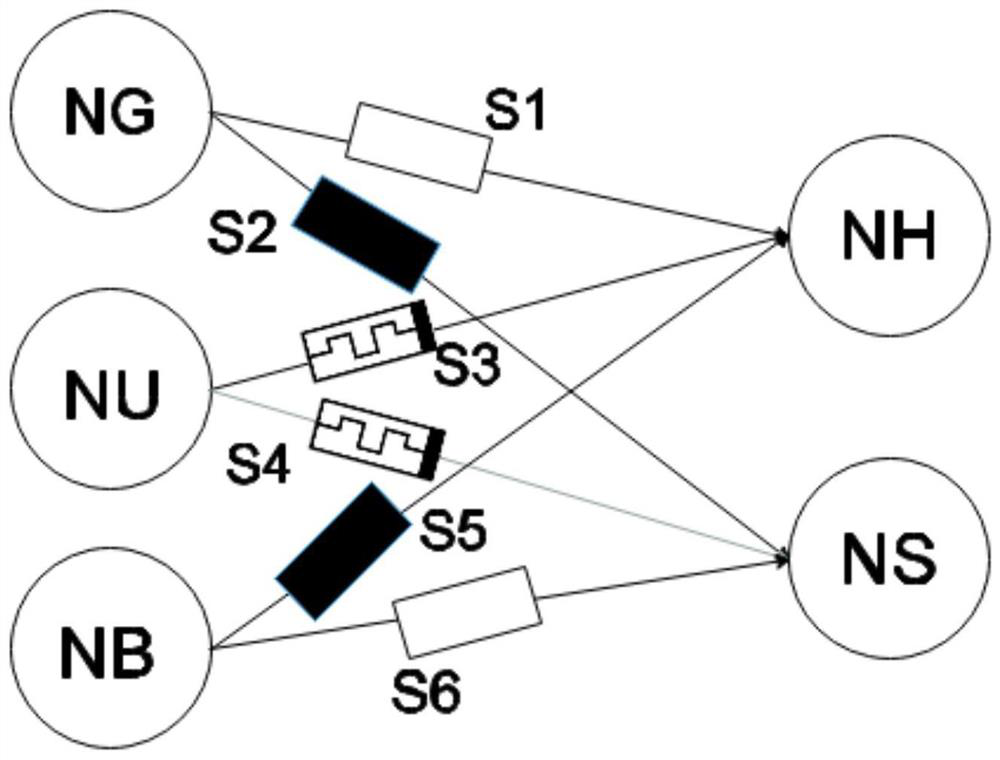

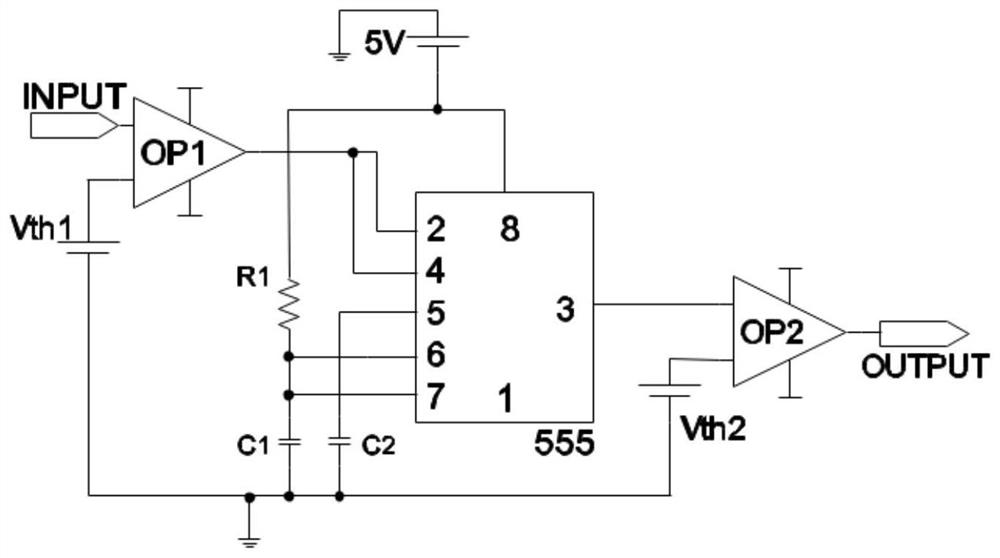

Associative memory emotion recognition circuit based on memristor neural network

Active | CN110110840A | Achieving Change Adjustment | Improve practicality | Artificial life | Physical realisation | Nerve network | Neuron

The invention provides an associative memory emotion recognition circuit based on a memristor neural network. The circuit comprises an input unit, a logic judgment unit, a synaptic unit, a learning speed regulation unit, an output processing unit and an output unit. The input unit is used for simulating an input neuron in a neural network. The output unit is used for simulating output neurons in the neural network. The circuit is used for realizing an associative memory emotion recognition method based on a memristor neural network. An associative memory emotion recognition model is established based on the memristor neural network by using the neural network to simulate the human perceptron. The beneficial effects of the circuit are that its integration degree is higher, simulation of human learning speed changes is achieved, human emotion changes are better simulated, the probability that an intelligent machine simulates human thinking and behaviors is improved, and the bionic capability and the practicability of the simulated neural network are enhanced.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

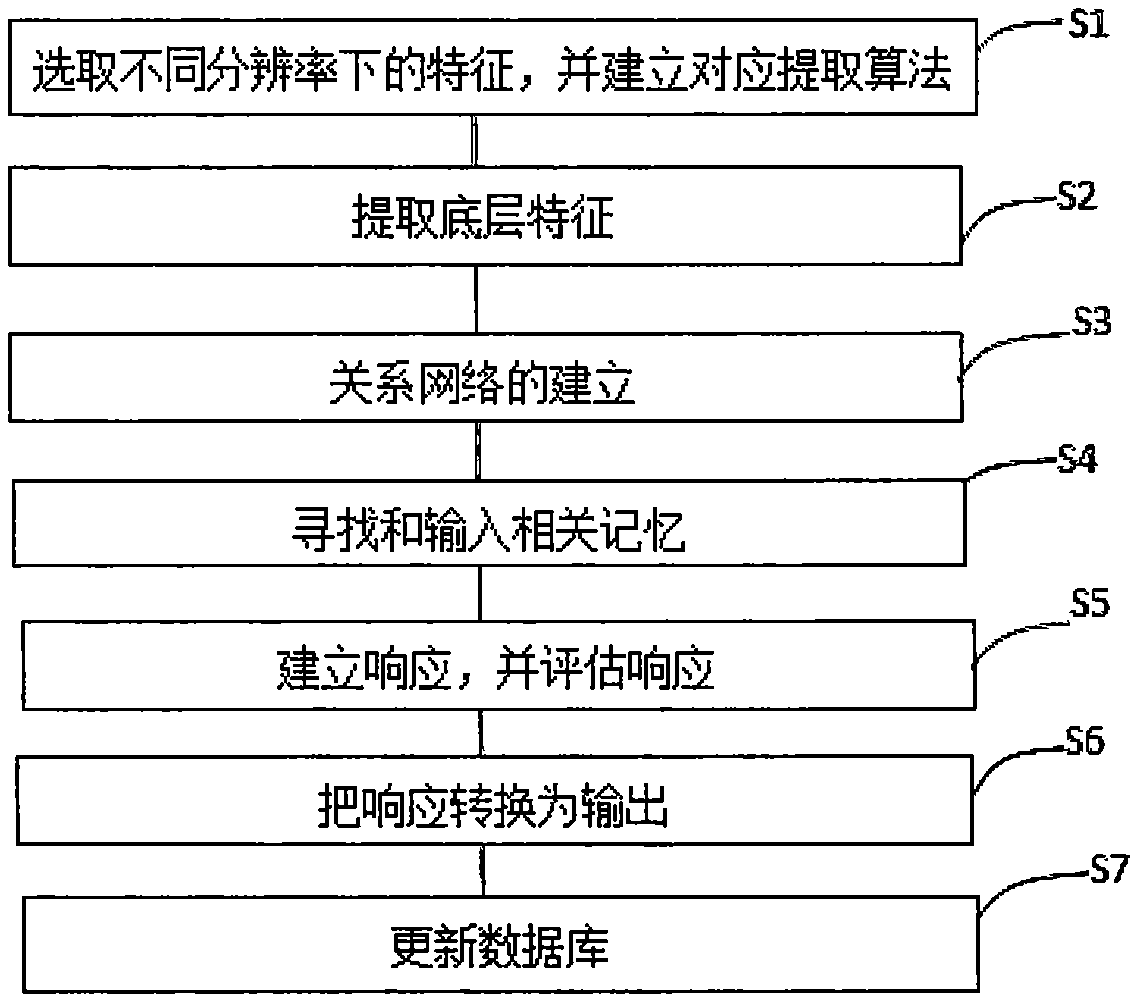

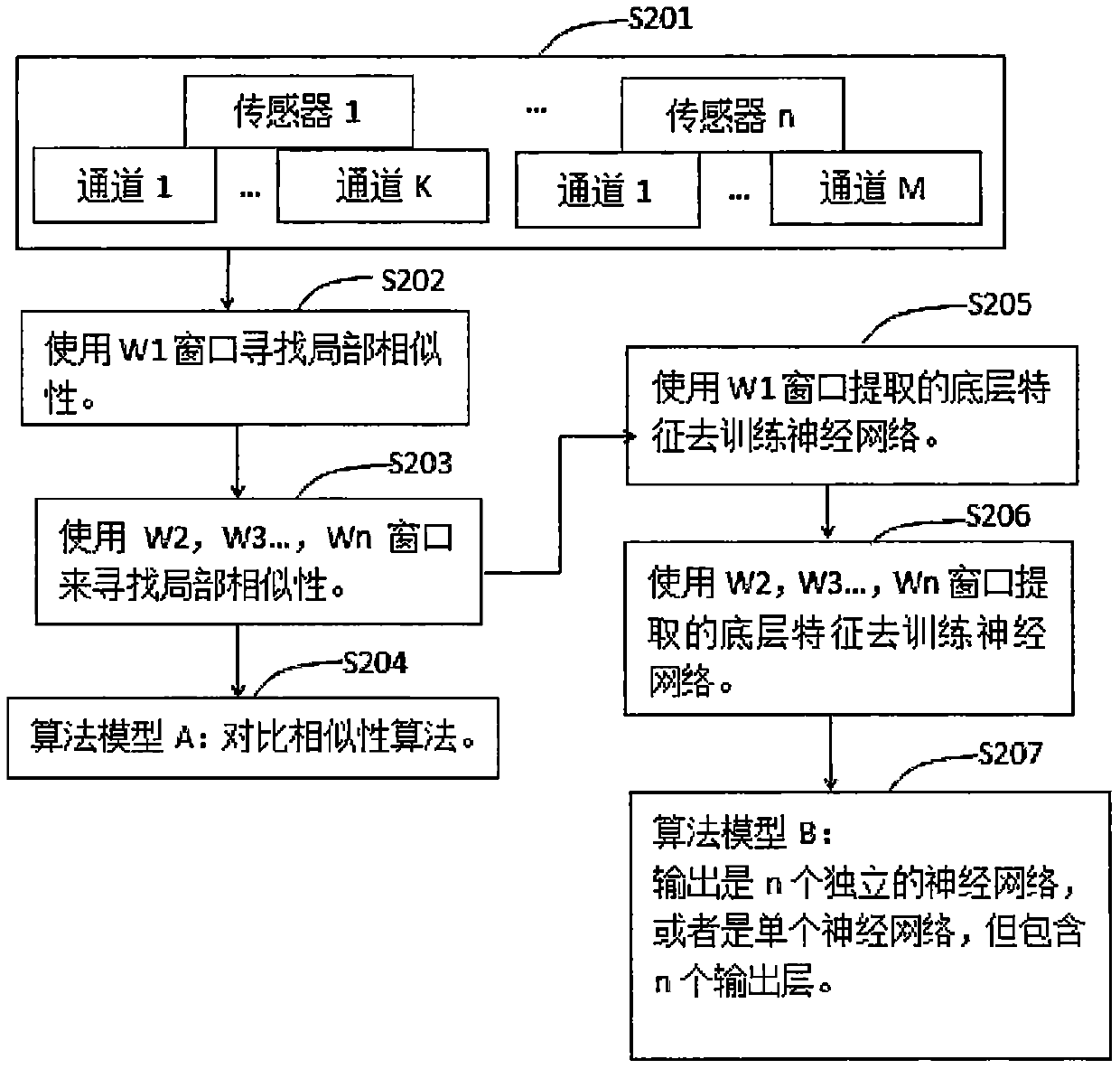

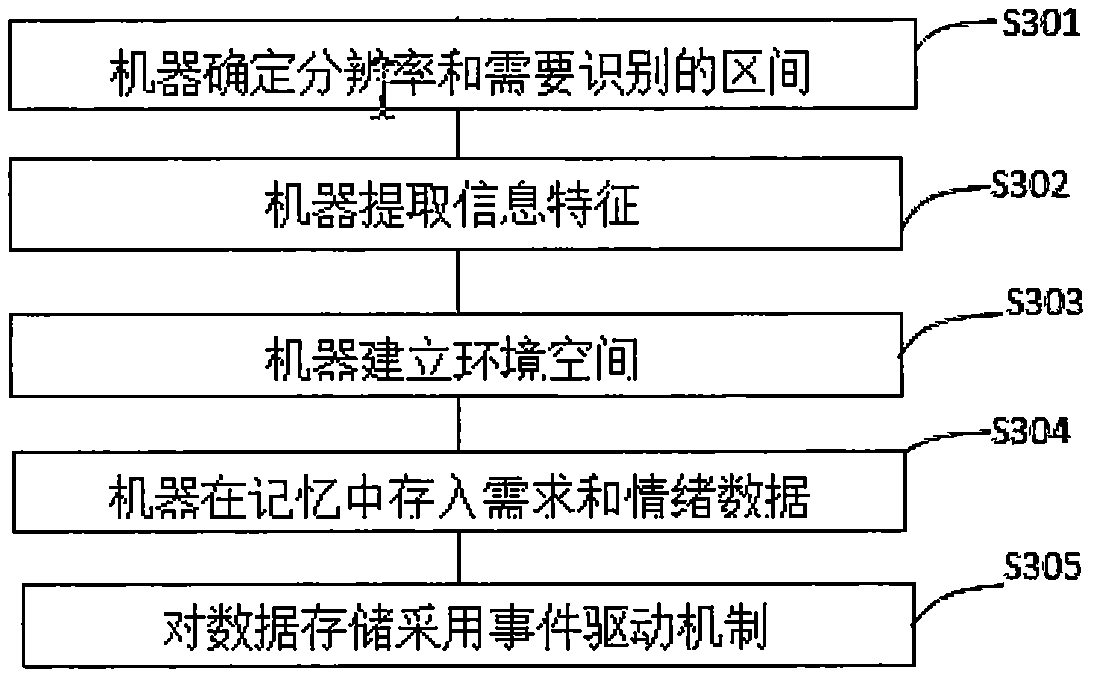

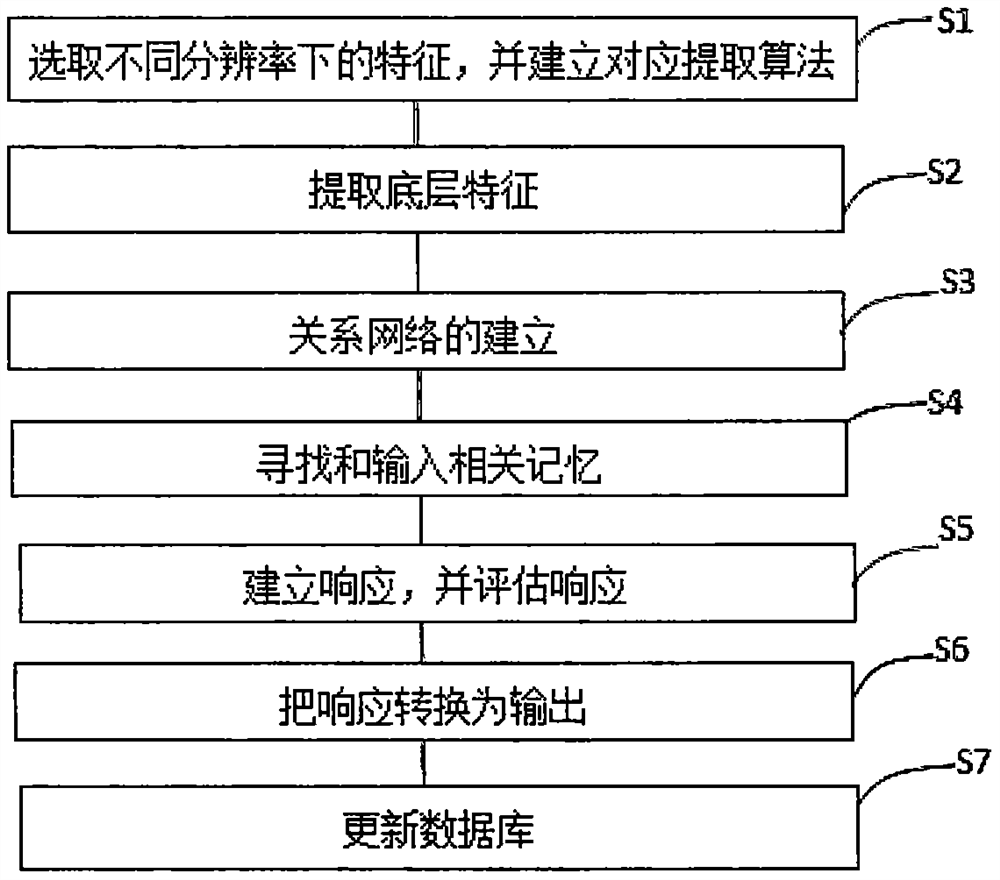

Machine intelligence implementation method similar to human intelligence

The invention provides a learning method. A human learning process is imitated: various recombination schemes are searched by summarizing information, recombining information and a motivating machine, and a process is divided into a plurality of intermediate links to search for simulatable experience and the like. A machine gradually obtains responses from input to output, from simplicity to complexity, and has emotion expressions similar to those of human beings. This shows that the machine learning method provided by the invention is greatly different from the existing machine learning methods in the industry, and no similar method exists in the industry at present.

Owner:陈永聪

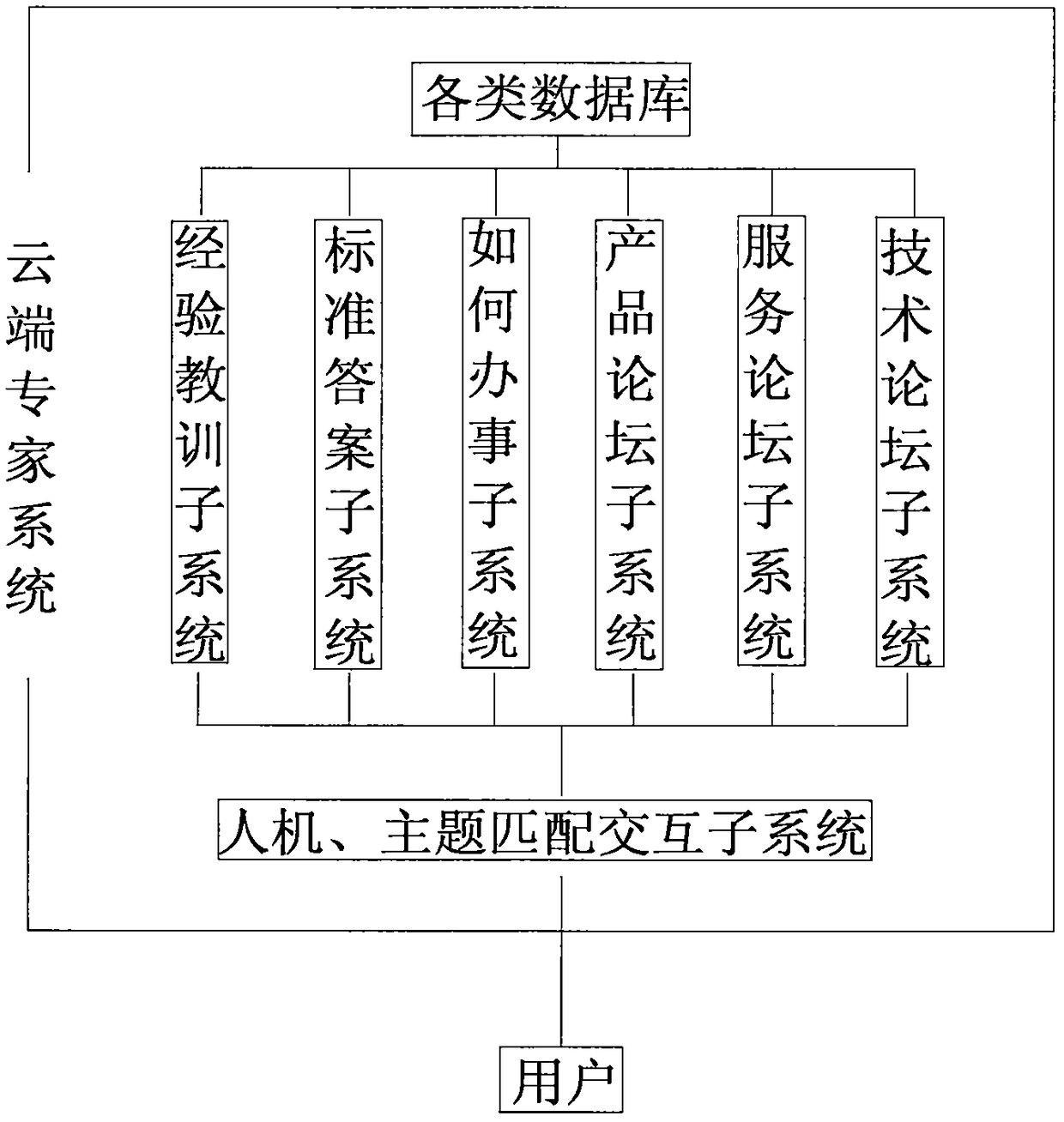

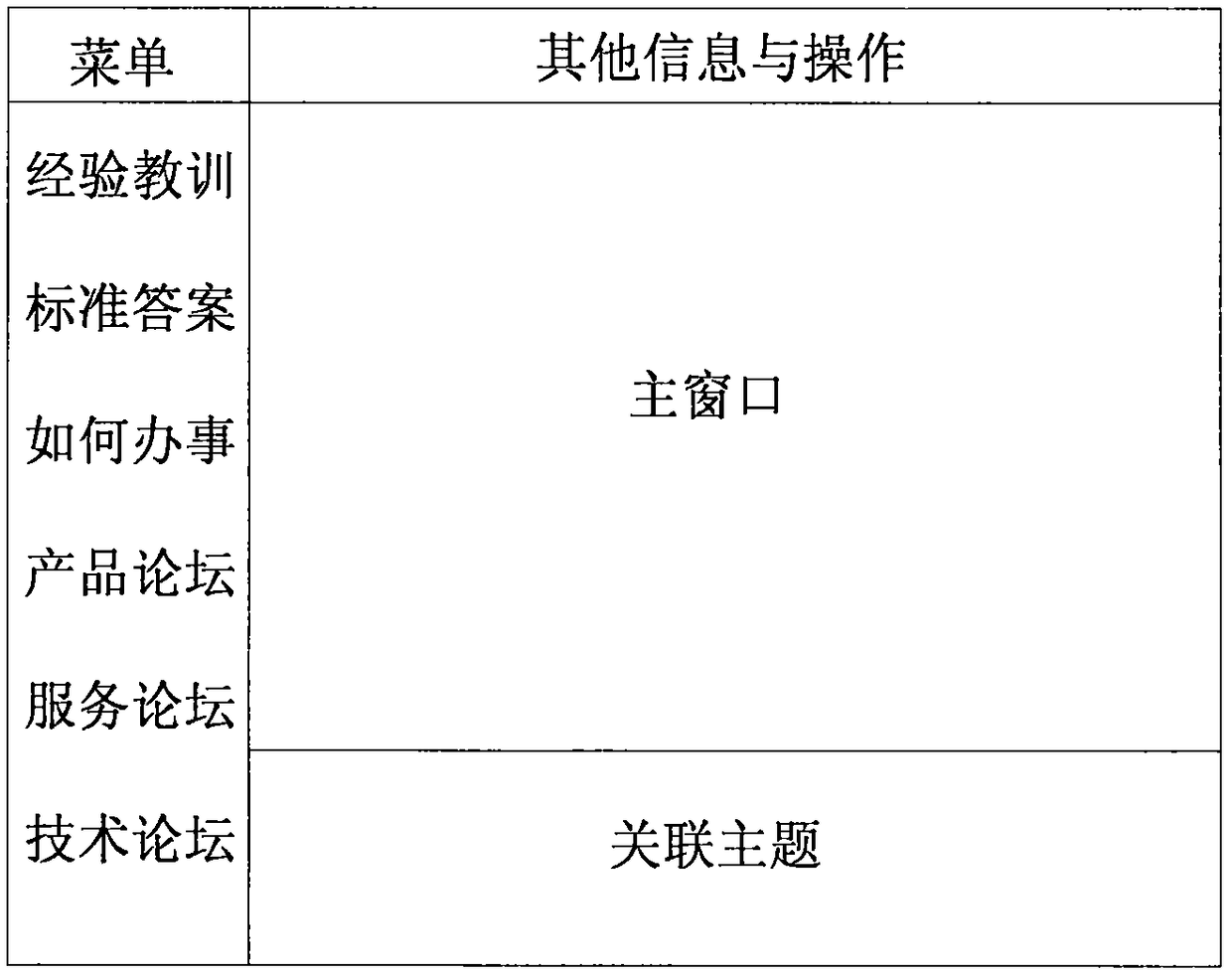

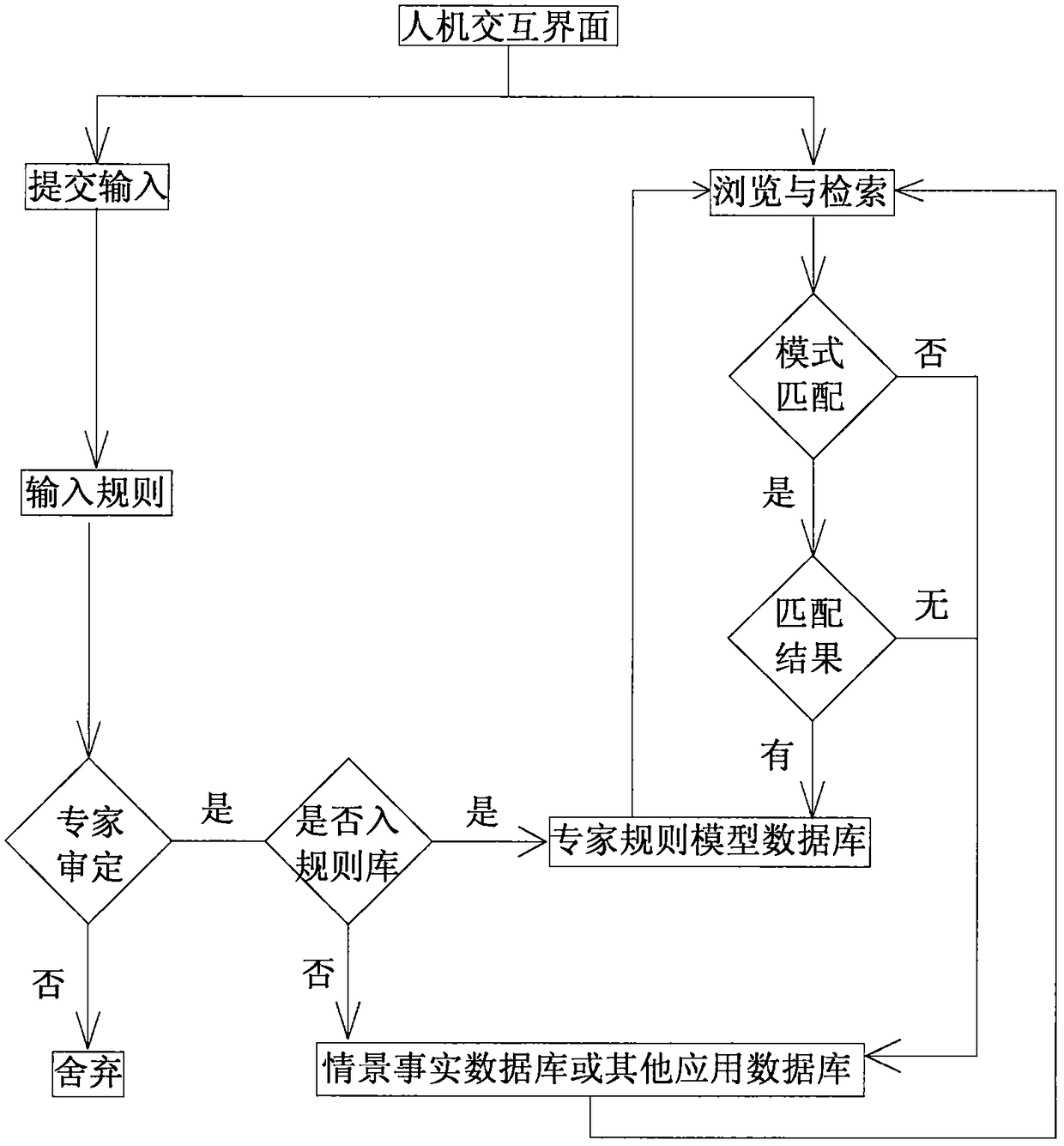

Cloud expert system for improving efficiency of human learning research and decision making affair handling

Inactive | CN108629416A | Improve convenience | Improve accuracy | Inference methods | Technical standard | Human–robot interaction

The invention discloses a cloud expert system for improving efficiency of human learning research and decision making affair handling and belongs to the social application field. The system comprises a human-computer interaction subsystem, an experience subsystem, a standard answer subsystem, a how-to-do subsystem, a product forum subsystem, a service forum subsystem, a technology forum subsystem, a topic matching interaction subsystem and various databases. Learning, research, decision making and affair handling are problems that human beings always face; repeated labor, fragmentation, wrong decision making and affair mishandling are problems that lead to a large amount of manpower, material and time losses in society, and development of the human society is delayed. The system is advantaged in that, on the basis of analyzing the impacts on human learning, research, decision making and affair handling efficiency, and combined with the current scientific and technological conditions, the cloud expert system is proposed and established; learning, research, decision making and affair handling are guided and assisted by following the ideas and knowledge of experts, and problems of errors, repetition and inefficiency in human learning research and decision making affair handling are solved.

Owner:有产亿金(湖北)科技有限公司

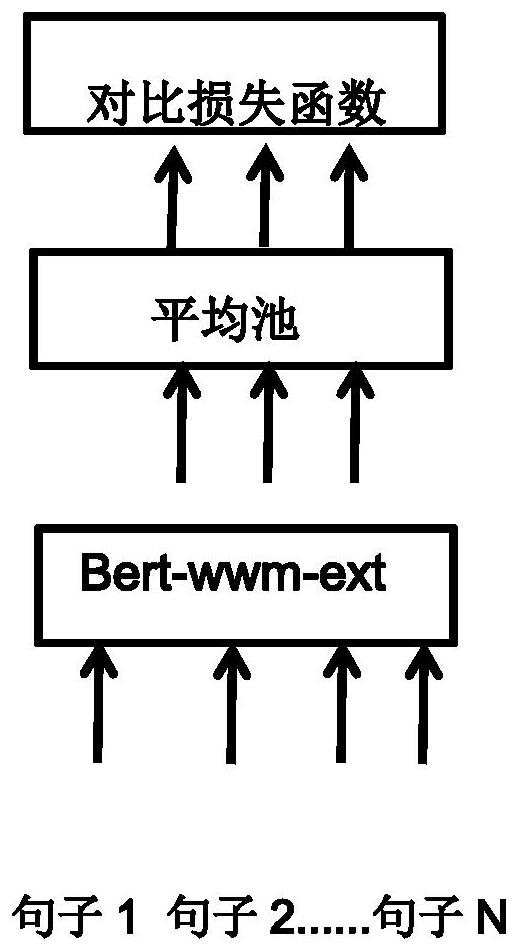

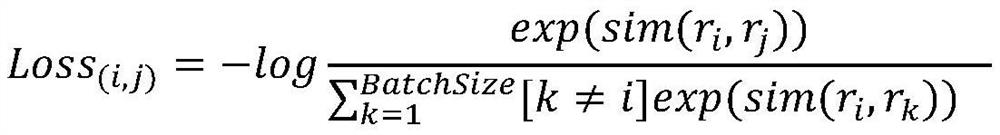

Problem semantic matching method for optimizing BERT

Pending | CN114218921A | High degree of semantic conciseness | High speed | Semantic analysis | Character and pattern recognition | Pattern recognition | Semantic representation

The invention discloses a semantic matching method based on BERT. The method starts from Bert-wwm-ext, a pre-trained model from Harbin Institute of Technology, and first runs unsupervised whole-word-masking training on the applicant's own large data set so that the model adapts to the characteristics of that data. After the adapted model is stored, its structure is adjusted: a pooling layer is added to the output layer of BERT, and when sentences are input, each batch contains a specific group of sentences, some of which are semantically similar while the rest are semantically different, so that the model learns in a way similar to human learning and the sentences can be input into BERT. Contrastive learning between data is taken into account, so the model converges more quickly. After the model architecture transformation is completed, sentence semantic similarity training is conducted again on large corpora based on the model, with comparison calculation between synonymous and non-synonymous sentences added to the training process; the model is then updated by back propagation, and the sentence semantic similarity is obtained. The sentence vector semantic representation finally obtained is more practical.

Owner:中国医学科学院医学信息研究所
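
The batching scheme in the CN114218921A abstract, where each batch mixes semantically similar and dissimilar sentences, resembles in-batch contrastive learning. The abstract does not give the loss, so the sketch below assumes an InfoNCE-style objective over cosine similarities of pooled sentence vectors. The vectors are made-up stand-ins for pooled BERT outputs, and `contrastive_loss` and `temperature` are hypothetical names.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def contrastive_loss(anchor, positive, negatives, temperature=0.05):
    """Assumed InfoNCE-style loss for one anchor sentence.

    The loss is small when the anchor is closer to its synonymous
    sentence (positive) than to the non-synonymous ones (negatives).
    """
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))

# Toy 2-D "sentence vectors" standing in for pooled encoder outputs.
anchor = [1.0, 0.0]
synonym = [0.9, 0.1]
unrelated = [[0.0, 1.0], [-1.0, 0.0]]

well_separated = contrastive_loss(anchor, synonym, unrelated)
confused = contrastive_loss(anchor, unrelated[0], [synonym])
print(well_separated < confused)
```

Back propagation through a loss of this kind would pull synonymous sentence vectors together and push non-synonymous ones apart, which is one plausible reading of the "comparison calculation" the abstract mentions.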

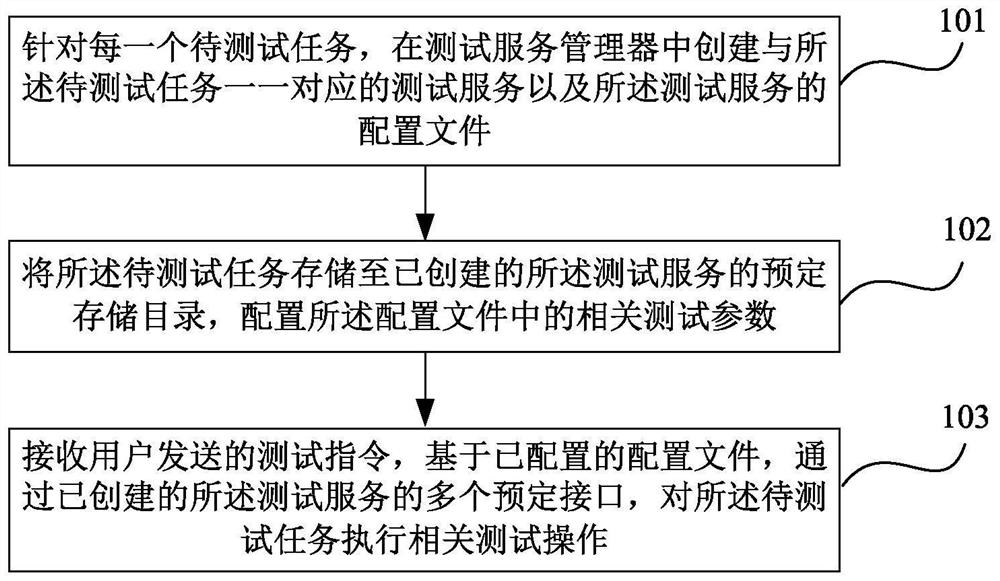

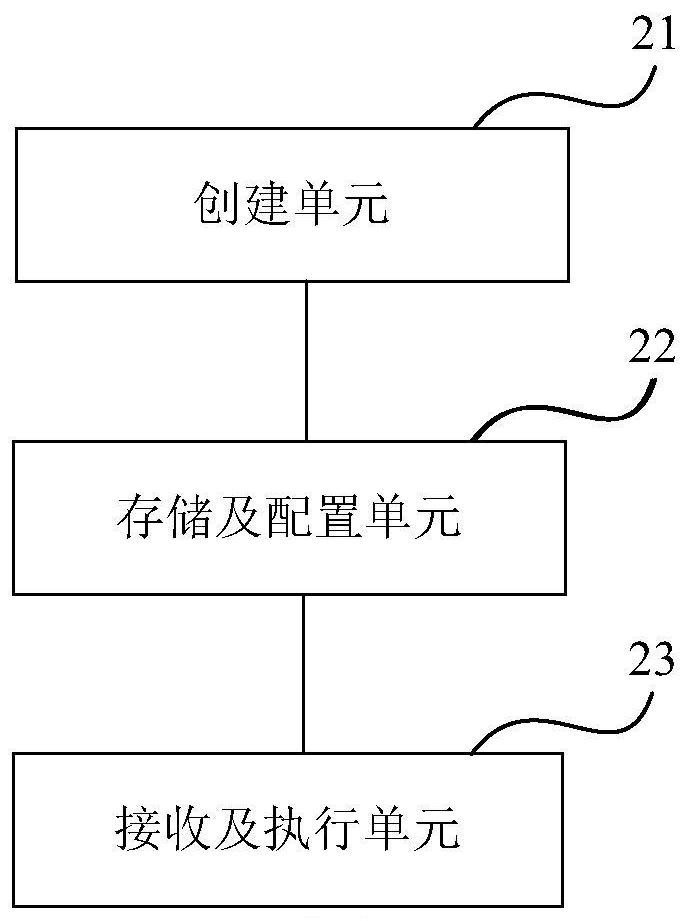

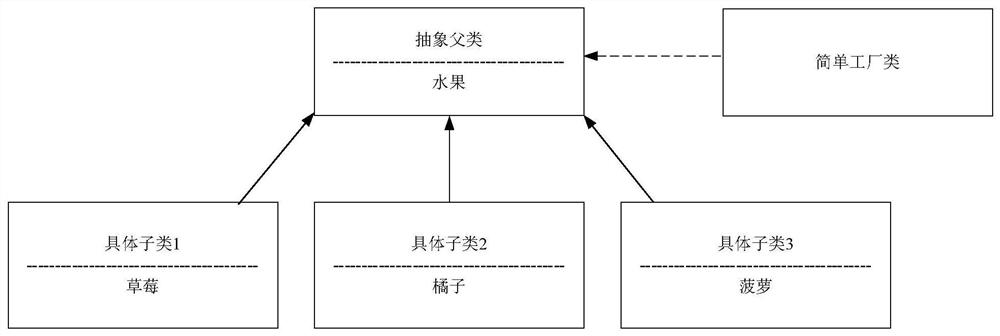

A test method and test device based on simple factory mode

Active | CN108228452B | Improve learning efficiency | Improve compatibility | Software testing/debugging | Directory | Test set

The embodiment of the present invention provides a test method and test device based on a simple factory pattern. The test method includes: for each task to be tested, creating, in the test service manager, a test service corresponding to the task and a configuration file for that test service; storing the task to be tested in a predetermined storage directory of the created test service and configuring the relevant test parameters in the configuration file; and receiving a test instruction sent by the user and, based on the configured configuration file, executing the related test operations on the task to be tested through multiple predetermined interfaces of the created test service. Through the present invention, the deployment process of the tested environment is incorporated into the test service management system, which makes it convenient for the testers in charge of deploying the test environment to execute the test process with one key without additional deployment, and at the same time improves the learning efficiency of newcomers.

Owner:MICRO DREAM TECHTRONIC NETWORK TECH CHINACO
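
As a rough illustration of the simple factory pattern that the CN108228452B abstract relies on, the sketch below has a manager create a service object per task from a kind string, keep its configuration, and run it through a fixed set of interfaces. Everything here is hypothetical: the class names, the `"api"` and `"ui"` kinds, and the `deploy` and `execute` interfaces are invented for the example and are not taken from the patent.

```python
class ServiceBase:
    """Common interface that every test service exposes."""

    def __init__(self, task_name, config):
        self.task_name = task_name
        self.config = dict(config)  # per-service configuration

    def deploy(self):
        env = self.config.get("env", "local")
        return f"deploy {self.task_name} to {env}"

    def execute(self):
        raise NotImplementedError


class ApiService(ServiceBase):
    def execute(self):
        timeout = self.config.get("timeout", 30)
        return f"api test of {self.task_name} with timeout {timeout}s"


class UiService(ServiceBase):
    def execute(self):
        browser = self.config.get("browser", "headless")
        return f"ui test of {self.task_name} in {browser} browser"


class ServiceManager:
    """Simple factory: callers name a kind and never touch the classes."""

    _registry = {"api": ApiService, "ui": UiService}

    def __init__(self):
        self.services = {}

    def create(self, kind, task_name, config):
        if kind not in self._registry:
            raise ValueError(f"unknown service kind: {kind}")
        service = self._registry[kind](task_name, config)
        self.services[task_name] = service
        return service

    def run(self, task_name):
        """One-step run: deployment followed by the test itself."""
        service = self.services[task_name]
        return [service.deploy(), service.execute()]


manager = ServiceManager()
manager.create("api", "login", {"env": "staging", "timeout": 5})
print(manager.run("login"))
```

Keeping creation inside the manager means a newcomer only needs to know the kind string and the configuration keys, which matches the abstract's stated goal of one-key execution without additional deployment.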

Method and system for machine comprehension

The AKOS (Artificial Knowledge Object System) of the invention is a software processing engine that relates incoming information to pre-existing stored knowledge in the form of a world model and, through a process analogous to human learning and comprehension, updates or extends the knowledge contained in the model, based on the content of the new information. Incoming information can come from sensors, computer-to-computer communication, or natural human language in the form of text messages. The software creates intelligent action as an output. Intelligent action is defined as an output to the real world accompanied by an alteration to the internal world model which accurately reflects an expected, specified outcome from the action. These actions may be control signals across any standard electronic computer interface or may be direct communications to a human in natural language.

Owner:NEW SAPIENCE LTD

A three-dimensional shape recognition method for robots based on multi-view information fusion

Active | CN106951923B | Improve recognition accuracy | Efficient sorting | Character and pattern recognition | Neural learning methods | 3d shapes | Learning machine

The invention proposes a robot three-dimensional shape recognition method based on multi-view information fusion, which combines the advantages of the full-view and single-view methods and overcomes the shortcomings of the full-view and single-view methods. Through the multi-view information of the three-dimensional shape obtained by the robot in motion, the image similarity detection technology is first used to sort the similarity of the images, and then the hierarchical depth features are obtained through the convolutional neural network. Visual features of temporal and spatial sequences are learned to obtain highly abstract spatio-temporal features. The invention not only simulates the hierarchical learning mechanism of human beings, but also innovatively adds a learning mechanism that simulates the time-space sequence of human learning, thereby realizing the high-precision classification and recognition of three-dimensional shapes by multi-view information fusion.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

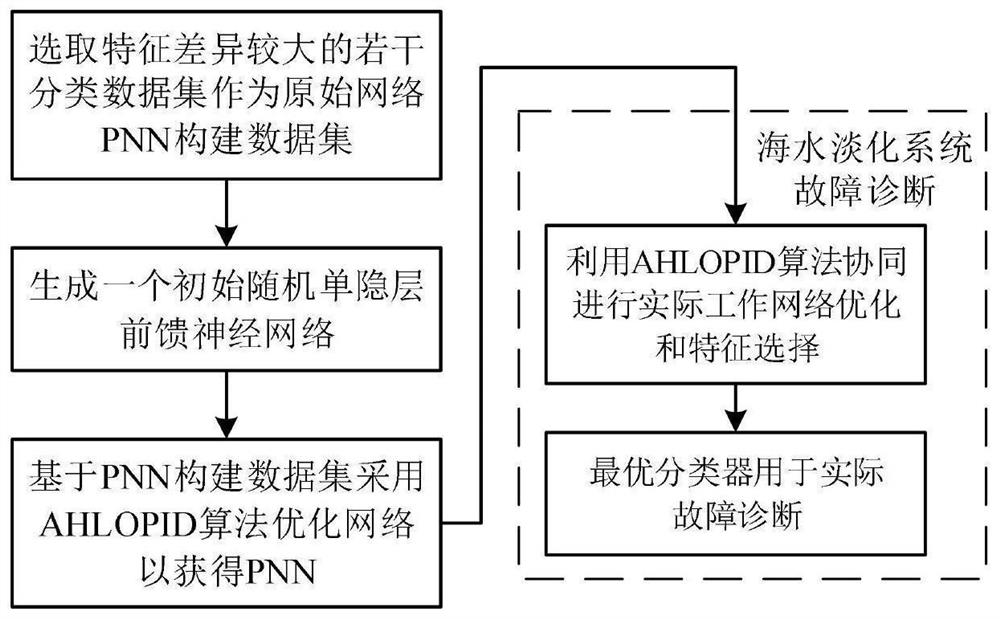

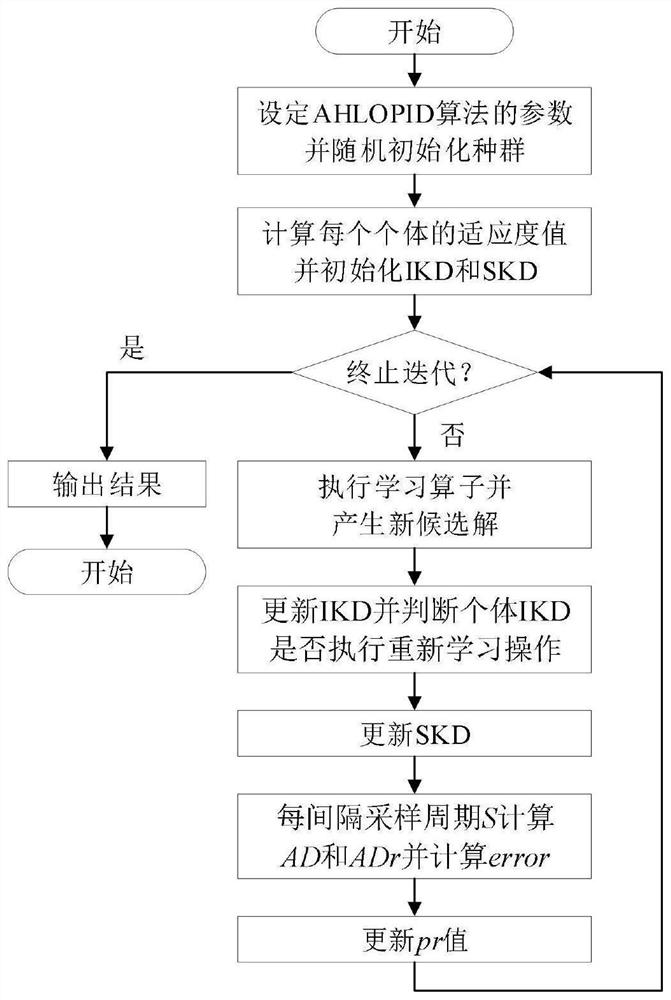

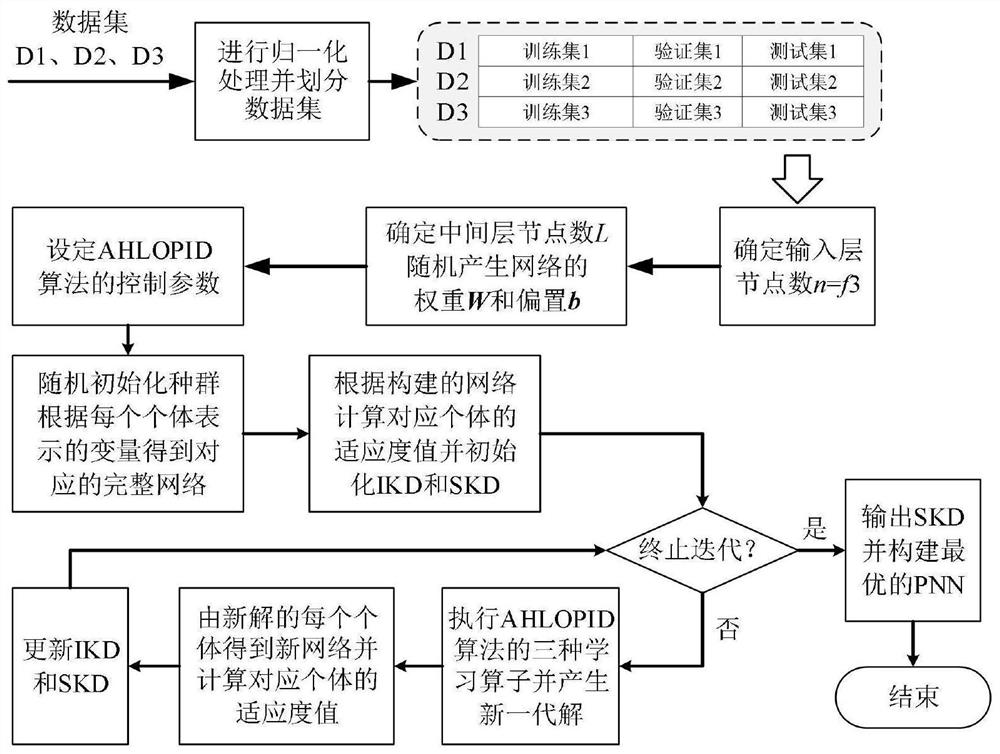

Sea water desalination system fault diagnosis method based on improved selective evolution random network

Pending | CN113688559A | Improve global optimization capabilities | Simple structure | Design optimisation/simulation | Multi-objective optimisation | Data set | Engineering

The invention discloses a sea water desalination system fault diagnosis method based on an improved selective evolution random network, and relates to the technical field of fault diagnosis, and the method comprises the following steps: selecting a plurality of classification data sets with large feature differences as an original network (PNN) to construct a data set; generating an initial random single hidden layer feedforward neural network; constructing a data set based on the PNN, and optimizing the network by adopting an adaptive human learning optimization algorithm (AHLOPID) based on intelligent PID control to obtain the PNN; enabling the PNN to be used for specific fault diagnosis, and carrying out actual working network optimization and feature selection through AHLOPID cooperation based on fault data of the sea water desalination system; and finally, enabling the obtained optimal classifier to be used for actual fault diagnosis. According to the method, the fault diagnosis generalization performance is improved by constructing the PNN, and the AHLOPID is used for network design to overcome instability caused by randomization in practical application of the random feedforward neural network, so that the fault diagnosis accuracy of the sea water desalination system is improved, and stable operation of the system is ensured.

Owner:SHANGHAI UNIV

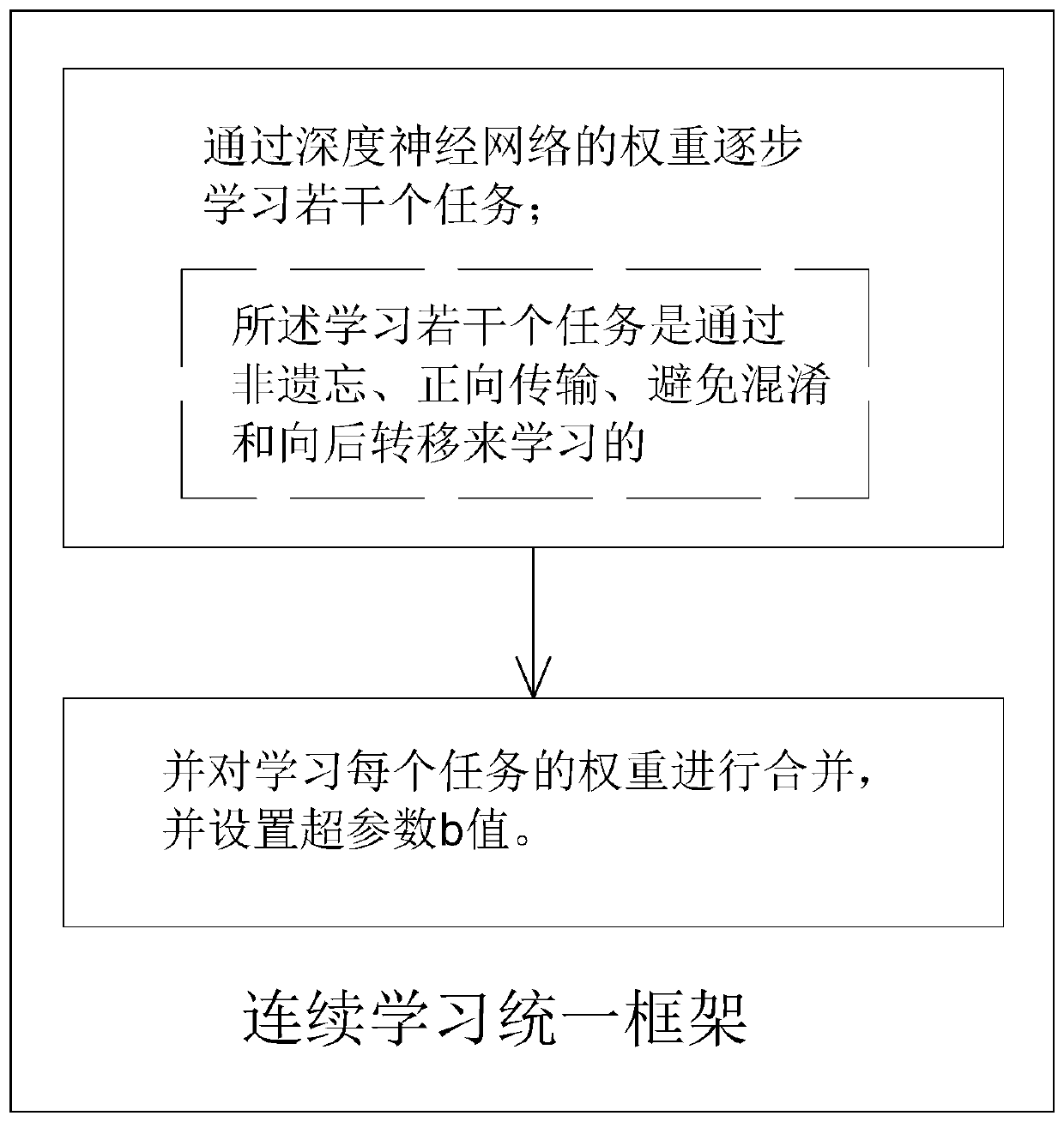

Continuous learning unified framework based on deep neural network

Pending | CN111382869A | Continuous learning | Avoid confusion | Neural architectures | Neural learning methods | Machine learning | Neural network nn

The invention provides a continuous learning unified framework based on a deep neural network. The continuous learning unified framework comprises the following steps: gradually learning a plurality of tasks through the weight of the deep neural network; wherein the plurality of learning tasks are learned through non-forgetting, forward transmission, confusion prevention and backward transfer; and combining the weights of the learning tasks, and setting a hyper-parameter b value. According to the framework, all attributes can be demonstrated by using a small amount of weight merging parameters in the deep neural network through the framework; besides, the behavior and mechanism of the framework are similar to those of human learning, such as non-forgetting and forward transmission, so that confusion and backward transfer are avoided. As a bidirectional inspiration channel, continuous learning of the machine and human beings is further understood.

Owner:深圳深知未来智能有限公司

An associative memory emotion recognition circuit based on memristive neural network

Active | CN110110840B | Achieving Change Adjustment | Improve practicality | Artificial life | Physical realisation | Synapse | Neuron

The invention provides an associative memory emotion recognition circuit based on a memristive neural network. The circuit includes an input unit, a logic judgment unit, a synapse unit, a learning speed adjustment unit, an output processing unit and an output unit. The input unit is used to simulate the input neuron in the neural network; the output unit is used to simulate the output neuron in the neural network. The circuit is used to realize an associative memory emotion recognition method based on the memristive neural network, in which an associative memory emotion recognition model is established on the memristive neural network to simulate the human perceptron. The beneficial effects of the present invention are: the associative memory emotion recognition circuit based on the memristive neural network has a higher degree of integration, realizes the simulation of changes in human learning speed, better simulates changes in human emotion, improves the possibility of intelligent machines simulating human thinking and behavior, and enhances the biomimetic capability and usefulness of simulated neural networks.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

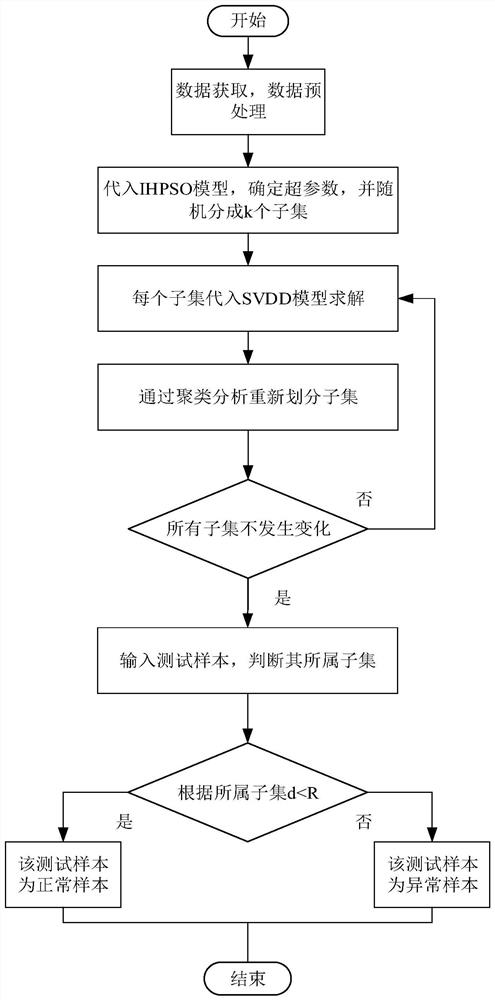

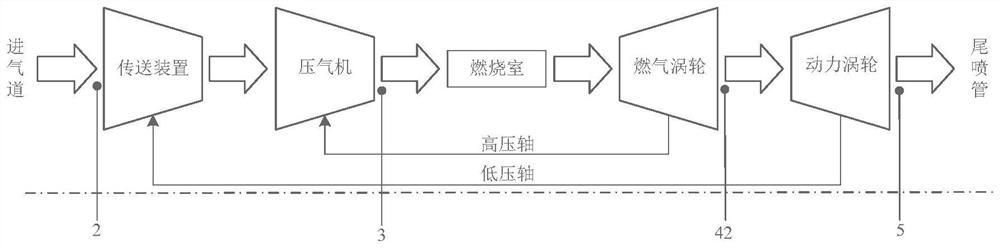

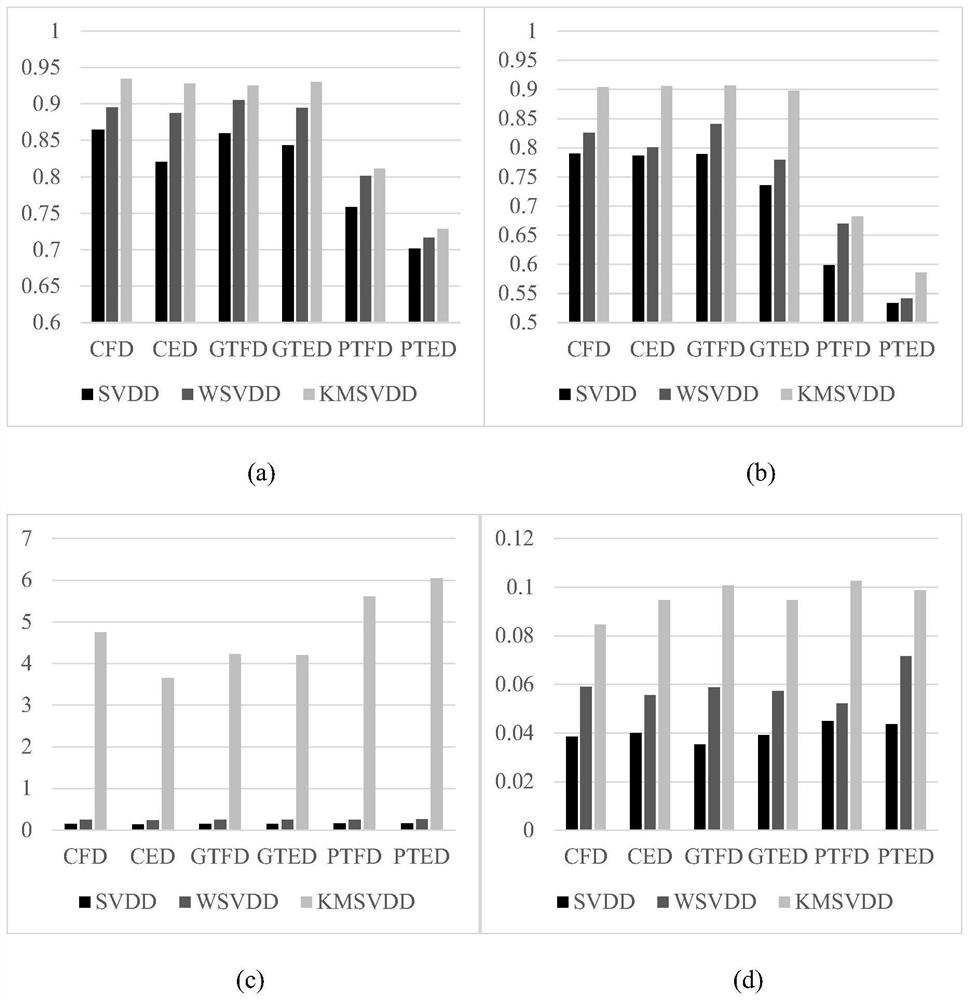

Aero-engine fault detection method based on IHPSO-KMSVDD

Pending | CN113361558A | Easy to understand | Easy to adjust | Character and pattern recognition | Artificial life | Data description | Anomaly detection

The invention provides an aero-engine fault detection method based on IHPSO-KMSVDD. A multi-sphere support vector data description algorithm is proposed, sample data in different states are surrounded by different hyper-spheres for anomaly detection, the situation that a certain state is completely mistakenly recognized is greatly avoided, and the precision and robustness of an original algorithm are improved. Besides, aiming at the defect that the algorithm hyper-parameter training time is too long, the invention provides an improved particle swarm optimization algorithm for simulating human learning behaviors to optimize hyper-parameters, so that the training time can be effectively shortened. The algorithm is suitable for small and medium-scale classification problems, and has good performance in the aspect of aero-engine fault detection. When the aero-engine breaks down due to abrasion, corrosion, blockage and the like, health parameters of corresponding parts can be changed, and when normal data are doped with fault data of different degrees, the fault data can be continuously recognized with excellent performance under the condition that the fault data are mixed, and the working efficiency is effectively improved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
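
The multi-sphere idea in the CN113361558A abstract, where each operating state is enclosed by its own hypersphere and anything outside every sphere is flagged, can be sketched as follows. This is a simplification under stated assumptions: the centers and radii are set by hand rather than learned, plain Euclidean distance replaces the kernel distance an SVDD would use, and the state names and the `classify` function are hypothetical.

```python
import math

def classify(sample, spheres):
    """Return the state whose hypersphere contains the sample.

    spheres maps a state name to (center, radius). If several spheres
    contain the sample, the one where it lies deepest inside wins.
    A sample outside every sphere is reported as "anomaly".
    """
    best_state, best_margin = "anomaly", 0.0
    for state, (center, radius) in spheres.items():
        margin = radius - math.dist(sample, center)  # >= 0 means inside
        if margin >= 0 and (best_state == "anomaly" or margin > best_margin):
            best_state, best_margin = state, margin
    return best_state

# Hand-set spheres for two hypothetical engine states (assumed values).
spheres = {
    "healthy": ((0.0, 0.0), 1.0),
    "worn compressor": ((3.0, 0.0), 1.0),
}

print(classify((0.2, 0.1), spheres))
print(classify((3.1, 0.2), spheres))
print(classify((10.0, 10.0), spheres))
```

Using one sphere per state, rather than a single sphere for all normal data, is what lets a sample be attributed to a specific state instead of only being labeled normal or abnormal.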

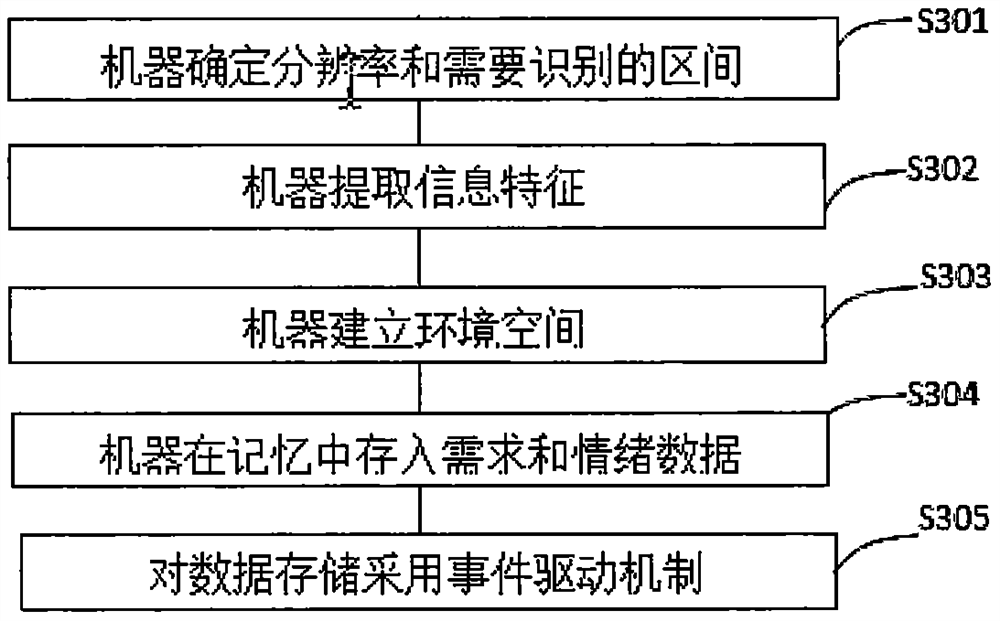

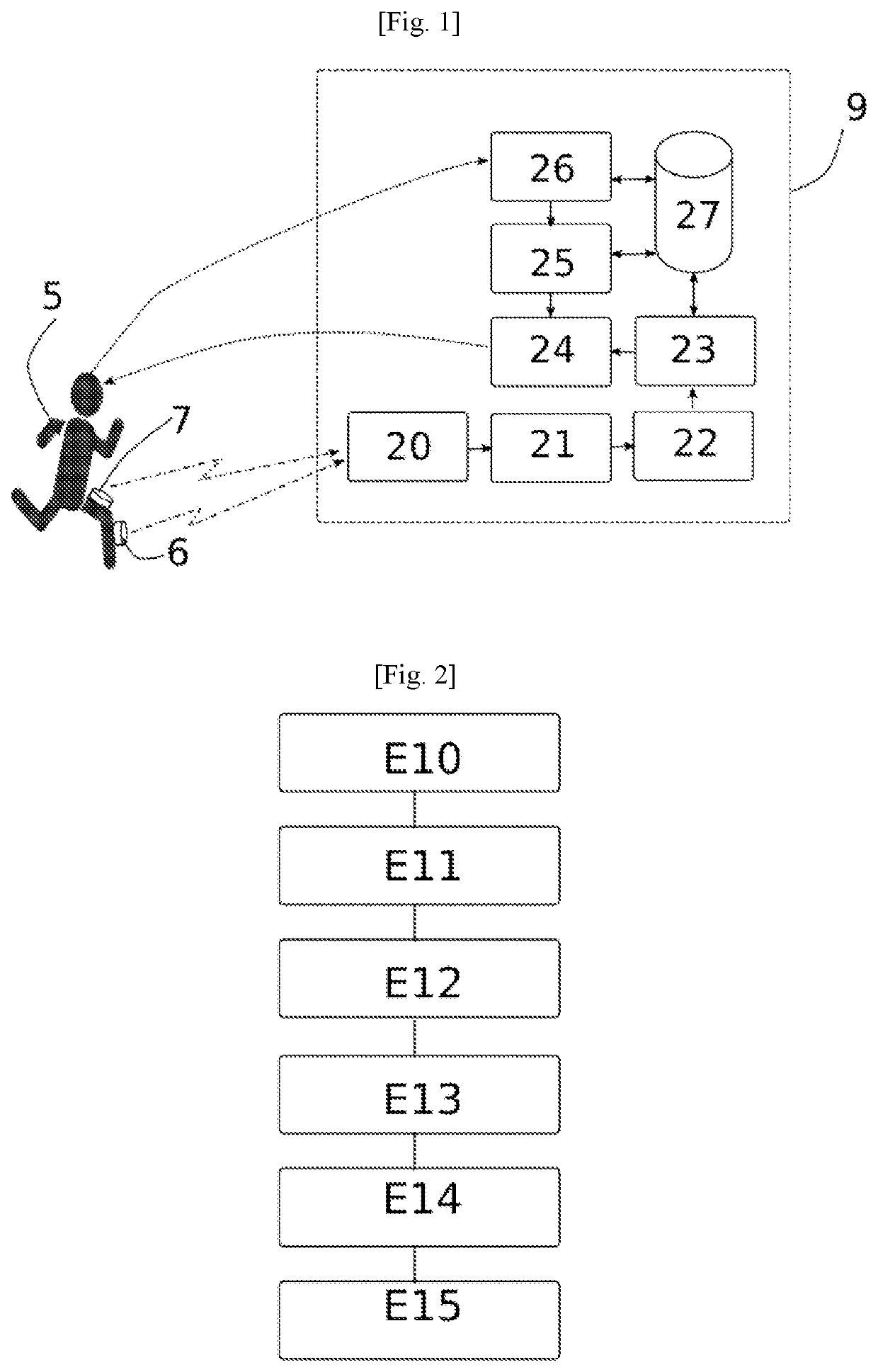

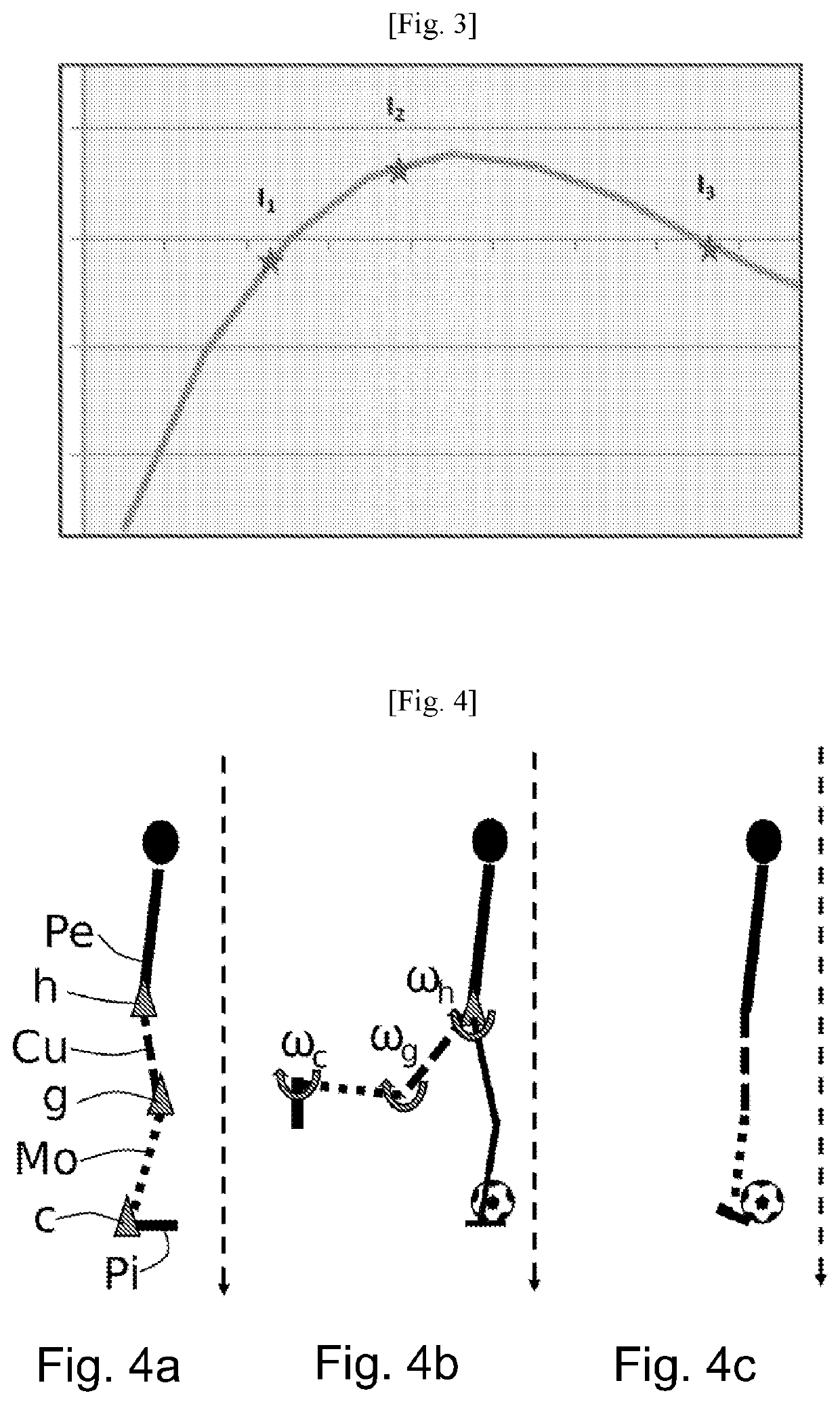

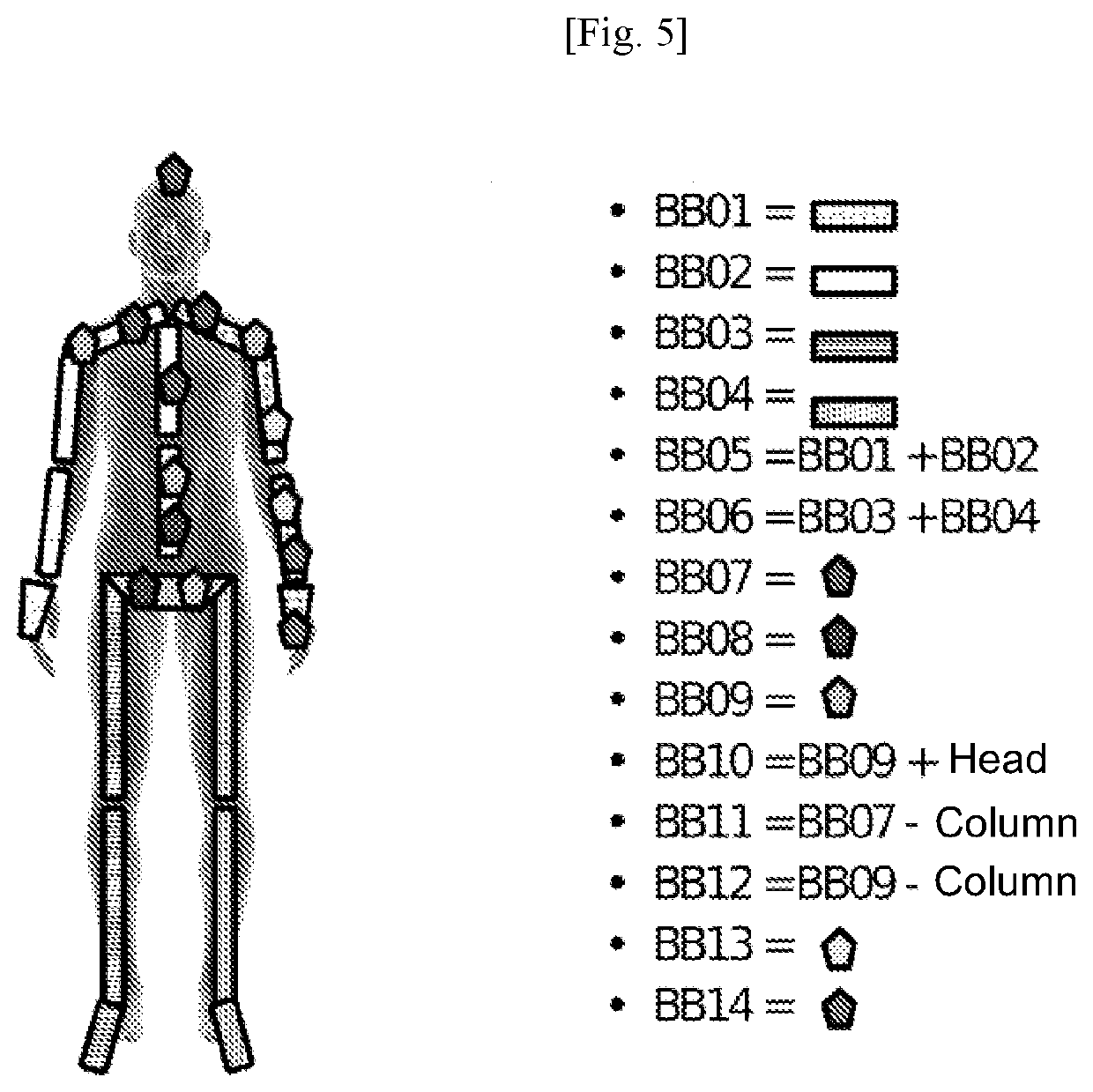

System and method for learning or re-learning a gesture

Pending | US20220365605A1 | Input/output for user-computer interaction | Diagnostic recording/measuring | Biomechanics | Physical therapy

The invention relates to a system and a method for learning a gesture by a human learner (5), comprising the following steps: equipping said learner (5) with a plurality of motion sensors (6, 7) on a plurality of members that are predetermined in accordance with said gesture to be learned; acquiring biomechanical data provided by said plurality of sensors during a gesture performed by the learner; analyzing said acquired biomechanical data and determining a theoretical correction of the gesture by comparing said biomechanical data of the learner with biomechanical data corresponding to a target gesture; customizing the theoretical correction into a specific correction on the basis of behavior models of the learner derived from a history of biomechanical data acquired for the learner; transmitting said specific correction to the learner; updating said specific correction on the basis of information representing the sensation perceived by the learner when performing the corrected gesture.

Owner:VECTOR LABS
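The correction loop described in the abstract above (theoretical correction, learner-specific customization, update from perceived sensation) can be sketched as follows. Everything here is a hypothetical illustration: the abstract does not say how behavior models are built, so this sketch assumes a per-joint response gain estimated by least squares from past attempts, and a sensation score in [0, 1] that scales the correction down. The function names and the clipping bounds are assumptions.

```python
import numpy as np

def theoretical_correction(measured, target):
    """Per-joint difference between the target gesture and the measured gesture."""
    return np.asarray(target, dtype=float) - np.asarray(measured, dtype=float)

def specific_correction(theoretical, history):
    """Scale the theoretical correction by how this learner responded in the past.

    history: list of (requested_correction, achieved_change) array pairs.
    Assumed behavior model: achieved ~= gain * requested, fitted per joint.
    """
    if not history:
        return theoretical
    requested = np.array([h[0] for h in history], dtype=float)
    achieved = np.array([h[1] for h in history], dtype=float)
    denom = (requested ** 2).sum(axis=0)
    safe = np.where(denom > 0, denom, 1.0)
    gain = np.where(denom > 0, (requested * achieved).sum(axis=0) / safe, 1.0)
    gain = np.clip(gain, 0.25, 4.0)  # hypothetical bounds to avoid extreme cues
    return theoretical / gain

def update_with_sensation(correction, discomfort):
    """Shrink the correction when the learner reports discomfort (0 = none, 1 = maximal)."""
    return correction * (1.0 - float(np.clip(discomfort, 0.0, 1.0)))
```

A learner who historically achieves only half of a requested change at one joint receives a doubled cue there, and the cue is then attenuated if the corrected gesture feels uncomfortable.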

A Construction Method of Multi-task Classification Network Based on Orthogonal Loss Function

Active | CN110929624B | Meets requirements | Deep Feature Aggregation | Character and pattern recognition | Neural architectures | Hidden layer | Feature extraction

The invention provides a method for constructing a multi-task classification network based on an orthogonal loss function. The constructed network simulates the human learning process: a deep convolutional neural network serves as the hidden layer, simulating the human brain for deep feature extraction, and a tree classifier serves as the task-dependent output layer for progressive classification, decomposing the recognition process into distinct learning tasks. The invention makes the features obtained by different tasks better suited to their respective needs. Deep features of the same coarse class become more tightly aggregated when the classifier performs the coarse classification task, while deep features of different fine classes become more dispersed when it performs the fine classification task. The output-layer features of different classification tasks are thereby distinguished, so that classifiers at different levels better match the features of their tasks and useless features are removed, improving classification accuracy.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
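The abstract above does not give the formula for the orthogonal loss. As a hedged illustration, one common way to push the features of two tasks apart is to penalize the squared cosine similarity between them, which is zero when the feature vectors are orthogonal. The function name `orthogonal_loss` and the choice of squared cosine are assumptions, not the patent's definition.

```python
import numpy as np

def orthogonal_loss(feat_a, feat_b, eps=1e-12):
    """Mean squared cosine similarity between two tasks' features.

    feat_a, feat_b: arrays of shape (n_samples, n_features), e.g. the
    coarse-task and fine-task output-layer features for the same inputs.
    Returns 0.0 for orthogonal features and approaches 1.0 for parallel ones.
    """
    a = np.asarray(feat_a, dtype=float)
    b = np.asarray(feat_b, dtype=float)
    a = a / (np.linalg.norm(a, axis=1, keepdims=True) + eps)
    b = b / (np.linalg.norm(b, axis=1, keepdims=True) + eps)
    cos = np.sum(a * b, axis=1)
    return float(np.mean(cos ** 2))
```

In training, a term like this would be added to the per-task classification losses with a weighting coefficient, so that the coarse and fine branches are encouraged to rely on different feature directions.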

Few-shot Image Sentiment Classification Method Based on Meta-learning

Active | CN112613556B | Good initialization parameters | Improve classification effect | Character and pattern recognition | Neural architectures | Sample graph | Data set

The invention discloses a meta-learning-based sentiment classification method for few-shot images. First, a plurality of meta-learning tasks are constructed on the source data set, each resembling the few-shot images with sentiment labels in the target data set. The model then learns from these tasks to obtain good initialization parameters for the classification model, so that it achieves better classification results on sentiment images in the few-shot target data set. The invention greatly reduces the need for labeled data, and the meta-learning approach is also closer to how humans learn (humans learn new tasks on the basis of tasks they have already learned), which can make the neural network model more intelligent.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
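The first step in the abstract above, building meta-learning tasks on the source data set, is commonly done by sampling N-way K-shot episodes. The sketch below shows that sampling step only, under the assumption that each task has a support set and a query set; the patent's actual task construction and its meta-learning update are not specified in the abstract. `sample_episode` and its parameters are hypothetical names.

```python
import random
from collections import defaultdict

def sample_episode(labels, n_way, k_shot, q_query, rng=None):
    """Sample one N-way K-shot task from a labeled source data set.

    labels: list of class labels, one per source image (index = image id).
    Returns (support, query), each a list of (image_index, label) pairs.
    """
    rng = rng or random.Random()
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)
    # Only classes with enough images for both support and query are usable.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + q_query]
    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for c in classes:
        picked = rng.sample(by_class[c], k_shot + q_query)
        support += [(i, c) for i in picked[:k_shot]]
        query += [(i, c) for i in picked[k_shot:]]
    return support, query
```

Repeating this sampling many times yields the set of tasks on which an initialization is meta-trained; the target data set is then handled as one more task of the same shape.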

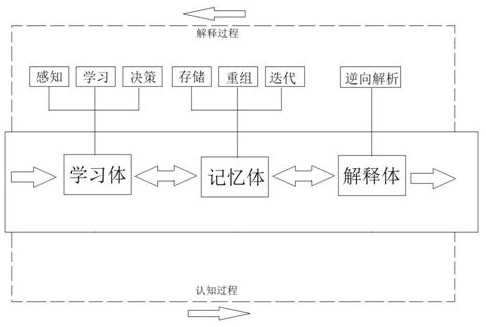

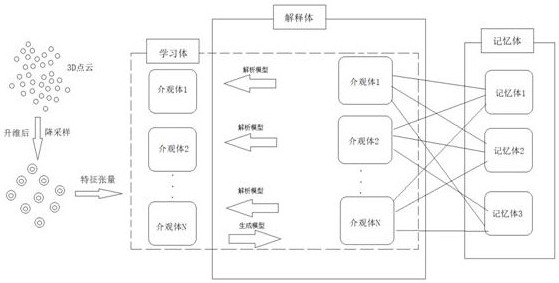

Neural network three-body model based on mesoscopic system

Pending | CN113591557A | Character and pattern recognition | Neural architectures | Tissue architecture | Original data

The invention provides a neural network three-body model based on mesoscopic systems. The model comprises a learning body, which mainly builds a high-level mesoscopic system from the original data; a memory body, which mainly stores and recombines neuron attribute information from the different mesoscopic systems; and an interpretation body, which mainly acts as the reverse-propagation process of the learning body so as to realize the corresponding interpretation function from the high-level mesoscopic system to the low-level mesoscopic system. By constructing this three-body model, important nodes and intrinsic information in the deep learning process are stored and a memory organization structure is formed. This provides a basis for the interpretability of deep learning and a new direction for developing neural networks that better conform to the human mode of learning and cognition.

Owner:CHANGCHUN UNIV OF SCI & TECH


© 2025 PatSnap. All rights reserved.