Question semantic matching method based on an optimized BERT

A semantic matching technique, applicable to semantic analysis, neural network learning methods, natural language data processing, and similar fields. It addresses the problem that low-quality sentence vectors poorly reflect semantic similarity, and it produces results quickly.

Embodiment Construction

[0029] Step 1: Data collection and data preprocessing;

[0030] Collect real medical dialogue records from the Internet and store them on the local machine as plain natural-language text.

[0031] Step 2: Split the data into a training set and a validation set;
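The split into training and validation sets could be sketched as follows. This is a minimal illustration only: the function name `split_dataset`, the 10% validation ratio, and the fixed seed are assumptions, not values stated in the source.

```python
import random


def split_dataset(records, val_ratio=0.1, seed=42):
    """Shuffle records and split them into (training, validation) lists.

    val_ratio and seed are illustrative defaults, not values from the source.
    """
    if not 0.0 < val_ratio < 1.0:
        raise ValueError("val_ratio must be strictly between 0 and 1")
    shuffled = list(records)
    # A seeded generator keeps the split reproducible across runs.
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_ratio))
    return shuffled[n_val:], shuffled[:n_val]


# Placeholder records standing in for the collected dialogue texts.
train_set, val_set = split_dataset([f"dialogue {i}" for i in range(100)])
```

Shuffling before the cut avoids a validation set made up only of the most recently collected records.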

[0032] Remove non-compliant characters and redundant punctuation marks from the corpus, unify the half-width and full-width forms of punctuation marks, and use regular expressions to remove non-text content. After this preprocessing, perform word segmentation and build word-segmentation reference files for unsupervised whole-word-masking training;
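The cleaning steps above might look like the following sketch. The character whitelist, the punctuation set, and the name `clean_text` are assumptions chosen for illustration; the source does not specify the actual regular expressions. Word segmentation is left out because the source does not name the segmenter.

```python
import re
import unicodedata

# Hypothetical whitelist: CJK ideographs, ASCII letters and digits,
# a small set of half-width punctuation marks, and the space character.
_DISALLOWED = re.compile(r"[^\u4e00-\u9fffA-Za-z0-9,.?!:;()%\- ]")
_REPEATED_PUNCT = re.compile(r"([,.?!:;])\1+")
_WHITESPACE = re.compile(r"\s+")


def clean_text(text):
    """Normalize punctuation width and strip non-text characters."""
    # NFKC folds full-width letters, digits and most punctuation to half-width.
    text = unicodedata.normalize("NFKC", text)
    # The ideographic full stop and comma have no NFKC mapping; map them by hand.
    text = text.replace("。", ".").replace("、", ",")
    text = _WHITESPACE.sub(" ", text)          # collapse tabs, newlines, runs of spaces
    text = _DISALLOWED.sub("", text)           # drop symbols, emoji, markup debris
    text = _REPEATED_PUNCT.sub(r"\1", text)    # "???" becomes "?"
    return text.strip()
```

Whether punctuation should be unified toward half-width (as here) or toward full-width is a design choice the source leaves open.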

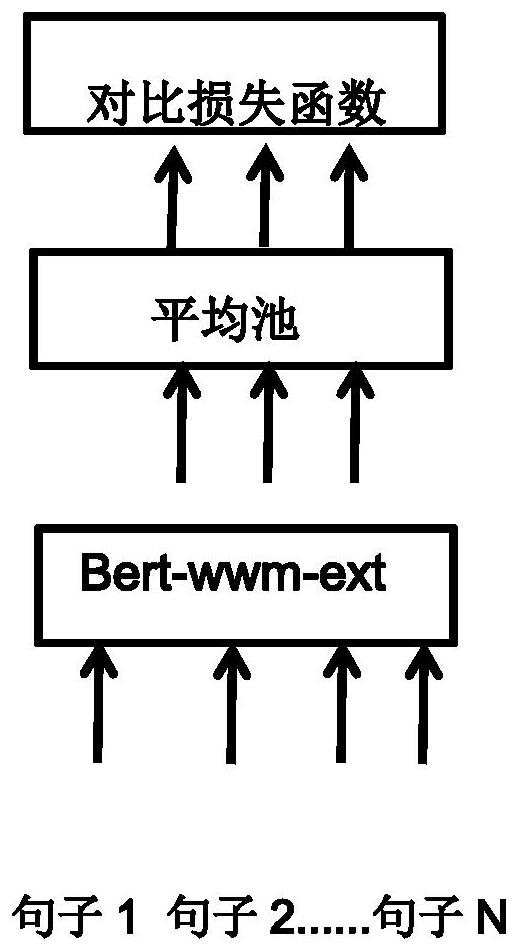

[0033] Step 3: Starting from the pre-trained model Bert-wwm-ext, perform unsupervised whole-word-masking training;
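To illustrate the idea of whole-word masking, the sketch below masks all characters of a selected word together, using a pre-segmented sentence as the reference. It is a simplified illustration under stated assumptions: the function name `whole_word_mask`, the 15% masking probability, and the `[MASK]` token are assumptions, and the random-replacement and keep-original variants used in standard BERT pre-training are omitted.

```python
import random


def whole_word_mask(words, mask_prob=0.15, seed=0, mask_token="[MASK]"):
    """Mask every character of each selected word.

    words: a pre-segmented sentence, e.g. ["感冒", "吃", "什么", "药"].
    Returns (tokens, labels) at character level; labels hold the original
    character at masked positions and None elsewhere.
    """
    rng = random.Random(seed)
    tokens, labels = [], []
    for word in words:
        # One draw per word, so a word is masked entirely or not at all.
        masked = rng.random() < mask_prob
        for ch in word:
            tokens.append(mask_token if masked else ch)
            labels.append(ch if masked else None)
    return tokens, labels
```

Masking at word granularity forces the model to predict a whole word from context rather than completing it from its remaining characters.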

[0034] Use the following script to train the model without supervision, continuing pre-training on the processed data set so that the model learns the characteristics of that data:

[0035] export TRAIN_FILE=/path/to/dataset/wiki.train.raw

[0036...
