Robot 3D shape recognition method based on multi-view information fusion

A three-dimensional shape recognition method, applied in the field of multi-view visual information, that addresses problems such as insufficient information, the inability of robots to obtain full-view visual information of three-dimensional shapes, and the resulting large limitations, and that achieves high recognition accuracy.

Embodiment Construction

[0035] Embodiments of the present invention are described in detail below, and the embodiments are exemplary and intended to explain the present invention, but should not be construed as limiting the present invention.

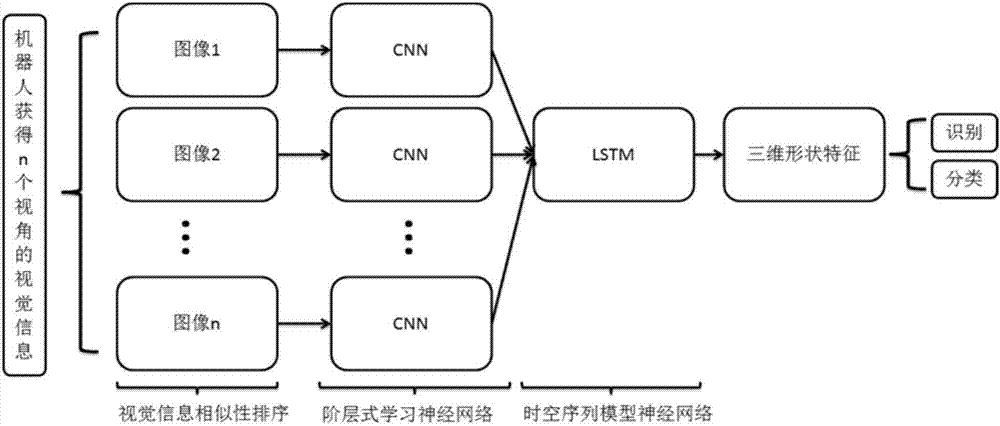

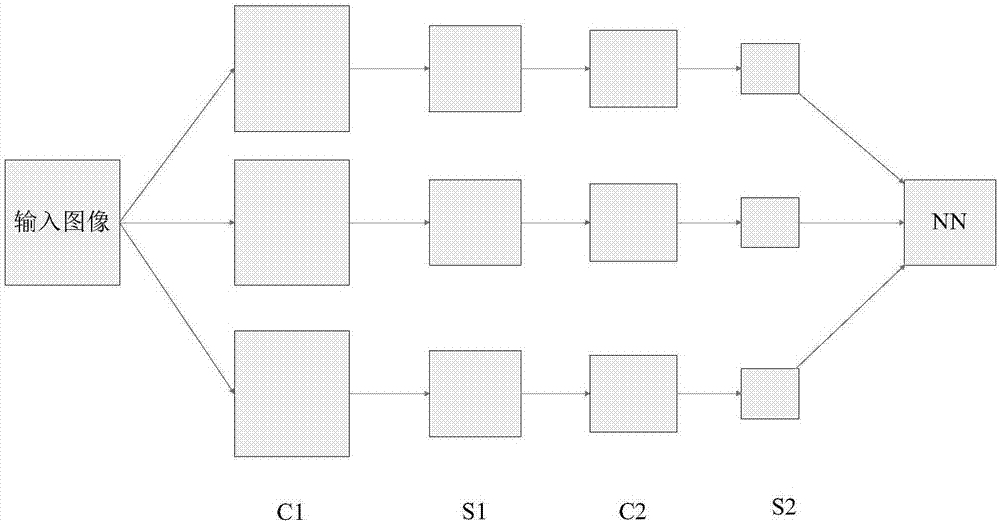

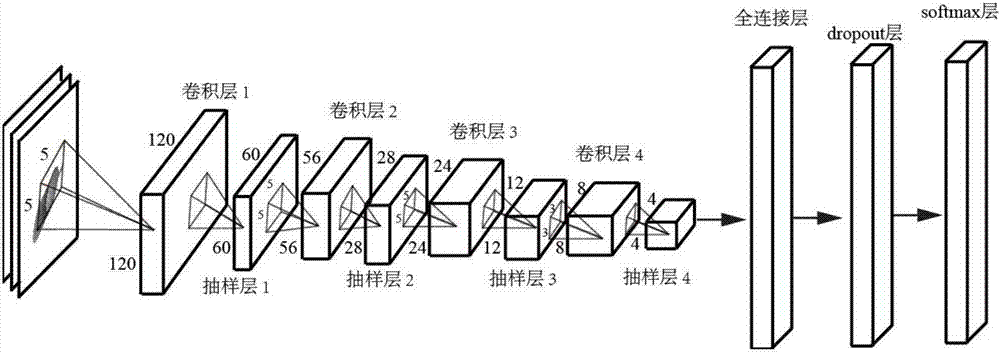

[0036] Figure 1 shows the general flow of three-dimensional shape recognition by a robot as realized by the present invention. The purpose of the invention is to enable the robot to recognize three-dimensional shapes quickly and efficiently while in motion. The figure contains the visual maps of the three-dimensional shape from different perspectives, obtained by the robot during its movement. In the recognition process, the views are first sorted by visual information similarity to obtain an ordered set of visual information structures; the obtained visual maps are then passed through a convolutional neural network to learn hierarchical deep features, which are then fed into a long short-term memory model to obtain deep features of time-space sequen...
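The first step of the flow described above, ordering the views by visual similarity, can be illustrated with a minimal sketch. The text available here does not state which similarity measure or ordering rule the invention uses, so the cosine similarity, the greedy nearest-neighbour chaining, and the names `cosine_similarity` and `order_views_by_similarity` are all assumptions made for illustration. Each view is represented by a hypothetical descriptor vector; in the described method the ordered views would then go to the convolutional neural network and the long short-term memory model, which are not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (assumed measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def order_views_by_similarity(descriptors, start=0):
    """Return view indices as a greedy chain of most-similar neighbours.

    Starting from `start`, repeatedly append the unvisited view whose
    descriptor is most similar to the last view in the chain. Ties are
    broken in favour of the lowest index. This ordering rule is an
    illustrative assumption, not the patent's stated procedure.
    """
    if not descriptors:
        return []
    order = [start]
    remaining = set(range(len(descriptors))) - {start}
    while remaining:
        current = descriptors[order[-1]]
        nxt = max(
            sorted(remaining),
            key=lambda i: cosine_similarity(current, descriptors[i]),
        )
        order.append(nxt)
        remaining.remove(nxt)
    return order


# Hypothetical 2-D descriptors for four views of the same shape.
views = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
print(order_views_by_similarity(views))  # [0, 2, 3, 1]
```

A greedy chain is chosen here only because it yields a sequence in which neighbouring views look alike, which is the kind of ordered input a sequence model expects; other orderings would serve the same illustrative purpose.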

