Long-term target tracking method based on content retrieval

A long-term target tracking technique, applied in neural learning methods, image data processing, instruments, etc. It addresses problems such as target deformation, occlusion, and the target leaving the field of view, and improves the robustness and efficiency of tracking.


Embodiment 1

[0039] The single-target tracking method combined with historical trajectory information proposed by the present invention is further described below with reference to the accompanying drawings and specific embodiments. The advantages and features of the present invention will become apparent from the following description and the claims.

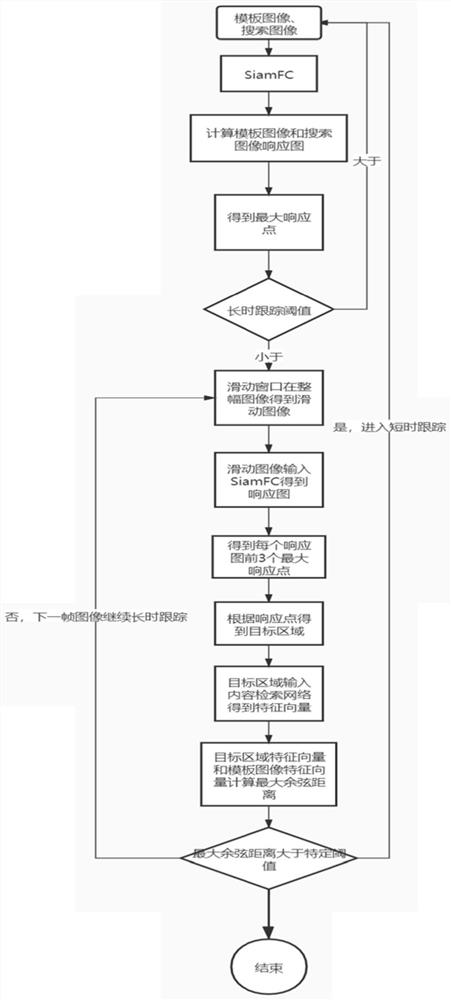

[0040] The present invention provides a long-term target tracking method based on content retrieval. The method performs the following steps on the search image of each frame:

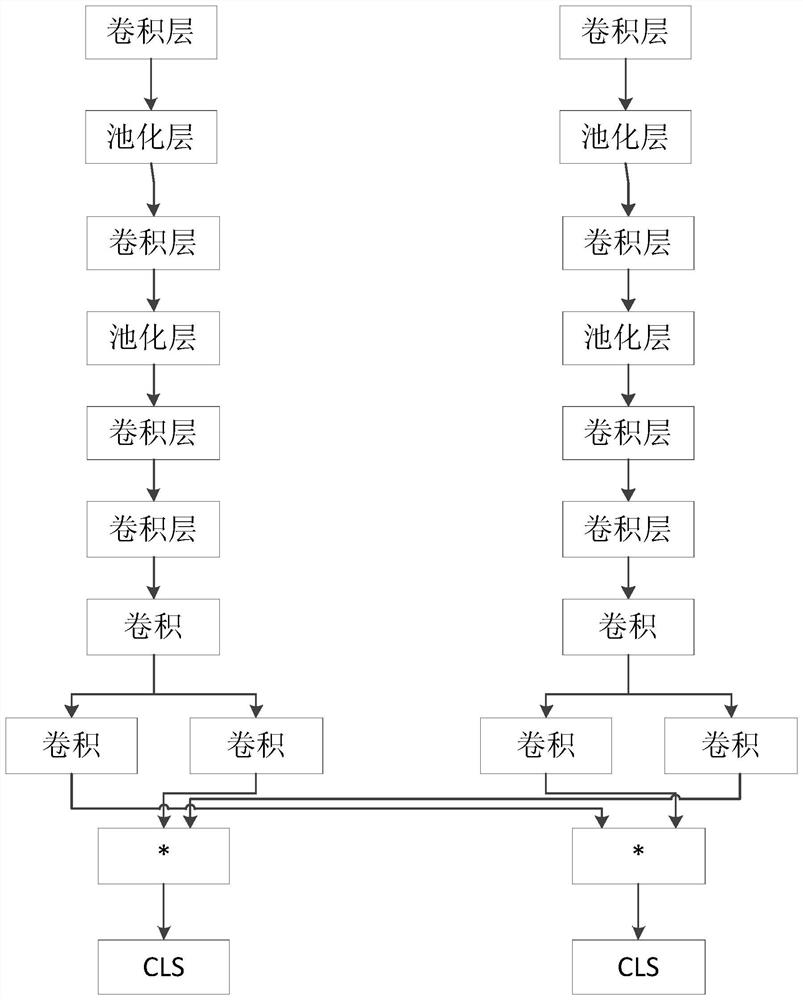

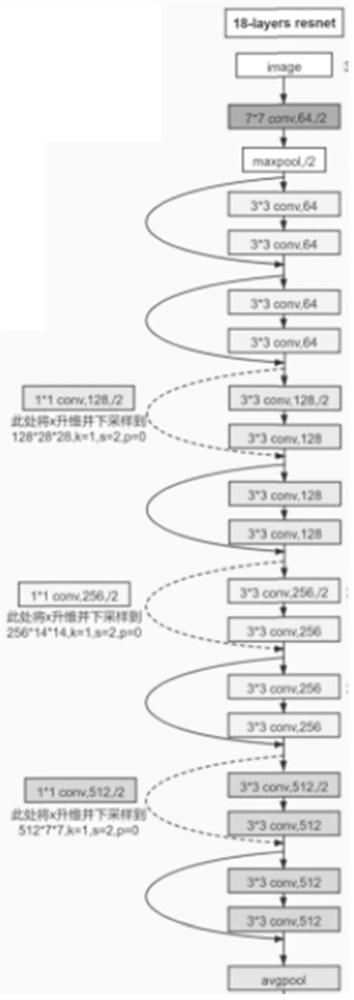

[0041] S1. Use an offline target tracking network to perform target tracking and obtain a classification feature map, and record the target content of the initial frame as the target template during tracking;

[0042] The specific steps for obtaining the classification feature map with the offline target tracking network are as follows:

[0043] S1.1. Obtain the template image and the current frame search image; the template image is manual...
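As a rough illustration of how a template image and a search image can yield a classification (response) map, the sketch below slides a template over a search grid and scores each position by cross-correlation. The source does not state the architecture of the offline tracking network, so cross-correlation is an assumption here, and the names `cross_correlate` and `argmax_2d` are hypothetical. In a real tracker the grids would be learned feature maps rather than raw values.

```python
from typing import List, Tuple

Grid = List[List[float]]


def cross_correlate(search: Grid, template: Grid) -> Grid:
    """Score every valid placement of `template` inside `search`.

    Assumption: the response map is a plain sliding-window dot product.
    The patent text does not confirm this is how its network works.
    """
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    response: Grid = []
    for i in range(sh - th + 1):
        row = []
        for j in range(sw - tw + 1):
            # Dot product between the template and the window at (i, j).
            score = sum(
                search[i + di][j + dj] * template[di][dj]
                for di in range(th)
                for dj in range(tw)
            )
            row.append(score)
        response.append(row)
    return response


def argmax_2d(grid: Grid) -> Tuple[int, int]:
    """Return the (row, col) of the highest score in the response map."""
    _, i, j = max(
        (value, i, j)
        for i, row in enumerate(grid)
        for j, value in enumerate(row)
    )
    return i, j


# Toy data: the "target" is a 2x2 block of ones inside a 4x4 search grid.
template = [[1.0, 1.0], [1.0, 1.0]]
search = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 0.0],
]
response = cross_correlate(search, template)
peak = argmax_2d(response)
```

The peak of the response map marks the window that best matches the template, which is the role a classification feature map plays when locating the target in the current frame.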

Embodiment

[0067] A specific embodiment of the present invention provides the training process of the above-mentioned neural network and the application process of the long-term target tracking method based on content retrieval provided by the present invention.

[0068] (1) Data set acquisition and preprocessing

[0069] Select the training data set, and apply size normalization and data augmentation to the images input to the network.

[0070] In this implementation, ILSVRC2015, a data set commonly used in the field of single-target tracking, and 800 independently shot and annotated videos are used as training data. The size normalization and data augmentation methods are as follows:

[0071] From the first frame of the template image, take the ground-truth target box $(x_{\min}, y_{\min}, w, h)$, where $x_{\min}$ and $y_{\min}$ are the coordinates of the upper-left corner of the ground-truth box, and $w$ and $h$ represent the width and hei...
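The box layout described in [0071] can be made concrete with a small helper. This is a minimal sketch, assuming only the (x_min, y_min, w, h) layout stated above. The `Box` type and the `to_corners` and `to_center` functions are hypothetical names introduced for illustration, and the source does not say which of these conversions the method actually uses.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Ground-truth box in the (x_min, y_min, w, h) layout from [0071]."""
    x_min: float  # x coordinate of the upper-left corner
    y_min: float  # y coordinate of the upper-left corner
    w: float      # box width
    h: float      # box height


def to_corners(box: Box) -> Tuple[float, float, float, float]:
    """Convert to (x_min, y_min, x_max, y_max)."""
    return (box.x_min, box.y_min, box.x_min + box.w, box.y_min + box.h)


def to_center(box: Box) -> Tuple[float, float, float, float]:
    """Convert to (center_x, center_y, w, h)."""
    return (box.x_min + box.w / 2.0, box.y_min + box.h / 2.0, box.w, box.h)


example = Box(x_min=10, y_min=20, w=30, h=40)
corners = to_corners(example)
center = to_center(example)
```

Cropping and resizing steps are often written in terms of the box center, so a conversion like `to_center` is a common first step before size normalization.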

