Multi-sensor-fusion-based unstructured environment understanding method

A technology for multi-sensor fusion in unstructured environments, applied in the field of unstructured environment understanding based on multi-sensor fusion. It addresses problems such as large changes in road width, uneven road surfaces, and their impact on vehicle speed, and achieves stable fusion results.



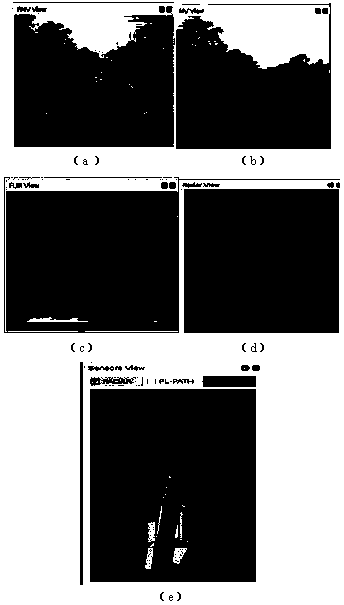


Embodiment Construction

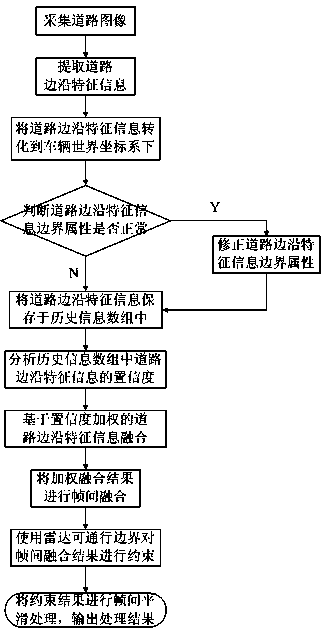

[0027] With reference to Figure 1, a method for understanding unstructured environments based on multi-sensor fusion includes the following steps:

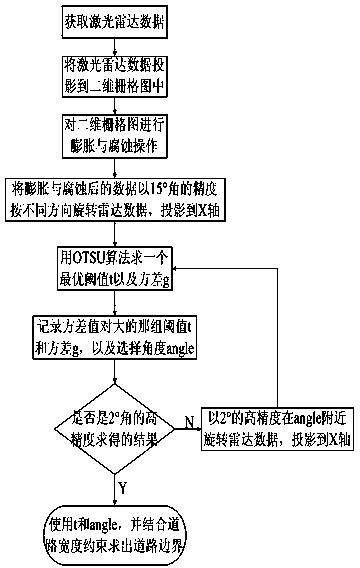

[0028] Step 1. Install two or more visual sensors on the roof of the vehicle to obtain visual image information of the road ahead. Install a three-dimensional lidar sensor on the roof, and install two single-line laser radars on the front of the vehicle to obtain lidar information around the vehicle body. Because the 3D lidar is mounted on the roof, it leaves a blind area close around the vehicle, especially directly in front of it; the two single-line radars at the front eliminate this blind area and provide obstacle information around the vehicle body.
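The blind area described in Step 1 follows from simple mounting geometry. The following is a minimal Python sketch, not taken from the patent: it assumes the roof lidar's steepest beam points a fixed angle below horizontal, ignores occlusion by the vehicle body, and treats each front single-line radar as a planar scanner with a limited field of view and range. The class names, mounting height, beam angle, mounting positions, scan height, and ranges are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RoofLidar:
    """3D lidar on the roof, placed at the vehicle-frame origin (x forward, y left)."""
    height_m: float         # hypothetical mounting height above the ground
    lowest_beam_deg: float  # hypothetical steepest downward beam angle below horizontal

    def ground_blind_radius_m(self) -> float:
        # The steepest beam first reaches the ground at h / tan(theta).
        return self.height_m / math.tan(math.radians(self.lowest_beam_deg))

    def sees(self, x: float, y: float, obstacle_height_m: float) -> bool:
        # At horizontal distance d the steepest beam is h - d*tan(theta) above ground;
        # an obstacle is hit only if its top rises above that height.
        d = math.hypot(x, y)
        beam_height = self.height_m - d * math.tan(math.radians(self.lowest_beam_deg))
        return obstacle_height_m > beam_height


@dataclass
class SingleLineLidar:
    """Hypothetical planar scanner on the vehicle front, scanning one horizontal plane."""
    x_m: float
    y_m: float
    yaw_deg: float        # boresight direction, 0 = straight ahead
    fov_deg: float
    max_range_m: float
    scan_height_m: float  # height of the scan plane above the ground

    def sees(self, x: float, y: float, obstacle_height_m: float) -> bool:
        dx, dy = x - self.x_m, y - self.y_m
        if math.hypot(dx, dy) > self.max_range_m or obstacle_height_m < self.scan_height_m:
            return False
        # Bearing relative to boresight, wrapped to [-180, 180).
        rel = math.degrees(math.atan2(dy, dx)) - self.yaw_deg
        rel = (rel + 180.0) % 360.0 - 180.0
        return abs(rel) <= self.fov_deg / 2.0


def detected(x: float, y: float, obstacle_height_m: float,
             roof: RoofLidar, front_lidars: Sequence[SingleLineLidar]) -> bool:
    """True if the roof lidar or any front single-line lidar sees the obstacle."""
    return roof.sees(x, y, obstacle_height_m) or any(
        s.sees(x, y, obstacle_height_m) for s in front_lidars
    )


# Hypothetical numbers, chosen only for illustration.
roof = RoofLidar(height_m=2.0, lowest_beam_deg=15.0)
fronts = [
    SingleLineLidar(2.0, 0.7, 45.0, 180.0, 20.0, 0.3),
    SingleLineLidar(2.0, -0.7, -45.0, 180.0, 20.0, 0.3),
]
print(round(roof.ground_blind_radius_m(), 2))  # 7.46
print(roof.sees(3.0, 0.0, 0.5), detected(3.0, 0.0, 0.5, roof, fronts))  # False True
```

With these illustrative numbers, a 0.5 m obstacle 3 m ahead lies below the roof lidar's steepest beam but is picked up by the front scanners, while the same obstacle 3 m behind the vehicle is seen by neither.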

[0029] Step 2. Extract the road edge feature information Rs[i] (i represents the sensor serial number) from the road images obtained by each visual s...
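Step 2 is cut off here, so the way the per-sensor features Rs[i] are combined is not shown. Purely as an illustration of combining several per-sensor road-edge estimates, and not as the patent's method, the sketch below uses inverse-variance weighting. The `EdgeEstimate` structure, the reduction of Rs[i] to two lateral edge offsets, and the per-sensor variances are all assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence, Tuple


@dataclass
class EdgeEstimate:
    """Hypothetical reduction of one sensor's road-edge feature Rs[i]."""
    sensor_id: int
    left_offset_m: Optional[float]   # lateral offset of left edge (y > 0 is left); None if missed
    right_offset_m: Optional[float]  # lateral offset of right edge; None if missed
    variance_m2: float               # assumed measurement variance, must be > 0


def _inverse_variance_mean(
    samples: Iterable[Tuple[Optional[float], float]],
) -> Optional[float]:
    # Skip sensors that did not detect this edge.
    valid = [(value, var) for value, var in samples if value is not None]
    if not valid:
        return None
    weights = [1.0 / var for _, var in valid]
    return sum(w * value for w, (value, _) in zip(weights, valid)) / sum(weights)


def fuse_edges(
    estimates: Sequence[EdgeEstimate],
) -> Tuple[Optional[float], Optional[float]]:
    """Fuse left and right edge offsets independently across sensors."""
    left = _inverse_variance_mean((e.left_offset_m, e.variance_m2) for e in estimates)
    right = _inverse_variance_mean((e.right_offset_m, e.variance_m2) for e in estimates)
    return left, right


# Two hypothetical cameras; camera 1 misses the right edge.
left, right = fuse_edges([
    EdgeEstimate(0, 3.0, -2.0, 0.04),
    EdgeEstimate(1, 3.2, None, 0.16),
])
print(round(left, 2), right)  # 3.04 -2.0
```

Dropping a sensor that misses an edge, rather than treating the miss as a zero offset, keeps one camera's dropout from pulling the fused edge toward the vehicle; whether the patent handles dropouts this way is not stated in the visible text.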
