Method and system for automatic detection and extraction of abnormal emotion based on short-time analysis

This automatic detection and extraction technology, applied in speech analysis, speech recognition, and related instruments, addresses three problems: short-term changes in speech emotion are weakened, feature discrimination is reduced, and speech segments are short. Its stated effects are improved automatic processing efficiency, improved discrimination, and improved robustness.


Examples

Embodiment 1

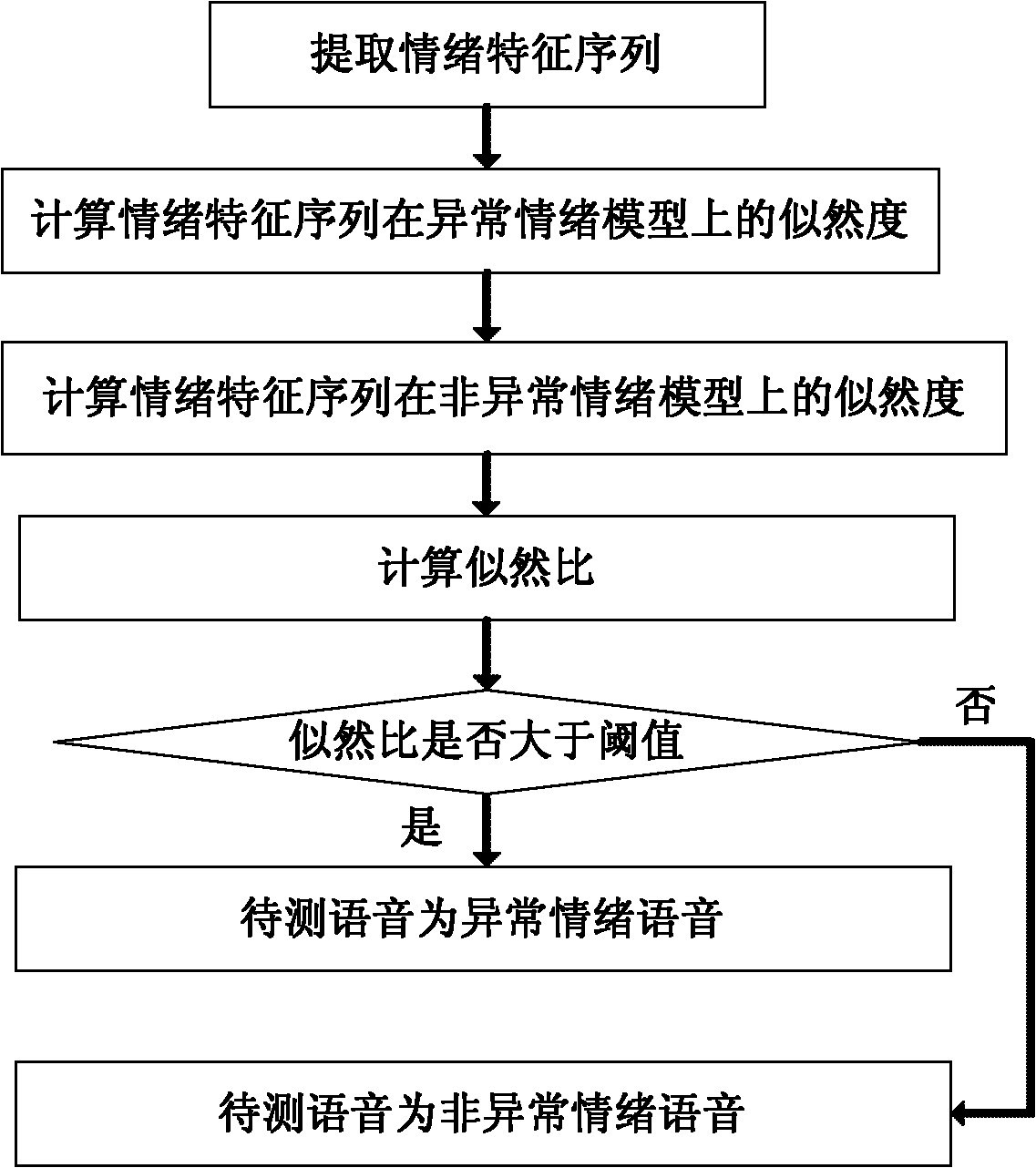

[0068] As shown in Figure 1, the method for automatic detection and extraction of abnormal emotion in this embodiment comprises the following steps:

[0069] (1) Extract the emotional feature sequence from the speech signal under test;

[0070] (2) Calculate the likelihood of the emotional feature sequence under the abnormal emotion model among the preset emotion models, and the likelihood of the emotional feature sequence under the non-abnormal emotion model among the preset emotion models;

[0071] (3) Calculate the likelihood ratio from the likelihood under the abnormal emotion model and the likelihood under the non-abnormal emotion model;

[0072] (4) Judge whether the likelihood ratio is greater than a set threshold; if so, determine that the speech signal under test is abnormal emotional speech; otherwise, determine that the speech signal under test is a non-abnormal spee...
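The visible text of step (1) does not say which features make up the emotional feature sequence. Given the short-time analysis named in the title, a minimal sketch might split the signal into short overlapping frames and compute one feature vector per frame. The features used here (log energy and zero-crossing rate) and the helper names `frame_signal`, `frame_features`, and `extract_feature_sequence` are hypothetical stand-ins, not the patent's actual feature set.

```python
import math
from typing import List, Sequence


def frame_signal(signal: Sequence[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a 1-D signal into overlapping short-time frames.

    A trailing partial frame is dropped; a signal shorter than one frame yields no frames.
    """
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame_len and hop must be positive")
    return [list(signal[start:start + frame_len])
            for start in range(0, len(signal) - frame_len + 1, hop)]


def frame_features(frame: Sequence[float]) -> List[float]:
    """Hypothetical per-frame features: log energy and zero-crossing rate."""
    energy = sum(x * x for x in frame)
    log_energy = math.log(energy + 1e-10)  # small floor avoids log(0) on silent frames
    # Count sign changes between consecutive samples (zero is treated as non-negative).
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    zcr = crossings / (len(frame) - 1) if len(frame) > 1 else 0.0
    return [log_energy, zcr]


def extract_feature_sequence(signal: Sequence[float],
                             frame_len: int = 400, hop: int = 160) -> List[List[float]]:
    """Step (1) sketch: one feature vector per short-time frame."""
    return [frame_features(f) for f in frame_signal(signal, frame_len, hop)]
```

At a 16 kHz sampling rate, `frame_len=400` and `hop=160` correspond to 25 ms windows advanced by 10 ms; these defaults are illustrative assumptions rather than values from the patent.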

Embodiment 2

[0081] As shown in Figure 1, this embodiment includes the following steps:

[0082] (1) Extract the emotional feature sequence from the speech signal under test;

[0083] (2) Calculate the likelihood of the emotional feature sequence under the abnormal emotion model among the preset emotion models, and the likelihood of the emotional feature sequence under the non-abnormal emotion model among the preset emotion models;

[0084] (3) Calculate the likelihood ratio from the likelihood under the abnormal emotion model and the likelihood under the non-abnormal emotion model;

[0085] (4) Judge whether the likelihood ratio is greater than a set threshold; if so, determine that the speech signal under test is abnormal emotional speech; otherwise, determine that the speech signal under test is a non-abnormal speech signal.
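Steps (2) to (4) amount to a likelihood ratio test. Written out under two assumptions that the visible text does not state (frames are scored independently, and the comparison is carried out in the log domain), with feature sequence $X = (x_1, \dots, x_T)$, abnormal emotion model $\lambda_A$, non-abnormal emotion model $\lambda_N$, and threshold $\theta > 0$:

```latex
\log p(X \mid \lambda_A) = \sum_{t=1}^{T} \log p(x_t \mid \lambda_A), \qquad
\log p(X \mid \lambda_N) = \sum_{t=1}^{T} \log p(x_t \mid \lambda_N)

\Lambda(X) = \frac{p(X \mid \lambda_A)}{p(X \mid \lambda_N)}, \qquad
\log \Lambda(X) = \log p(X \mid \lambda_A) - \log p(X \mid \lambda_N)

\text{decision}(X) =
\begin{cases}
\text{abnormal emotional speech}, & \log \Lambda(X) > \log \theta \\
\text{non-abnormal speech}, & \text{otherwise}
\end{cases}
```

Because the logarithm is strictly increasing, $\Lambda(X) > \theta$ holds exactly when $\log \Lambda(X) > \log \theta$, so the log-domain rule makes the same decision as step (4). Dividing $\log \Lambda(X)$ by $T$ is a common variant that makes scores comparable across utterances of different lengths; whether the patent does this is not visible here.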

[0086] The threshold is preset by the system and tuned on...
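Paragraph [0086] is truncated, so neither the tuning data nor the tuning criterion is known. Purely as an assumption, one common approach is to score a labeled development set and keep the threshold that maximizes accuracy for the rule "abnormal if score > threshold". The function `tune_threshold` below is hypothetical and operates on precomputed (log-)likelihood-ratio scores.

```python
from typing import Sequence, Tuple


def tune_threshold(scores: Sequence[float], labels: Sequence[bool]) -> Tuple[float, float]:
    """Hypothetical tuning: return (threshold, accuracy) that maximizes accuracy
    of the rule 'abnormal if score > threshold' on labeled development scores.

    labels[i] is True when utterance i is abnormal emotional speech.
    """
    if not scores or len(scores) != len(labels):
        raise ValueError("need non-empty scores and labels of equal length")
    ordered = sorted(set(scores))
    # Candidates: below the minimum (all abnormal), midpoints between distinct
    # scores, and the maximum itself (nothing abnormal, since the rule is strict '>').
    candidates = [ordered[0] - 1.0]
    candidates += [(a + b) / 2 for a, b in zip(ordered, ordered[1:])]
    candidates.append(ordered[-1])

    best_thr, best_acc = candidates[0], -1.0
    for thr in candidates:
        correct = sum((s > thr) == y for s, y in zip(scores, labels))
        acc = correct / len(scores)
        if acc > best_acc:  # keep the first (lowest) threshold on ties
            best_thr, best_acc = thr, acc
    return best_thr, best_acc
```

Accuracy is only one possible criterion; a deployed system might instead fix a target false-alarm rate or balance miss and false-alarm errors, which would change only the scoring line inside the loop.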

Embodiment 3

[0101] As shown in Figure 1, this embodiment includes the following steps:

[0102] (1) Extract the emotional feature sequence from the speech signal under test;

[0103] (2) Calculate the likelihood of the emotional feature sequence under the abnormal emotion model among the preset emotion models, and the likelihood of the emotional feature sequence under the non-abnormal emotion model among the preset emotion models;

[0104] (3) Calculate the likelihood ratio from the likelihood under the abnormal emotion model and the likelihood under the non-abnormal emotion model;

[0105] (4) Judge whether the likelihood ratio is greater than a set threshold; if so, determine that the speech signal under test is abnormal emotional speech; otherwise, determine that the speech signal under test is a non-abnormal speech signal.
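The visible text does not name the type of the preset emotion models. Assuming, for illustration only, that each one is a diagonal-covariance Gaussian mixture model (GMM) over frame-level feature vectors, steps (2) to (4) could be sketched as follows. The class `DiagGMM` and the function `is_abnormal` are hypothetical; frames are treated as independent, and the comparison is made in the log domain, so the threshold passed in is the logarithm of the likelihood-ratio threshold.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class DiagGMM:
    """Hypothetical emotion model: a diagonal-covariance Gaussian mixture."""
    weights: List[float]          # mixture weights, assumed positive and summing to 1
    means: List[List[float]]      # one mean vector per component
    variances: List[List[float]]  # one strictly positive variance vector per component

    def frame_log_likelihood(self, x: Sequence[float]) -> float:
        """log p(x | model) for a single feature vector."""
        comps = []
        for w, mu, var in zip(self.weights, self.means, self.variances):
            ll = math.log(w)
            for xi, m, v in zip(x, mu, var):
                ll += -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
            comps.append(ll)
        top = max(comps)  # log-sum-exp over components for numerical stability
        return top + math.log(sum(math.exp(c - top) for c in comps))

    def log_likelihood(self, seq: Sequence[Sequence[float]]) -> float:
        """Step (2): with independent frames, per-frame log-likelihoods add up."""
        return sum(self.frame_log_likelihood(x) for x in seq)


def is_abnormal(seq: Sequence[Sequence[float]], abnormal: DiagGMM,
                normal: DiagGMM, log_threshold: float) -> bool:
    """Step (3): log-likelihood ratio; step (4): compare it with the threshold."""
    llr = abnormal.log_likelihood(seq) - normal.log_likelihood(seq)
    return llr > log_threshold
```

Working with log-likelihoods and log-sum-exp avoids the numerical underflow that would occur if many small per-frame likelihoods were multiplied directly.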

[0106] The threshold is preset by the system and tuned on...
