A high-performance heterogeneous multi-core shared cache buffer management method

This heterogeneous multi-core cache buffer management technology, applied in the field of heterogeneous multi-core shared cache buffer management, addresses several problems: unfair allocation of the shared last-level cache, which affects system performance and power consumption, and an inability to adapt to heterogeneous environments. Its effects are fair competition for the shared cache, improved cache utilization, and an improved memory hit rate.
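The unfair-allocation problem can be illustrated with a toy model. The sketch below is not the patented method. It assumes a single fully associative 16-line shared cache with plain LRU replacement and synthetic access traces; the class `SharedLRUCache`, the function `run`, and all sizes are hypothetical. It shows how a streaming GPU workload can crowd a CPU program's working set out of a shared cache.

```python
from collections import OrderedDict


class SharedLRUCache:
    """Hypothetical fully associative shared cache with plain LRU replacement.

    Each resident line remembers which core type ("cpu" or "gpu") brought it
    in, so per-type occupancy and hit rates can be inspected.
    """

    def __init__(self, capacity_lines: int):
        self.capacity = capacity_lines
        self.lines = OrderedDict()  # address -> owner, least recently used first
        self.hits = {"cpu": 0, "gpu": 0}
        self.accesses = {"cpu": 0, "gpu": 0}

    def access(self, owner: str, addr: int) -> bool:
        self.accesses[owner] += 1
        if addr in self.lines:
            self.hits[owner] += 1
            self.lines.move_to_end(addr)  # promote to most recently used
            return True
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        self.lines[addr] = owner
        return False

    def occupancy(self, owner: str) -> int:
        return sum(1 for o in self.lines.values() if o == owner)

    def hit_rate(self, owner: str) -> float:
        n = self.accesses[owner]
        return self.hits[owner] / n if n else 0.0


def run(with_gpu: bool) -> SharedLRUCache:
    # Synthetic trace (assumption): the CPU cycles over an 8-line working set,
    # while the GPU streams 4 never-reused lines (one per GPU core) per CPU access.
    cache = SharedLRUCache(capacity_lines=16)
    gpu_addr = 1_000_000
    for i in range(200):
        cache.access("cpu", i % 8)
        if with_gpu:
            for _ in range(4):
                cache.access("gpu", gpu_addr)
                gpu_addr += 1
    return cache


cpu_alone = run(with_gpu=False)
shared = run(with_gpu=True)
```

Run alone, the CPU's 8-line working set fits easily and reaches a 96% hit rate. When it shares the cache with the streaming GPU trace, the GPU lines end up holding 13 of the 16 slots and the CPU gets no hits at all, because each CPU line is evicted before it is reused. A fairness-aware policy aims to prevent exactly this kind of imbalance.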


Embodiment Construction

[0028] In order to make the objectives, technical solutions and advantages of the present invention clearer, the embodiments of the present invention will be described in detail below with reference to the accompanying drawings.

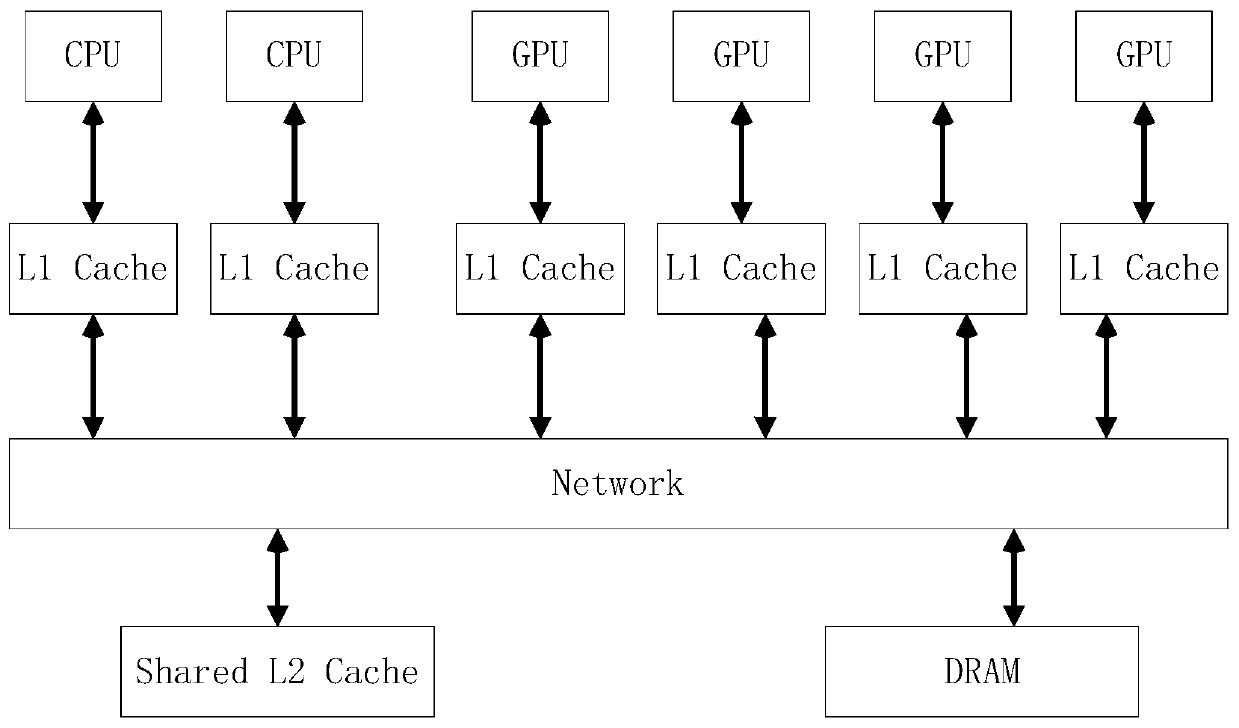

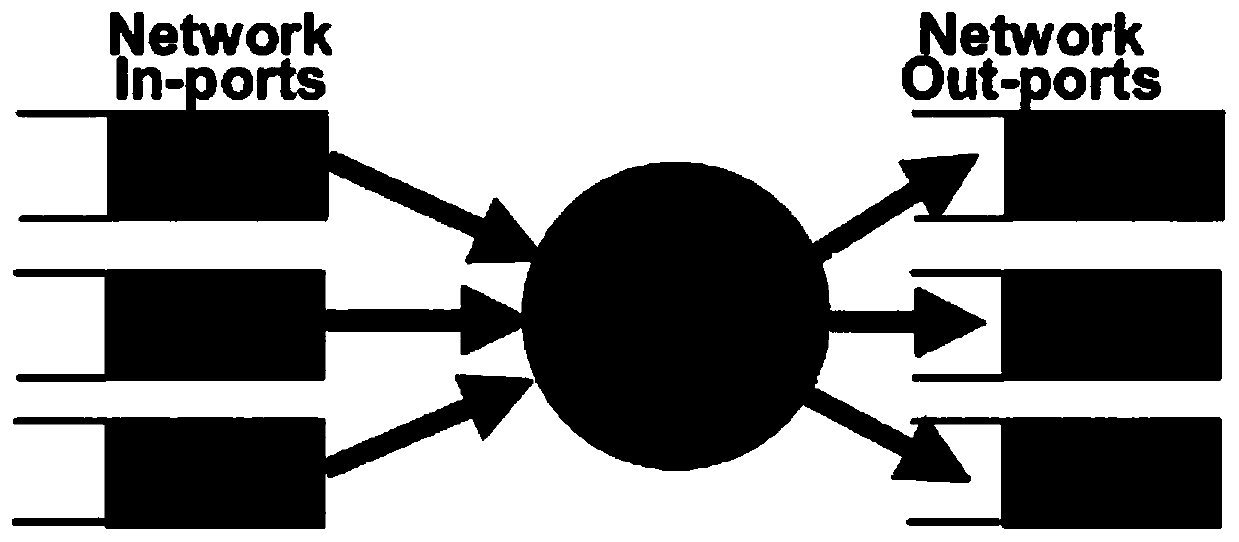

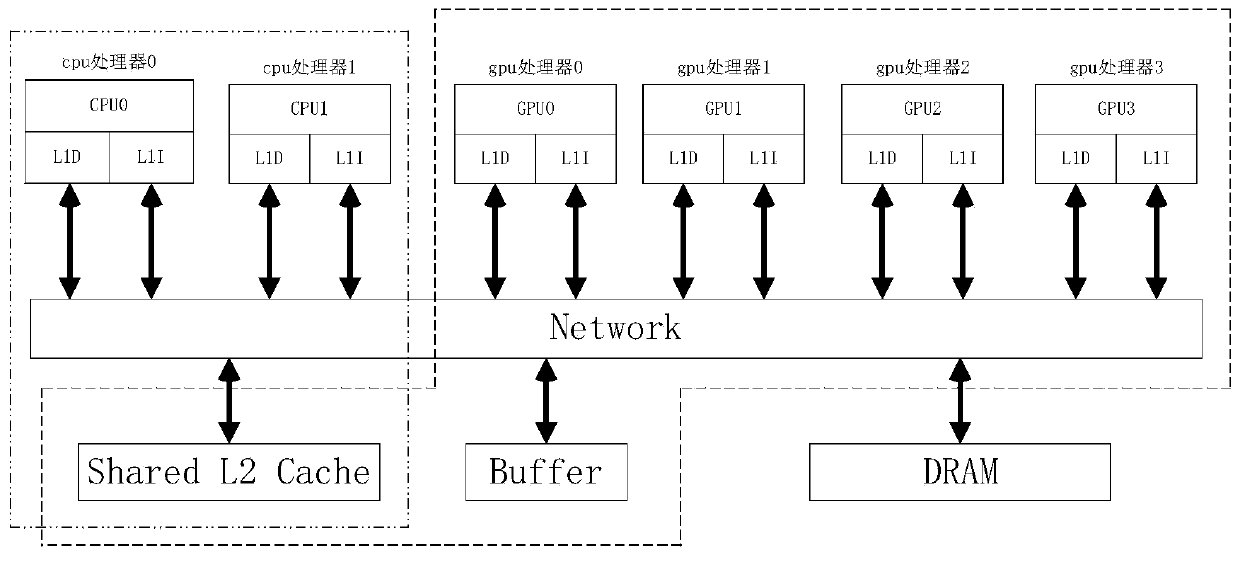

[0029] The invention relates to a high-performance heterogeneous multi-core cache buffer management method. As shown in Figure 1, a heterogeneous processor with two CPU cores and four GPU cores is taken as an example, in which each core has its own private L1 cache and all cores share one L2 cache. The CPU test programs are single-threaded SPEC CPU2006 programs and the GPU applications come from the Rodinia benchmark suite; each workload consists of one CPU test program and one GPU application. In the simulator, the cache coherence protocol is described in the SLICC (Specification Language for Implementing Cache Coherence) scripting language. Figure 2 is a diagram of the SLICC operating mechanism. The specific steps are as follows:
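The evaluated configuration can be captured as a small data model. This is a sketch under stated assumptions, not the simulator's actual configuration format. The names `CoreType`, `Core`, `HeterogeneousSystem`, `build_system`, `Workload`, and the helper `core_type_of` are hypothetical. The ID scheme is also an assumption: CPU cores are numbered first, followed by the GPU cores. The model only encodes what the paragraph states: two CPU cores and four GPU cores, a private L1 cache per core, one shared L2 cache, and workloads that pair one CPU program with one GPU application.

```python
from dataclasses import dataclass
from enum import Enum


class CoreType(Enum):
    CPU = "cpu"
    GPU = "gpu"


@dataclass(frozen=True)
class Core:
    core_id: int
    core_type: CoreType
    l1_cache: str  # name of this core's private L1 cache


@dataclass
class HeterogeneousSystem:
    cores: list[Core]
    shared_l2: str = "shared-L2"  # one L2 cache shared by every core

    def core_type_of(self, core_id: int) -> CoreType:
        # Hypothetical helper: classify a requester as CPU or GPU by its core ID.
        return self.cores[core_id].core_type


def build_system(num_cpu: int = 2, num_gpu: int = 4) -> HeterogeneousSystem:
    """Build the example configuration: CPU cores first, then GPU cores."""
    cores = [Core(i, CoreType.CPU, f"L1-{i}") for i in range(num_cpu)]
    cores += [
        Core(num_cpu + j, CoreType.GPU, f"L1-{num_cpu + j}") for j in range(num_gpu)
    ]
    return HeterogeneousSystem(cores)


@dataclass(frozen=True)
class Workload:
    cpu_program: str      # one single-threaded SPEC CPU2006 program
    gpu_application: str  # one Rodinia application


system = build_system()
```

Keeping the core type on each core record means any request carrying a core ID can be attributed to the CPU or GPU side with a single lookup. A shared-cache policy that treats the two sides differently would need that attribution.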

[0030] Step 1. Distinguish the CPU memory access re...
