Method for Detecting Foreground Targets in Urban Traffic Scenes Based on Feedback Background Extraction

A technology for urban traffic scene background extraction, applied in image data processing, instruments, computing, etc., to achieve significant foreground detection.


Examples

Embodiment 1

[0032] The present invention provides a technical solution: a method for detecting a foreground object in an urban traffic scene based on feedback background extraction, comprising the following steps:

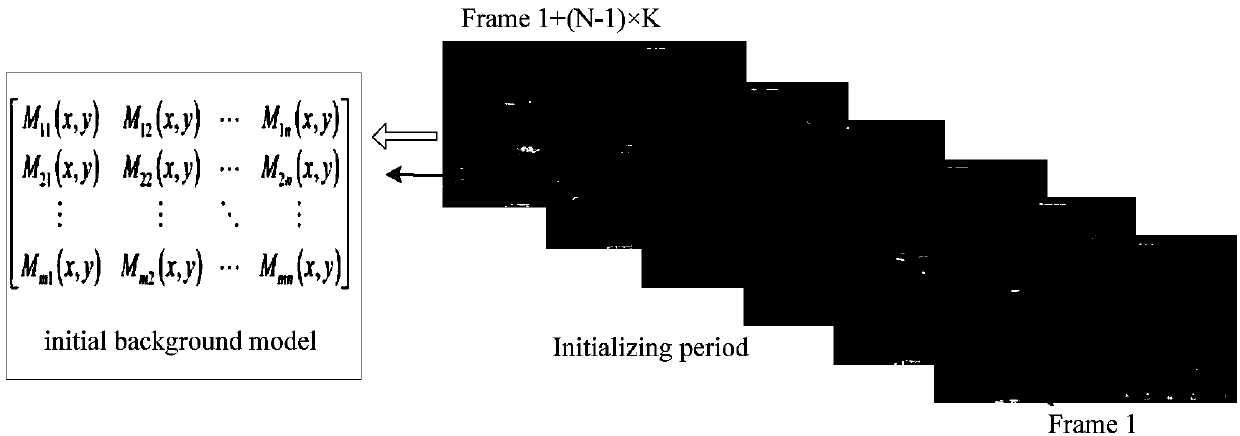

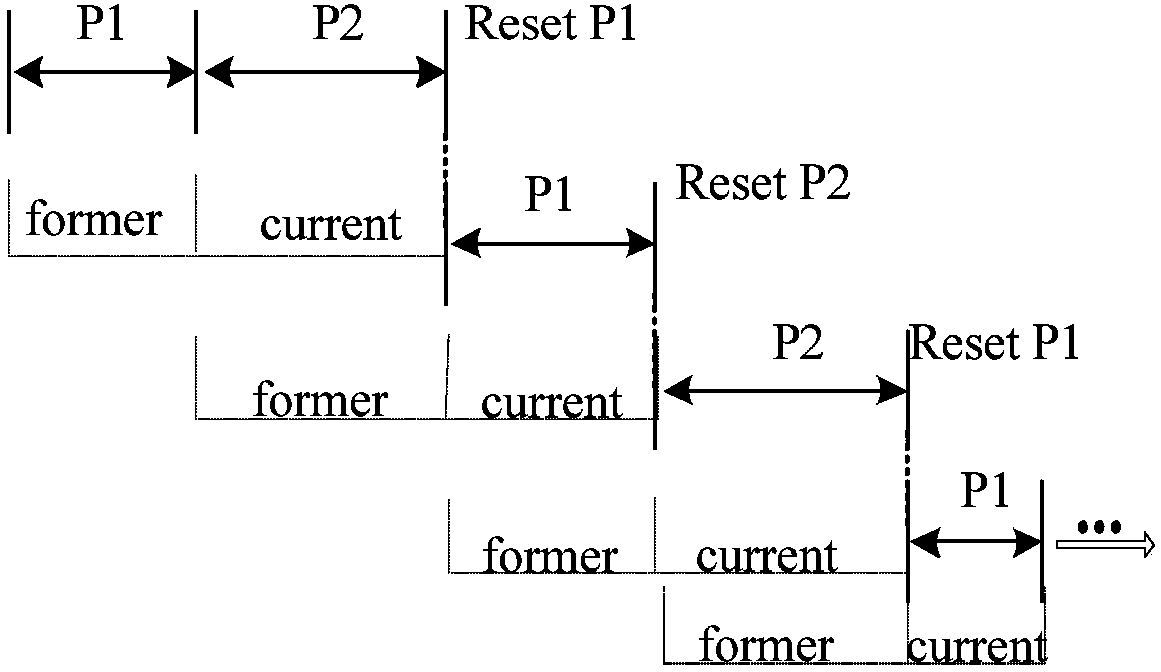

[0033] Step 1: First, build a background model for each pixel position from a set of samples, and initialize the model using frames taken at fixed intervals. In Step 1, FViBe describes the background model $M(x,y)$ of any pixel $(x,y)$ by a set of $N$ recently observed image values $v_m(x,y)$, $m \in [1, N]$. These values are initialized from the most recent observations at position $(x,y)$, taken at a specified time interval, as follows:

[0034]

$$M(x,y)=\{v_1(x,y),v_2(x,y),\dots,v_N(x,y)\}=\{I_1(x,y),I_{1+K}(x,y),\dots,I_{1+(m-1)K}(x,y),\dots,I_{1+(N-1)K}(x,y)\} \tag{1}$$

[0035] In Eq. (1), $N$ is the number of samples in the model, $K$ is the time interval (in frames) between successive samples in the actual scene, $I_1$ denotes frame 1, and $I_{1+(N-1)K}$ denotes frame $1+(N-1)K$. ...
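The interval-frame initialization in Eq. (1) can be sketched as follows. This is a minimal illustration, not the patent's implementation. It assumes the grayscale frames are stacked in a NumPy array of shape (T, H, W), with frame $I_1$ at position 0. The function names `sample_frame_indices` and `init_background_model` are hypothetical, and the values of $N$ and $K$ used in the example are illustrative rather than the patent's settings.

```python
import numpy as np


def sample_frame_indices(n_samples: int, interval: int) -> list[int]:
    """1-based frame numbers used by Eq. (1): 1, 1+K, ..., 1+(N-1)K."""
    return [1 + (m - 1) * interval for m in range(1, n_samples + 1)]


def init_background_model(frames: np.ndarray, n_samples: int, interval: int) -> np.ndarray:
    """Build the per-pixel sample set M(x, y) from frames spaced K apart.

    Assumption (not from the source): `frames` has shape (T, H, W) and
    frames[0] holds frame I_1. Returns an array of shape (N, H, W) where
    model[m - 1, y, x] is the sample v_m(x, y) = I_{1+(m-1)K}(x, y).
    """
    indices = sample_frame_indices(n_samples, interval)
    needed = indices[-1]  # frame 1+(N-1)K must exist
    if frames.shape[0] < needed:
        raise ValueError(f"need at least {needed} frames, got {frames.shape[0]}")
    # Convert 1-based frame numbers to 0-based positions; fancy indexing
    # returns a new array, so the model does not alias the input video.
    return frames[np.asarray(indices) - 1]


# Usage example with a synthetic 100-frame, 4x6 video.
video = np.arange(100 * 4 * 6).reshape(100, 4, 6)
model = init_background_model(video, n_samples=20, interval=5)
print(sample_frame_indices(20, 5)[:4])  # [1, 6, 11, 16]
print(model.shape)                      # (20, 4, 6)
```

Storing the $N$ samples as one (N, H, W) array lets later per-pixel comparisons against all samples be vectorized; the source does not state how FViBe actually stores its model.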

Embodiment 2

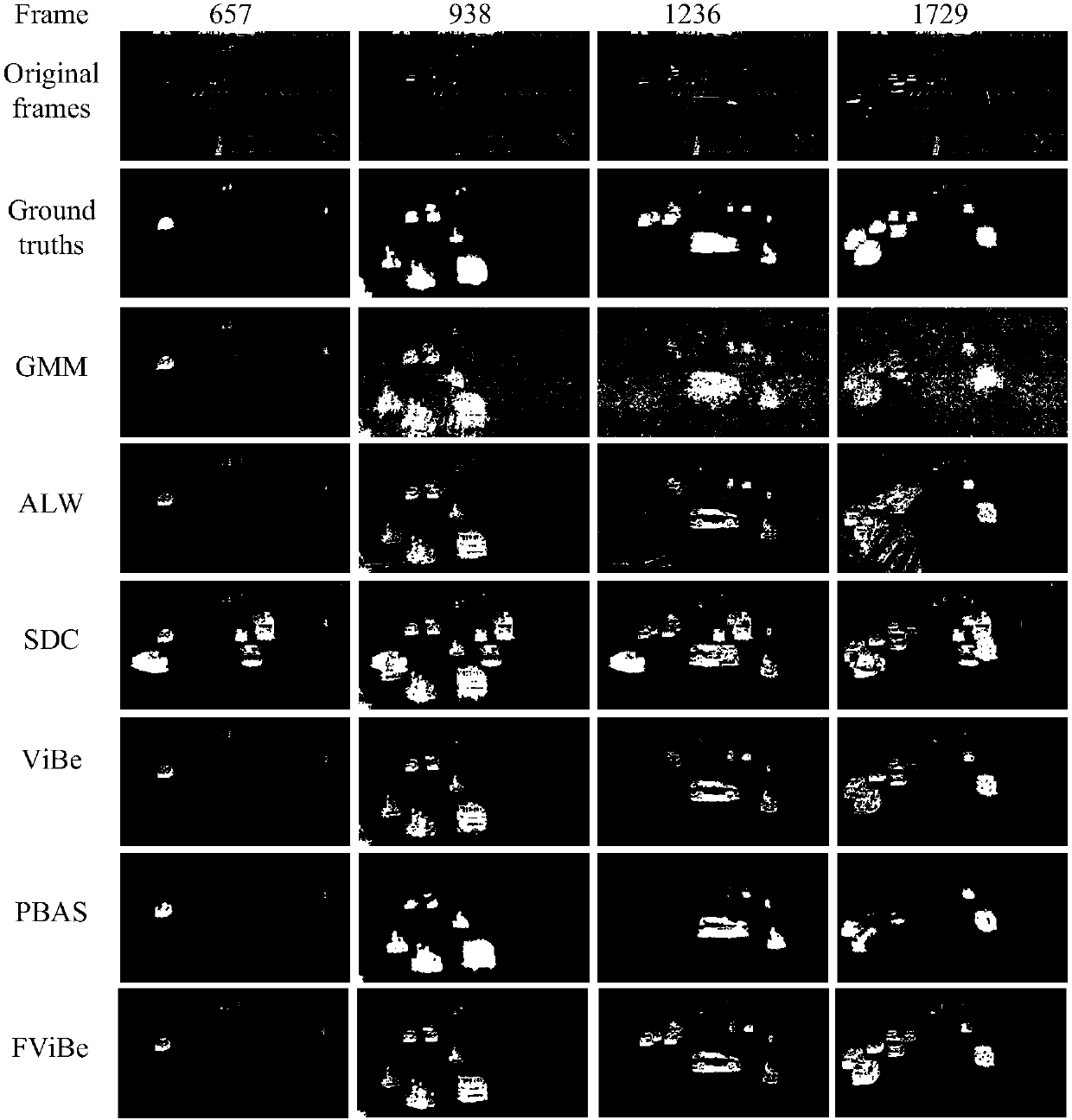

[0059] The present invention provides a specific example for illustration. The urban traffic data, which cover changes in weather and lighting conditions, were provided by the traffic police detachment of Jining City, Shandong Province. They were captured by charge-coupled device (CCD) cameras installed at three different intersections between 7:00 a.m. and 10:00 a.m. The five detection methods compared with the proposed method are GMM, ALW, SDC, ViBe, and PBAS. Each method is run with its authors' settings or default parameters, and the GMM, ViBe, and PBAS tests are based on the open-source computer vision library OpenCV. To evaluate how the methods handle slow-moving or temporarily stopped vehicles in the TLD, comparison results for four representative frames are given. Several of the methods in this comparison are the same as those used in this paper. The detection results of the proposed method are shown in rows three through eight of the figure. The first ...
