Multi-round dialogue model construction method based on hierarchical attention mechanism

A construction method based on attention technology, applied in special data processing applications, instruments, and electrical digital data processing, which addresses problems such as impaired training speed and meaningless replies.


Examples

Specific Embodiment 1

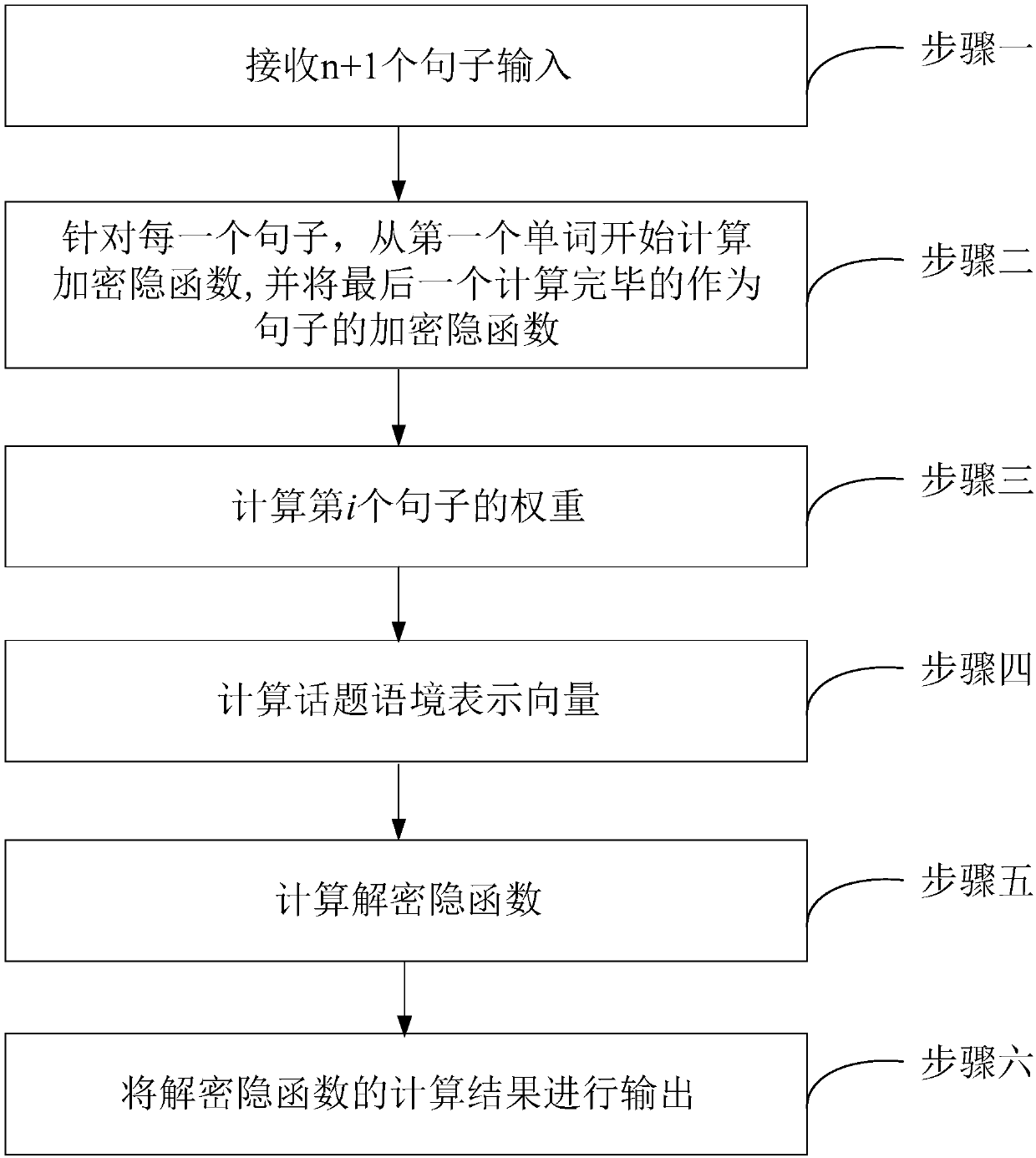

[0049] The present invention provides a method for constructing a multi-round dialogue model based on a hierarchical attention mechanism, as shown in Figure 1, comprising:

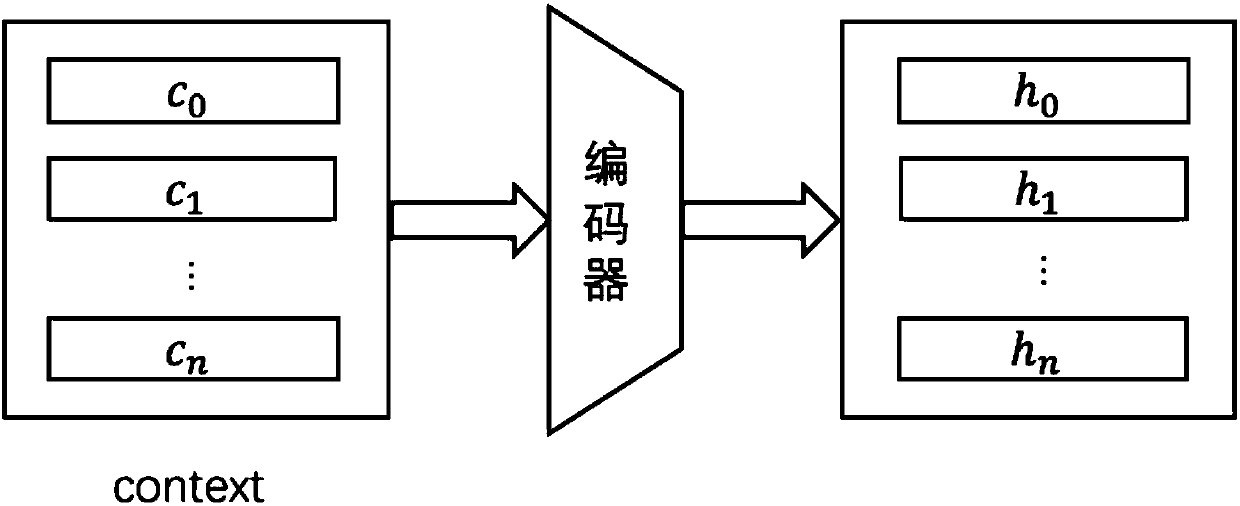

[0050] Step 1. Receive n+1 input sentences $c_0, c_1, \ldots, c_n$.

[0051] Step 2. For each sentence $c_i$, starting from the first word, compute the encoder hidden state $h_{i,t} = f(x_{i,t}, h_{i,t-1})$, where $x_{i,t}$ is the t-th word of $c_i$ and $h_{i,0}$ is a preset parameter. The last computed $h_{i,t}$ is taken as the encoding $h_i$ of sentence $c_i$.
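The patent leaves the recurrent function $f$ unspecified. The sketch below assumes a plain tanh RNN cell for $f$ (a GRU or LSTM would be equally plausible) and uses hypothetical helper names (`rnn_cell`, `encode_sentence`) and weight matrices `w_x`, `w_h`. It only illustrates how the final state of the word-level recurrence becomes the sentence vector $h_i$.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_cell(x: Vector, h_prev: Vector, w_x: Matrix, w_h: Matrix) -> Vector:
    """Assumed form of f: a plain tanh RNN, h_t = tanh(W_x x_t + W_h h_{t-1})."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h_prev))]


def encode_sentence(words: List[Vector], h0: Vector,
                    w_x: Matrix, w_h: Matrix) -> Vector:
    """Run f over the words of sentence c_i; the last state is h_i."""
    h = h0  # h_{i,0}: the preset initial state
    for x in words:  # x is x_{i,t}, the t-th word vector of c_i
        h = rnn_cell(x, h, w_x, w_h)
    return h
```

Applying `encode_sentence` to each of $c_0, \ldots, c_n$ with shared weights yields the sentence vectors $h_0, \ldots, h_n$ used by the attention step.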

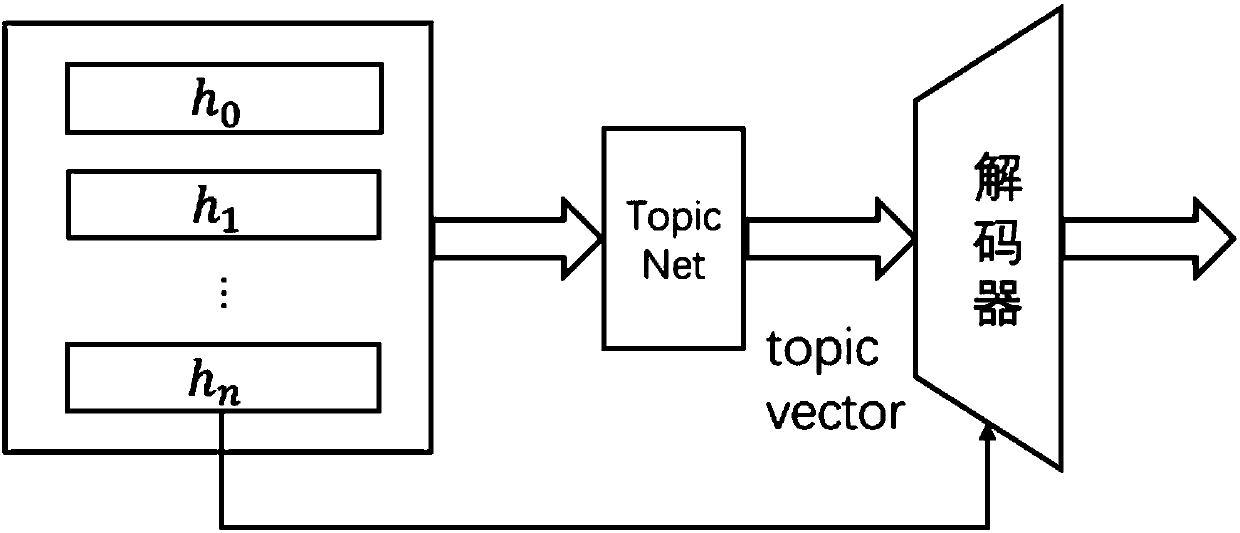

[0052] Step 3. Calculate the attention weight $\alpha_i$ of the i-th sentence, where $e_i = v^\top \tanh(W h_i + U h_n)$; $v$, $W$, and $U$ are preset parameters of the attention mechanism.
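The normalization from $e_i$ to $\alpha_i$ is not recoverable from the text, so the sketch below assumes the usual softmax, $\alpha_i = \exp(e_i) / \sum_j \exp(e_j)$. The function name `sentence_attention` is hypothetical; vectors and matrices are plain Python lists.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sentence_attention(hs: List[Vector], v: Vector,
                       w: Matrix, u: Matrix) -> List[float]:
    """Topic attention weights alpha_i over sentence vectors h_0..h_n.

    Scores follow the text: e_i = v^T tanh(W h_i + U h_n), with h_n the
    last sentence. Softmax normalization is an assumption.
    """
    u_hn = matvec(u, hs[-1])  # U h_n is the same for every sentence
    scores = []
    for h_i in hs:
        hidden = [math.tanh(a + b) for a, b in zip(matvec(w, h_i), u_hn)]
        scores.append(sum(vi * zi for vi, zi in zip(v, hidden)))
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

Because the query is always the last sentence $h_n$, these weights are computed once per dialogue context, not once per output word.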

[0053] Step 4. Calculate the topic context representation vector $T = \sum_i \alpha_i h_i$.
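Step 4 is a weighted sum of the sentence vectors. A minimal sketch, with the hypothetical function name `weighted_context`:

```python
from typing import List

Vector = List[float]


def weighted_context(alphas: List[float], hs: List[Vector]) -> Vector:
    """Topic vector T = sum_i alpha_i h_i."""
    if len(alphas) != len(hs):
        raise ValueError("need one weight per sentence vector")
    dim = len(hs[0])
    out = [0.0] * dim
    for a, h in zip(alphas, hs):
        for k in range(dim):
            out[k] += a * h[k]
    return out
```

For example, `weighted_context([0.25, 0.75], [[1.0, 0.0], [0.0, 2.0]])` returns `[0.25, 1.5]`.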

[0054] Step 5. Calculate the decoder hidden state $s_t = f(y_{t-1}, s_{t-1}, T)$, where $y_{t-1}$ denotes the input at time t-1, 0 is the d...
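Because Step 5 is truncated, the output layer that produces $y_t$ is not shown here. The sketch below only unrolls the state update, assuming $f$ is a tanh RNN over its three inputs, $s_t = \tanh(A y_{t-1} + B s_{t-1} + C T)$, with hypothetical matrices `a`, `b`, `c` and the previous outputs `ys` supplied as given vectors (as in teacher forcing). It shows that the same topic vector $T$ conditions every decoding step.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def decoder_step(y_prev: Vector, s_prev: Vector, ctx: Vector,
                 a: Matrix, b: Matrix, c: Matrix) -> Vector:
    """Assumed form of f: s_t = tanh(A y_{t-1} + B s_{t-1} + C ctx)."""
    terms = zip(matvec(a, y_prev), matvec(b, s_prev), matvec(c, ctx))
    return [math.tanh(p + q + r) for p, q, r in terms]


def decode_states(ys: List[Vector], s0: Vector, topic: Vector,
                  a: Matrix, b: Matrix, c: Matrix) -> List[Vector]:
    """Unroll s_t = f(y_{t-1}, s_{t-1}, T); T is reused at every step."""
    states = []
    s = s0
    for y_prev in ys:
        s = decoder_step(y_prev, s, topic, a, b, c)
        states.append(s)
    return states
```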

Specific Embodiment 2

[0059] The present invention also provides another method for constructing a multi-round dialogue model based on a hierarchical attention mechanism, including:

[0060] Step 1. Receive n+1 input sentences $c_0, c_1, \ldots, c_n$.

[0061] Step 2. For each sentence $c_i$, starting from the first word, compute the encoder hidden state $h_{i,t} = f(x_{i,t}, h_{i,t-1})$, where $x_{i,t}$ is the t-th word of $c_i$ and $h_{i,0}$ is a preset parameter. The last computed $h_{i,t}$ is taken as the encoding $h_i$ of sentence $c_i$.

[0062] Step 3. Calculate the attention weight $\alpha_{it}$ of the i-th sentence for the t-th output word, where $e_{it} = v^\top \tanh(W h_i + U s_{t-1})$; $v$, $W$, and $U$ are preset parameters of the attention mechanism, and $s_{t-1}$ is the hidden-layer state at time t-1.
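Unlike Embodiment 1, the query here is the previous decoder state $s_{t-1}$ rather than the last sentence vector $h_n$, so the weights can change from one decoding step to the next. A sketch assuming softmax normalization (not stated in the text), with the hypothetical function name `dynamic_attention`:

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dynamic_attention(hs: List[Vector], s_prev: Vector,
                      v: Vector, w: Matrix, u: Matrix) -> List[float]:
    """Weights alpha_{it} over sentence vectors h_0..h_n at decoding step t.

    Scores follow the text: e_it = v^T tanh(W h_i + U s_{t-1}).
    Softmax normalization is an assumption.
    """
    u_s = matvec(u, s_prev)  # depends on the decoder state, not on h_n
    scores = []
    for h_i in hs:
        hidden = [math.tanh(a + b) for a, b in zip(matvec(w, h_i), u_s)]
        scores.append(sum(vi * zi for vi, zi in zip(v, hidden)))
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

Calling it once per decoding step, with that step's $s_{t-1}$, gives one row of weights $\alpha_{0t}, \ldots, \alpha_{nt}$ per output word.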

[0063] Step 4. Calculate the dynamic representation vector $D_t = \sum_i \alpha_{it} h_i$.
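Reading the formula as a sum over sentences $i$ (mirroring $T$ in Embodiment 1), $D_t$ is recomputed at every decoding step from that step's weights. A sketch with the hypothetical name `dynamic_contexts`, where row t of `alpha_rows` holds $\alpha_{0t}, \ldots, \alpha_{nt}$:

```python
from typing import List

Vector = List[float]


def dynamic_contexts(alpha_rows: List[List[float]],
                     hs: List[Vector]) -> List[Vector]:
    """One context vector D_t = sum_i alpha_{it} h_i per decoding step t."""
    contexts = []
    for row in alpha_rows:
        if len(row) != len(hs):
            raise ValueError("need one weight per sentence vector")
        d = [0.0] * len(hs[0])
        for a, h in zip(row, hs):
            for k, hk in enumerate(h):
                d[k] += a * hk
        contexts.append(d)
    return contexts
```

With `hs = [[1.0, 0.0], [0.0, 1.0]]`, weight rows `[[1.0, 0.0], [0.5, 0.5]]` give `[[1.0, 0.0], [0.5, 0.5]]`: a different context for each step, whereas $T$ stays fixed.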

[0064] Step 5. Calculate the decoder hidden state $s_t = f(y_{t-1}, s_{t-1}, D_t)$, $y_{t-1}$...
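Putting Steps 3 to 5 of Embodiment 2 together: at each step the previous decoder state selects the attention weights, the weights give $D_t$, and $D_t$ feeds the next state. This sketch assumes softmax-normalized attention and a tanh RNN for $f$; all names (`decode_with_dynamic_attention`, `att_v`, `att_w`, `att_u`, `a`, `b`, `c`) are hypothetical, and the truncated output layer is again omitted, so `ys` are supplied as given vectors.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def tanh_vec(v: Vector) -> Vector:
    return [math.tanh(x) for x in v]


def add(*vs: Vector) -> Vector:
    """Elementwise sum of equal-length vectors."""
    return [sum(xs) for xs in zip(*vs)]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def decode_with_dynamic_attention(
        hs: List[Vector], ys: List[Vector], s0: Vector,
        att_v: Vector, att_w: Matrix, att_u: Matrix,
        a: Matrix, b: Matrix, c: Matrix) -> Tuple[List[Vector], List[List[float]]]:
    """Unroll s_t = f(y_{t-1}, s_{t-1}, D_t), recomputing D_t at every step.

    Attention: e_it = v^T tanh(W h_i + U s_{t-1}), alpha = softmax(e) (assumed).
    Decoder:   s_t = tanh(A y_{t-1} + B s_{t-1} + C D_t)            (assumed f).
    Returns the decoder states and the attention weights used at each step.
    """
    s = s0
    states, weights = [], []
    for y_prev in ys:
        u_s = matvec(att_u, s)  # U s_{t-1}, shared across sentences
        scores = []
        for h in hs:
            hidden = tanh_vec(add(matvec(att_w, h), u_s))
            scores.append(sum(vi * zi for vi, zi in zip(att_v, hidden)))
        alpha = softmax(scores)
        d_t = [sum(al * h[k] for al, h in zip(alpha, hs)) for k in range(len(hs[0]))]
        s = tanh_vec(add(matvec(a, y_prev), matvec(b, s), matvec(c, d_t)))
        states.append(s)
        weights.append(alpha)
    return states, weights
```

Returning the per-step weights makes it easy to inspect which context sentences the decoder attends to as the reply unfolds.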

Example 1

[0083] Context:

[0084] Now I'm going to try the development version from the PPA and see if it crashes again.

[0085] Are you looking at your computer's CPU temperature?

[0086] No, I haven't had a problem with the temperature....where can I see it's temperature?

[0087] Topic model: you can try to delete your config file and try again

[0088] Dyna model: try running lspci from terminal, there is a list

[0089] HRED model: System -> Preferences -> Power Management

[0090] VHRED model: I don't understand what you mean

[0091] LSTM model: I don't understand
