Asynchronous training of machine learning models

A technique involving machine learning models and training data, applied in the field of asynchronous training of machine learning models, which can address problems such as mismatch among workers.

Detailed Description

[0012] The present disclosure will now be discussed with reference to several embodiments. It should be understood that these embodiments are discussed only to enable those of ordinary skill in the art to better understand and thus implement the present disclosure, and do not imply any limitation on the scope of the present disclosure.

[0013] As used herein, the term "comprising" and variations thereof are to be read as open-ended terms meaning "including but not limited to". The term "based on" is to be read as "based at least in part on". The terms "an embodiment" and "one embodiment" are to be read as "at least one embodiment". The term "another embodiment" is to be read as "at least one other embodiment". The terms "first", "second", etc. may refer to different objects or to the same object. Other definitions, both express and implied, may also be included below.

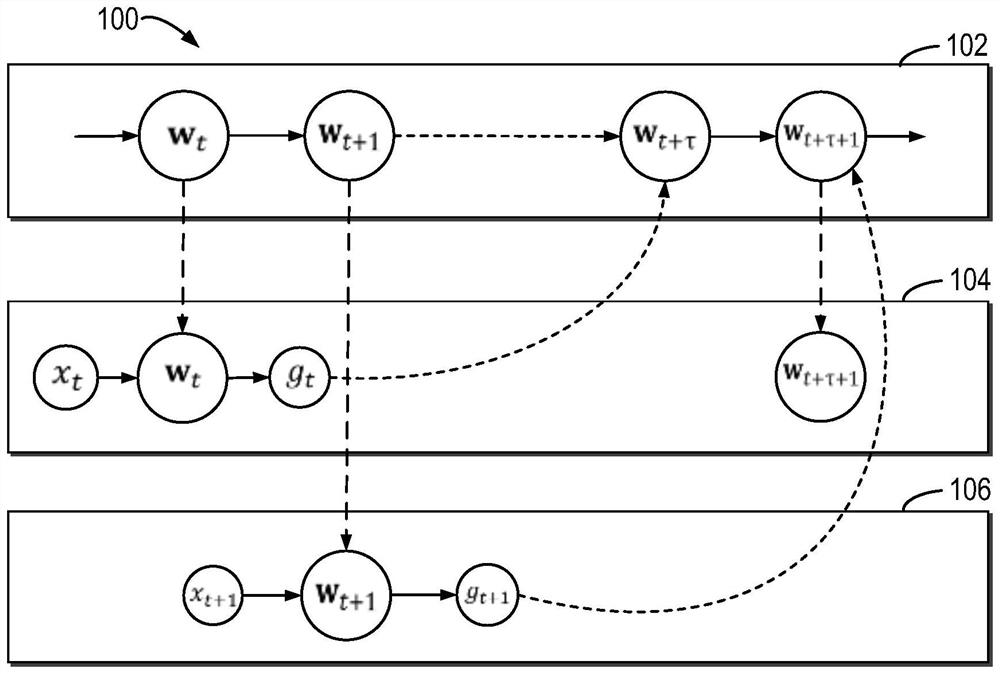

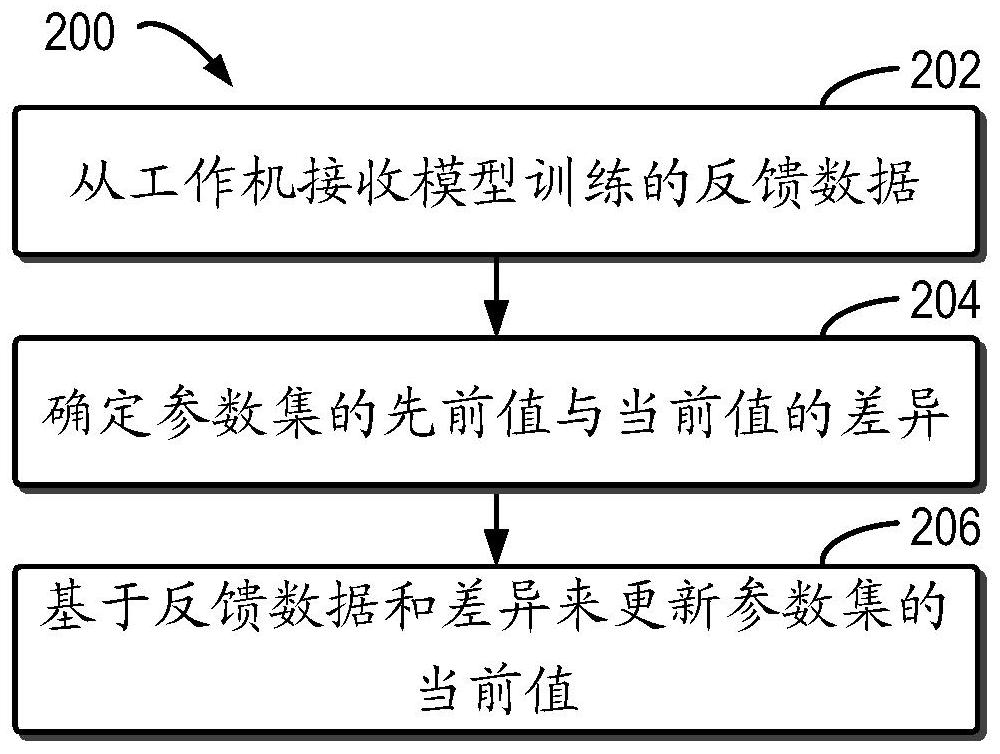

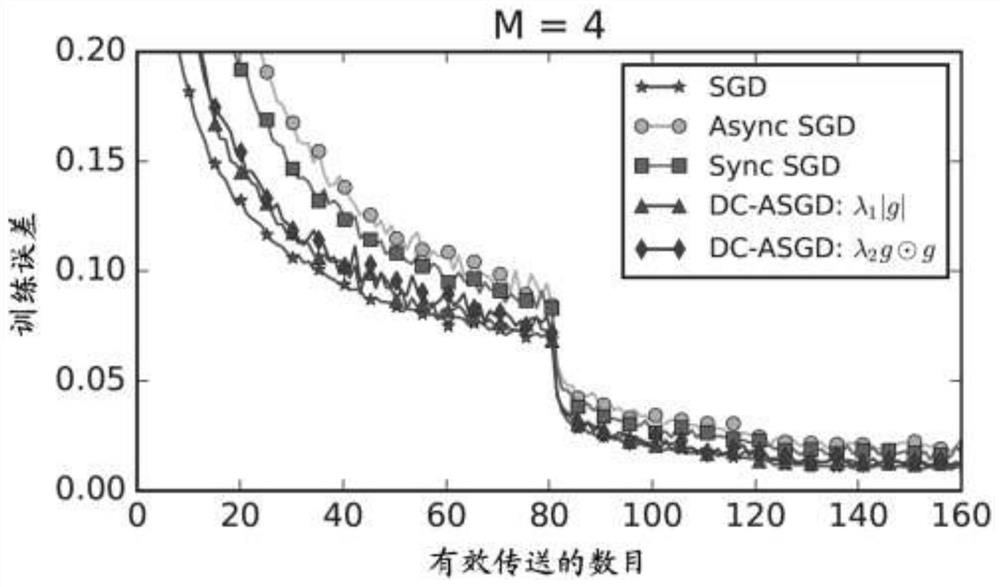

[0014] Asynchronous Training Architecture

[0015] FIG. 1 shows a block diagram of a parallel computing environment ...
