Face expression editing method based on generative adversarial networks

A facial expression editing technology based on generative adversarial networks, applied in the field of computer vision. It addresses the difficulty of modifying facial texture and preserves facial identity information while the expression is edited.


Embodiment Construction

[0023] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

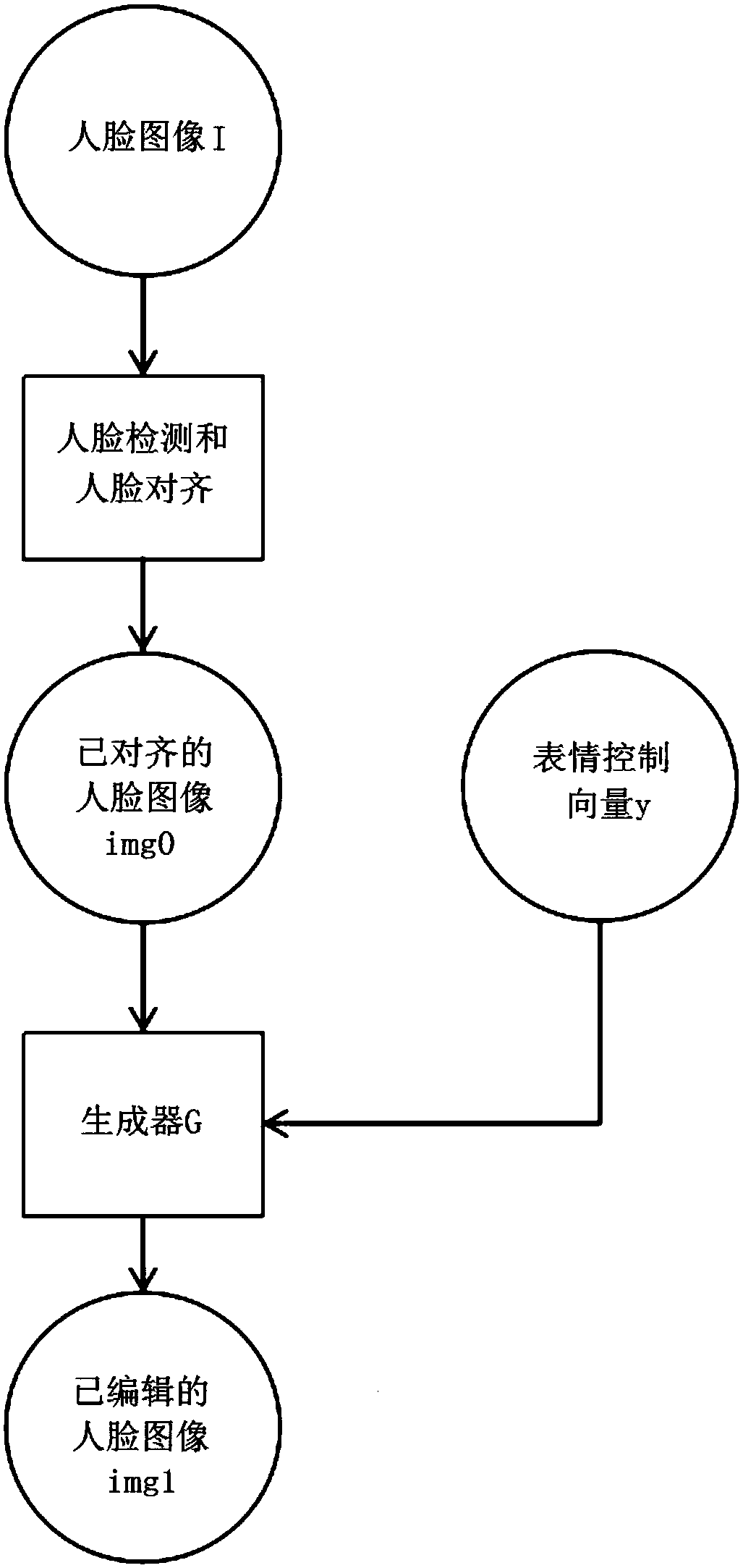

[0024] A facial expression editing method based on a generative adversarial network. The overall steps are:

[0025] Step S1, data preparation stage

[0026] a. Manually label each face in the RGB image set with its identity and its facial expression. The label of each picture is written [i, j], where i means the picture belongs to the i-th individual (0≤i<N) and j means the picture shows the j-th expression (0≤j<M). The entire image set contains N individuals and M expressions;

[0027] b. Use a face detector and a facial landmark detector to crop the faces out of the labeled images, then perform face alignment;
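
The data preparation in step S1 can be illustrated with a minimal sketch. The text does not say how the [i, j] labels are encoded or how alignment is computed, so everything below is an assumption made for illustration: the function names `encode_label` and `eye_alignment_matrix`, the one-hot encoding, the use of the two eye centers as alignment landmarks, and the default crop size and eye placement are all hypothetical. The eye coordinates are taken as given, since the text does not name a specific face detector or landmark detector.

```python
import numpy as np


def encode_label(i, j, num_identities, num_expressions):
    """One-hot encode a [i, j] label (assumed encoding, not from the source).

    i is the identity index (0 <= i < N), j is the expression index (0 <= j < M).
    """
    if not 0 <= i < num_identities:
        raise ValueError("identity index i must satisfy 0 <= i < N")
    if not 0 <= j < num_expressions:
        raise ValueError("expression index j must satisfy 0 <= j < M")
    identity = np.zeros(num_identities, dtype=np.float32)
    expression = np.zeros(num_expressions, dtype=np.float32)
    identity[i] = 1.0
    expression[j] = 1.0
    return identity, expression


def eye_alignment_matrix(left_eye, right_eye, out_size=128,
                         eye_y=0.4, eye_dist=0.4):
    """Return a 2x3 similarity transform that moves the two eye centers
    to fixed positions in an out_size x out_size crop.

    left_eye / right_eye are (x, y) pixel coordinates in the source image,
    where "left" is the eye with the smaller x in an upright face.
    eye_y and eye_dist are fractions of out_size (hypothetical defaults).
    """
    src_l = np.asarray(left_eye, dtype=np.float64)
    src_r = np.asarray(right_eye, dtype=np.float64)
    dst_l = np.array([out_size * (0.5 - eye_dist / 2), out_size * eye_y])
    dst_r = np.array([out_size * (0.5 + eye_dist / 2), out_size * eye_y])

    src_vec = src_r - src_l
    dst_vec = dst_r - dst_l
    src_len = np.linalg.norm(src_vec)
    if src_len == 0:
        raise ValueError("eye centers must be distinct points")

    # Scale and rotation that map the source eye vector onto the target one.
    scale = np.linalg.norm(dst_vec) / src_len
    theta = (np.arctan2(dst_vec[1], dst_vec[0])
             - np.arctan2(src_vec[1], src_vec[0]))
    rot = scale * np.array([[np.cos(theta), -np.sin(theta)],
                            [np.sin(theta), np.cos(theta)]])

    # Translation chosen so the left eye lands exactly on its target.
    trans = dst_l - rot @ src_l
    return np.hstack([rot, trans[:, None]])


def apply_transform(matrix, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return matrix @ np.array([x, y, 1.0])
```

The resulting 2x3 matrix has the layout that common image-warping routines expect for an affine transform, so it could be passed to such a routine to produce the aligned crop. A full pipeline would typically use more landmarks than the two eyes; the two-point version is used here only to keep the sketch short.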

[0028] Step S2, model design stage

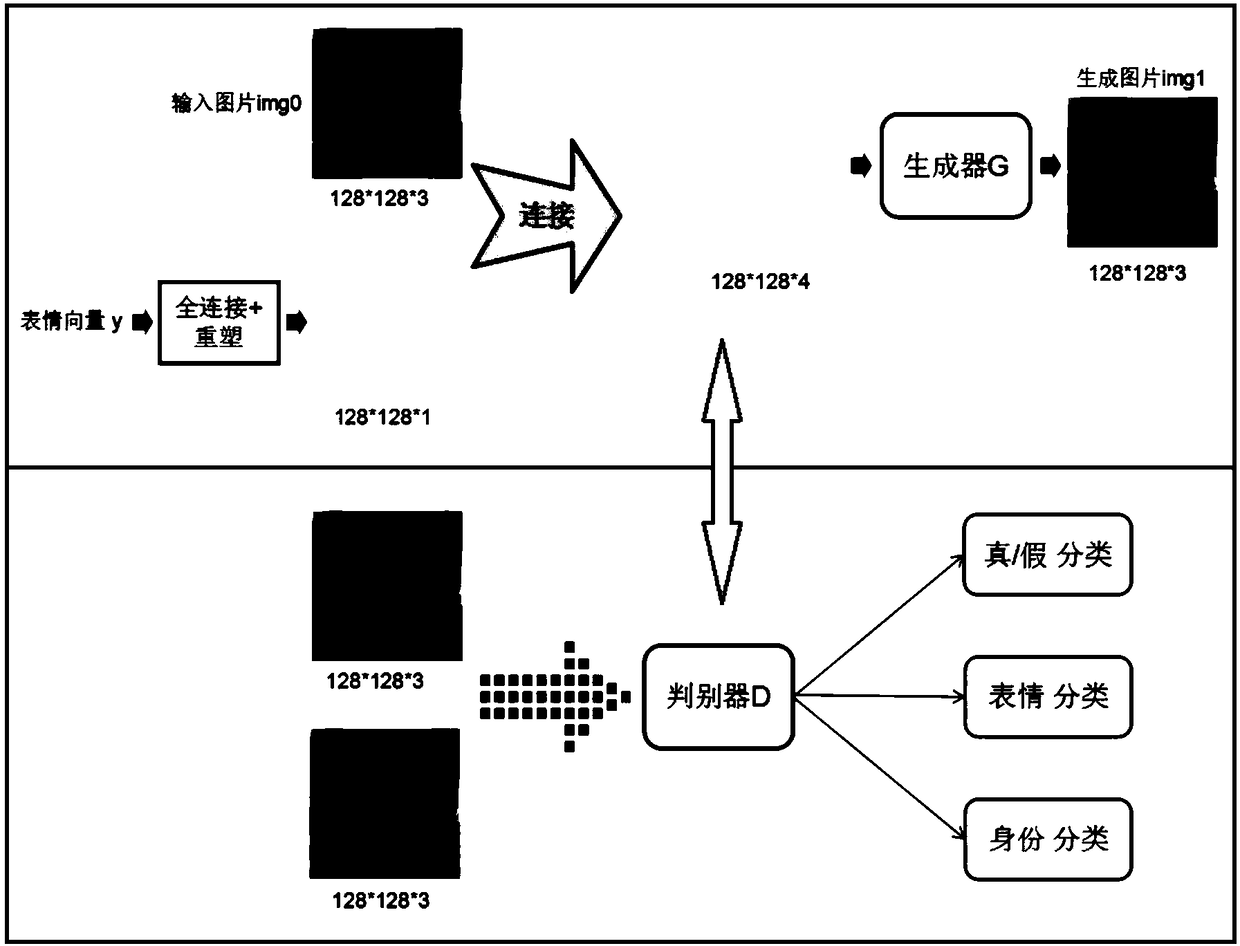

[0029] a. The model consists of two parts, namely the generator G and the discriminat...
