Information fusion method for a Kinect depth camera and a thermal infrared camera

A fusion method for a depth camera and a thermal infrared camera, applied in image data processing, instrumentation, and computing. It addresses problems such as poor imaging quality, unsatisfactory reconstruction results, and limited calibration parameter accuracy, and achieves the effect of optimizing the internal and external camera parameters.


Embodiment Construction

[0027] Specific embodiments of the present invention are described below so that those skilled in the art can understand the invention. It should be clear, however, that the invention is not limited to the scope of these specific embodiments. To those of ordinary skill in the art, various changes are obvious as long as they fall within the spirit and scope of the present invention as defined and determined by the appended claims, and all inventions and creations that make use of the concept of the present invention fall within its scope of protection.

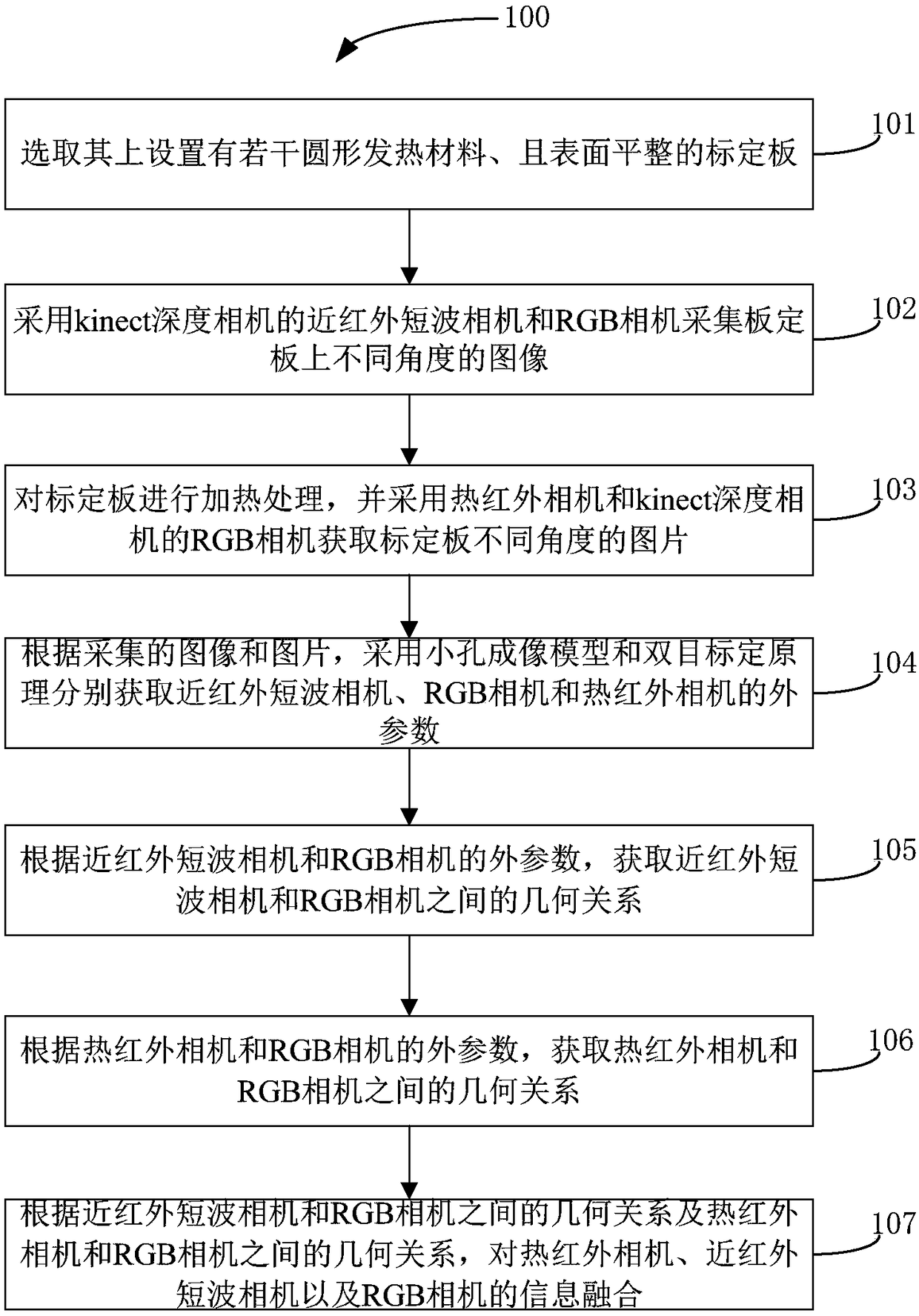

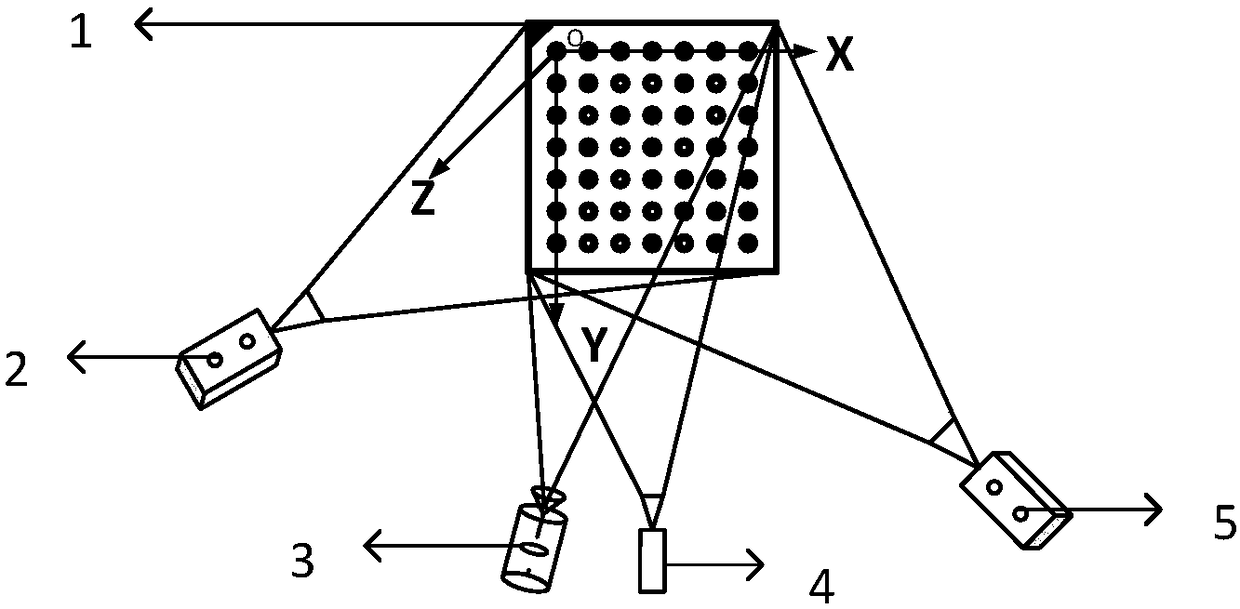

[0028] Referring to Figure 1, which shows a flow chart of the information fusion method for a Kinect depth camera and a thermal infrared camera 5: as shown in Figure 1, the method 100 includes steps 101 to 107.

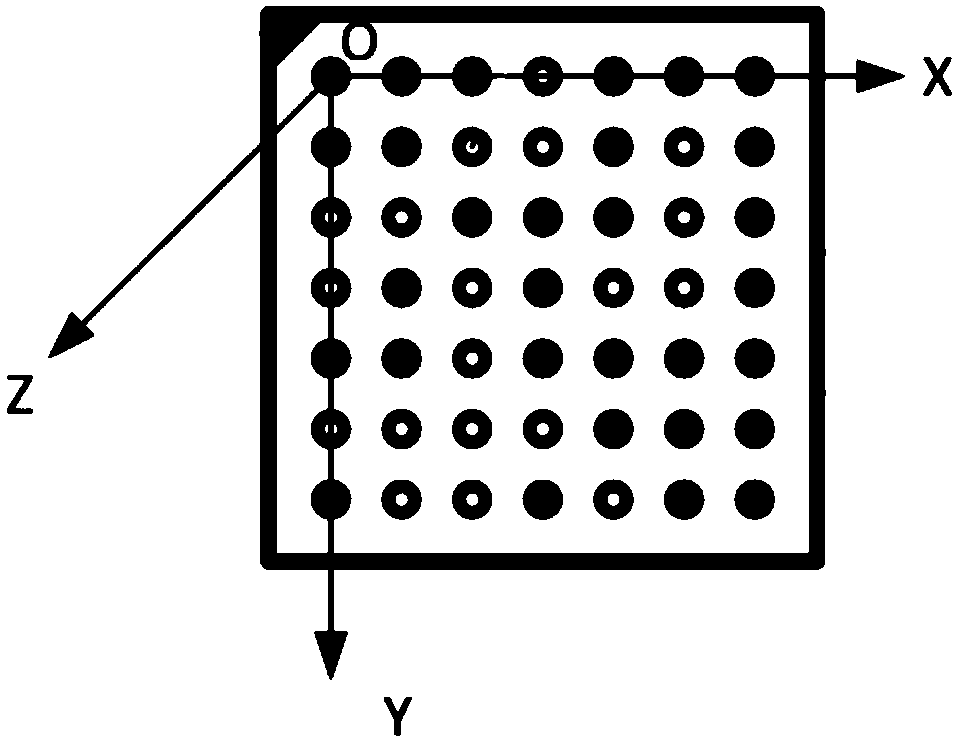

[0029] In step 101, a calibration plate 1 having a flat surface and several circular heating materials is selected.
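As an illustration of how such a plate can serve as a calibration target, the following minimal sketch generates the reference coordinates of the circle centers on a flat plate. The text above does not state the number of circles, their arrangement, or their spacing, so the regular grid layout, the function name `circle_grid_centers`, and the values `rows=4`, `cols=5`, and `spacing_mm=40.0` are all assumptions made purely for illustration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def circle_grid_centers(rows: int, cols: int, spacing_mm: float) -> List[Point3D]:
    """Return the (x, y, z) centers of a rows x cols circle grid.

    Assumption: the circles lie on a regular grid on a flat plate,
    so every center has z = 0 in the plate's own coordinate frame.
    """
    if rows <= 0 or cols <= 0 or spacing_mm <= 0:
        raise ValueError("rows, cols and spacing_mm must be positive")
    # Row-major order: left to right within a row, then row by row.
    return [
        (c * spacing_mm, r * spacing_mm, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


# Hypothetical plate: 4 rows x 5 columns, 40 mm between circle centers.
centers = circle_grid_centers(rows=4, cols=5, spacing_mm=40.0)
```

Because the plate is flat, a list of this kind could be paired with the circle centers detected in each camera's image when estimating internal and external parameters; the detection and optimization steps themselves are not shown here.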

[0030] During implementation, the material of the calibration plate 1 is preferably heat insulating mater...
