Video understanding method

A video-frame-based technique, applied in the field of video understanding, that addresses the inability to extract dense frame features.

Embodiment Construction

[0043] Preferred embodiments of the present invention are described below with reference to the accompanying drawings. Those skilled in the art should understand that these embodiments are only used to explain the technical principles of the present invention, and are not intended to limit the protection scope of the present invention.

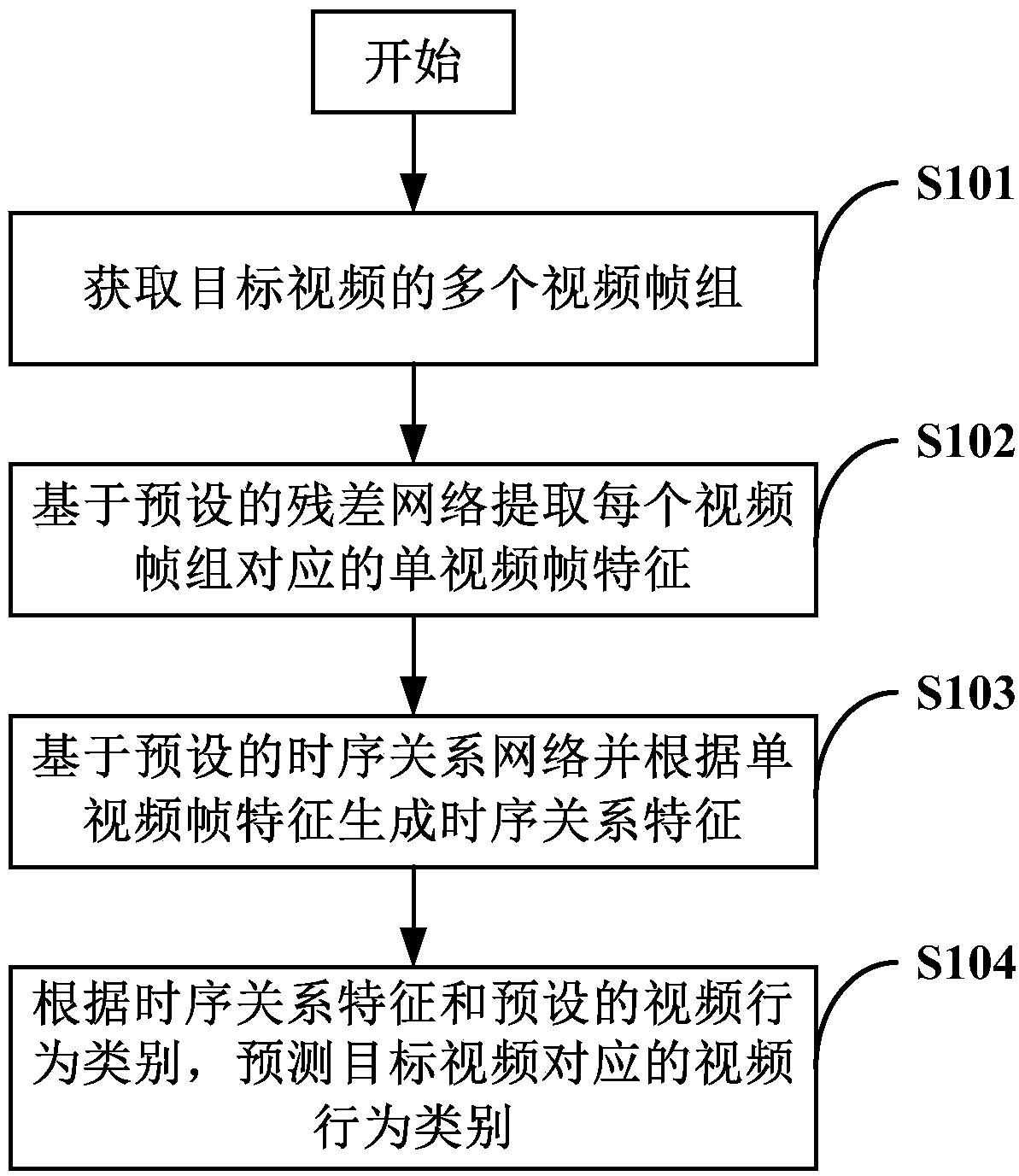

[0044] Referring to Figure 1, the main steps of a video understanding method in this embodiment are shown by way of example. As shown in Figure 1, the video understanding method in this embodiment may include the following steps:

[0045] Step S101: Obtain multiple video frame groups of the target video.

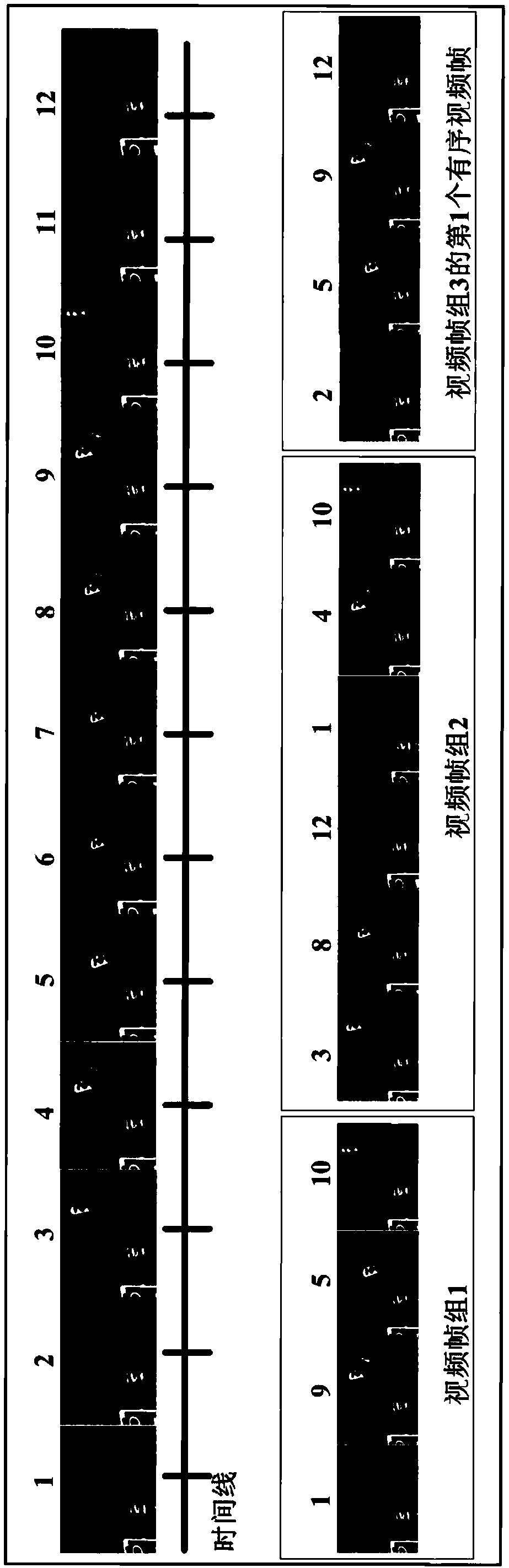

[0046] Each video frame group in this embodiment may include two ordered video frames, where each "ordered video frame" is itself a sequence of multiple video frames arranged in time order.
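The structure described in paragraph [0046] can be sketched as a small data type. This is only an illustrative reading of the text, not the patent's implementation: the names `Frame`, `VideoFrameGroup`, and `is_time_ordered`, the use of a timestamp in seconds, and the interpretation of "a plurality" as at least two frames are all assumptions. The sketch does not show how groups are obtained from the target video, since those steps are not given here.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Frame:
    """Hypothetical stand-in for one video frame; only its time matters here."""
    timestamp: float  # assumed unit: seconds from the start of the video


def is_time_ordered(frames: Sequence[Frame]) -> bool:
    """Return True if frames are arranged in non-decreasing time order."""
    return all(a.timestamp <= b.timestamp for a, b in zip(frames, frames[1:]))


@dataclass(frozen=True)
class VideoFrameGroup:
    """Two ordered frame sequences, following the wording of [0046]."""
    first: Tuple[Frame, ...]
    second: Tuple[Frame, ...]

    def __post_init__(self) -> None:
        for name, seq in (("first", self.first), ("second", self.second)):
            # "A plurality of video frames" is read here as two or more.
            if len(seq) < 2:
                raise ValueError(f"{name} must contain at least two frames")
            if not is_time_ordered(seq):
                raise ValueError(f"{name} is not arranged in time order")


# Example: one group built from five frames of a hypothetical target video.
group = VideoFrameGroup(
    first=(Frame(0.0), Frame(0.5), Frame(1.0)),
    second=(Frame(1.5), Frame(2.0)),
)
```

Validating the ordering at construction time means any later step that consumes a group can rely on the time order without checking it again.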

[0047] Specifically, in this embodiment, the video frame group of the target video can be obtained according to the following steps:

[0...
