Motion recognition method based on a segmented human body model for human-machine cooperation

A human body model and action recognition technology, applied in the field of human-computer interaction, that addresses problems such as differences, the large amount of information, and the difficulty of human action recognition.


Specific embodiment

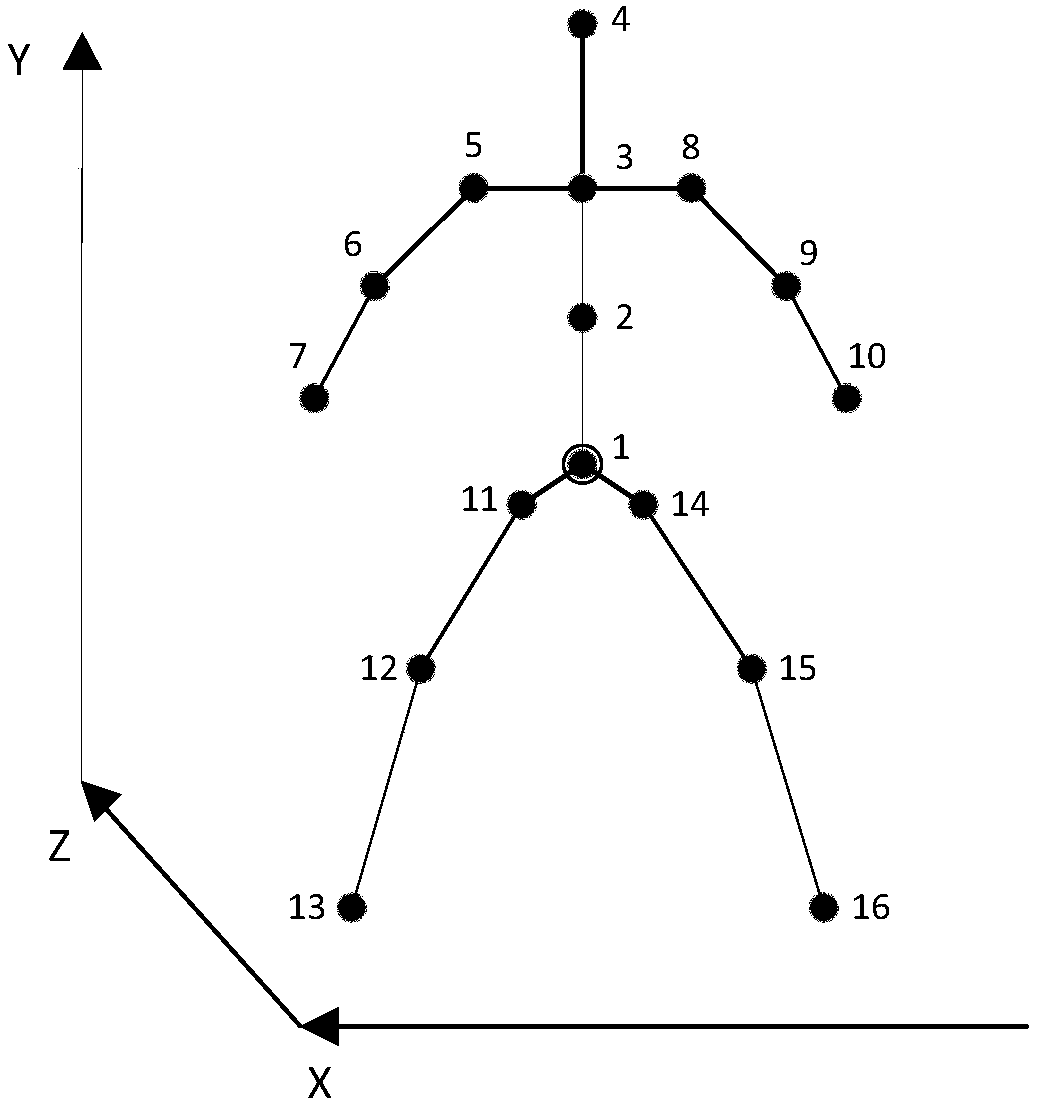

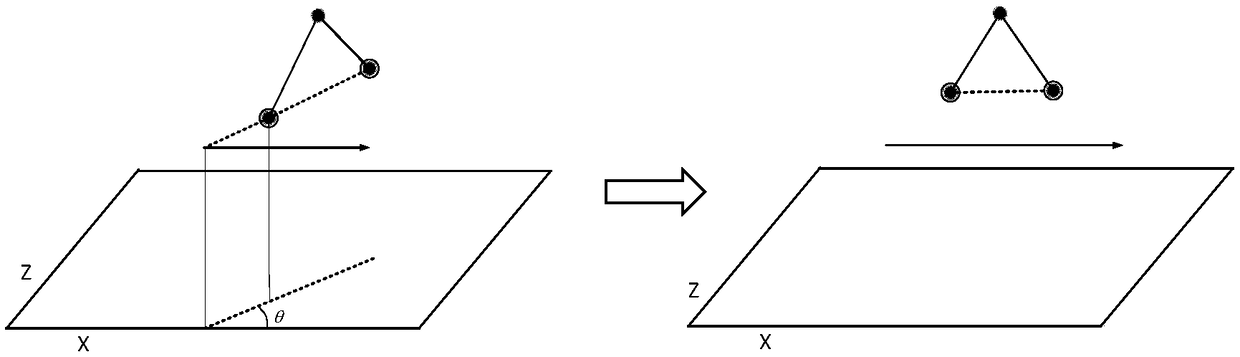

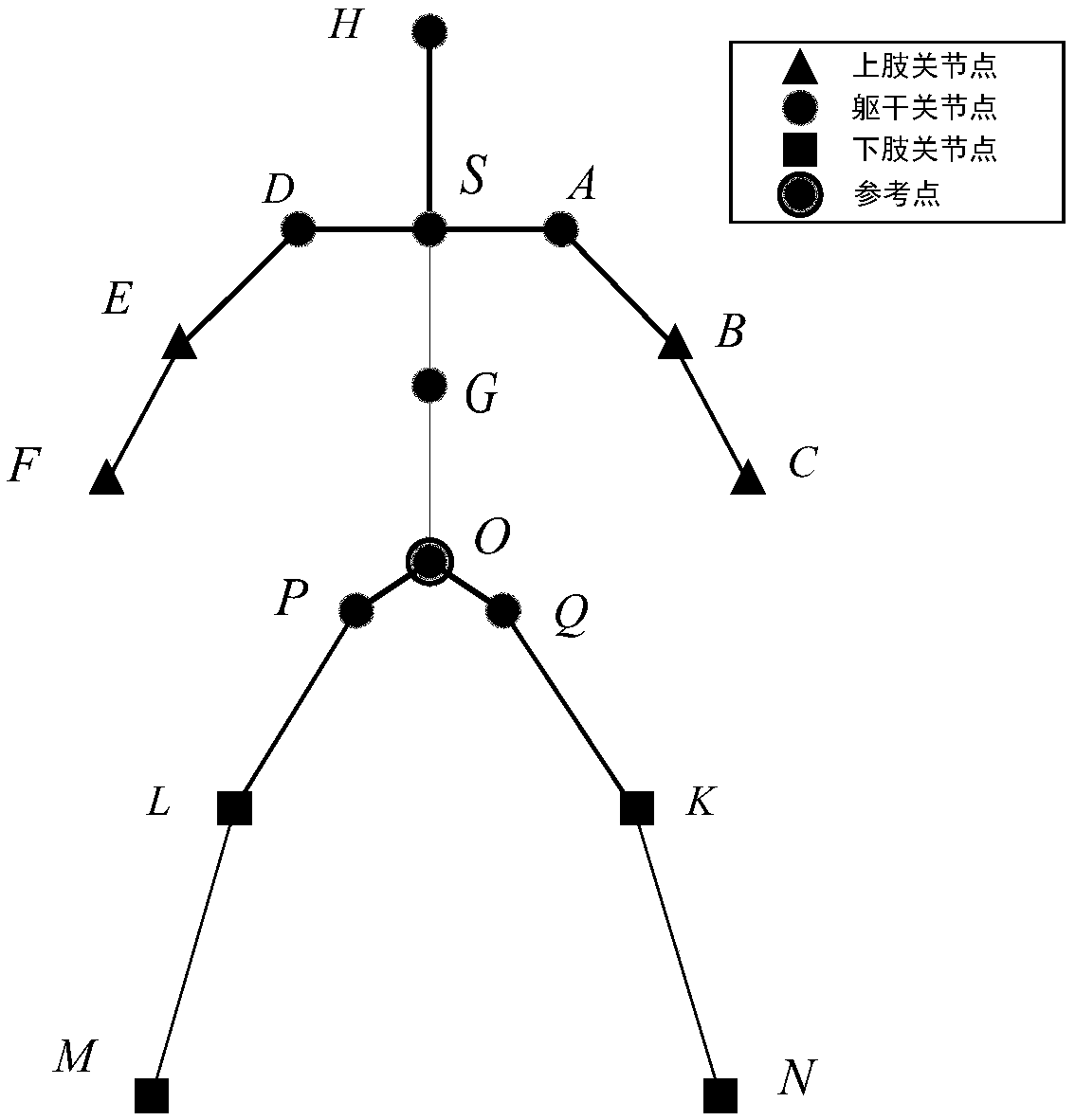

[0089] Step 1: Map the joint point data of the action sequence to be recognized and of the action template into three-dimensional space, store the data as point clouds, and preprocess it by translation, scaling, and rotation;
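The excerpt names the three preprocessing operations but does not say how they are parameterized. The following is only a minimal sketch under assumptions that are not from the source: the root joint is moved to the origin, the distance between two reference joints is scaled to 1, and the frame is rotated about the y axis so that the horizontal projection of the line between those reference joints lies along +x. The function name `normalize_frame` and the joint indices `root`, `left`, and `right` are hypothetical.

```python
import math


def normalize_frame(joints, root=0, left=1, right=2):
    """Translate, scale, and rotate one frame of (x, y, z) joint positions.

    All conventions here are illustrative assumptions, not the patent's:
    - translation: the joint at index `root` becomes the origin;
    - scaling: the distance between joints `left` and `right` becomes 1;
    - rotation: about the y axis, so that the xz projection of the
      left-to-right vector points along +x.
    """
    rx, ry, rz = joints[root]
    # Translation: express every joint relative to the root joint.
    moved = [(x - rx, y - ry, z - rz) for x, y, z in joints]

    # Scaling: divide by the distance between the two reference joints.
    span = math.dist(moved[left], moved[right])
    if span == 0:
        raise ValueError("reference joints coincide; cannot scale")
    scaled = [(x / span, y / span, z / span) for x, y, z in moved]

    # Rotation about y by -angle, where angle is the heading of the
    # left-to-right vector in the xz plane.
    dx = scaled[right][0] - scaled[left][0]
    dz = scaled[right][2] - scaled[left][2]
    angle = math.atan2(dz, dx)
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in scaled]


# Tiny three-joint example: the reference joints lie along the z axis.
frame = [(1.0, 1.0, 1.0), (1.0, 1.0, 0.0), (1.0, 1.0, 2.0)]
normalized = normalize_frame(frame)
```

Applying the same normalization to both the sequence to be recognized and the template would make the two comparable regardless of where the person stood or how tall they are, which is presumably the purpose of this step.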

[0090] The Kinect V2 sensor tracks skeleton data at about 30 frames per second, so the frame can be used as the time unit for the skeleton joints.

[0091] The joint data extracted by the Kinect V2 sensor contains 30 frames of data per second, and each frame contains the coordinate information of 25 joint points. To store, transmit, and read the joint information in an action sequence conveniently and quickly, the invention stores action sequences as point clouds (PCD file format).
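As an illustration of storing an action sequence in the PCD format, the sketch below serializes a list of frames to ASCII PCD text. The layout is an assumption, not something the excerpt specifies: the cloud is written as an organized cloud with one row per frame (`HEIGHT` = number of frames) and one column per joint (`WIDTH` = 25 for Kinect V2). The function name `action_to_pcd` is hypothetical.

```python
def action_to_pcd(frames):
    """Serialize frames (each a list of (x, y, z) joints) to ASCII PCD text.

    Assumed layout: an organized cloud with WIDTH = joints per frame and
    HEIGHT = number of frames, so row i holds the joints of frame i.
    """
    if not frames:
        raise ValueError("at least one frame is required")
    width = len(frames[0])   # joints per frame (25 for Kinect V2)
    height = len(frames)     # one row per frame
    if any(len(frame) != width for frame in frames):
        raise ValueError("every frame must have the same number of joints")

    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",
        "TYPE F F F",
        "COUNT 1 1 1",
        f"WIDTH {width}",
        f"HEIGHT {height}",
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {width * height}",
        "DATA ascii",
    ]
    # Points are written row by row: all joints of frame 0, then frame 1, ...
    body = [
        f"{x:.6f} {y:.6f} {z:.6f}"
        for frame in frames
        for x, y, z in frame
    ]
    return "\n".join(header + body) + "\n"


# Two synthetic frames of 25 joints each (placeholder coordinates).
demo_frames = [
    [(0.01 * j, 0.1 * t, 2.0) for j in range(25)]
    for t in range(2)
]
pcd_text = action_to_pcd(demo_frames)
```

With this layout, the row index of a point is its frame number, so a timestamp can be recovered as roughly the row index divided by 30, given the frame rate stated above.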

[0092] A point cloud is a collection of a large number of points. It is a data storage structure that has emerged in recent years in applications such as three-dimensional reconstruction, and it has many file formats. The present invention uses the PCD format three-dimensional ordered points defi...
