Human upper body motion recognition method based on key frames and random forest regression

A technique combining random forests and action recognition, applied in character and pattern recognition, computer parts, instruments, etc., that addresses the problem of low recognition accuracy and improves the rate of correct recognition.

Embodiment Construction

[0040] The present invention will be further described below in conjunction with the accompanying drawings and embodiments. The accompanying drawings are schematic and do not limit the present invention in any way.

[0041] Embodiments of the present invention will be described in detail.

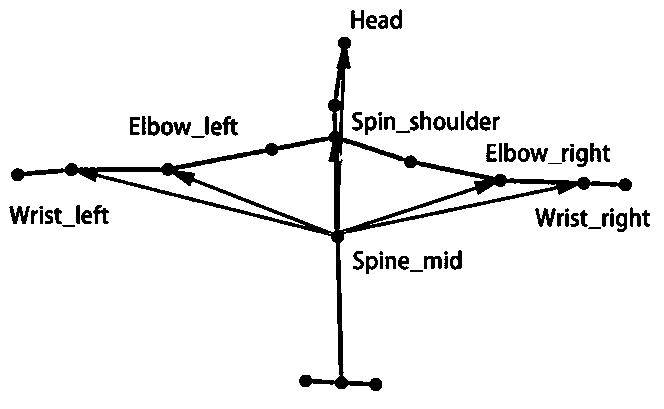

[0042] Step 1. Use OptiTrack and Kinect v2 to obtain the joint coordinates of the human upper body. The 12 FLEX:V100R2 cameras of the OptiTrack full-body motion capture system are arranged according to the standard OptiTrack layout for 12 cameras and human body markers. The positions of the marker points on the upper body are collected, and the coordinates of each joint point are calculated from them and converted to the skeleton coordinate system of Kinect v2. The OptiTrack sampling frequency is set to 90 FPS. Kinect v2 collects the joint coordinates of the upper body at the same time.

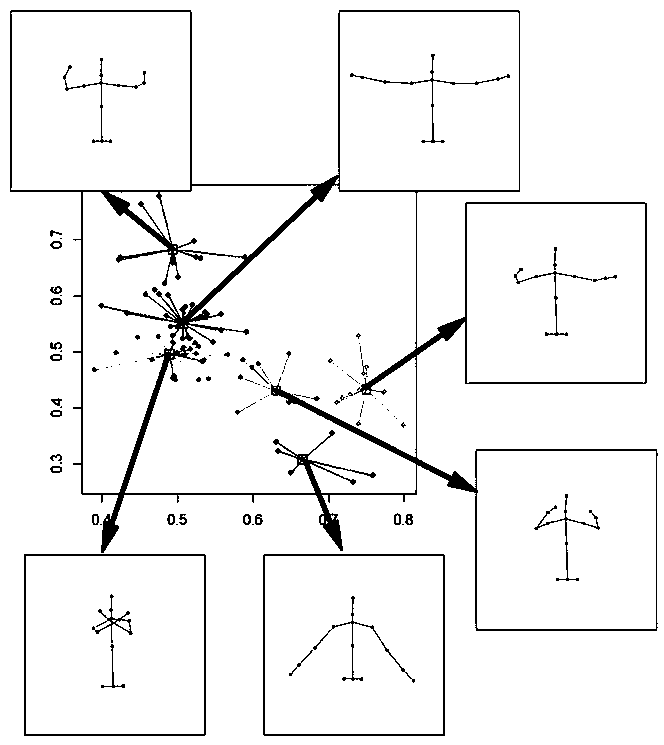

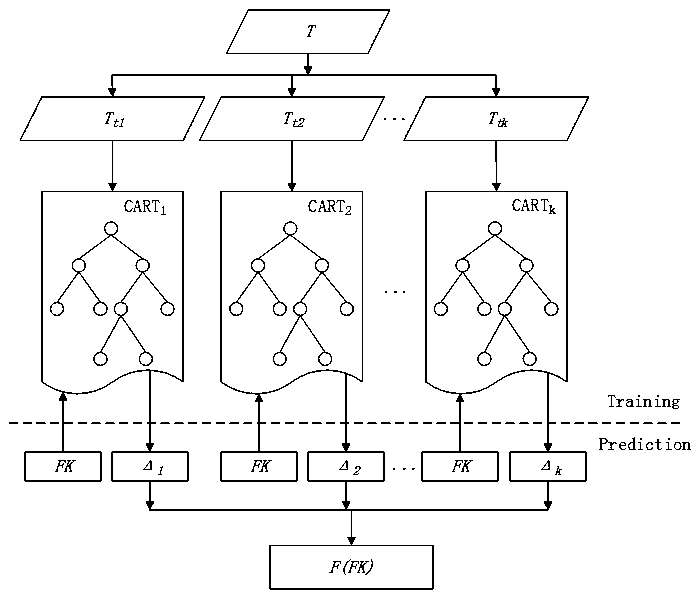
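The two operations implied by Step 1, converting OptiTrack joint coordinates into the Kinect v2 skeleton coordinate system and matching samples from the two devices in time, can be sketched as follows. This is a minimal illustration and not the method of the invention: it assumes the conversion is a rigid transform with a rotation matrix and translation vector already known from some calibration (the source does not say how they are obtained), and it assumes, purely for the example, a Kinect v2 stream at 30 FPS, which the source does not state. The function names `to_kinect_frame` and `pair_by_timestamp` and the `max_gap` parameter are hypothetical.

```python
from bisect import bisect_left


def to_kinect_frame(point, rotation, translation):
    """Map one 3-D point into the target frame as p' = R p + t.

    rotation is a 3x3 nested sequence and translation a length-3 sequence;
    both are assumed to come from a calibration step not described here.
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def pair_by_timestamp(opti_times, kinect_times, max_gap):
    """Pair each Kinect sample with the nearest OptiTrack sample in time.

    opti_times must be sorted ascending. Returns a list of
    (optitrack_index, kinect_index) tuples; Kinect samples with no
    OptiTrack sample within max_gap seconds are skipped.
    """
    pairs = []
    for k_idx, t in enumerate(kinect_times):
        pos = bisect_left(opti_times, t)
        # Only the neighbours on either side of the insertion point
        # can be the nearest timestamp.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(opti_times)]
        if not candidates:
            continue
        best = min(candidates, key=lambda i: abs(opti_times[i] - t))
        if abs(opti_times[best] - t) <= max_gap:
            pairs.append((best, k_idx))
    return pairs


# Illustrative values only: a 90-degree rotation about the z axis plus a
# shift along x, and nine OptiTrack frames at 90 FPS against three Kinect
# frames at an assumed 30 FPS.
rotation = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
translation = (1, 0, 0)
converted = to_kinect_frame((1, 0, 0), rotation, translation)

opti_times = [i / 90 for i in range(9)]
kinect_times = [i / 30 for i in range(3)]
pairs = pair_by_timestamp(opti_times, kinect_times, max_gap=1 / 180)
```

Under these assumed rates, every third OptiTrack frame lines up with one Kinect frame, so each Kinect joint reading gets a corresponding motion-capture reading in the same coordinate system.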

[0043] Step 2. Ext...
