A Human Action Recognition Method Based on Lie Group Features and Convolutional Neural Networks

The invention relates to convolutional neural networks and human action recognition, with applications in character and pattern recognition, instruments, and computing. It achieves high recognition accuracy, strong robustness, and an accurate and effective description of human actions.


Embodiment approach

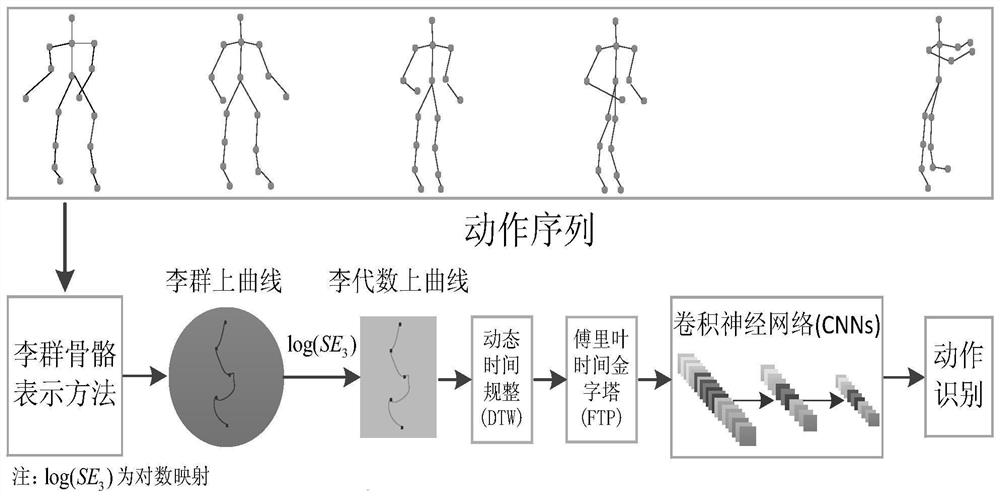

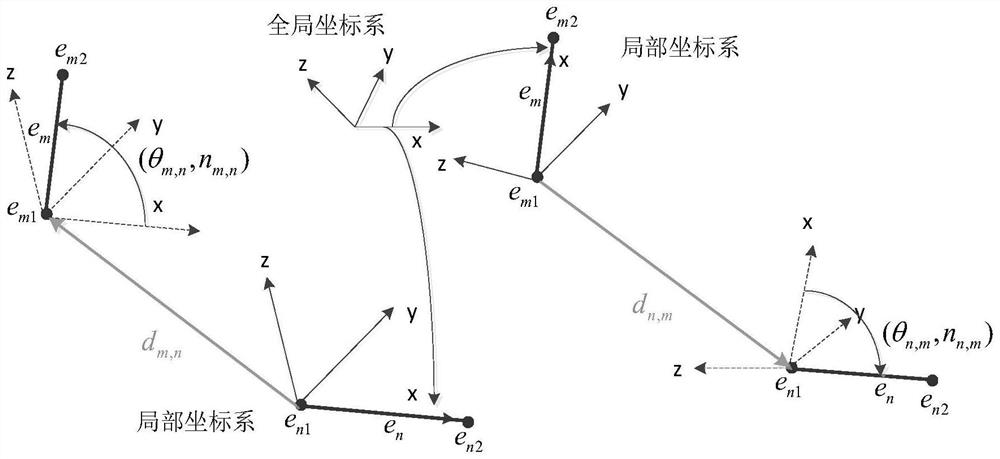

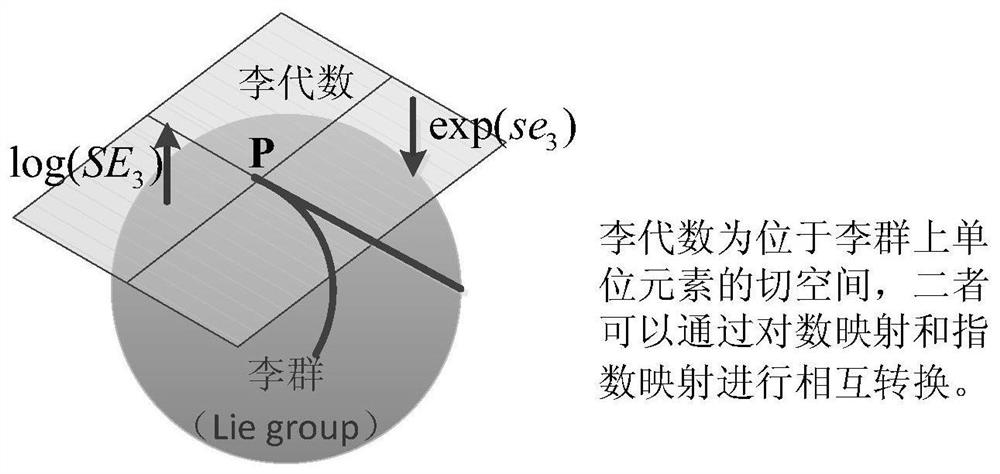

[0051] Figure 1 shows the overall framework of the human action recognition method based on Lie group features and convolutional neural networks according to the present invention. As shown in Figure 1, the method first obtains the skeleton information of a human motion sequence from the Kinect motion-sensing device produced by Microsoft. It then applies a Lie group skeleton representation, which uses rigid-body transformations between limbs (such as rotation and translation in three-dimensional space) to describe their relative three-dimensional geometric relationships, so that a human action is modeled as a series of curves on the Lie group. Next, using the correspondence between Lie groups and Lie algebras shown in Figure 3, the logarithmic map carries each curve in Lie group space to a curve in Lie algebra space. Finally, the Lie group feature and the convolutional neural network...
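The logarithmic mapping step described above can be illustrated with a minimal NumPy sketch. It assumes each relative limb transformation is a 4x4 homogeneous matrix in SE(3) (a rotation plus a translation) and uses the standard closed-form logarithm; the function names `hat`, `se3_exp`, `se3_log`, and `lie_group_curve_to_algebra` are hypothetical and do not come from the patent, which does not specify an implementation.

```python
import numpy as np


def hat(w):
    """Map a 3-vector to its 3x3 skew-symmetric matrix."""
    x, y, z = w
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])


def se3_exp(xi, eps=1e-8):
    """Exponential map se(3) -> SE(3); xi = (omega, u) is a 6-vector."""
    omega, u = np.asarray(xi[:3], float), np.asarray(xi[3:], float)
    theta = np.linalg.norm(omega)
    W = hat(omega)
    if theta < eps:
        # First-order approximation near the identity.
        R, V = np.eye(3) + W, np.eye(3) + 0.5 * W
    else:
        A = np.sin(theta) / theta
        B = (1.0 - np.cos(theta)) / theta**2
        C = (theta - np.sin(theta)) / theta**3
        R = np.eye(3) + A * W + B * (W @ W)   # Rodrigues' formula
        V = np.eye(3) + B * W + C * (W @ W)
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, V @ u
    return T


def se3_log(T, eps=1e-8):
    """Logarithmic map SE(3) -> se(3), returned as a 6-vector (omega, u).

    Assumption: the rotation angle is not close to pi, where this
    closed form becomes numerically unstable.
    """
    R, t = T[:3, :3], T[:3, 3]
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    # Vector form of the skew-symmetric part of R.
    axis = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    if theta < eps:
        return np.concatenate([axis / 2.0, t])
    omega = theta / (2.0 * np.sin(theta)) * axis
    W = hat(omega)
    coeff = (1.0 - theta * np.sin(theta) / (2.0 * (1.0 - np.cos(theta)))) / theta**2
    V_inv = np.eye(3) - 0.5 * W + coeff * (W @ W)
    return np.concatenate([omega, V_inv @ t])


def lie_group_curve_to_algebra(transforms):
    """Map a sequence of SE(3) matrices (one per frame) to an (n_frames, 6) array."""
    return np.stack([se3_log(T) for T in transforms])
```

For example, `lie_group_curve_to_algebra([se3_exp(s * xi) for s in (0.0, 0.5, 1.0)])` returns a 3x6 array whose rows are the Lie algebra coordinates of each frame. A fixed-size array of this kind is one plausible way to prepare input for a convolutional network, although the source text is truncated before it describes that step.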
