Video synthesis method, model training method and related device

一种视频合成、视频的技术,应用在彩色电视的零部件、电视系统的零部件、电视等方向,能够解决连贯性不好、视频连续性差等问题

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

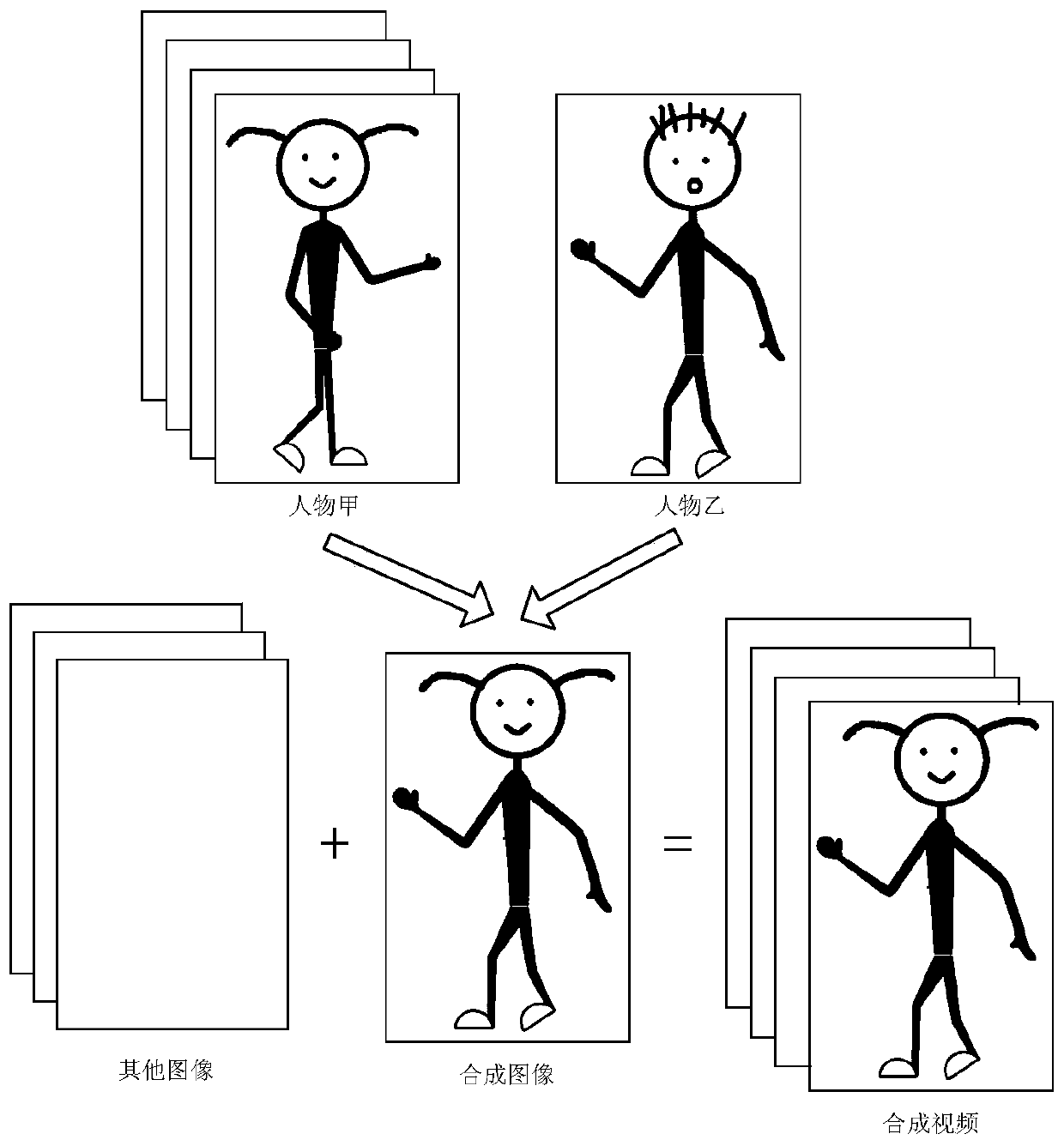

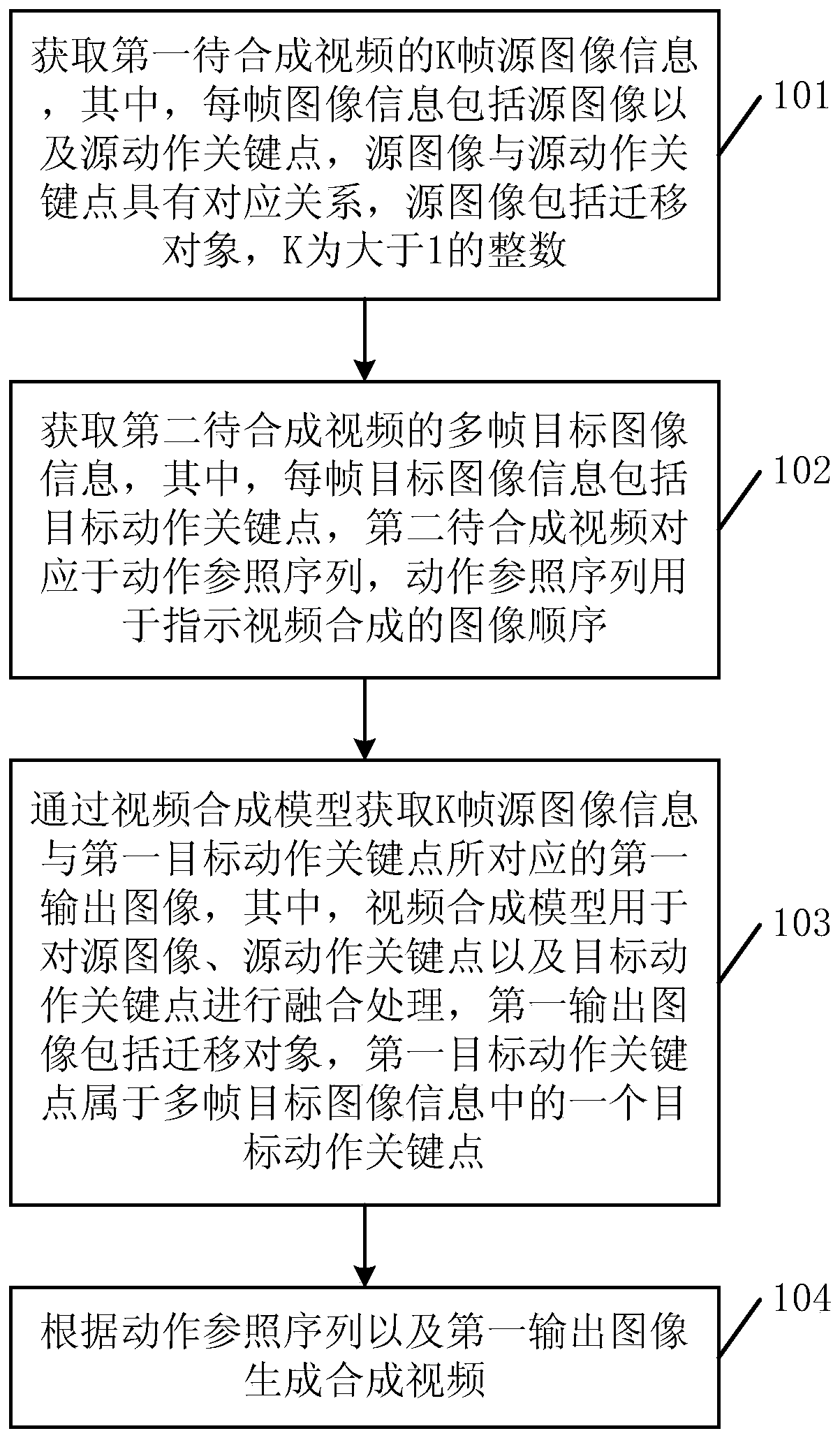

[0116] The embodiment of the present application provides a video synthesis method, a model training method, and related devices, which can use multi-frame source image information to generate an output image corresponding to an action sequence, thereby fully considering the information relevance between consecutive frames, The temporal continuity of the synthesized video is thereby enhanced.

[0117] The terms "first", "second", "third", "fourth", etc. (if any) in the specification and claims of the present application and the above drawings are used to distinguish similar objects, and not necessarily Used to describe a specific sequence or sequence. It is to be understood that the data so used are interchangeable under appropriate circumstances such that the embodiments of the application described herein, for example, can be practiced in sequences other than those illustrated or described herein. Furthermore, the terms "comprising" and "corresponding to" and any variations...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com