Caching method and device

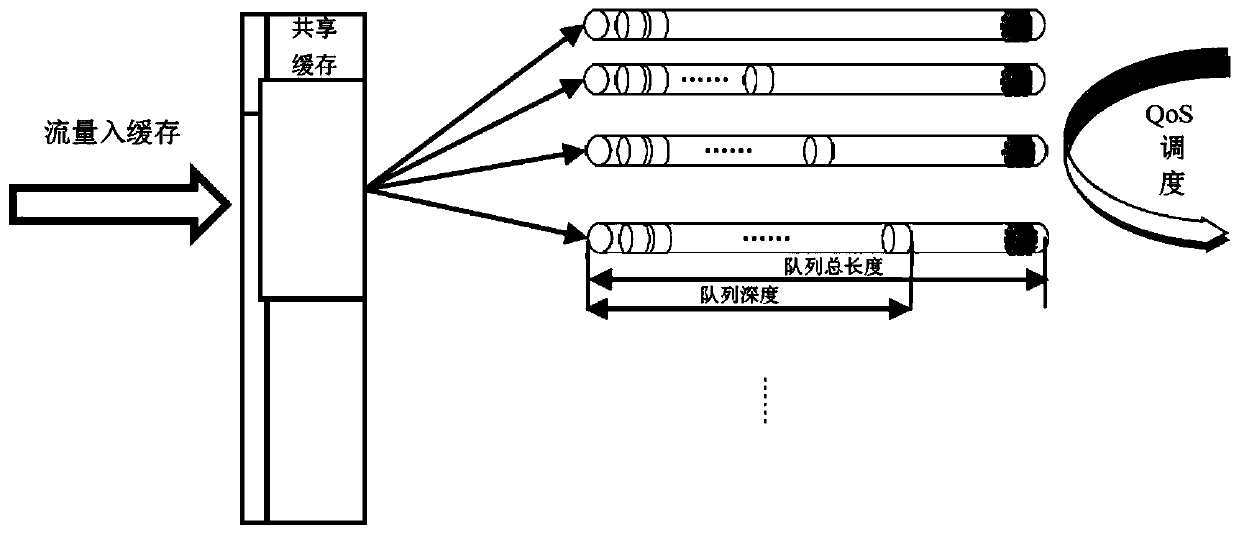

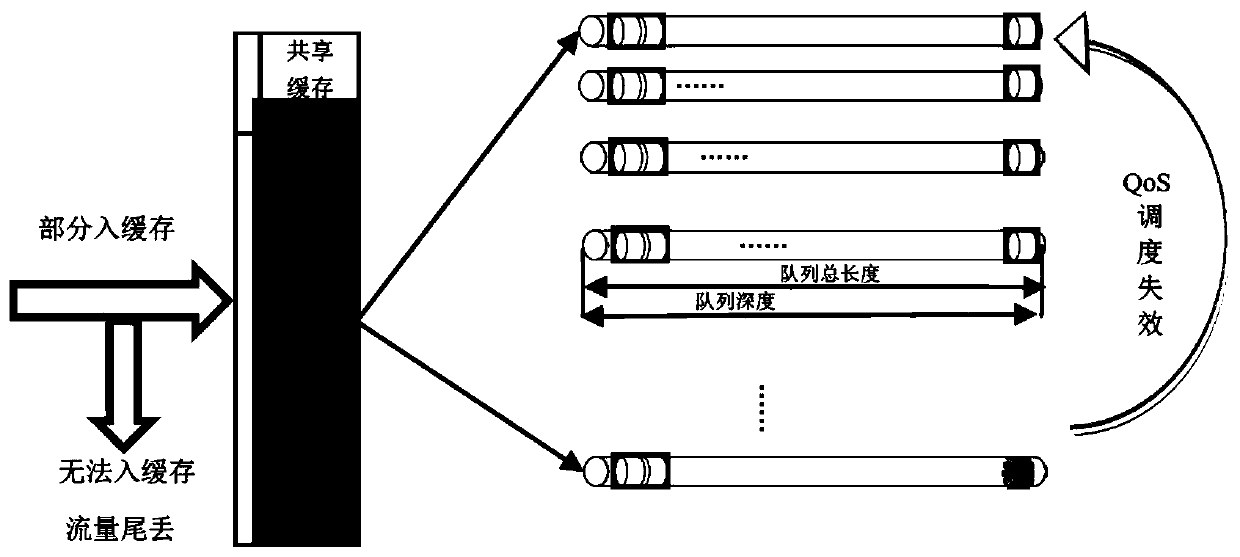

A caching and cache-area technique, applied in the field of network communication, that addresses problems such as queue congestion, wasted cache capacity, and QoS scheduling failures that cannot otherwise be resolved effectively, thereby ensuring QoS and a good user experience.

Examples

Embodiment 1

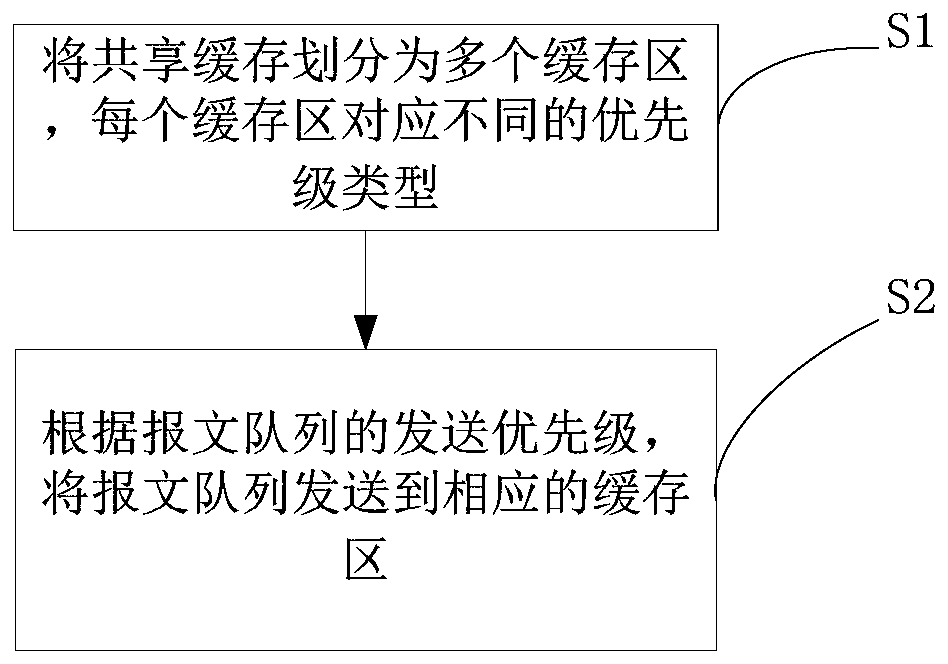

[0028] Specifically, as shown in image 3, the scheme of this embodiment of the present disclosure proceeds as follows:

[0029] A caching method, comprising the steps of:

[0030] S1. Divide the shared buffer into multiple buffer areas, each buffer area corresponding to a different priority type.

[0031] The multiple buffer areas are each used to buffer and forward the corresponding message queues, and there may be two or more of them. For example, with three buffer areas, the first buffers the no-packet-loss queue, the second buffers the high-priority queue, and the third buffers the low-priority queue. Taken together, the first, second, and third buffer areas account for no more than 100% of the shared cache.
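Step S1 can be illustrated with a minimal Python sketch. The function name `partition_shared_buffer`, the `BufferArea` type, the area names, and the 10/50/40 percentage split are all assumptions made for illustration; the disclosure only requires that the areas together take no more than 100% of the shared buffer.

```python
from dataclasses import dataclass


@dataclass
class BufferArea:
    name: str
    capacity: int  # bytes reserved for this area (hypothetical unit)


def partition_shared_buffer(total_bytes, shares):
    """Split a shared buffer into areas from percentage shares.

    `shares` maps an area name to its percentage of the shared buffer.
    The shares may sum to less than 100, but never more.
    """
    if any(pct < 0 for pct in shares.values()):
        raise ValueError("shares must be non-negative")
    if sum(shares.values()) > 100:
        raise ValueError("buffer areas may not exceed 100% of the shared buffer")
    return {
        name: BufferArea(name, total_bytes * pct // 100)
        for name, pct in shares.items()
    }


# Illustrative split only; the percentages are not taken from the disclosure.
areas = partition_shared_buffer(
    1_000_000,
    {"no_packet_loss": 10, "high_priority": 50, "low_priority": 40},
)
```

Using integer percentages keeps the capacity arithmetic exact; a real device would more likely express the split in hardware-specific cell or page units.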

[0032] S2. Send the message queue to a corresponding buffer area according to the sending priority of the message queue. The sending priority may include, for e...

Embodiment 2

[0040] Specifically, as shown in Figure 4, the scheme of this embodiment of the present disclosure proceeds as follows:

[0041] First, the shared buffer is divided into multiple buffer areas, each corresponding to a different priority type; then, according to the sending priority of the message queue, the message queue is sent to the corresponding buffer area. Based on queue priority and possible congestion status, the sending priority of a queue falls into one of three types: no-packet-loss queue, high-priority queue, and low-priority queue.
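The mapping from sending priority to buffer area described above might look like the following sketch. `SendPriority`, `PriorityBuffers`, and the queue names are hypothetical, and each buffer area is modeled as a plain FIFO rather than as real packet memory.

```python
from collections import deque
from enum import Enum


class SendPriority(Enum):
    # The three sending-priority types named in the text.
    NO_PACKET_LOSS = "no_packet_loss"
    HIGH = "high_priority"
    LOW = "low_priority"


class PriorityBuffers:
    """One buffer area per sending priority (illustrative model only)."""

    def __init__(self):
        self.areas = {priority: deque() for priority in SendPriority}

    def enqueue(self, queue_name, priority):
        # Place the message queue in the area that matches its priority.
        self.areas[priority].append(queue_name)


buffers = PriorityBuffers()
buffers.enqueue("protocol-q0", SendPriority.NO_PACKET_LOSS)
buffers.enqueue("voice-q1", SendPriority.HIGH)
buffers.enqueue("bulk-q2", SendPriority.LOW)
buffers.enqueue("video-q3", SendPriority.HIGH)
```

Because every queue lands in the area for its own priority type, congestion among low-priority queues cannot consume the space set aside for the other two types.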

[0042] No-packet-loss queue: in the following table, it is set as the queue aggregation group Group A. There are not many such queues; they carry the device's internal protocol packets. Their traffic is not large, so they generally do not participate in QoS scheduling. Once a message enters the queue, it is sent with absolute priority, so this part of the message will...

Embodiment 3

[0061] As shown in Figure 6, a cache device includes:

[0062] The partitioning module 401 is configured to divide the shared cache into multiple cache areas, each corresponding to a different priority type. The multiple buffer areas are each used to buffer and forward the corresponding message queues: the first buffer area buffers no-packet-loss message queues, the second buffers high-priority queues, and the third buffers low-priority queues. Taken together, the first, second, and third buffer areas account for no more than 100% of the shared cache.

[0063] The sending module 402 is configured to send the message queue to the corresponding buffer area according to the sending priority of the message queue. The sending priorities include no packet loss, high priority, and low priority.
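As a rough illustration of how the two modules could fit together, the sketch below models module 401 and module 402 as Python classes. The class names, method names, byte-based accounting, and example numbers are assumptions. The `occupancy_ratio` helper only computes the ratio of queued length to area capacity; what the device does with that ratio is not modeled here.

```python
class PartitioningModule:
    """Stands in for partitioning module 401 (hypothetical interface)."""

    def __init__(self, total_bytes, shares):
        if sum(shares.values()) > 100:
            raise ValueError("buffer areas may not exceed 100% of the shared cache")
        # Capacity of each buffer area, in bytes.
        self.capacity = {
            name: total_bytes * pct // 100 for name, pct in shares.items()
        }


class SendingModule:
    """Stands in for sending module 402 (hypothetical interface)."""

    def __init__(self, partitioning):
        self.partitioning = partitioning
        self.used = {name: 0 for name in partitioning.capacity}

    def send(self, priority, length):
        # Account for `length` bytes in the area matching the priority.
        if priority not in self.used:
            raise KeyError(f"unknown sending priority: {priority}")
        self.used[priority] += length
        return priority

    def occupancy_ratio(self, priority):
        # Queued length divided by the capacity of the corresponding area.
        return self.used[priority] / self.partitioning.capacity[priority]


partitioning = PartitioningModule(
    1000, {"no_packet_loss": 10, "high_priority": 50, "low_priority": 40}
)
sending = SendingModule(partitioning)
sending.send("high_priority", 125)
ratio = sending.occupancy_ratio("high_priority")
```

Keeping the partitioning and sending logic in separate classes mirrors the module split in the device description, so either half can be replaced without touching the other.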

[0064] If the ratio of the length of the message queue to the capacity of the corresponding buffer is lower t...
