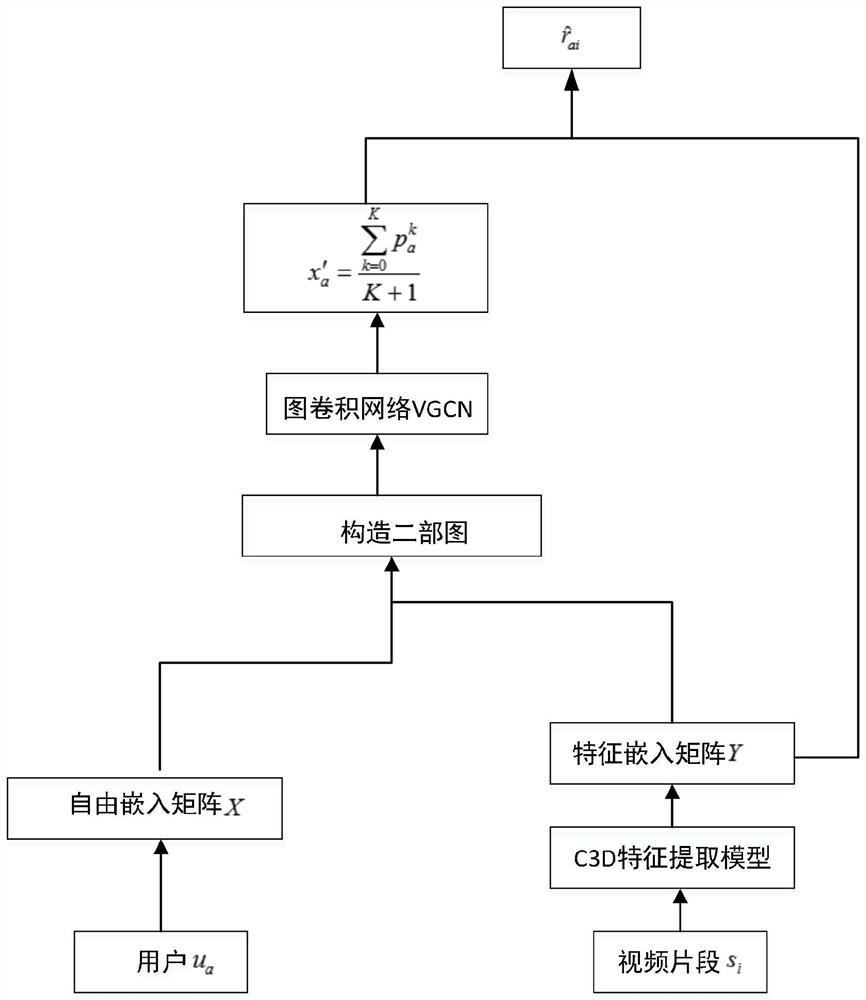

A Video Segment Recommendation Method Based on Graph Convolutional Network

A technology involving video clips and convolutional networks, applied in the fields of neural learning methods, biological neural network models, instruments, etc. It can solve problems such as cold-start items and has the effect of avoiding data sparsity.

AI Technical Summary

Problems solved by technology

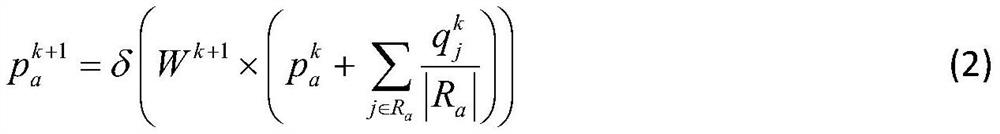

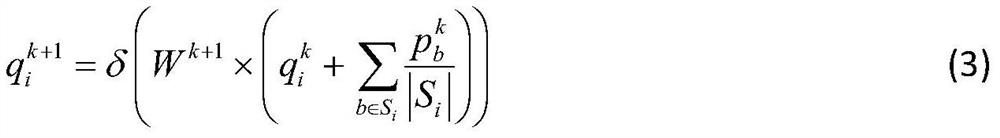

Method used

Examples

Embodiment

[0070] To verify the effectiveness of the method of the present invention, a large number of video clips were crawled from the video clip sharing platform Gifs.com to form a dataset. Each clip is represented by a quadruple $\langle u, v, t_s, t_e \rangle$, where $u$ is the user ID, $v$ is the ID of the source video of the clip, $t_s$ is the start time of the clip, and $t_e$ is its end time. The original dataset contains 14,000 users, 119,938 videos, and 225,015 clip annotations. In this experiment, all clips are processed into a fixed duration of 5 s, and a threshold $\theta$ is set: when the overlap between a clip the user actually interacted with and a clip in the dataset exceeds $\theta$, the user is considered to have given positive feedback on that clip. After this segmentation, every clip is exactly 5 s long, which yields the final dataset $D$.
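The preprocessing in [0070] (fixed 5 s segments plus an overlap threshold $\theta$ for positive feedback) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the source does not say how segments are aligned or how "overlap" is measured, so here the video is assumed to be tiled into back-to-back 5 s segments from $t = 0$, a trailing partial segment is dropped, and overlap is taken as the fraction of a 5 s segment covered by the user's interval $[t_s, t_e]$. The names `Annotation`, `positive_segments`, and `build_dataset` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple

SEGMENT_LEN = 5.0  # fixed segment duration in seconds, as stated in [0070]


@dataclass(frozen=True)
class Annotation:
    """One raw quadruple <u, v, t_s, t_e>."""
    user: str    # u: user id
    video: str   # v: source video id
    t_s: float   # start time of the annotated clip (seconds)
    t_e: float   # end time of the annotated clip (seconds)


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the intersection of two time intervals; 0.0 if they are disjoint."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def positive_segments(ann: Annotation, video_duration: float, theta: float) -> List[Tuple[str, str, int]]:
    """Label the fixed 5 s segments of ann.video that count as positive feedback.

    ASSUMPTIONS (not specified in the source): the video is tiled into
    back-to-back 5 s segments starting at t = 0, a trailing partial segment
    is dropped, and "overlap" is the fraction of the 5 s segment covered by
    the user's interval [t_s, t_e].
    """
    positives = []
    for k in range(int(video_duration // SEGMENT_LEN)):
        seg_start = k * SEGMENT_LEN
        seg_end = seg_start + SEGMENT_LEN
        ratio = overlap(ann.t_s, ann.t_e, seg_start, seg_end) / SEGMENT_LEN
        if ratio > theta:  # strictly "exceeds theta", per the text
            positives.append((ann.user, ann.video, k))
    return positives


def build_dataset(annotations: Iterable[Annotation], durations: Dict[str, float],
                  theta: float) -> Set[Tuple[str, str, int]]:
    """Collect all (user, video, segment index) positives: a hypothetical stand-in for dataset D."""
    dataset: Set[Tuple[str, str, int]] = set()
    for ann in annotations:
        dataset.update(positive_segments(ann, durations[ann.video], theta))
    return dataset
```

For example, an annotation `Annotation("u1", "v1", 3.0, 12.0)` on a 20 s video with `theta = 0.5` marks only segment 1 (the interval [5, 10), fully covered) as positive; segments 0 and 2 are each only 40% covered. An IoU-style overlap would be an equally plausible reading of the text and would change which segments pass the threshold.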

[0071] The present invention uses five evaluation metrics, including MAP (Mean Average Precision), NMSD (Normalized M...
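Of the metrics listed in [0071], MAP has a standard definition that can be sketched. The following uses the common formulation: for each user, average precision@$k$ over the ranks $k$ at which a relevant clip appears, normalized by the number of relevant clips, then average over users. The patent's exact variant is not visible here (some works normalize by the number of hits or by $\min(K, |\text{relevant}|)$ instead), and the function names are hypothetical. The remaining metrics, including NMSD, are truncated in the source and are not sketched.

```python
from typing import Dict, List, Sequence, Set


def average_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """AP of one ranked list: sum of precision@k at each relevant hit, divided by |relevant|."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for k, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / k  # precision at cut-off k
    return precision_sum / len(relevant)


def mean_average_precision(rankings: Dict[str, List[str]],
                           ground_truth: Dict[str, Set[str]]) -> float:
    """MAP: mean of per-user AP over every user that has a ranking."""
    if not rankings:
        return 0.0
    total = sum(average_precision(ranked, ground_truth.get(user, set()))
                for user, ranked in rankings.items())
    return total / len(rankings)
```

For instance, a user whose relevant clips `{"a", "c"}` appear at ranks 1 and 3 of `["a", "b", "c", "d"]` gets AP = (1/1 + 2/3) / 2 = 5/6.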
