Method for controlling vehicle systems in motor vehicles

A vehicle system control technology, applied in the field of motor vehicles, that addresses problems such as delayed reaction time

Embodiment Construction

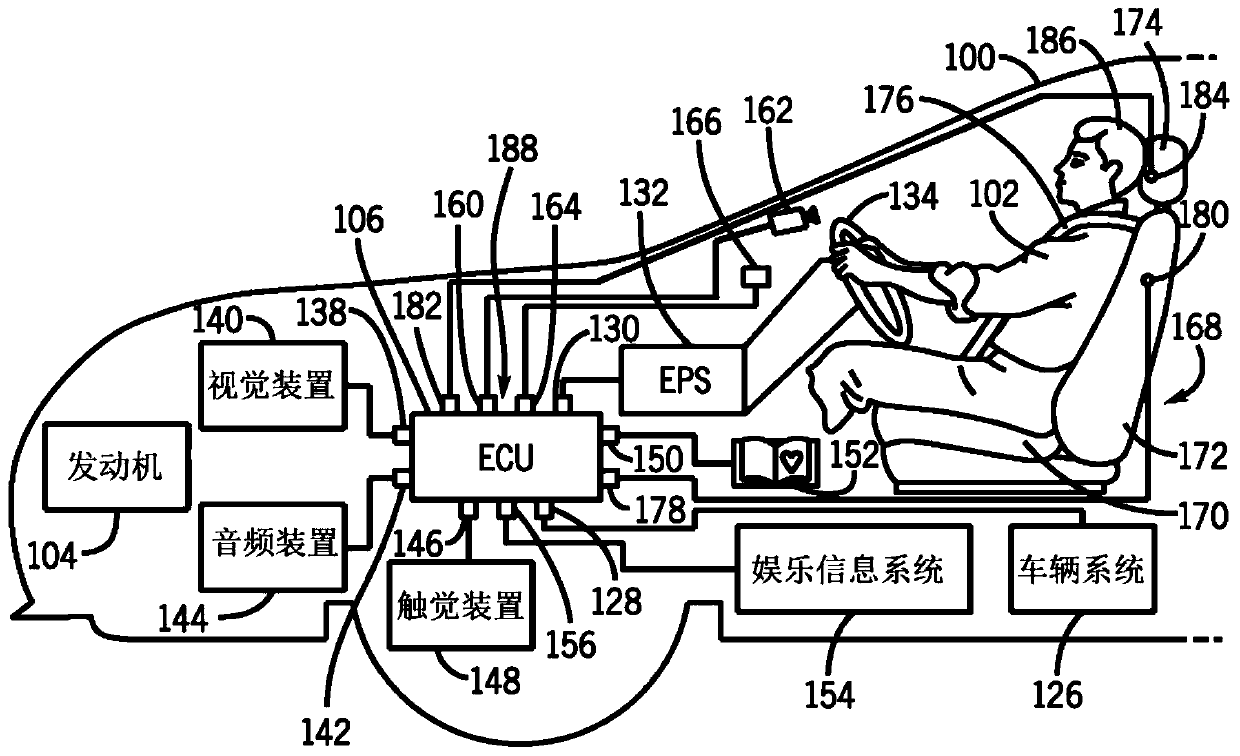

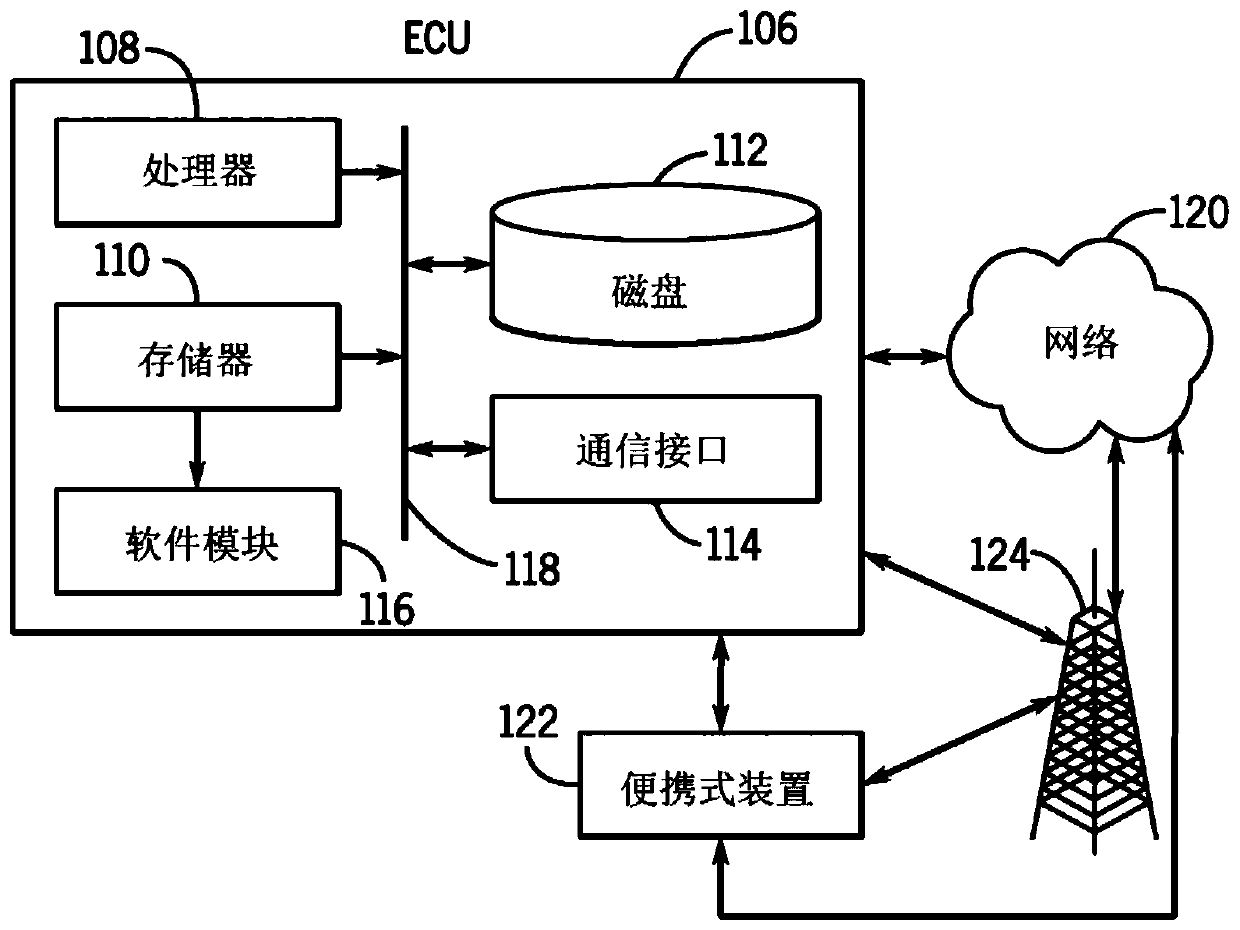

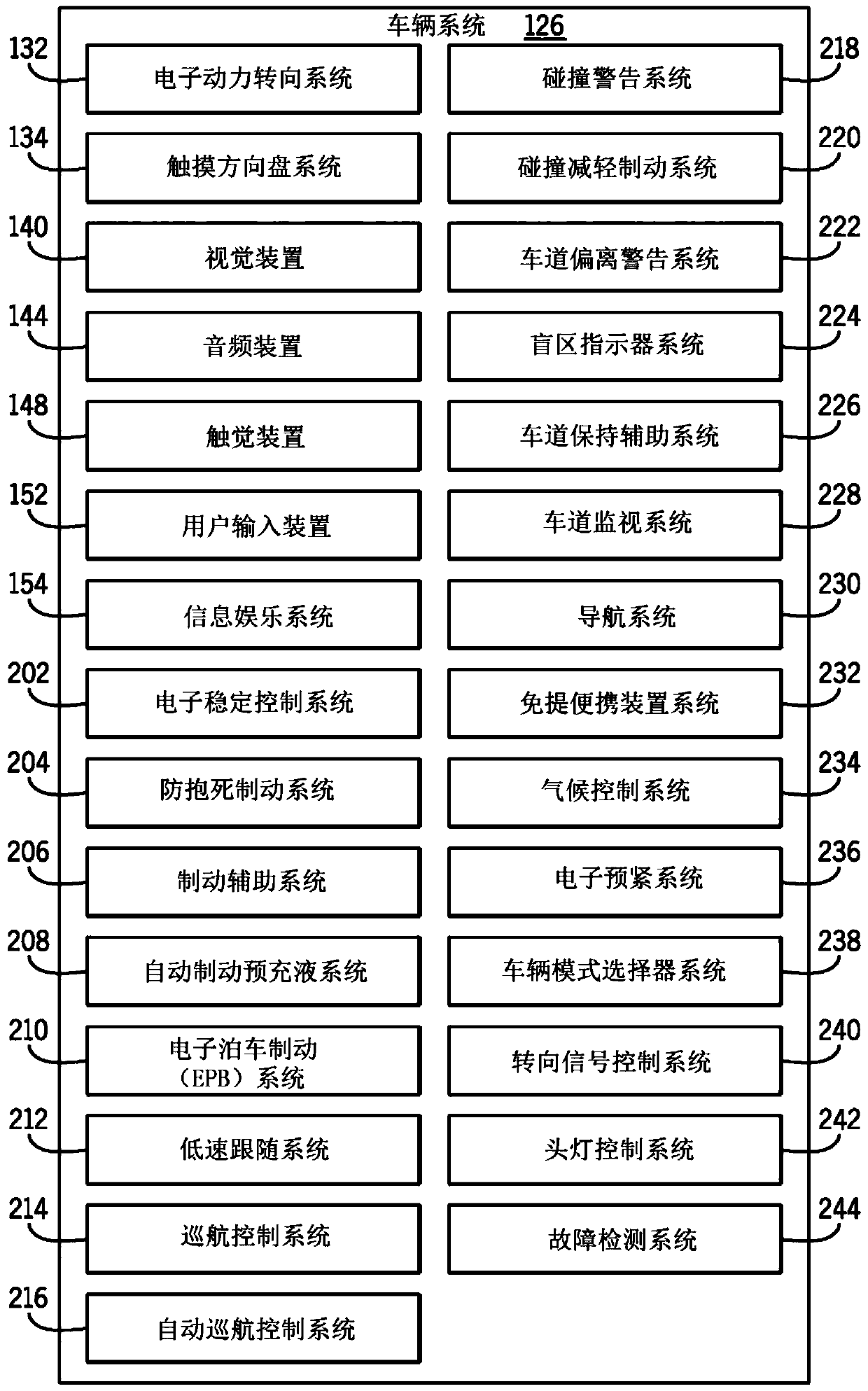

[0198] The following detailed description is intended to be exemplary, and those of ordinary skill in the art will recognize that other embodiments and implementations are possible within the scope of the embodiments described herein. Example embodiments are first described generally with respect to a system overview, including components of a motor vehicle, example vehicle systems and sensors, and monitoring systems and sensors. Following the general description, systems and methods for assessing driver states and operational responses are presented, including discussions of determining driver states, determining one or more driver states, determining combined driver states, and validating driver states. Exemplary implementations for detecting driver states are also described, as are exemplary operational responses of vehicle systems based on driver states and/or combined driver states. Additionally, embodiments are discussed that relate to various levels of operational response fr...
